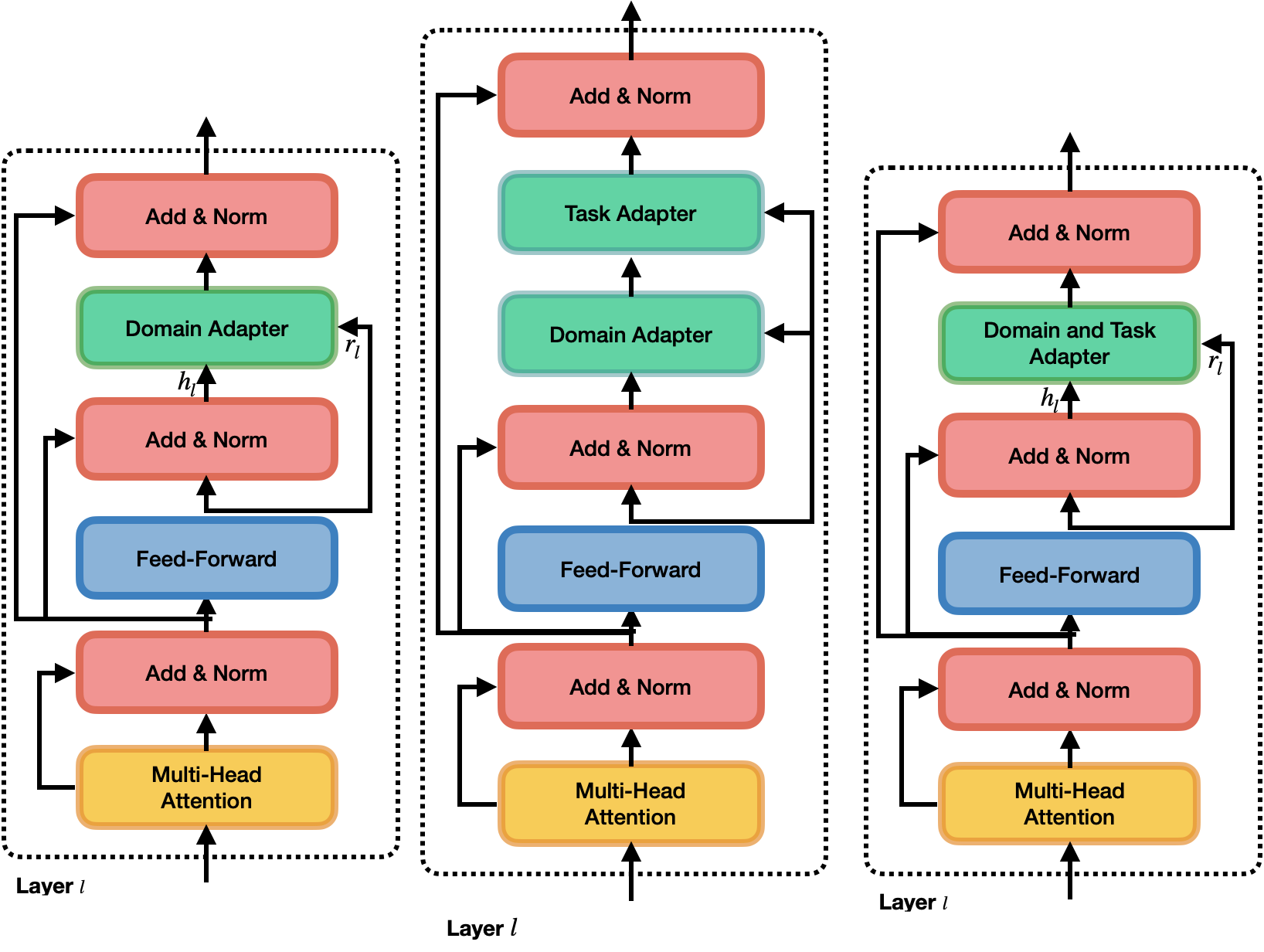

Code for our paper at EACL'23: UDAPTER-Efficient Domain Adaptation Using Adapters. Domadapter trains adapters for Domain Adaptation in NLP. The idea is to use principles

of unsupervised domain adaptation and parameter efficient fine-tuning to make domain

adaptation more efficient.

Coming soon

- Python >= 3.8

- Poetry for dependency and environment management

- direnv (Optional) - For automatically exporting environment variables

We use environment variables to store certain paths

- Pretrained Transformer Models (PT_MODEL_CACHE_DIR)

- Datasets (DATASET_CACHE_DIR)

- Experiments (OUTPUT_DIR)

- Results (OUTPUT_DIR)

Change the following variables in the .envrc file.

export DATASET_CACHE_DIR=""export PT_MODELS_CACHE_DIR=""export OUTPUT_DIR=""

Run source .envrc

- Run

poetry installto install the dependencies - Use

poetry shellto create a virtual environment

Commit your

poetry.lockfile if you install any new library.

Note: We have tested this on a linux machine. If you are using Macbook M1 then you might encounter in to some errors installing scipy, sklearn etc.

- Run

domadapter download mnlito download themnlidataset - Run

domadapter download sato download theamazondataset.

Using Google Colab?

!pip install pytorch-lightning==1.4.2 datasets transformers pandas click wandb numpy rich

See the scripts folder to train models.

For example to train the Joint-DT-:electric_plug: on the MNLI dataset run

bash train_joint_da_ta_mnli.sh

.

├── commands (domadapter terminal commands)

├── datamodules (Pytorch Lightning DataModules to load the SA and MNLI Dataset)

├── divergences (Different Divergence measures)

├── models (All the models listed in the paper)

├── orchestration (Instantiates the model, dataset, trainer and runs the experiments)

├── scripts (Bash scripts to run experiments)

├── utils (Useful utilities)

└── console.py (Python `Rich` console to pretty print everything)

@misc{https://doi.org/10.48550/arxiv.2302.03194,

doi = {10.48550/ARXIV.2302.03194},

url = {https://arxiv.org/abs/2302.03194},

author = {Malik, Bhavitvya and Kashyap, Abhinav Ramesh and Kan, Min-Yen and Poria, Soujanya},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {UDApter -- Efficient Domain Adaptation Using Adapters},

publisher = {arXiv},

year = {2023},

copyright = {Creative Commons Attribution 4.0 International

}