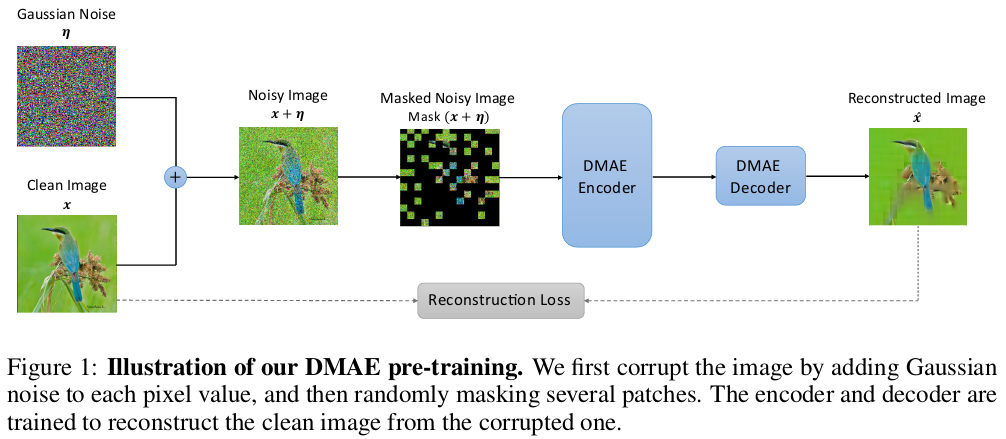

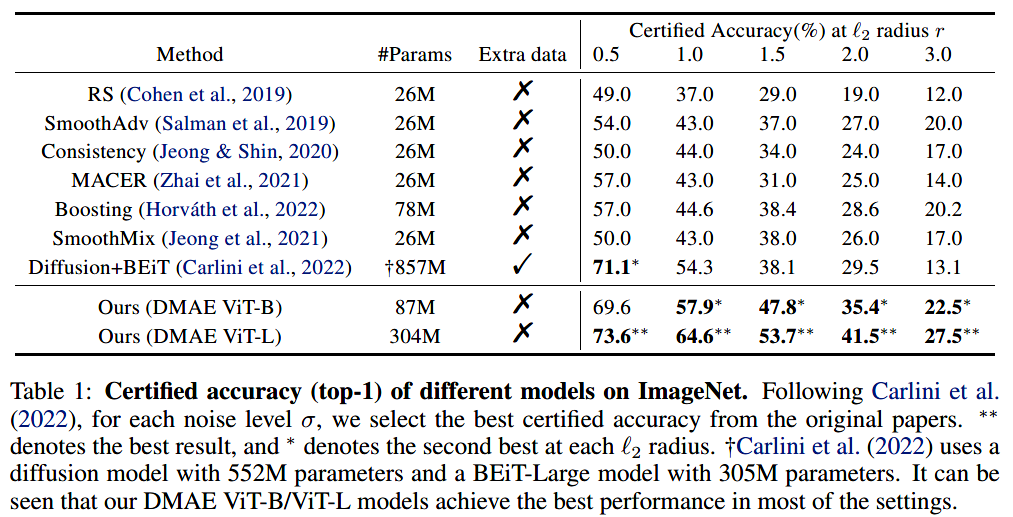

This repository is the official implementation of “Denoising Masked Autoencoders Help Robust Classification”. It builds on the official PyTorch implementation of MAE.

    @Article{dmae2022,
      author  = {Quanlin Wu and Hang Ye and Yuntian Gu and Huishuai Zhang and Liwei Wang and Di He},
      journal = {arXiv:2210.06983},
      title   = {Denoising Masked Autoencoders Are Certifiable Robust Vision Learners},
      year    = {2022},
    }

Pre-training instructions are in PRETRAIN.md.

The following table provides the pre-trained checkpoints used in the paper:

| Model | Size | Epochs | Link |
|---|---|---|---|
| DMAE-Base | 427MB | 1100 | download |
| DMAE-Large | 1.23GB | 1600 | download |
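Once a checkpoint is downloaded, it can help to inspect its contents before fine-tuning. The snippet below is a minimal sketch, not part of this repository. It assumes the weights are stored either under a `model` key (the layout used by MAE-style checkpoints) or as the top-level mapping. The helper names `extract_state_dict` and `load_weights` and the file name in the usage comment are hypothetical.

```python
from collections.abc import Mapping
from typing import Any


def extract_state_dict(checkpoint: Any, key: str = "model") -> Mapping:
    """Return the weights mapping from an already-loaded checkpoint object.

    Assumption: weights live under `key` (MAE-style layout) or the
    checkpoint is itself the weights mapping.
    """
    if not isinstance(checkpoint, Mapping):
        raise TypeError(f"expected a mapping, got {type(checkpoint).__name__}")
    nested = checkpoint.get(key)
    if isinstance(nested, Mapping):
        return nested
    return checkpoint


def load_weights(path: str) -> Mapping:
    """Load a checkpoint file onto the CPU and return its weights mapping."""
    import torch  # imported lazily so the helper above works without PyTorch

    checkpoint = torch.load(path, map_location="cpu")
    return extract_state_dict(checkpoint)


# Usage (hypothetical file name):
#   weights = load_weights("dmae_base.pth")
#   for name, tensor in list(weights.items())[:5]:
#       print(name, tuple(tensor.shape))
```

The actual loading logic used for fine-tuning is described in FINETUNE.md. This sketch is only meant for a quick look at parameter names and shapes.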

Fine-tuning and evaluation instructions are in FINETUNE.md.

This project is released under the CC-BY-NC 4.0 license. See LICENSE for details.