This repository contains starter code for the 2022 challenge, details of the tasks, and training and evaluation setups. For an overview of the SoundSpaces Challenge, visit soundspaces.org/challenge.

This year, we are hosting a challenge on the audio-visual navigation task [1], in which an agent must find a sound-making object in an unmapped 3D environment using visual and auditory perception.

In AudioGoal navigation (AudioNav), an agent is spawned at a random starting position and orientation in an unseen environment. A sound-emitting object is also randomly spawned at a location in the same environment. At each time step, the agent receives a one-second audio input in the form of a waveform and needs to navigate to the target location. No ground-truth map is available, and the agent must use only its sensory input (audio and RGB-D) to navigate.

The challenge will be conducted on the SoundSpaces Dataset, which is based on AI Habitat, Matterport3D, and Replica. For this challenge, we use the Matterport3D dataset due to the diversity and scale of its environments. The challenge focuses on evaluating agents' ability to generalize to unheard sounds and unseen environments. The training and validation splits are the same as those used in the Unheard Sound experiments reported in the SoundSpaces paper. They can be downloaded, together with the minival split, from the SoundSpaces dataset page.

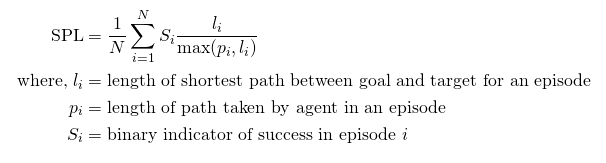

After the agent calls the STOP action, it is evaluated using the 'Success weighted by Path Length' (SPL) metric [2].

An episode is deemed successful if, on calling the STOP action, the agent is within 0.36 m (2x the agent radius) of the goal position.
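The success criterion and the SPL metric can be sketched in a few lines. This is a minimal illustration, not the challenge's evaluation code: the function names are hypothetical, the strict `<` comparison at the radius boundary is an assumption, and the zero-length-path convention is an assumption as well. The per-episode formula S * l / max(p, l) follows the SPL definition in [2], where l is the shortest-path length from start to goal and p is the length of the path the agent actually took.

```python
SUCCESS_RADIUS_M = 0.36  # 2x the agent radius, as stated above


def is_success(called_stop, distance_to_goal):
    """Succeed only if STOP was called inside the success radius.

    Whether the boundary itself counts is an assumption (strict '<' here).
    """
    return called_stop and distance_to_goal < SUCCESS_RADIUS_M


def episode_spl(success, shortest_path, agent_path):
    """Per-episode SPL: S * l / max(p, l)."""
    if not success:
        return 0.0
    denominator = max(agent_path, shortest_path)
    # Assumption: an episode that starts on the goal (both lengths zero)
    # scores 1.0 rather than raising a division error.
    return shortest_path / denominator if denominator > 0 else 1.0


def mean_spl(episodes):
    """Average SPL over (success, shortest_path, agent_path) tuples."""
    scores = [episode_spl(s, l, p) for s, l, p in episodes]
    return sum(scores) / len(scores)
```

For example, a successful episode whose agent walked 10 m when the shortest path was 5 m scores 0.5, while any failed episode scores 0 regardless of path length.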

Participate in the contest by registering on the EvalAI challenge page and creating a team. Participants upload JSON files containing the evaluation metric values for the challenge and the trajectories executed by their model. The trajectories will be used to validate the submitted performance values. Suspicious submissions will be reviewed and, if necessary, the participating team will be disqualified. Instructions for evaluation and online submission are provided below.

- Clone the challenge repository:

      git clone https://github.com/changanvr/soundspaces-challenge.git
      cd soundspaces-challenge

- Implement your own agent or try one of ours. We provide an agent in `agent.py` that takes random actions:

      import habitat
      import numpy
      import soundspaces

      # Abridged excerpt: `config` and `self._POSSIBLE_ACTIONS` are assumed
      # to be set up elsewhere in agent.py.
      class RandomAgent(habitat.Agent):
          def reset(self):
              pass

          def act(self, observations):
              # Pick a uniformly random action index.
              return numpy.random.choice(len(self._POSSIBLE_ACTIONS))

      def main():
          agent = RandomAgent(task_config=config)
          challenge = soundspaces.Challenge()
          challenge.submit(agent)

- Follow the instructions for downloading the SoundSpaces dataset and place all data under the `data/` folder.

- Evaluate the random agent locally:

      env CHALLENGE_CONFIG_FILE="configs/challenge_random.local.yaml" python agent.py

  This calls `eval.py`, which dumps a JSON file that contains a Python dictionary of the following type:

      eval_dict = {
          "ACTIONS": {
              f"{scene_id_1}_{episode_id_1}": [action_1_1, ..., 0],
              f"{scene_id_2}_{episode_id_2}": [action_2_1, ..., 0],
          },
          "SPL": average_spl,
          "SOFT_SPL": average_softspl,
          "DISTANCE_TO_GOAL": average_distance_to_goal,
          "SUCCESS": average_success,
      }

  Make sure that the JSON file dumped when evaluating your agent has exactly the same structure. The easiest way to ensure this is to leave `eval.py` unmodified.
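Before uploading, it can help to check a dumped file against the structure described above. The following is a minimal sketch and not part of the challenge repository: `check_eval_dict` and `check_eval_file` are hypothetical helpers, and treating the trailing `0` in each action list as the STOP action is an assumption based on the example dictionary.

```python
import json

REQUIRED_METRICS = ("SPL", "SOFT_SPL", "DISTANCE_TO_GOAL", "SUCCESS")
STOP_ACTION = 0  # assumption: the trailing 0 in the example is STOP


def check_eval_dict(eval_dict):
    """Return a list of problems; an empty list means the structure looks right."""
    problems = []

    actions = eval_dict.get("ACTIONS")
    if not isinstance(actions, dict):
        problems.append("ACTIONS: expected a dictionary of action lists")
    else:
        for key, sequence in actions.items():
            if not isinstance(sequence, list) or not sequence:
                problems.append(f"{key}: expected a non-empty list of actions")
            elif sequence[-1] != STOP_ACTION:
                problems.append(f"{key}: trajectory does not end with {STOP_ACTION}")

    for name in REQUIRED_METRICS:
        value = eval_dict.get(name)
        # bool is a subclass of int, so it is excluded explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            problems.append(f"{name}: expected a number, got {type(value).__name__}")

    return problems


def check_eval_file(path):
    """Load a dumped evaluation JSON file and check its structure."""
    with open(path) as f:
        return check_eval_dict(json.load(f))
```

Calling `check_eval_file` on the file written by `eval.py` should return an empty list; any returned message points at a key that deviates from the expected layout.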

Follow the instructions in the Submit tab of the EvalAI challenge page to upload your evaluation JSON file.

Valid challenge phases are soundspaces22-audionav-{minival, test-std}.

The challenge consists of the following phases:

- Minival phase: This split is the same as the one used in `./test_locally_audionav_rgbd.sh`. The purpose of this phase/split is sanity checking: to confirm that your online submission to EvalAI does not run into any issues during evaluation. Each team is allowed a maximum of 30 submissions per day for this phase.
- Test Standard phase: The purpose of this phase/split is to serve as the public leaderboard establishing the state of the art; this is what should be used to report results in papers. Each team is allowed a maximum of 10 submissions per day for this phase. As a reminder, the submitted trajectories will be used to validate the submitted performance values. Suspicious submissions will be reviewed and, if necessary, the participating team will be disqualified.
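The phase names and daily limits listed above can be kept in a small lookup table, for instance to catch a mistyped phase before submitting. This is only a sketch: `phase_name` is a hypothetical helper, and the names are expanded from the `soundspaces22-audionav-{minival, test-std}` pattern given earlier.

```python
PHASE_PREFIX = "soundspaces22-audionav"

# Maximum submissions per team per day, as listed for each phase above.
DAILY_SUBMISSION_LIMITS = {"minival": 30, "test-std": 10}


def phase_name(split):
    """Return the full phase name for a split, rejecting unknown splits."""
    if split not in DAILY_SUBMISSION_LIMITS:
        valid = ", ".join(sorted(DAILY_SUBMISSION_LIMITS))
        raise ValueError(f"unknown split {split!r}; expected one of: {valid}")
    return f"{PHASE_PREFIX}-{split}"
```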

Note: If you face any issues or have questions, you can email the organizers or open an issue on this repository.

We include both the configs and Python scripts for av-nav and av-wan. Note that the MapNav environment used by av-wan is baked into the environment container and cannot be changed. If you want to modify mapping or planning, we suggest rewriting the planning for-loop in the agent code.

We thank the Habitat team for the challenge template.

[1] SoundSpaces: Audio-Visual Navigation in 3D Environments. Changan Chen*, Unnat Jain*, Carl Schissler, Sebastia Vicenc Amengual Gari, Ziad Al-Halah, Vamsi Krishna Ithapu, Philip Robinson, Kristen Grauman. ECCV, 2020.

[2] On evaluation of embodied navigation agents. Peter Anderson, Angel Chang, Devendra Singh Chaplot, Alexey Dosovitskiy, Saurabh Gupta, Vladlen Koltun, Jana Kosecka, Jitendra Malik, Roozbeh Mottaghi, Manolis Savva, Amir R. Zamir. arXiv:1807.06757, 2018.

This repo is MIT licensed, as found in the LICENSE file.