This repository provides the official PyTorch implementation of C3-SL.

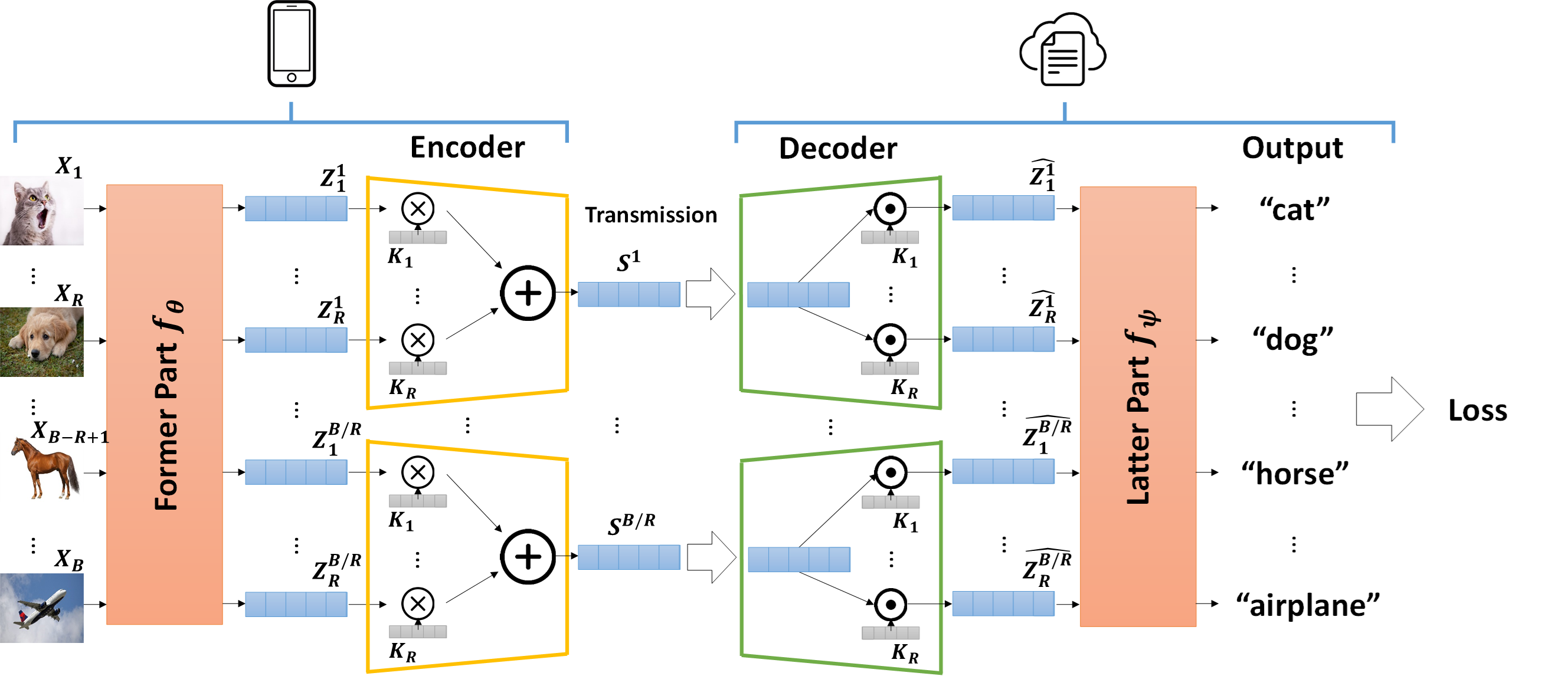

- Batch-Wise Compression (A new Compression Paradigm for Split Learning)

- Exploits circular convolution and the orthogonality of features to avoid information loss

- Reduces memory overhead by 1152x and computation overhead by 2.25x compared to the SOTA dimension-wise compression method
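To illustrate the batch-wise idea, here is a minimal sketch in plain Python of how several feature vectors can be bound to random keys with circular convolution, summed into a single vector, and approximately recovered with circular correlation. It is not the code from this repository: the function names, the dimensions `D` and `R`, and the choice of Gaussian keys with variance `1/D` are all assumptions made for the example.

```python
import math
import random


def circular_convolve(a, b):
    """Circular convolution: out[n] = sum_m a[m] * b[(n - m) mod D]."""
    d = len(a)
    return [sum(a[m] * b[(n - m) % d] for m in range(d)) for n in range(d)]


def circular_correlate(a, b):
    """Circular correlation: out[n] = sum_m a[m] * b[(n + m) mod D]."""
    d = len(a)
    return [sum(a[m] * b[(n + m) % d] for m in range(d)) for n in range(d)]


def compress(features, keys):
    """Bind each feature to its key and sum the results into one vector."""
    d = len(features[0])
    out = [0.0] * d
    for z, k in zip(features, keys):
        bound = circular_convolve(k, z)
        out = [o + b for o, b in zip(out, bound)]
    return out


def decompress(compressed, keys):
    """Approximately recover each feature by correlating with its key."""
    return [circular_correlate(k, compressed) for k in keys]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


random.seed(0)
D, R = 256, 4  # hypothetical feature dimension and batch compression ratio

# Assumed key distribution: i.i.d. Gaussian with variance 1/D, so that
# each key has roughly unit norm and distinct keys are nearly orthogonal.
keys = [[random.gauss(0.0, 1.0 / math.sqrt(D)) for _ in range(D)] for _ in range(R)]
features = [[random.gauss(0.0, 1.0) for _ in range(D)] for _ in range(R)]

compressed = compress(features, keys)    # R vectors of size D -> 1 vector of size D
recovered = decompress(compressed, keys)  # noisy estimates of the R originals

for i in range(R):
    print(f"sample {i}: cosine(original, recovered) = {cosine(features[i], recovered[i]):.3f}")
```

In this sketch only one `D`-dimensional vector would be transmitted for every `R` samples. The recovery is approximate, and each estimate should be far closer to its own original than to the other samples in the group. A practical implementation would likely use an FFT instead of the quadratic loops shown here.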

Presentation Video : Click here

The data preprocessing source code can be found in `CIFAR10/data preprocess src` and `CIFAR100/data preprocess src`.

| Tasks | Datasets :point_down: |
|---|---|
| Image Classification | CIFAR-10, CIFAR-100 |

- Use the following commands:

$ cd CIFAR10 # or cd CIFAR100

$ cd "data preprocess src"

$ python download_data.py

- The data structure should be formatted like this:

CIFAR10(Current dir)

├──CIFAR

│ ├── train

│ ├── val

├──data preprocess src

│ ├── download_data.py

- Python 3.6

- PyTorch 1.4.0

- torchvision

- CUDA 10.2

- tensorflow_datasets

- Other dependencies: numpy, matplotlib, tensorflow

Modify parameters in the shell script.

| Parameters | Definition |
|---|---|
| --batch | batch size |
| --epoch | number of training epochs |
| --dump_path | save path of experiment logs and checkpoints |
| --arch | model architecture (resnet50/vgg16) |
| --split | split point of the model |
| --bcr | Batch Compression Ratio R |
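As a rough illustration of how the flags in this table could be consumed on the Python side, here is a hypothetical `argparse` sketch. Only the flag names and the two `--arch` values come from the table; the types, the default values, and the `build_parser` helper are assumptions, and the actual training script may define them differently.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the flags in the parameter table."""
    parser = argparse.ArgumentParser(description="C3-SL training options (sketch)")
    # All defaults below are placeholders, not values taken from the repository.
    parser.add_argument("--batch", type=int, default=64, help="batch size")
    parser.add_argument("--epoch", type=int, default=100, help="number of training epochs")
    parser.add_argument("--dump_path", type=str, default="./logs",
                        help="save path of experiment logs and checkpoints")
    parser.add_argument("--arch", type=str, default="resnet50",
                        choices=["resnet50", "vgg16"], help="model architecture")
    # Assumed to be an integer index; the real script may encode it differently.
    parser.add_argument("--split", type=int, default=1, help="split point of the model")
    parser.add_argument("--bcr", type=int, default=4, help="Batch Compression Ratio R")
    return parser


# Parse an explicit argument list so the example does not depend on sys.argv.
args = build_parser().parse_args(["--arch", "vgg16", "--bcr", "8"])
print(args.arch, args.bcr, args.batch)
```

Restricting `--arch` with `choices` makes a mistyped architecture name fail at parse time instead of partway through a run.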

cd CIFAR10/C3-SL

./train.sh

- Comparable Accuracy with SOTA

- Greatly Reduce Resource Overhead

@article{hsieh2022c3,

title={C3-SL: Circular Convolution-Based Batch-Wise Compression for Communication-Efficient Split Learning},

author={Hsieh, Cheng-Yen and Chuang, Yu-Chuan and others},

journal={arXiv preprint arXiv:2207.12397},

year={2022}

}