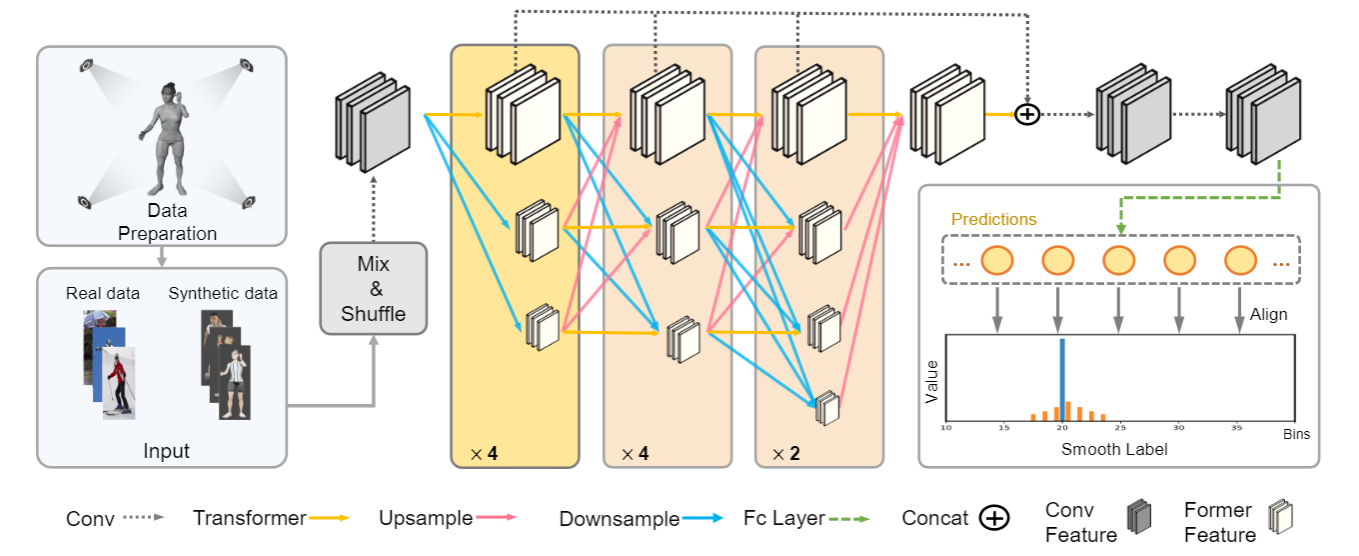

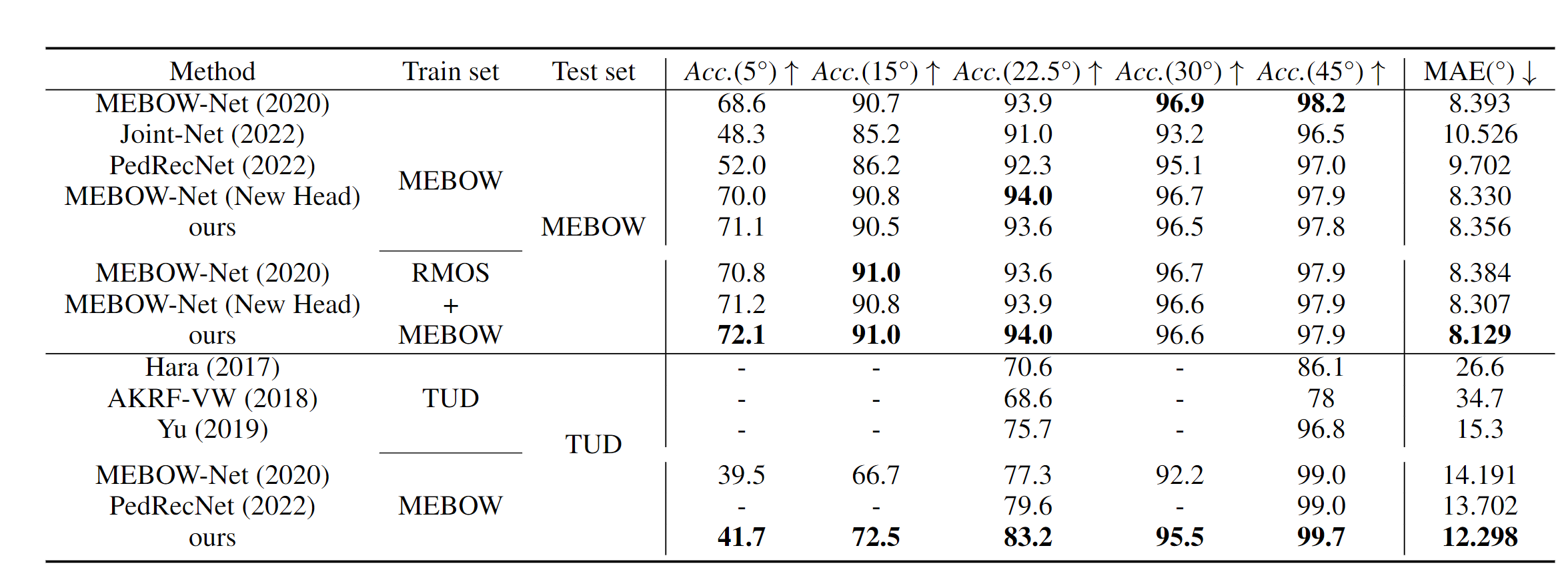

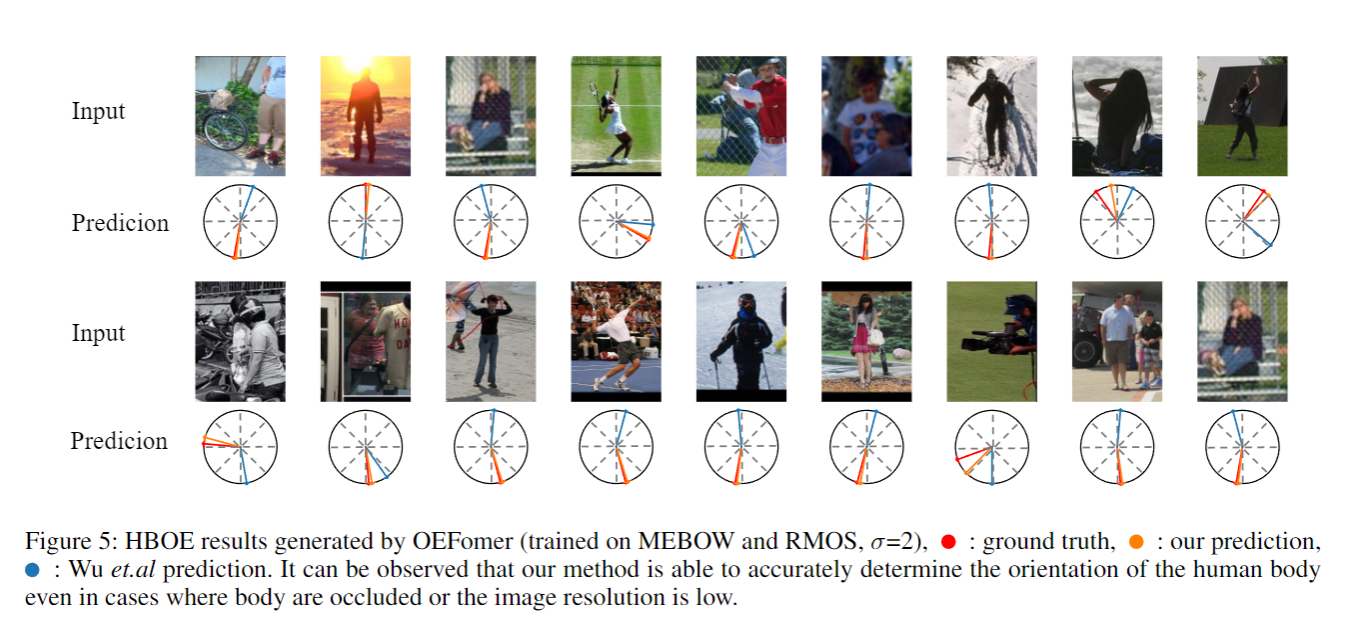

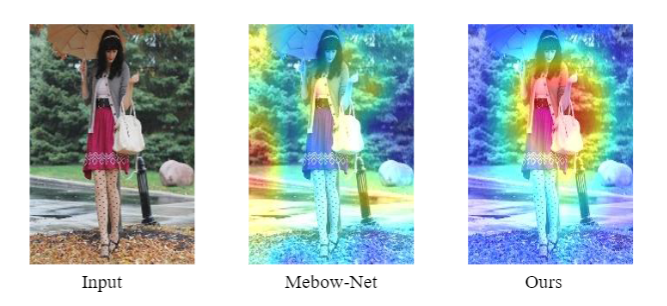

Towards Fine-Grained HBOE with Rendered Orientation Set and Laplace Smoothing

Ruisi Zhao, Mingming Li, Zheng Yang, Binbin Lin, Xiaohui Zhong, Xiaobo Ren, Deng Cai, Boxi Wu

Official code for "Towards Fine-Grained HBOE". This repository contains two versions of the implementation:

- Original version (paper results and evaluation)

- Optimized version (lower memory usage and a simpler model). In the model section of the code, we design a new head with local-window attention; see the code for details. Compared with OEFormer, this version significantly reduces training cost. The results are shown in the accompanying image.
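To illustrate the general idea behind local-window attention, here is a minimal single-head, 1D sketch in plain Python. It is a generic example, not the repository's head: the function name `local_window_attention` and the `radius` parameter are hypothetical. The actual head may use 2D windows over feature maps, multiple heads, and learned projections, so please check the model code for the real design.

```python
import math


def local_window_attention(q, k, v, radius):
    """Generic single-head 1D local-window attention (illustrative sketch only).

    q, k: lists of query / key vectors (lists of floats), one per position.
    v: list of value vectors, one per position.
    Position i attends only to positions j with |i - j| <= radius.
    """
    n = len(q)
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for i in range(n):
        # Clip the window at the sequence boundaries.
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        # Scaled dot-product scores, computed only inside the window.
        scores = [scale * sum(a * b for a, b in zip(q[i], k[j])) for j in range(lo, hi)]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        # Softmax-weighted average of the value vectors inside the window.
        out.append([
            sum(w * v[j][c] for w, j in zip(weights, range(lo, hi))) / total
            for c in range(len(v[0]))
        ])
    return out
```

Each position scores at most `2 * radius + 1` neighbours instead of all `n` positions, so the cost grows roughly linearly with sequence length rather than quadratically; this is the usual reason local-window attention is cheaper than global attention.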

- [√] Code Released.

- [√] Checkpoint Released.

- [√] Data Released.

- Prepare environment

conda create -n hboe python=3.9 -y

conda activate hboe

- Clone repo & install

git clone https://github.com/Whalesong-zrs/Towards-Fine-grained-HBOE.git towards-fine-grained-hboe

cd towards-fine-grained-hboe

pip install -r requirements.txt

Download the checkpoints and put them in the correct paths.

Pretrained checkpoints:

pretrained_models/pretrained_hrnet.pth

pretrained_models/pretrained_oeformer.pth

Trained checkpoints:

checkpoints/hrnet_head.pth

checkpoints/oeformer.pth

If you want to quickly reproduce our method, we provide the resources used in the paper in the table below. For the MEBOW dataset, please refer to MEBOW for details.

| Paper Resources | Rendered Datasets | Checkpoints |
|---|---|---|
| Download Link | Google Drive | Google Drive |

After downloading, arrange the files as follows:

towards-fine-grained-hboe

├── checkpoints

│   ├── hrnet_head.pth        # Trained checkpoints

│   └── oeformer.pth

├── experiments

│   ├── hrnet_head.yaml       # Training / inference settings

│   └── oeformer.yaml

├── imgs

├── lib

│   ├── config                # Config scripts

│   ├── core                  # Training and evaluation

│   ├── dataset

│   └── utils

├── logs

├── model_arch

├── output

├── pretrained_models

├── tools

...
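Before training or testing, it can help to confirm that the downloaded files ended up where the layout above expects them. The following is a minimal Python sketch that assumes it is run from the repository root. The helper `missing_paths` is hypothetical and not part of the repository, and the path lists are copied from this README, not read from the code or configs.

```python
from pathlib import Path

# Paths copied from the checkpoint list and directory tree in this README (assumption:
# the configs expect exactly these locations).
EXPECTED_FILES = [
    "pretrained_models/pretrained_hrnet.pth",
    "pretrained_models/pretrained_oeformer.pth",
    "checkpoints/hrnet_head.pth",
    "checkpoints/oeformer.pth",
    "experiments/hrnet_head.yaml",
    "experiments/oeformer.yaml",
]
EXPECTED_DIRS = ["lib/config", "lib/core", "lib/dataset", "lib/utils", "tools"]


def missing_paths(root: str = ".") -> list:
    """Return the expected files and directories that do not exist under `root`."""
    base = Path(root)
    missing = [f for f in EXPECTED_FILES if not (base / f).is_file()]
    missing += [d for d in EXPECTED_DIRS if not (base / d).is_dir()]
    return missing


if __name__ == "__main__":
    gaps = missing_paths(".")
    if gaps:
        print("Missing:")
        for p in gaps:
            print("  " + p)
    else:
        print("Layout looks complete.")
```

An empty result only means the files named in this README exist. It does not check that the checkpoints are intact or match the chosen config.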

# check your config

bash train.sh

# check your config

bash test.sh

This codebase builds on MEBOW. Thanks for open-sourcing! We also acknowledge the following great open-source projects:

@inproceedings{zhao2024towards,

title={Towards Fine-Grained HBOE with Rendered Orientation Set and Laplace Smoothing},

author={Zhao, Ruisi and Li, Mingming and Yang, Zheng and Lin, Binbin and Zhong, Xiaohui and Ren, Xiaobo and Cai, Deng and Wu, Boxi},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

volume={38},

number={7},

pages={7505--7513},

year={2024}

}

If you have any questions or suggestions for improvement, please email Ruisi Zhao (zhaors00@zju.edu.cn) or open an issue.