This package provides functionality for imaging and reconstruction with a lensless camera known as DiffuserCam [1]. We use a more rudimentary version of the DiffuserCam, with a piece of tape in place of the lens and the Raspberry Pi HQ camera sensor (the V2 sensor is also supported). The same principles apply to a different diffuser or sensor, although the capture script would need to change. This project is largely based on the work of Prof. Laura Waller's group at UC Berkeley, so huge kudos to them for the idea and for making the tools, code, and data available!

We've also written a few Medium articles to guide you through building the DiffuserCam, measuring data with it, and reconstructing images:

- Raspberry Pi setup and SSH'ing without password (needed for the remote capture/display scripts).

- Building DiffuserCam.

- Measuring DiffuserCam PSF and raw data.

- Imaging with DiffuserCam.

Note that this material has been prepared for our graduate signal processing course at EPFL, and therefore includes some exercises / code to complete. If you are an instructor or trying to replicate this tutorial, feel free to send an email to eric[dot]bezzam[at]epfl[dot]ch.

The expected workflow is to have a local computer which interfaces remotely with a Raspberry Pi equipped with the HQ camera sensor (or V2 sensor as in the original tutorial).

The software from this repository has to be installed on both your local machine and the Raspberry Pi (from the home directory of the Pi). Below are commands that worked for our configuration (Ubuntu 21.04), but there are certainly other ways to download a repository and install the library locally.

    # download from GitHub
    git clone git@github.com:LCAV/DiffuserCam.git

    # install in virtual environment
    cd DiffuserCam
    python3.9 -m venv diffcam_env
    source diffcam_env/bin/activate
    pip install -e .

On the Raspberry Pi, you may also have to install the following:

    sudo apt-get install libimage-exiftool-perl
    sudo apt-get install libatlas-base-dev

Note that we highly recommend using Python 3.9, as its end-of-life is Oct 2025. Some Python library versions may not be available with earlier versions of Python.
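If you want to confirm which interpreter your virtual environment actually picked up, a small check like the following can help. It is only a sketch: the helper name `meets_recommendation` is ours and is not part of this package.

```python
import sys

# Version recommended in this README.
RECOMMENDED = (3, 9)


def meets_recommendation(version_info=sys.version_info, minimum=RECOMMENDED):
    """Return True if the (major, minor) version is at least `minimum`."""
    return tuple(version_info[:2]) >= minimum


if not meets_recommendation():
    # Only a warning: the install may still work, but some pinned
    # library versions may be unavailable on older interpreters.
    print(
        f"Python {sys.version_info.major}.{sys.version_info.minor} is older "
        "than 3.9; some dependencies may not install."
    )
```

Run it inside the activated `diffcam_env` environment, on both the local machine and the Raspberry Pi.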

For plotting on your local computer, you may also need to install Tk.

The scripts for remote capture and remote display assume that you can SSH to the Raspberry Pi without a password. To set this up, you can follow the instructions from this page. Do not set a passphrase for your key pair, as this will not work with the provided scripts.
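Before running the remote scripts, it can be useful to verify that key-based login really works without any prompt. The sketch below relies on the standard OpenSSH options `BatchMode` and `ConnectTimeout`. The function names are ours, not part of this package, and the hostname in the usage note is a placeholder.

```python
import subprocess


def ssh_check_command(hostname, timeout_s=5):
    """Build an ssh command that fails instead of prompting for a password."""
    return [
        "ssh",
        "-o", "BatchMode=yes",  # disable password / passphrase prompts
        "-o", f"ConnectTimeout={timeout_s}",
        hostname,
        "true",  # no-op command executed on the remote side
    ]


def can_ssh_without_password(hostname, timeout_s=5):
    """Return True if a non-interactive login to `hostname` succeeds."""
    try:
        result = subprocess.run(
            ssh_check_command(hostname, timeout_s),
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
    except FileNotFoundError:
        # The ssh client is not installed or not on the PATH.
        return False
    return result.returncode == 0
```

For example, `can_ssh_without_password("pi@raspberrypi.local")` should return `True` once the key pair is set up, where `pi@raspberrypi.local` stands in for your own user and hostname.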

We have noticed problems with locale when running the remote capture and display scripts, for example:

    perl: warning: Setting locale failed.
    perl: warning: Please check that your locale settings:
    ...

This may arise due to incompatible locale settings between your local machine and the Raspberry Pi. There are two possible solutions to this, as proposed in this forum.

- Comment out `SendEnv LANG LC_*` in `/etc/ssh/ssh_config` on your laptop.
- Comment out `AcceptEnv LANG LC_*` in `/etc/ssh/sshd_config` on the Raspberry Pi.
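Either change can be made by hand in a text editor. If you prefer to script it, the sketch below comments out a directive in the text of a config file. The helper `comment_out_directive` is hypothetical and works on a string, so you can try it on a copy of the file before touching the real one, which requires root privileges.

```python
def comment_out_directive(config_text, directive):
    """Comment out every active line that starts with `directive`.

    Lines that are already commented are left unchanged, so the
    function can safely be applied more than once.
    """
    out = []
    for line in config_text.splitlines():
        stripped = line.lstrip()
        if stripped.startswith(directive):
            indent = line[: len(line) - len(stripped)]
            line = f"{indent}# {stripped}"
        out.append(line)
    return "\n".join(out) + "\n"


# Illustrative config content (not read from a real file).
sample = "Host *\n    SendEnv LANG LC_*\n    HashKnownHosts yes\n"
print(comment_out_directive(sample, "SendEnv LANG LC_*"))
```

On the Raspberry Pi side, changes to `sshd_config` typically take effect only after the SSH service is restarted.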

You can download example PSFs and raw data that we've measured here. We recommend placing this content in the `data` folder.

You can download a subset of the DiffuserCam Lensless Mirflickr Dataset that we've prepared here with `scripts/prepare_mirflickr_subset.py`.

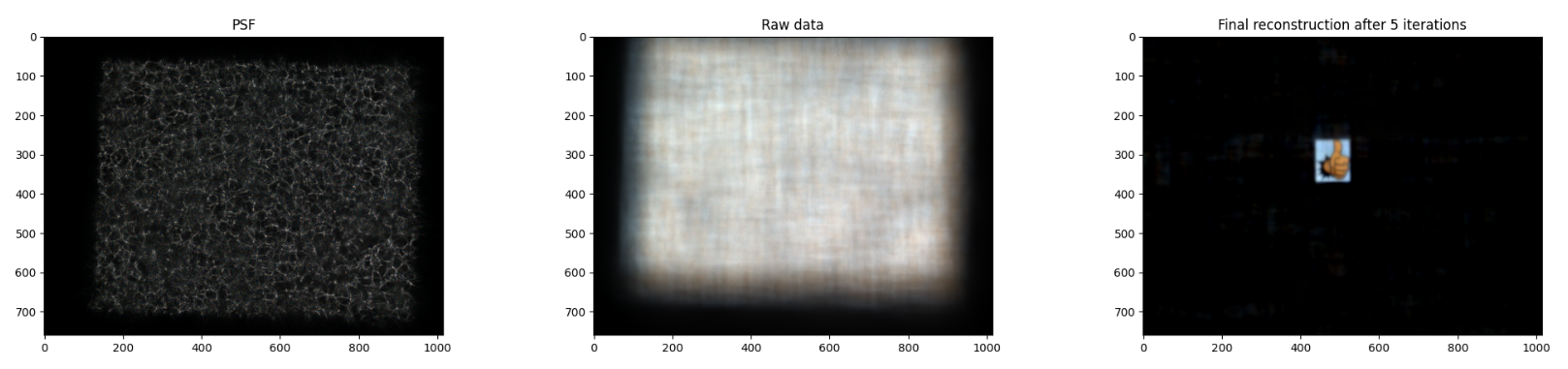

There is one script / algorithm available for reconstruction - ADMM [3].

    python scripts/admm.py --psf_fp data/psf/diffcam_rgb.png \
        --data_fp data/raw_data/thumbs_up_rgb.png --n_iter 5

A template, `scripts/reconstruction_template.py`, can be used to implement other reconstruction approaches. Moreover, the abstract class `diffcam.recon.ReconstructionAlgorithm` can be used to define new reconstruction algorithms by defining a few methods: the forward model and its adjoint (`_forward` and `_backward`), the update step (`_update`), a method to reset state variables (`_reset`), and an image formation method (`_form_method`). Functionality for iterating, saving, and visualization is implemented within the abstract class. `diffcam.admm.ADMM` shows an example reconstruction implementation deriving from this abstract class.
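To illustrate how these five methods fit together, here is a self-contained toy sketch. It does not use or reproduce `diffcam.recon.ReconstructionAlgorithm`: only the method names are taken from the description above, while the class names, the constructor arguments, and the `apply` loop are assumptions made for illustration. For brevity it uses plain gradient descent on a 1D circular convolution rather than ADMM.

```python
from abc import ABC, abstractmethod


class ReconstructionSketch(ABC):
    """Toy stand-in for an iterative reconstruction base class (hypothetical)."""

    def __init__(self, psf, data):
        self._psf = list(psf)
        self._data = list(data)
        self._reset()

    @abstractmethod
    def _reset(self):
        """Reset state variables."""

    @abstractmethod
    def _forward(self, x):
        """Forward model."""

    @abstractmethod
    def _backward(self, y):
        """Adjoint of the forward model."""

    @abstractmethod
    def _update(self):
        """One iteration of the algorithm."""

    @abstractmethod
    def _form_method(self):
        """Form the output image from the state variables."""

    def apply(self, n_iter=100):
        """Shared iteration loop; subclasses only supply the methods above."""
        for _ in range(n_iter):
            self._update()
        return self._form_method()


class GradientDescentSketch(ReconstructionSketch):
    """Minimize 0.5 * ||h * x - y||^2, where * is a 1D circular convolution."""

    def __init__(self, psf, data, step=1.0):
        self._step = step
        super().__init__(psf, data)

    def _reset(self):
        self._x = [0.0] * len(self._data)

    def _forward(self, x):
        n = len(x)
        return [
            sum(h * x[(i - k) % n] for k, h in enumerate(self._psf))
            for i in range(n)
        ]

    def _backward(self, y):
        # Adjoint of circular convolution: circular correlation with the PSF.
        n = len(y)
        return [
            sum(h * y[(i + k) % n] for k, h in enumerate(self._psf))
            for i in range(n)
        ]

    def _update(self):
        residual = [a - b for a, b in zip(self._forward(self._x), self._data)]
        grad = self._backward(residual)
        self._x = [xi - self._step * gi for xi, gi in zip(self._x, grad)]

    def _form_method(self):
        # Clip negative values, since image intensities are non-negative.
        return [max(v, 0.0) for v in self._x]
```

With a toy PSF such as `[0.6, 0.3, 0.1]` and data simulated through `_forward`, calling `apply(n_iter=200)` recovers the original signal closely. The point of the split is that a new algorithm only has to define its own state and update rule, while the iteration loop stays in the base class.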

You can run ADMM on the DiffuserCam Lensless Mirflickr Dataset with the following script.

    python scripts/evaluate_mirflickr_admm.py --data <FP>

where `<FP>` is the path to the dataset.

However, the original dataset is quite large (25000 files, 100 GB), so we've prepared this subset (200 files, 725 MB), which you can also pass to the script. You can also limit the number of files processed with `--n_files`.

    python scripts/evaluate_mirflickr_admm.py \
        --data DiffuserCam_Mirflickr_200_3011302021_11h43_seed11 \
        --n_files 10 --save

The `--save` flag will save a viewable image for each reconstruction.

You can also apply ADMM on a single image and visualize the iterative reconstruction.

    python scripts/apply_admm_single_mirflickr.py \
        --data DiffuserCam_Mirflickr_200_3011302021_11h43_seed11 \
        --fid 172

You can remotely capture raw Bayer data with the following script.

    python scripts/remote_capture.py --exp 0.1 --iso 100 --bayer --fp <FN> --hostname <HOSTNAME>

where `<HOSTNAME>` is the hostname or IP address of your Raspberry Pi, `<FN>` is the name of the file to save the Bayer data, and the other arguments can be used to adjust camera settings.

For collecting images displayed on a screen, we have prepared some software to remotely display images on a Raspberry Pi installed with this software and connected to a monitor.

You first need to install the feh command line tool on your Raspberry Pi.

    sudo apt-get install feh

Then make a folder where we will create and read prepared images.

    mkdir DiffuserCam_display
    mv ~/DiffuserCam/data/original_images/rect.jpg ~/DiffuserCam_display/test.jpg

Then we can use `feh` to launch the image viewer.

    feh DiffuserCam_display --scale-down --auto-zoom -R 0.1 -x -F -Y

Then from your laptop you can use the following script to display an image on the Raspberry Pi:

    python scripts/remote_display.py --fp <FP> --hostname <HOSTNAME> \
        --pad 80 --vshift 10 --brightness 90

where `<HOSTNAME>` is the hostname or IP address of your Raspberry Pi, `<FP>` is the path on your local computer of the image you would like to display, and the other arguments can be used to adjust the positioning of the image and its brightness.
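When collecting measurements for several images, the display and capture scripts can be chained from your local computer. The following is a minimal sketch that only uses the command-line flags shown in this README. The helper names, the output naming scheme `capture_000`, `capture_001`, ..., and the pause between display and capture are assumptions, and in practice the display Pi and the camera Pi may have different hostnames.

```python
import shlex
import subprocess
import time


def display_command(image_fp, hostname, pad=80, vshift=10, brightness=90):
    """Command line for scripts/remote_display.py (flags as in this README)."""
    return [
        "python", "scripts/remote_display.py",
        "--fp", image_fp, "--hostname", hostname,
        "--pad", str(pad), "--vshift", str(vshift),
        "--brightness", str(brightness),
    ]


def capture_command(output_fn, hostname, exp=0.1, iso=100):
    """Command line for scripts/remote_capture.py (flags as in this README)."""
    return [
        "python", "scripts/remote_capture.py",
        "--exp", str(exp), "--iso", str(iso), "--bayer",
        "--fp", output_fn, "--hostname", hostname,
    ]


def plan_measurements(image_fps, hostname):
    """Yield one (display, capture) command pair per image."""
    for i, image_fp in enumerate(image_fps):
        # Hypothetical naming scheme for the captured files.
        yield (
            display_command(image_fp, hostname),
            capture_command(f"capture_{i:03d}", hostname),
        )


def run_plan(image_fps, hostname, settle_s=1.0, dry_run=True):
    """Print the planned commands, or run them when dry_run is False."""
    for show, capture in plan_measurements(image_fps, hostname):
        if dry_run:
            print(shlex.join(show))
            print(shlex.join(capture))
            continue
        subprocess.run(show, check=True)
        time.sleep(settle_s)  # assumed pause so the viewer can refresh
        subprocess.run(capture, check=True)
```

Calling `run_plan(["a.jpg", "b.jpg"], "raspberrypi.local")` prints the commands without executing them. Pass `dry_run=False` from the repository root once the printed commands look right.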

You can use black to format your code.

First install the library.

    pip install black

Then run the formatting script we've prepared.

    ./format_code.sh

[1] Antipa, N., Kuo, G., Heckel, R., Mildenhall, B., Bostan, E., Ng, R., & Waller, L. (2018). DiffuserCam: lensless single-exposure 3D imaging. Optica, 5(1), 1-9.

[2] Monakhova, K., Yurtsever, J., Kuo, G., Antipa, N., Yanny, K., & Waller, L. (2019). Learned reconstructions for practical mask-based lensless imaging. Optics Express, 27(20), 28075-28090.

[3] Boyd, S., Parikh, N., & Chu, E. (2011). Distributed optimization and statistical learning via the alternating direction method of multipliers. Now Publishers Inc.