🏆 Run benchmarks against the most common ASR tools on the market.

**Important:** Deepgram benchmark results have been updated with the latest Nova 2 model.

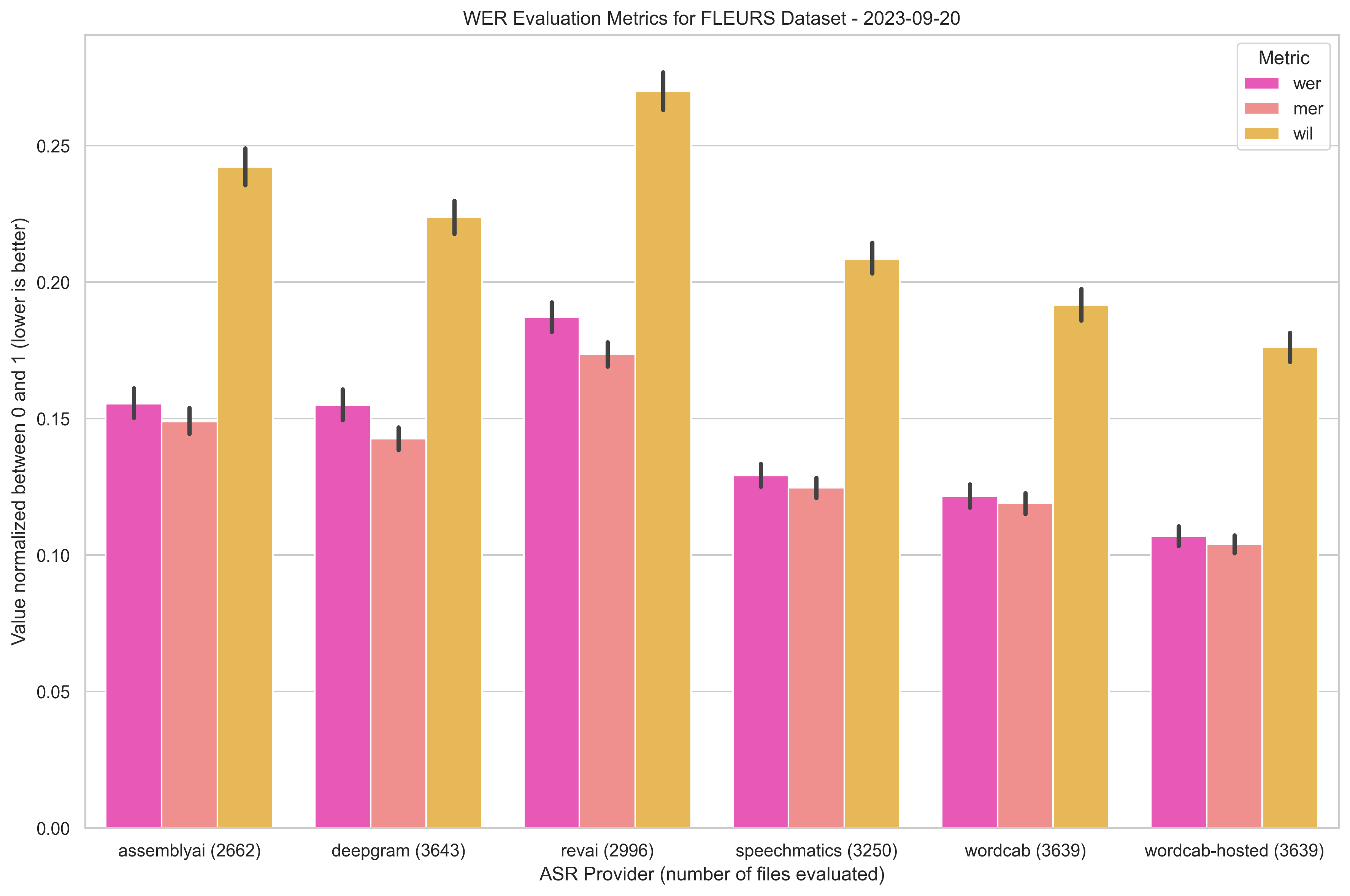

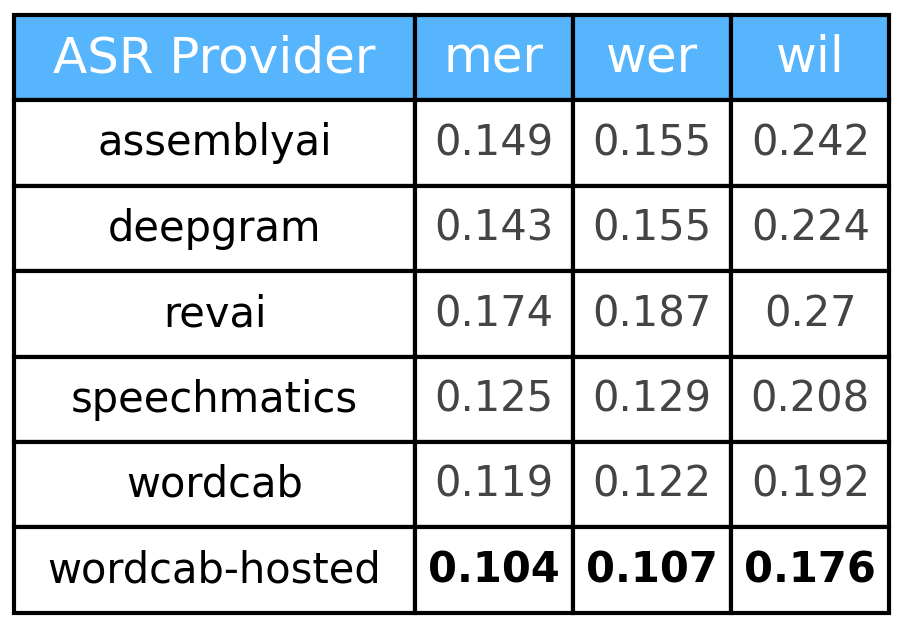

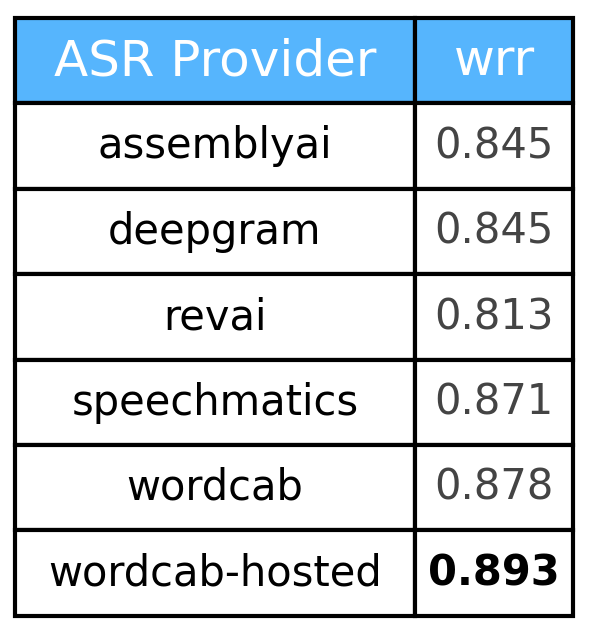

wer = Word Error Rate, mer = Match Error Rate, wil = Word Information Lost, wrr = Word Recognition Rate

- Dataset: Fleurs
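The word-level metrics above are all functions of the hits (H), substitutions (S), deletions (D) and insertions (I) in a minimum-edit-distance alignment between a reference and a hypothesis transcript. The sketch below uses the standard definitions WER = (S + D + I) / (H + S + D), MER = (S + D + I) / (H + S + D + I) and WIL = 1 - H² / ((H + S + D)(H + S + I)). It is an illustration, not rtasr's implementation: it assumes plain whitespace tokenization, no text normalization and a non-empty reference. `wrr` is left out because this README does not say how rtasr defines it.

```python
from dataclasses import dataclass


@dataclass
class AlignmentCounts:
    hits: int
    substitutions: int
    deletions: int
    insertions: int


def align_counts(reference: str, hypothesis: str) -> AlignmentCounts:
    """Count H, S, D, I from a minimum-edit-distance word alignment."""
    ref, hyp = reference.split(), hypothesis.split()
    n, m = len(ref), len(hyp)
    # dist[i][j] is the edit distance between ref[:i] and hyp[:j].
    dist = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        dist[i][0] = i
    for j in range(m + 1):
        dist[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j - 1] + cost,  # hit or substitution
                dist[i - 1][j] + 1,  # deletion (reference word missing)
                dist[i][j - 1] + 1,  # insertion (extra hypothesis word)
            )
    # Backtrack from the bottom-right cell to recover one optimal alignment.
    hits = subs = dels = ins = 0
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0:
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            if dist[i][j] == dist[i - 1][j - 1] + cost:
                if cost == 0:
                    hits += 1
                else:
                    subs += 1
                i, j = i - 1, j - 1
                continue
        if i > 0 and dist[i][j] == dist[i - 1][j] + 1:
            dels += 1
            i -= 1
        else:
            ins += 1
            j -= 1
    return AlignmentCounts(hits, subs, dels, ins)


def wer(c: AlignmentCounts) -> float:
    # Errors divided by the number of reference words (H + S + D).
    errors = c.substitutions + c.deletions + c.insertions
    return errors / (c.hits + c.substitutions + c.deletions)


def mer(c: AlignmentCounts) -> float:
    # Errors divided by the total number of alignment slots (H + S + D + I).
    errors = c.substitutions + c.deletions + c.insertions
    return errors / (c.hits + errors)


def wil(c: AlignmentCounts) -> float:
    ref_len = c.hits + c.substitutions + c.deletions
    hyp_len = c.hits + c.substitutions + c.insertions
    if ref_len == 0 or hyp_len == 0:
        return 1.0  # nothing can be matched, so all information is lost
    return 1 - (c.hits ** 2) / (ref_len * hyp_len)


counts = align_counts("the cat sat on the mat", "the cat sit on mat")
print(counts)
print(f"wer={wer(counts):.3f} mer={mer(counts):.3f} wil={wil(counts):.3f}")
```

For the example pair, the alignment has 4 hits, 1 substitution and 1 deletion, so wer = mer = 2/6 ≈ 0.333 and wil = 1 - 16/30 ≈ 0.467. Note that WER can exceed 1 when the hypothesis contains many insertions, while MER and WIL stay within [0, 1].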

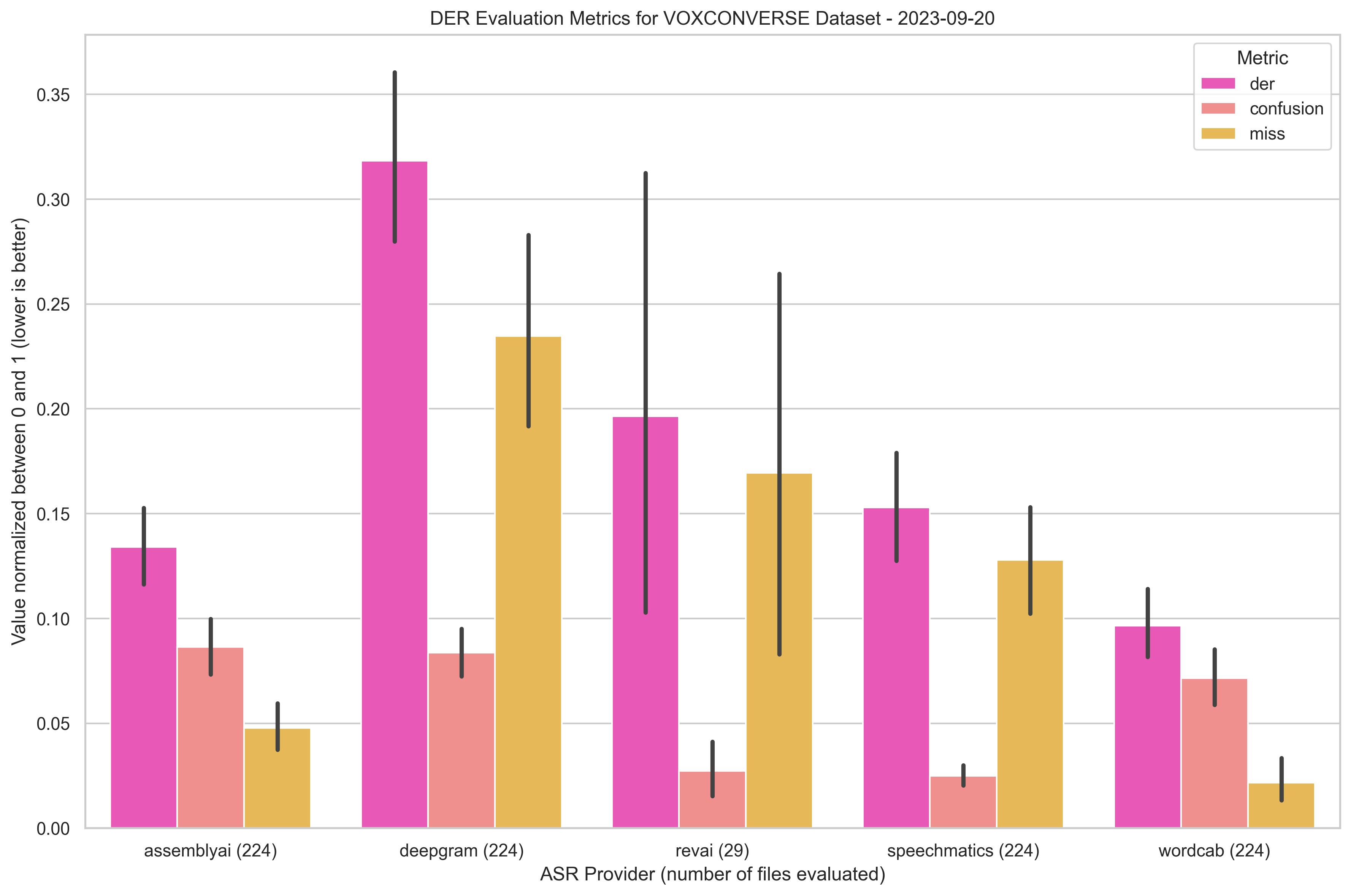

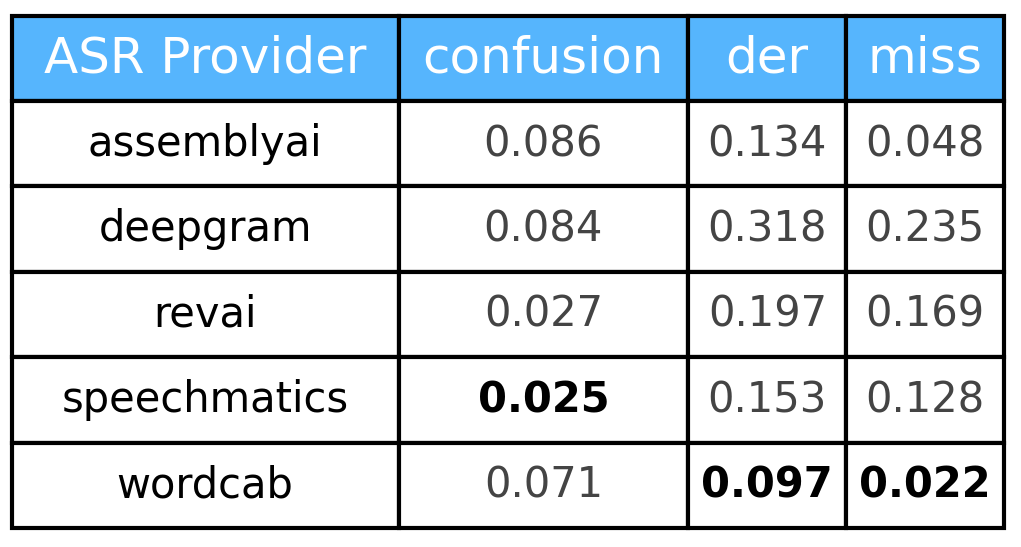

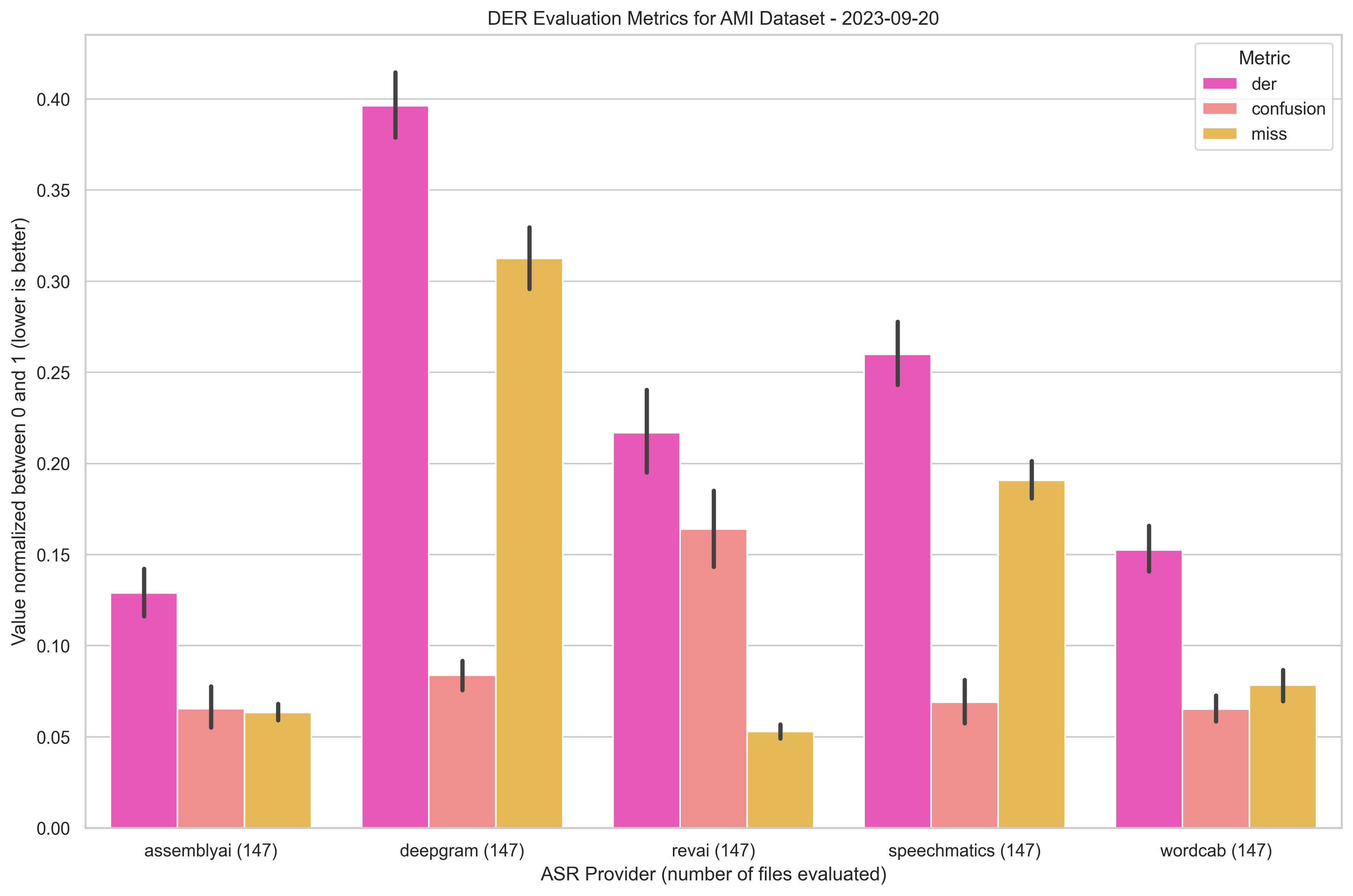

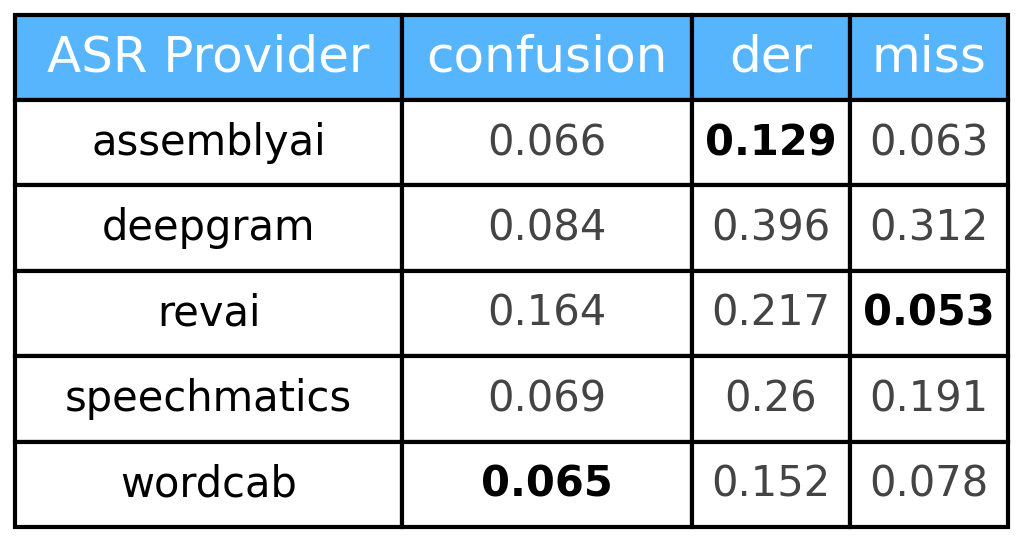

der = Diarization Error Rate, miss = missed detection, confusion = speaker confusion (speech attributed to the wrong speaker), fa = false alarm

- Dataset: VoxConverse

**Note:** Click on the images to view them at full size.

- Dataset: AMI Corpus

**Note:** Click on the images to view them at full size.
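The diarization error rate combines the three error types in the legend above: the durations of missed speech, false-alarm speech and speaker confusion are summed and divided by the total duration of reference speech. The sketch below only performs that final arithmetic and assumes the component durations (in seconds) have already been measured. A complete DER scorer must also find the optimal mapping between reference and hypothesis speakers and may apply a forgiveness collar around segment boundaries; this sketch does neither and is not rtasr's implementation.

```python
from dataclasses import dataclass


@dataclass
class DiarizationErrors:
    missed: float  # reference speech with no hypothesis speaker (seconds)
    false_alarm: float  # hypothesis speech where the reference is silent (seconds)
    confusion: float  # speech attributed to the wrong speaker (seconds)
    total: float  # total duration of reference speech (seconds)


def diarization_error_rate(e: DiarizationErrors) -> dict[str, float]:
    """Return each component as a fraction of reference speech, plus their sum (DER)."""
    if e.total <= 0:
        raise ValueError("total reference speech duration must be positive")
    miss = e.missed / e.total
    fa = e.false_alarm / e.total
    confusion = e.confusion / e.total
    return {"der": miss + fa + confusion, "miss": miss, "fa": fa, "confusion": confusion}


# Hypothetical durations for a single recording.
rates = diarization_error_rate(
    DiarizationErrors(missed=5.0, false_alarm=3.0, confusion=4.0, total=100.0)
)
print({name: round(value, 3) for name, value in rates.items()})
```

With these hypothetical durations, DER = (5 + 3 + 4) / 100 = 0.12. Because false alarms are not bounded by the amount of reference speech, DER can exceed 1.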

Install the latest release from PyPI:

```bash
pip install rtasr
```

Or install from source:

```bash
git clone https://github.com/Wordcab/rtasr
cd rtasr
pip install .
```

The CLI is available through the `rtasr` command.

```bash
rtasr --help
```

```bash
# List everything
rtasr list

# List only datasets
rtasr list -t datasets

# List only metrics
rtasr list -t metrics

# List only providers
rtasr list -t providers
```

Available datasets are:

- `ami`: AMI Corpus
- `voxconverse`: VoxConverse

Download a dataset:

```bash
rtasr download -d <dataset>
```

Implemented ASR providers are:

- `assemblyai`: AssemblyAI
- `aws`: AWS Transcribe
- `azure`: Azure Speech
- `deepgram`: Deepgram
- `google`: Google Cloud Speech-to-Text
- `revai`: RevAI
- `speechmatics`: Speechmatics
- `wordcab`: Wordcab

Run ASR transcription on a given dataset with a given provider.

```bash
rtasr transcription -d <dataset> -p <provider>
```

You can specify as many providers as you want:

```bash
rtasr transcription -d <dataset> -p <provider1> <provider2> <provider3> ...
```

You can specify the dataset split to use:

```bash
rtasr transcription -d <dataset> -p <provider> -s <split>
```

If not specified, all the available splits will be used.
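If you drive the benchmark from a Python script, the command line can be assembled from the options this README documents (`-d`, `-p`, `-s`, `--no-cache`, `--debug`). The `build_transcription_command` helper below is hypothetical and not part of rtasr; it only builds and prints the argument list, which you could then pass to `subprocess.run` once rtasr is installed. The dataset, provider and split values in the example are assumptions chosen for illustration.

```python
import shlex
from typing import Optional, Sequence


def build_transcription_command(
    dataset: str,
    providers: Sequence[str],
    split: Optional[str] = None,
    no_cache: bool = False,
    debug: bool = False,
) -> list[str]:
    """Assemble an `rtasr transcription` argument list (hypothetical helper)."""
    if not providers:
        raise ValueError("at least one provider is required")
    cmd = ["rtasr", "transcription", "-d", dataset, "-p", *providers]
    if split is not None:
        cmd += ["-s", split]  # omit to use every available split
    if no_cache:
        cmd.append("--no-cache")  # transcribe again even if results are cached
    if debug:
        cmd.append("--debug")  # only one file per split for each provider
    return cmd


# Hypothetical values; "test" is an assumed split name.
cmd = build_transcription_command("voxconverse", ["deepgram", "wordcab"], split="test")
print(shlex.join(cmd))
# To actually run it: subprocess.run(cmd, check=True)
```

Keeping the arguments as a list, rather than one string, avoids shell-quoting issues when the command is eventually executed.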

By default, the transcription results for each provider are cached in the `~/.cache/rtasr/transcription` directory.

If you don't want to use the cache, use the `--no-cache` flag.

```bash
rtasr transcription -d <dataset> -p <provider> --no-cache
```

Note: the cache prevents the same file from being transcribed twice. Without it, the whole dataset is transcribed again, which may incur additional provider costs. We aren't responsible for any extra costs.
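Before re-running with `--no-cache`, it can help to check what is already cached. The sketch below counts the files under the cache directory named above, grouped by immediate subdirectory. It assumes, without confirmation from this README, that each provider gets its own subdirectory there, and it makes no assumption about the format of the cached files.

```python
from collections import Counter
from pathlib import Path

# Cache location documented in this README; the per-provider layout is an assumption.
DEFAULT_CACHE = Path.home() / ".cache" / "rtasr" / "transcription"


def cached_files_per_provider(cache_dir: Path = DEFAULT_CACHE) -> Counter:
    """Count regular files under each immediate subdirectory of cache_dir."""
    counts: Counter = Counter()
    if not cache_dir.is_dir():
        return counts  # nothing has been cached yet
    for provider_dir in sorted(p for p in cache_dir.iterdir() if p.is_dir()):
        counts[provider_dir.name] = sum(1 for f in provider_dir.rglob("*") if f.is_file())
    return counts


for provider, n_files in cached_files_per_provider().items():
    print(f"{provider}: {n_files} cached file(s)")
```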

Use the `--debug` flag to transcribe only one file per split for each provider.

```bash
rtasr transcription -d <dataset> -p <provider> --debug
```

The `evaluation` command runs an evaluation on the transcription results.

If you don't specify the split, the evaluation will be run on the whole dataset.

```bash
rtasr evaluation -m der -d <dataset> -s <split>
rtasr evaluation -m wer -d <dataset> -s <split>
```

To get the plots of the evaluation results, use the `plot` command.

If you don't specify the split, the plots will be generated for all the available splits.

```bash
rtasr plot -m der -d <dataset> -s <split>
rtasr plot -m wer -d <dataset> -s <split>
```

To get the total length of a dataset, use the `audio-length` command.

This command returns the number of minutes of audio in each split of a dataset.

If you don't specify the split, the total length of the dataset will be returned for all the available splits.

```bash
rtasr audio-length -d <dataset> -s <split>
```

For development, make sure `hatch` is installed.

- Run quality checks: `hatch run quality:check`
- Format the code: `hatch run quality:format`
- Run tests: `hatch run tests:run`