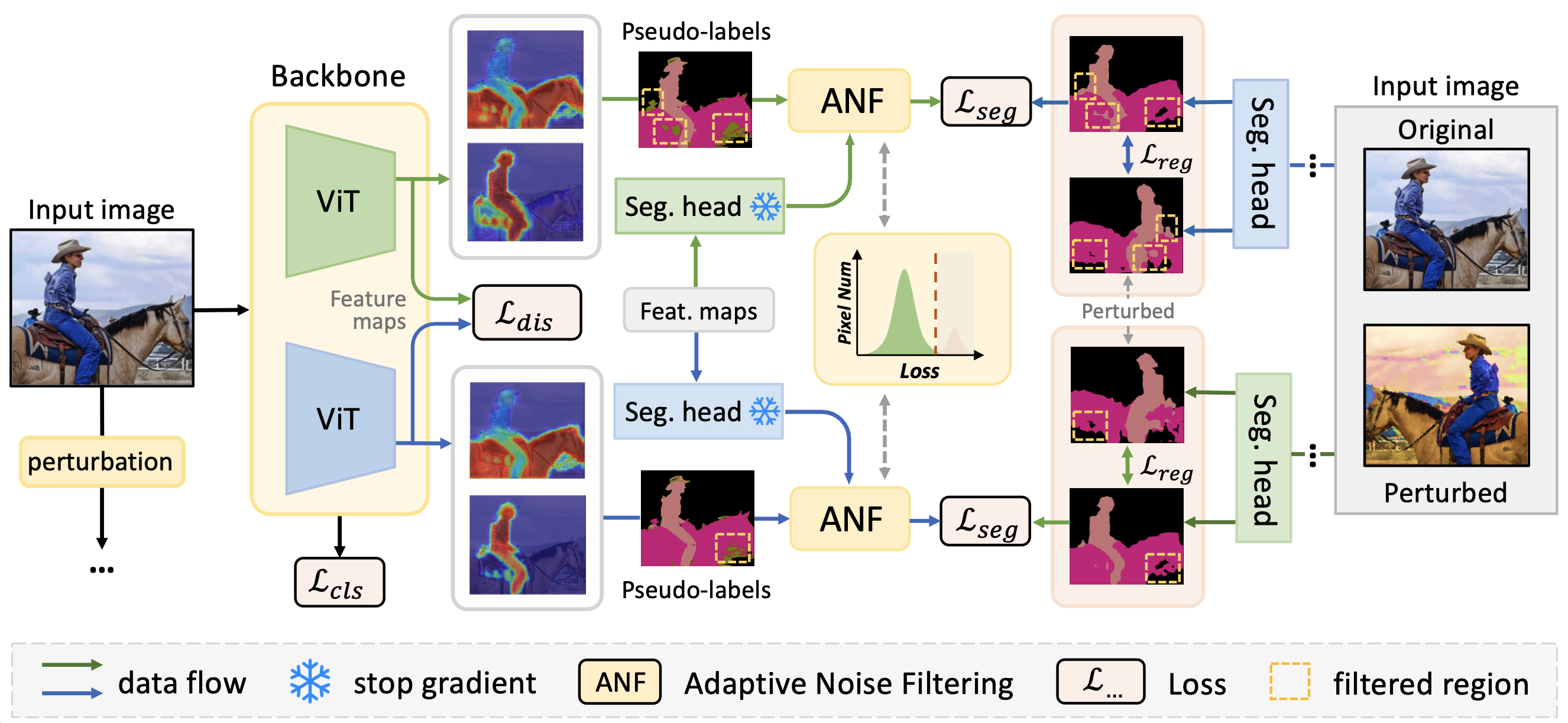

This repository contains the source code of the CVPR 2024 paper: "DuPL: Dual Student with Trustworthy Progressive Learning for Robust Weakly Supervised Semantic Segmentation".

- Mar. 21, 2024 (U2): Added evaluation/visualization scripts for CAM and segmentation inference.

- Mar. 21, 2024 (U1): Released the pre-trained checkpoints and segmentation results 🔥🔥🔥.

- Mar. 17, 2024: Basic training code released.

The implementation is based on PyTorch 1.13.1 with single-node multi-GPU training. Please install the required packages by running:

    pip install -r requirements.txt

VOC dataset:

    wget http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar
    tar -xvf VOCtrainval_11-May-2012.tar

The augmented annotations come from the SBD dataset and can be downloaded from DropBox. After downloading `SegmentationClassAug.zip`, unzip it and move it to `VOCdevkit/VOC2012`. The directory should be:

    VOCdevkit/
    └── VOC2012
        ├── Annotations
        ├── ImageSets
        ├── JPEGImages
        ├── SegmentationClass
        ├── SegmentationClassAug
        └── SegmentationObject

COCO dataset:

    wget http://images.cocodataset.org/zips/train2014.zip
    wget http://images.cocodataset.org/zips/val2014.zip

The training pipeline uses VOC-style segmentation labels for COCO, so please download the converted masks from OneDrive. The directory should be:

    MSCOCO/
    ├── coco2014
    │   ├── train2014
    │   └── val2014
    └── SegmentationClass (extract the downloaded "coco_mask.tar.gz")
        ├── train2014
        └── val2014

NOTE: You can also use the scripts provided at this repo to convert the COCO segmentation masks.
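Before training, it can help to confirm that both dataset roots match the layouts above. The helper below is a hypothetical sketch, not a script shipped with DuPL: the directory names are copied from the two trees, and the roots are assumed to be `VOCdevkit/VOC2012` and `MSCOCO` as shown there.

```python
import sys
from pathlib import Path

# Sub-directories listed in the VOC tree (root: VOCdevkit/VOC2012).
VOC_EXPECTED = [
    "Annotations",
    "ImageSets",
    "JPEGImages",
    "SegmentationClass",
    "SegmentationClassAug",
    "SegmentationObject",
]

# Sub-directories listed in the COCO tree (root: MSCOCO).
COCO_EXPECTED = [
    "coco2014/train2014",
    "coco2014/val2014",
    "SegmentationClass/train2014",
    "SegmentationClass/val2014",
]


def missing_dirs(root, expected):
    """Return the entries of `expected` that are not directories under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).is_dir()]


if __name__ == "__main__":
    # Hypothetical usage: python check_layout.py VOCdevkit/VOC2012 MSCOCO
    if len(sys.argv) == 3:
        for root, expected in zip(sys.argv[1:], (VOC_EXPECTED, COCO_EXPECTED)):
            missing = missing_dirs(root, expected)
            print(root, "OK" if not missing else f"missing: {missing}")
```

An empty result means every expected folder exists; it does not check the files inside them.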

To train on Pascal VOC 2012:

    python -m torch.distributed.run --nproc_per_node=2 train_final_voc.py --data_folder [../VOC2012]

NOTE:

- The effective batch size should be 4 (number of GPUs × samples per GPU).
- Set `--nproc_per_node` according to your environment (recommended: 2× NVIDIA RTX 3090 GPUs).

To train on MS COCO:

    python -m torch.distributed.run --nproc_per_node=4 train_final_coco.py --data_folder [../MSCOCO/coco2014]

NOTE:

- The effective batch size should be 8 (number of GPUs × samples per GPU).
- Set `--nproc_per_node` according to your environment (recommended: 4× NVIDIA RTX 3090 GPUs).
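The batch-size notes come down to simple arithmetic: the effective batch size is the number of GPUs times the samples per GPU, so with a fixed effective batch size the per-GPU value follows from the GPU count. A minimal sketch (the function name is illustrative, not a DuPL argument):

```python
def samples_per_gpu(effective_batch_size: int, num_gpus: int) -> int:
    """Per-GPU batch size that keeps num_gpus * samples_per_gpu equal to the target."""
    if num_gpus <= 0:
        raise ValueError("num_gpus must be positive")
    if effective_batch_size % num_gpus != 0:
        raise ValueError(
            f"effective batch size {effective_batch_size} "
            f"is not divisible by {num_gpus} GPUs"
        )
    return effective_batch_size // num_gpus


print(samples_per_gpu(4, 2))  # VOC, 2 GPUs -> 2
print(samples_per_gpu(8, 4))  # COCO, 4 GPUs -> 2
```

For example, training VOC on a single GPU would need 4 samples per GPU to keep the effective batch size at 4. How the per-GPU value is passed to the training scripts is not covered here; check their command-line arguments rather than assuming a flag name.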

CRF post-processing requires `pydensecrf`. Install it from GitHub:

    pip install git+https://github.com/lucasb-eyer/pydensecrf.git

NOTE: `pip install pydensecrf` installs an incompatible version.
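Since CRF post-processing runs only after inference, a quick environment check before a long evaluation may save time. This is an illustrative sketch, not part of the repo: it reports the installed PyTorch version (the README targets 1.13.1) and whether `pydensecrf.densecrf` imports and exposes `DenseCRF2D`, pydensecrf's 2-D dense CRF class. It only catches `ImportError`, so other failure modes of a broken build are not detected.

```python
import importlib
from importlib import metadata


def installed_version(package: str):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def densecrf_available() -> bool:
    """True if pydensecrf.densecrf imports and provides DenseCRF2D."""
    try:
        module = importlib.import_module("pydensecrf.densecrf")
    except ImportError:
        return False
    return hasattr(module, "DenseCRF2D")


if __name__ == "__main__":
    print("torch:", installed_version("torch"), "(README targets 1.13.1)")
    print("pydensecrf usable:", densecrf_available())
```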

To evaluate segmentation on Pascal VOC:

    python eval_seg_voc.py --data_folder [../VOC2012] --model_path [path_to_model]

To evaluate segmentation on MS COCO:

    python -m torch.distributed.launch --nproc_per_node=4 eval_seg_coco_ddp.py --data_folder [../MSCOCO/coco2014] --label_folder [../MSCOCO/SegmentationClass] --model_path [path_to_model]

NOTE:

- The segmentation results will be saved in the checkpoint directory.

- DuPL has two independent models (students), and we select the better one for evaluation. In fact, tricks such as ensembling or a model soup may further improve performance.
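The ensemble and model-soup ideas can be illustrated with plain arithmetic. The sketch below is a toy, not DuPL code: a "state dict" is a dict of flat float lists and a probability map is a list of per-pixel class-probability vectors. With PyTorch, the same averaging would be applied to the tensors in each student's `state_dict()` (soup) or to the two students' softmax outputs (ensemble).

```python
from typing import Dict, List, Sequence

# Toy stand-ins: parameter name -> flat list of weights.
StateDict = Dict[str, List[float]]


def uniform_soup(state_dicts: Sequence[StateDict]) -> StateDict:
    """Model soup: element-wise average of the same parameters across models."""
    n = len(state_dicts)
    return {
        name: [sum(vals) / n for vals in zip(*(sd[name] for sd in state_dicts))]
        for name in state_dicts[0]
    }


def ensemble_predict(prob_maps: Sequence[List[List[float]]]) -> List[int]:
    """Output ensemble: average per-pixel class probabilities, then take the argmax."""
    n = len(prob_maps)
    labels = []
    for pixel_probs in zip(*prob_maps):  # one probability vector per model
        avg = [sum(p) / n for p in zip(*pixel_probs)]
        labels.append(max(range(len(avg)), key=avg.__getitem__))
    return labels


student_a = [[0.9, 0.1], [0.2, 0.8]]  # two pixels, two classes
student_b = [[0.4, 0.6], [0.4, 0.6]]
print(ensemble_predict([student_a, student_b]))  # [0, 1]
```

Souping assumes both students share the same architecture and parameter names; an output ensemble only needs compatible predictions but doubles inference cost. Whether either trick helps for DuPL's two students is not established by this sketch.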

To convert the VOC segmentation results into RGB images:

    # modify the "dir" and "target_dir" before running
    python convert_voc_rgb.py

To generate CAMs on VOC:

    python infer_cam_voc.py --data_folder [../VOC2012] --model_path [path_to_model]

NOTE: The CAM results will be saved in the checkpoint directory.
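`convert_voc_rgb.py` is not reproduced here. As an assumption about what converting VOC masks to RGB involves, the sketch below builds the standard PASCAL VOC color palette from the bits of each class index and maps a mask of class indices to RGB triples (background 0 is black, class 15 "person" is (192, 128, 128), and the ignore label 255 becomes (224, 224, 192)).

```python
def voc_colormap(num_classes: int = 256):
    """Standard PASCAL VOC palette: class index -> (R, G, B), spread from the index bits."""
    palette = []
    for index in range(num_classes):
        r = g = b = 0
        c = index
        for shift in range(7, -1, -1):  # fill colour bits from most significant down
            r |= (c & 1) << shift
            g |= ((c >> 1) & 1) << shift
            b |= ((c >> 2) & 1) << shift
            c >>= 3
        palette.append((r, g, b))
    return palette


def mask_to_rgb(mask, palette=None):
    """Convert a 2-D list of class indices into a 2-D list of RGB tuples."""
    if palette is None:
        palette = voc_colormap()
    return [[palette[v] for v in row] for row in mask]


print(mask_to_rgb([[0, 1], [15, 255]]))
# [[(0, 0, 0), (128, 0, 0)], [(192, 128, 128), (224, 224, 192)]]
```

In practice one would usually keep the mask as a palette-mode (`"P"`) image and attach the flattened palette with Pillow's `Image.putpalette`, rather than expanding every pixel to a tuple.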

- The evaluation on MS COCO uses DDP to accelerate the evaluation stage. Please make sure `torch.distributed.launch` is available in your environment.

- We highly recommend using a high-performance CPU for CRF post-processing, which is quite time-consuming: on MS COCO it may take several hours.
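DDP speeds up evaluation because each process handles only part of the image list; if each process also runs CRF on its own share, that cost is divided as well. A minimal sketch of a strided split, assuming rank and world size are known (in a DDP job they would come from `torch.distributed.get_rank()` and `torch.distributed.get_world_size()`). `eval_seg_coco_ddp.py` may split the data differently.

```python
from typing import List, Sequence


def shard_for_rank(image_ids: Sequence[str], rank: int, world_size: int) -> List[str]:
    """Strided split: rank r gets items r, r + world_size, r + 2 * world_size, ..."""
    if not 0 <= rank < world_size:
        raise ValueError("rank must satisfy 0 <= rank < world_size")
    return list(image_ids[rank::world_size])


ids = [f"img{i}" for i in range(10)]
print(shard_for_rank(ids, 1, 4))  # ['img1', 'img5', 'img9']
```

Every image lands on exactly one rank, and shard sizes differ by at most one. PyTorch's `DistributedSampler` uses the same strided pattern when not shuffling, but by default pads the list so every rank receives the same number of samples, which would evaluate a few images twice.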

We provide DuPL's pre-trained checkpoints on the VOC and COCO datasets. With these checkpoints, you should be able to reproduce the exact performance listed below.

| Dataset | val | Log | Weights | val (with MS+CRF) | test (with MS+CRF) |
|---|---|---|---|---|---|
| VOC | 69.9 | log | weights | 72.2 | 71.6 |
| VOC (21k) | -- | log | weights | 73.3 | 72.8 |
| COCO | -- | log | weights | 43.5 | -- |
| COCO (21k) | -- | log | weights | 44.6 | -- |

All scores are mIoU (%). MS+CRF denotes multi-scale inference followed by CRF post-processing.

The VOC test results are evaluated on the official server, and the result links are provided in the paper.
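The scores above are assumed to follow the standard mIoU definition for semantic segmentation: for each class, IoU = TP / (TP + FP + FN) from a pixel-level confusion matrix, averaged over classes. The sketch below is illustrative and is not the repository's evaluation code; it skips pixels labelled 255 (the ignore label in VOC annotations) and classes that appear in neither the ground truth nor the prediction.

```python
from typing import List, Sequence


def confusion_matrix(gt: Sequence[int], pred: Sequence[int], num_classes: int,
                     ignore_index: int = 255) -> List[List[int]]:
    """Pixel counts: rows are ground-truth classes, columns are predicted classes."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        if g == ignore_index:
            continue  # ignored pixels do not count towards any class
        cm[g][p] += 1
    return cm


def mean_iou(cm: List[List[int]]) -> float:
    """Mean of TP / (TP + FP + FN) over classes with a non-zero denominator."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class c predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp  # other classes predicted as c
        if tp + fp + fn > 0:
            ious.append(tp / (tp + fp + fn))
    return sum(ious) / len(ious) if ious else 0.0


gt = [0, 0, 1, 1, 255]
pred = [0, 1, 1, 1, 0]
cm = confusion_matrix(gt, pred, num_classes=2)
print(cm)            # [[1, 1], [0, 2]]
print(mean_iou(cm))  # (1/2 + 2/3) / 2 ≈ 0.583
```

For VOC, `num_classes` would be 21 (20 object classes plus background), and the confusion matrix would be accumulated over all validation images before computing the mean.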

We provide visualizations of CAMs and segmentation results (RGB) on VOC 2012 (val and test) and MS COCO at the following links. We hope they make it easy to compare with other works :)

| Dataset | Link | Model |
|---|---|---|
| VOC - Validation | dupl_voc_val.zip | DuPL (VOC test: 71.6) |
| VOC - Test | dupl_voc_test.zip | DuPL (VOC test: 71.6) |
| COCO - Validation | dupl_coco_val.zip | DuPL (COCO val: 43.5) |

Please kindly cite our paper if you find it helpful in your work:

    @inproceedings{wu2024dupl,
      title={DuPL: Dual Student with Trustworthy Progressive Learning for Robust Weakly Supervised Semantic Segmentation},
      author={Wu, Yuanchen and Ye, Xichen and Yang, Kequan and Li, Jide and Li, Xiaoqiang},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      pages={3534--3543},
      year={2024}
    }

We would like to thank all the researchers who open-source their work and make this project possible, especially the authors of ToCo for their brilliant work.