Jiang Wu1 · Rui Li1,2 · Haofei Xu2,3 · Wenxun Zhao1 · Yu Zhu1

Jinqiu Sun1 · Yanning Zhang1

1Northwestern Polytechnical University · 2ETH Zürich · 3University of Tübingen, Tübingen AI Center

CVPR 2024

In this paper,

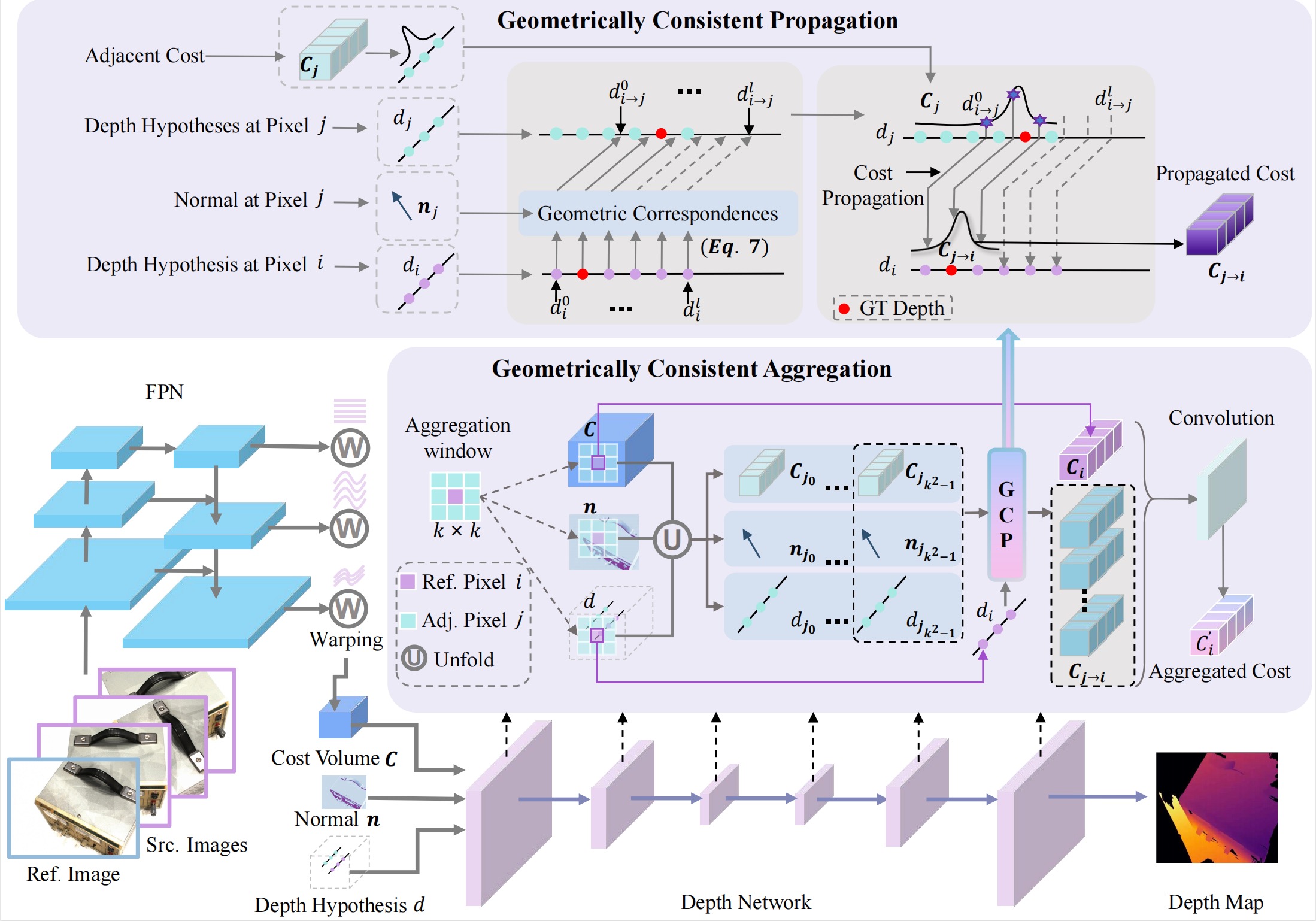

we propose GoMVS to aggregate geometrically consistent costs, yielding better utilization of adjacent geometries. Specifically, we correspond and propagate adjacent costs to the reference pixel by leveraging the local geometric smoothness in conjunction with surface normals. We achieve this by the geometric consistent propagation (GCP) module. It computes the correspondence from the adjacent

depth hypothesis space to the reference depth space using surface normals, then uses the correspondence to propagate adjacent costs to the reference geometry, followed by a convolution for aggregation. Our method achieves new

state-of-the-art performance on DTU, Tanks & Temple, and ETH3D datasets. Notably, our method ranks 1st on the

Tanks & Temple Advanced benchmark.
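The mapping from an adjacent depth to the reference depth can be illustrated with the standard local-plane relation. The snippet below is a minimal sketch, not the GCP module from this repository: the function name `propagate_depth`, the `(x, y)` pixel convention, and the pinhole intrinsics `K` are assumptions made for illustration. It assumes the reference pixel `p` and the adjacent pixel `q` lie on one plane with normal `normal` in camera coordinates.

```python
import numpy as np

def propagate_depth(depth_q, normal, K, p, q):
    """Map the depth at adjacent pixel q to reference pixel p.

    Assumes p and q lie on the same local plane with the given normal
    (camera coordinates). Pixels are (x, y); K is a 3x3 pinhole matrix.
    """
    K_inv = np.linalg.inv(K)
    ray_p = K_inv @ np.array([p[0], p[1], 1.0])  # back-projected ray through p
    ray_q = K_inv @ np.array([q[0], q[1], 1.0])  # back-projected ray through q
    # Plane constraint: n^T (d_p * ray_p) = n^T (d_q * ray_q)
    return depth_q * (normal @ ray_q) / (normal @ ray_p)

# Hypothetical example values, chosen only to exercise the formula.
K = np.array([[100.0, 0.0, 50.0],
              [0.0, 100.0, 50.0],
              [0.0, 0.0, 1.0]])
normal = np.array([0.6, 0.0, 0.8])
depth_p = propagate_depth(4.0 / 0.86, normal, K, p=(50, 50), q=(60, 50))
```

The denominator approaches zero when the viewing ray through `p` is nearly parallel to the plane, so a practical version would need to guard against that case.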

conda create -n gomvs python=3.8 # use python 3.8

conda activate gomvs

pip install -r requirements.txt

# Our code is tested on NVIDIA A100 GPUs (with python=3.8, pytorch=1.12, cuda=11.6)

We first train the model on the DTU dataset and then fine-tune it on the BlendedMVS dataset.

You can download the DTU training data and Depths_raw (preprocessed by MVSNet), and unzip them as follows:

dtu_training

├── Cameras

├── Depths

├── Depths_raw

└── Rectified
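Before training, it can help to confirm that the unzipped folder matches the layout above. The following is a small sketch using only the standard library; the helper name `missing_subdirs` is hypothetical and not part of this repository, and the folder names are taken from the tree above.

```python
from pathlib import Path

# Sub-folders expected inside dtu_training, as listed in the tree above.
DTU_TRAINING_DIRS = ("Cameras", "Depths", "Depths_raw", "Rectified")

def missing_subdirs(root, expected=DTU_TRAINING_DIRS):
    """Return the expected sub-folder names that are absent under root."""
    root = Path(root)
    return [name for name in expected if not (root / name).is_dir()]
```

Calling `missing_subdirs("dtu_training")` returns an empty list when all four folders are present. Passing a different `expected` tuple lets the same check cover the other dataset folders.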

For the DTU testing set, you can also download the preprocessed DTU testing data and unzip it as the test data folder, which should look like this:

dtu_testing

├── Cameras

├── scan1

├── scan2

├── ...

Download the low-res set from BlendedMVS and unzip it as follows:

BlendedMVS

├── 5a0271884e62597cdee0d0eb

│ ├── blended_images

│ ├── cams

│ └── rendered_depth_maps

├── 59338e76772c3e6384afbb15

├── 59f363a8b45be22330016cad

├── ...

├── all_list.txt

├── training_list.txt

└── validation_list.txt

You can download the Tanks and Temples dataset here and unzip it. For the intermediate set, unzip "short_range_caemeras_for_mvsnet.zip" and use its contents to replace the camera parameter files inside the "cam" folder.

tanksandtemples

├── advanced

│ ├── Auditorium

│ ├── ...

└── intermediate

  ├── Family

  ├── ...

We provide normal maps generated by the Omnidata monocular normal estimation network. The high-resolution normals are generated with the scripts provided by MonoSDF, and you can download our preprocessed normal maps.

Download the DTU Training and DTU Test normal maps and unzip them as follows:

dtu_training_normal

├── scan1_train

│ ├── 000000_normal.npy

│ ├── 000000_normal.png

│ ├── ...

├── scan2_train

└── ...

dtu_test_normal

├── scan1

│ ├── 000000_normal.npy

│ ├── 000000_normal.png

│ ├── ...

├── scan4

├── scan9

└── ...
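To sanity-check a downloaded normal map, one can load a `.npy` file and inspect its value range and vector lengths. This is a sketch under assumptions: the README does not state the array layout or value range, so the code accepts either a `(3, H, W)` or an `(H, W, 3)` array and only reports statistics. The helper names `load_normal_map` and `normal_stats` are hypothetical.

```python
import numpy as np

def load_normal_map(path):
    """Load a normal map and return it as an (H, W, 3) float32 array.

    Assumption: the file holds a 3-channel array stored either
    channel-first (3, H, W) or channel-last (H, W, 3).
    """
    normals = np.load(path)
    if normals.ndim != 3:
        raise ValueError(f"expected a 3-D array, got shape {normals.shape}")
    if normals.shape[0] == 3 and normals.shape[-1] != 3:
        normals = np.moveaxis(normals, 0, -1)  # (3, H, W) -> (H, W, 3)
    if normals.shape[-1] != 3:
        raise ValueError(f"no 3-channel axis found in shape {normals.shape}")
    return normals.astype(np.float32)

def normal_stats(normals):
    """Return (min value, max value, mean per-pixel vector length)."""
    lengths = np.linalg.norm(normals, axis=-1)
    return float(normals.min()), float(normals.max()), float(lengths.mean())
```

For example, `normal_stats(load_normal_map("dtu_test_normal/scan1/000000_normal.npy"))` shows whether the values look like unit vectors or like a rescaled encoding before they are passed to the scripts.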

We also provide the normal maps for the BlendedMVS low-res set. You can download them here.

BlendedMVS_normal

├── 5a0271884e62597cdee0d0eb

│ ├── 000000_normal.npy

│ ├── 000000_normal.png

│ ├── ...

├── 59338e76772c3e6384afbb15

├── 59f363a8b45be22330016cad

└── ...

The structure of the TNT normal data is as follows.

TnT_normal

├── Auditorium

├── ...

├── Family

├── ...

│ ├── 000000_normal.npy

│ ├── 000000_normal.png

│ ├── ...

We provide scripts in the 'scripts' folder for training and testing on different datasets. Before running, please configure the paths in the script files.

Specify MVS_TRAINING and NORMAL_PATH in scripts/train/*.sh.

Then, you can run the script to train the model on the DTU dataset:

bash scripts/train/train_dtu.sh

To fine-tune the model on BlendedMVS, set CKPT to the path of the model pre-trained on the DTU dataset. Then run:

bash scripts/train/train_bld_fintune.sh

To start testing on DTU, set the configuration in scripts/test/test_dtu.sh and run:

bash scripts/test/test_dtu.sh

For testing on the TNT dataset, use 'test_tnt.sh' to generate depth maps and 'dynamic_fusion.sh' to generate the final point clouds.

bash scripts/test/test_tnt.sh

bash scripts/test/dynamic_fusion.sh

Setting CKPT_FILE to the fine-tuned model may yield better results.

Results on DTU (in mm, lower is better):

| Methods | Acc. | Comp. | Overall |
|---|---|---|---|
| GoMVS (pre-trained model) | 0.347 | 0.227 | 0.287 |

Results on the Tanks and Temples advanced set (F-score, higher is better):

| Methods | Mean | Aud. | Bal. | Cou. | Mus. | Pal. | Temp. |
|---|---|---|---|---|---|---|---|
| GoMVS (pre-trained model) | 43.07 | 35.52 | 47.15 | 42.52 | 52.08 | 36.34 | 44.82 |

Results on the Tanks and Temples intermediate set (F-score, higher is better):

| Methods | Mean | Fam. | Fra. | Hor. | Lig. | M60 | Pan. | Pla. | Tra. |
|---|---|---|---|---|---|---|---|---|---|
| GoMVS (pre-trained model) | 66.44 | 82.68 | 69.23 | 69.19 | 63.56 | 65.13 | 62.10 | 58.81 | 60.80 |
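The aggregate columns in the tables above can be checked by hand. The short sketch below assumes the usual conventions, which the reported numbers are consistent with: Overall is the average of Acc. and Comp., and Mean is the average of the per-scene scores. The variable names are illustrative only.

```python
def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)

# Per-metric and per-scene values copied from the tables above.
dtu_overall = mean([0.347, 0.227])
tnt_advanced = mean([35.52, 47.15, 42.52, 52.08, 36.34, 44.82])
tnt_intermediate = mean([82.68, 69.23, 69.19, 63.56, 65.13, 62.10, 58.81, 60.80])

print(f"{dtu_overall:.3f} {tnt_advanced:.2f} {tnt_intermediate:.2f}")
# prints: 0.287 43.07 66.44
```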

Please cite our paper if you use the code in this repository:

@inproceedings{wu2024gomvs,
  title={GoMVS: Geometrically Consistent Cost Aggregation for Multi-View Stereo},
  author={Wu, Jiang and Li, Rui and Xu, Haofei and Zhao, Wenxun and Zhu, Yu and Sun, Jinqiu and Zhang, Yanning},
  booktitle={CVPR},
  year={2024}
}

We borrow code from TransMVSNet, GeoMVSNet, and IronDepth. We are grateful for these works' contributions!