EventCLIP: Adapting CLIP for Event-based Object Recognition

Ziyi Wu, Xudong Liu, Igor Gilitschenski

arXiv'23 | GitHub | arXiv

This is the official PyTorch implementation of the paper EventCLIP: Adapting CLIP for Event-based Object Recognition. The code contains:

- Zero-shot EventCLIP inference on N-Caltech, N-Cars, N-ImageNet datasets

- Few-shot adaptation of EventCLIP on the three datasets, with SOTA results in the low-data regime

- Data-efficient fine-tuning of EventCLIP on N-Caltech & N-ImageNet, achieving superior accuracy over fully-trained baselines

- Learning from unlabeled data on N-Caltech & N-ImageNet, including both fully unsupervised and semi-supervised settings
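To make the zero-shot setting in the first bullet more concrete, here is a minimal, dependency-free sketch of the general idea: events are accumulated into a frame, and an embedding of that frame is compared against text embeddings of the class names by cosine similarity. This is not the repository's code. The `(x, y, t, p)` event layout, the two-channel count histogram, the function names, and the temperature of 100 are all assumptions made for illustration, and the toy vectors stand in for the outputs of CLIP's image and text encoders.

```python
import math


def events_to_frame(events, height, width):
    """Accumulate (x, y, t, p) events into a 2-channel count histogram.

    Channel 0 counts positive-polarity events, channel 1 negative ones.
    This representation is an assumption made for illustration only.
    """
    frame = [[[0] * width for _ in range(height)] for _ in range(2)]
    for x, y, _t, p in events:
        channel = 0 if p > 0 else 1
        frame[channel][y][x] += 1
    return frame


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def zero_shot_classify(image_emb, text_embs, temperature=100.0):
    """Return (predicted class index, class probabilities).

    image_emb: embedding of one event frame (hypothetical encoder output).
    text_embs: one embedding per class prompt (hypothetical encoder output).
    """
    logits = [temperature * cosine(image_emb, t) for t in text_embs]
    max_logit = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - max_logit) for l in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    return probs.index(max(probs)), probs


# Toy usage: three events on a 2x2 sensor, two candidate classes.
toy_events = [(0, 0, 0.0, 1), (1, 0, 0.1, -1), (0, 0, 0.2, 1)]
toy_frame = events_to_frame(toy_events, height=2, width=2)
pred, probs = zero_shot_classify([1.0, 0.0], [[0.9, 0.1], [0.0, 1.0]])
```

No training is involved at any point here, which is what makes the setting zero-shot: the class set is defined purely by the text prompts.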

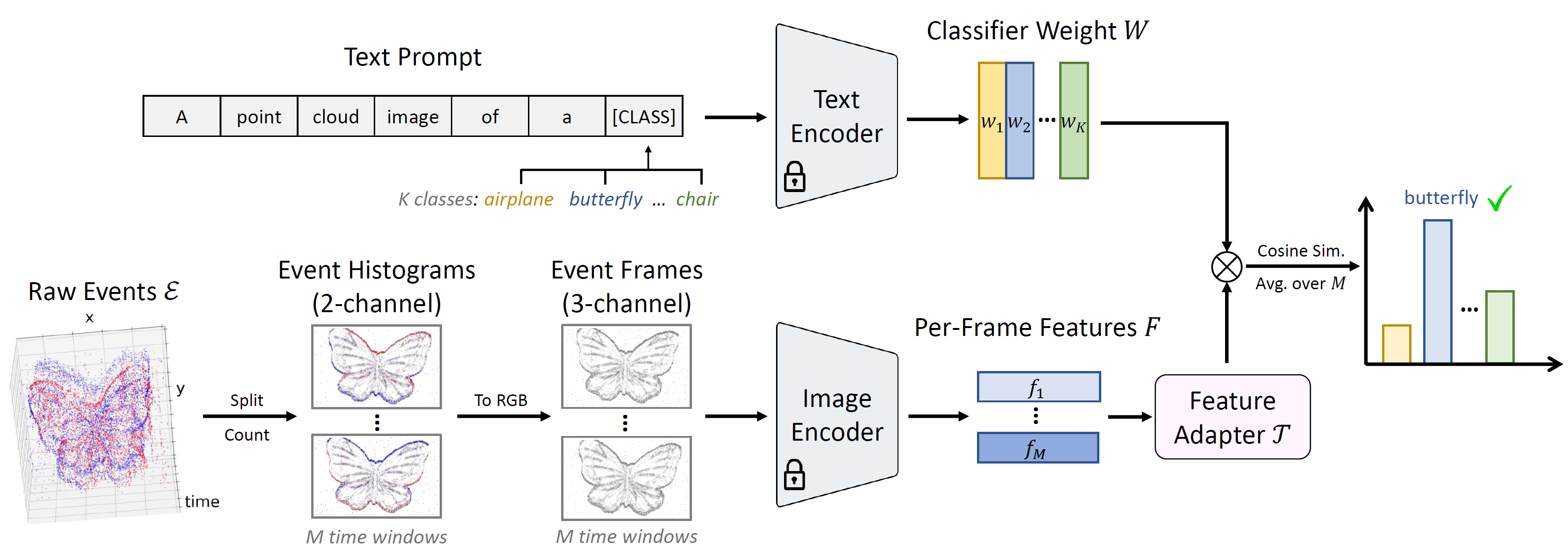

Event cameras are bio-inspired, low-latency, energy-efficient sensors that have gained significant interest recently. However, due to the lack of large-scale datasets, the event-based vision community cannot enjoy the recent success of foundation models in RGB vision. This paper thus seeks to adapt one of the most impactful vision-language models (VLMs), CLIP, to recognize event data. We study common practices in data-efficient model adaptation and propose a general framework named EventCLIP. The overall pipeline is shown in the figure above.

- 2023.9.14: Release code for learning with unlabeled data

- 2023.7.17: Release fine-tuning code

- 2023.5.17: Initial code release!

Please refer to install.md for step-by-step guidance on how to install the packages.

This codebase is tailored to Slurm GPU clusters with a preemption mechanism. For the configs, we mainly use A40 GPUs with 40GB memory (though many experiments don't require that much memory). Please modify the code accordingly if you are using other hardware settings:

- Please go through train.py and change the fields marked by TODO:

- Please read the config file for the model you want to train. We use DDP with multiple GPUs to accelerate training. You can use fewer GPUs to achieve a better memory-speed trade-off
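On a cluster with preemption, a job can be killed and restarted at any time, so training scripts typically resume from the most recent checkpoint. The sketch below shows one common way to locate that checkpoint. It is only an illustration of the pattern, not this repository's actual logic: the `epoch_<N>.pth` naming scheme and the `find_latest_checkpoint` helper are hypothetical.

```python
import re
from pathlib import Path


def find_latest_checkpoint(ckpt_dir):
    """Return the checkpoint with the highest epoch number, or None.

    Assumes a hypothetical naming scheme `epoch_<N>.pth`; adapt the
    pattern to whatever your training script actually writes.
    """
    pattern = re.compile(r"^epoch_(\d+)\.pth$")
    best = None  # (epoch, path) of the newest checkpoint seen so far
    for path in Path(ckpt_dir).glob("epoch_*.pth"):
        match = pattern.match(path.name)
        if match is None:
            continue  # skip files such as epoch_best.pth
        epoch = int(match.group(1))
        if best is None or epoch > best[0]:
            best = (epoch, path)
    return best[1] if best is not None else None
```

Comparing parsed epoch numbers rather than sorting file names avoids the pitfall where `epoch_10.pth` sorts before `epoch_2.pth` lexicographically.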

Please refer to data.md for dataset downloading and pre-processing.

Please see benchmark.md for detailed instructions on how to reproduce our results in the paper.

See the troubleshooting section of nerv for potential issues.

Please open an issue if you encounter any errors running the code.

Please cite our paper if you find it useful in your research:

@article{wu2023eventclip,
  title={{EventCLIP}: Adapting CLIP for Event-based Object Recognition},
  author={Wu, Ziyi and Liu, Xudong and Gilitschenski, Igor},
  journal={arXiv preprint arXiv:2306.06354},
  year={2023}
}

We thank the authors of CLIP, EST, n_imagenet, PointCLIP, and LoRA for open-sourcing their wonderful work.

EventCLIP is released under the MIT License. See the LICENSE file for more details.

If you have any questions about the code, please contact Ziyi Wu (dazitu616@gmail.com).