Recently, the "pre-train, fine-tune" workflow has been shown to be less effective and efficient when dealing with diverse downstream tasks in the graph domain. Inspired by prompt learning in natural language processing (NLP), the "pre-train, prompt" workflow has emerged as a promising solution.
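To make the contrast with fine-tuning concrete, here is a minimal sketch of the "pre-train, prompt" idea under toy assumptions. The "pre-trained" encoder is a hypothetical one-layer mean-aggregation model with fixed weights, and the prompt is a single learnable vector added to every node's features (similar in spirit to feature-level prompts such as the one in Universal Prompt Tuning for Graph Neural Networks). Only the prompt is updated; the encoder weights stay frozen. The names `add_prompt`, `frozen_encoder` and `tune_prompt` are illustrative and do not come from any paper or library listed here.

```python
from typing import Dict, List

Vector = List[float]
Graph = Dict[int, List[int]]


def add_prompt(features: Dict[int, Vector], prompt: Vector) -> Dict[int, Vector]:
    """Feature-level prompt: add one shared learnable vector to every node's features."""
    return {v: [a + b for a, b in zip(x, prompt)] for v, x in features.items()}


def frozen_encoder(features: Dict[int, Vector], adj: Graph, weights: Vector) -> Dict[int, float]:
    """Hypothetical stand-in for a pre-trained GNN: average the node with its
    neighbours, then apply a fixed linear read-out. `weights` is never updated."""
    scores = {}
    for v, x in features.items():
        group = [v] + adj.get(v, [])
        mean = [sum(features[u][i] for u in group) / len(group) for i in range(len(x))]
        scores[v] = sum(w * m for w, m in zip(weights, mean))
    return scores


def tune_prompt(features: Dict[int, Vector], adj: Graph, weights: Vector,
                targets: Dict[int, float], lr: float = 0.05, steps: int = 100) -> Vector:
    """Fit only the prompt vector by gradient descent on a squared loss over labelled nodes."""
    prompt = [0.0] * len(weights)
    for _ in range(steps):
        scores = frozen_encoder(add_prompt(features, prompt), adj, weights)
        # The encoder averages (x_u + p) and applies a linear map, so
        # d score_v / d p = weights and dL/dp = sum_v 2 * (score_v - y_v) * weights.
        residual = sum(2.0 * (scores[v] - y) for v, y in targets.items())
        prompt = [p - lr * residual * w for p, w in zip(prompt, weights)]
    return prompt


# Usage: a 3-node toy graph with one labelled node.
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
adj = {0: [1], 1: [0, 2], 2: [1]}
weights = [1.0, 2.0]  # "pre-trained" and frozen
prompt = tune_prompt(features, adj, weights, targets={0: 3.0})
print(frozen_encoder(add_prompt(features, prompt), adj, weights)[0])  # close to 3.0
```

Only `len(weights)` prompt parameters are trained here, which is what makes prompting cheap relative to updating the whole encoder; the works listed below differ mainly in where the prompt is inserted (features, prompt tokens, subgraphs) and how the downstream task is reformulated.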

This repo aims to provide a curated list of research papers that explore prompt learning on graphs. It is based on our survey paper: Graph Prompt Learning: A Comprehensive Survey and Beyond. We will try to keep this list frequently updated. If you find any error or missing paper, please don't hesitate to open an issue or pull request. 🌹

- Awesome-Graph-Prompt

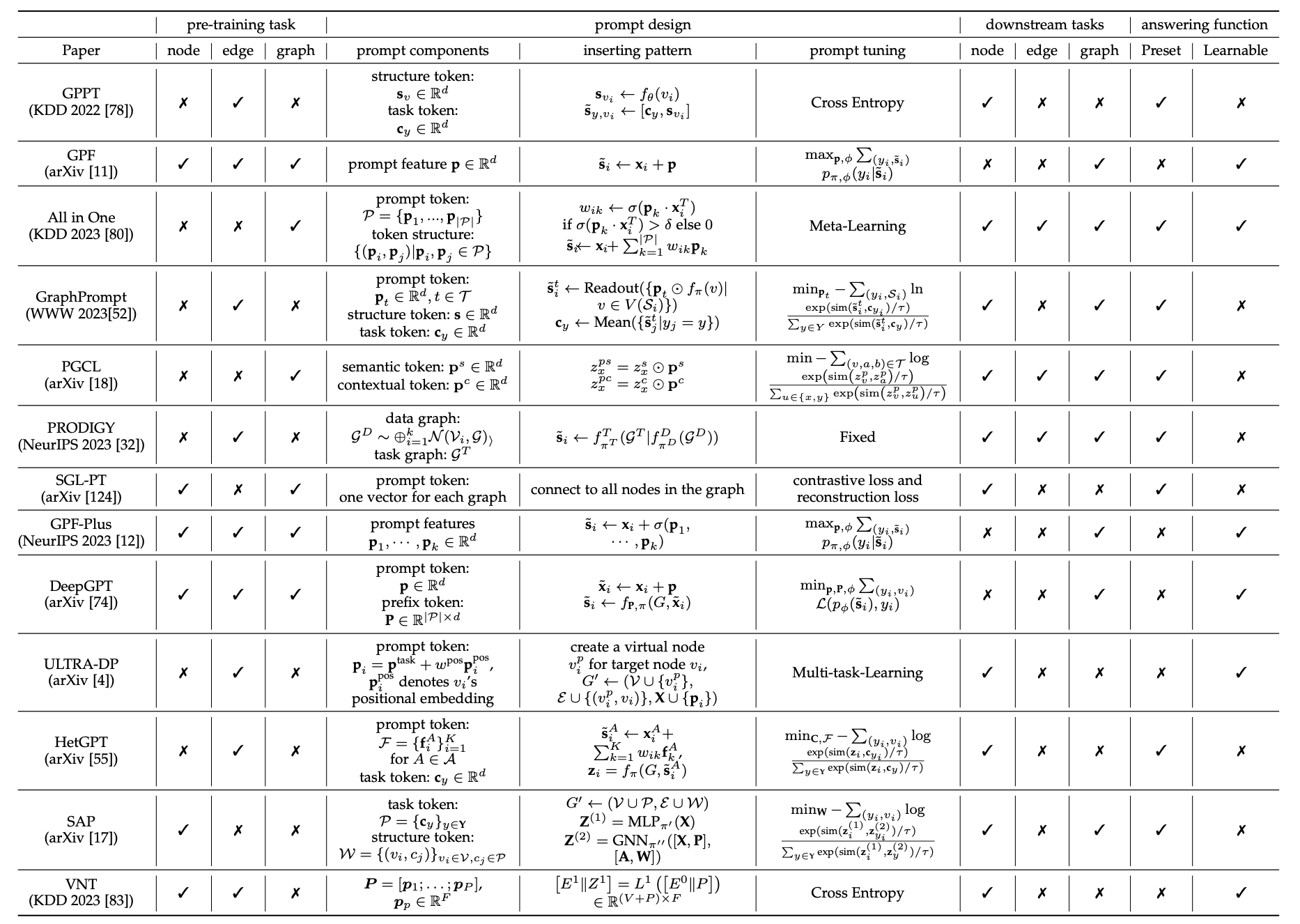

Summary of existing representative works on graph prompt.

- Does Graph Prompt Work? A Data Operation Perspective with Theoretical Analysis. In arXiv, [Paper].

- GPPT: Graph Pre-training and Prompt Tuning to Generalize Graph Neural Networks. In KDD'2022, [Paper] [Code].

- SGL-PT: A Strong Graph Learner with Graph Prompt Tuning. In arXiv, [Paper].

- GraphPrompt: Unifying Pre-Training and Downstream Tasks for Graph Neural Networks. In WWW'2023, [Paper] [Code].

- All in One: Multi-Task Prompting for Graph Neural Networks. In KDD'2023 Best Paper Award 🌟, [Paper] [Code].

- Deep Graph Reprogramming. In CVPR'2023 Highlight 🌟, [Paper].

- Virtual Node Tuning for Few-shot Node Classification. In KDD'2023, [Paper].

- PRODIGY: Enabling In-context Learning Over Graphs. In NeurIPS'2023 Spotlight 🌟, [Paper] [Code].

- Universal Prompt Tuning for Graph Neural Networks. In NeurIPS'2023, [Paper] [Code].

- Deep Prompt Tuning for Graph Transformers. In arXiv, [Paper].

- Prompt Tuning for Multi-View Graph Contrastive Learning. In arXiv, [Paper].

- ULTRA-DP: Unifying Graph Pre-training with Multi-task Graph Dual Prompt. In arXiv, [Paper].

- HetGPT: Harnessing the Power of Prompt Tuning in Pre-Trained Heterogeneous Graph Neural Networks. In WWW'2024, [Paper].

- Enhancing Graph Neural Networks with Structure-Based Prompt. In arXiv, [Paper].

- Generalized Graph Prompt: Toward a Unification of Pre-Training and Downstream Tasks on Graphs. In TKDE'2024, [Paper] [Code].

- HGPROMPT: Bridging Homogeneous and Heterogeneous Graphs for Few-shot Prompt Learning. In AAAI'2024, [Paper] [Code].

- MultiGPrompt for Multi-Task Pre-Training and Prompting on Graphs. In WWW'2024, [Paper] [Code].

- Subgraph-level Universal Prompt Tuning. In arXiv, [Paper] [Code].

- Inductive Graph Alignment Prompt: Bridging the Gap between Graph Pre-training and Inductive Fine-tuning From Spectral Perspective. In WWW'2024, [Paper].

- A Unified Graph Selective Prompt Learning for Graph Neural Networks. In arXiv, [Paper].

- Augmenting Low-Resource Text Classification with Graph-Grounded Pre-training and Prompting. In SIGIR'2023, [Paper] [Code].

- Prompt Tuning on Graph-augmented Low-resource Text Classification. In arXiv, [Paper] [Code].

- Prompt-Based Zero- and Few-Shot Node Classification: A Multimodal Approach. In arXiv, [Paper].

- Prompt-based Node Feature Extractor for Few-shot Learning on Text-Attributed Graphs. In arXiv, [Paper].

- Large Language Models as Topological Structure Enhancers for Text-Attributed Graphs. In arXiv, [Paper].

- ZeroG: Investigating Cross-dataset Zero-shot Transferability in Graphs. In KDD'2024, [Paper] [Code].

- Pre-Training and Prompting for Few-Shot Node Classification on Text-Attributed Graphs. In KDD'2024, [Paper] [Code].

For this research line, please refer to Awesome LLMs with Graph Tasks [Survey Paper | Github Repo].

We highly recommend this work, as it provides a comprehensive survey of research on integrating LLMs with graphs 👍

- GraphAdapter: Tuning Vision-Language Models With Dual Knowledge Graph. In NeurIPS'2023, [Paper] [Code]. (Graph+Text+Image)

- SynerGPT: In-Context Learning for Personalized Drug Synergy Prediction and Drug Design. In arXiv, [Paper]. (Graph+Text)

- Which Modality should I use - Text, Motif, or Image? Understanding Graphs with Large Language Models. In arXiv, [Paper]. (Graph+Text+Image)

- GraphGLOW: Universal and Generalizable Structure Learning for Graph Neural Networks. In KDD'2023, [Paper] [Code].

- GraphControl: Adding Conditional Control to Universal Graph Pre-trained Models for Graph Domain Transfer Learning. In WWW'2024, [Paper] [Code] [Chinese Blog].

- All in One and One for All: A Simple yet Effective Method towards Cross-domain Graph Pretraining. In KDD'2024, [Paper] [Code].

- Text-Free Multi-domain Graph Pre-training: Toward Graph Foundation Models. In arXiv, [Paper].

- Prompt Learning on Temporal Interaction Graphs. In arXiv, [Paper].

- Prompt-Enhanced Spatio-Temporal Graph Transfer Learning. In arXiv, [Paper].

- DyGPrompt: Learning Feature and Time Prompts on Dynamic Graphs. In arXiv, [Paper].

- Prompt-and-Align: Prompt-Based Social Alignment for Few-Shot Fake News Detection. In CIKM'2023, [Paper] [Code]. (Fake News Detection)

- Voucher Abuse Detection with Prompt-based Fine-tuning on Graph Neural Networks. In CIKM'2023, [Paper]. (Fraud Detection)

- ProCom: A Few-shot Targeted Community Detection Algorithm. In KDD'2024, [Paper] [Code]. (Community Detection)

- Contrastive Graph Prompt-tuning for Cross-domain Recommendation. In TOIS'2023, [Paper]. (Cross-domain Recommendation)

- An Empirical Study Towards Prompt-Tuning for Graph Contrastive Pre-Training in Recommendations. In NeurIPS'2023, [Paper] [Code]. (General Recommendation)

- Motif-Based Prompt Learning for Universal Cross-Domain Recommendation. In WSDM'2024, [Paper]. (Cross-domain Recommendation)

- GraphPro: Graph Pre-training and Prompt Learning for Recommendation. In WWW'2024, [Paper] [Code]. (General Recommendation)

- GPT4Rec: Graph Prompt Tuning for Streaming Recommendation. In SIGIR'2024, [Paper]. (General Recommendation)

- Structure Pretraining and Prompt Tuning for Knowledge Graph Transfer. In WWW'2023, [Paper] [Code].

- Graph Neural Prompting with Large Language Models. In AAAI'2024, [Paper].

- Knowledge Graph Prompting for Multi-Document Question Answering. In arXiv, [Paper] [Code].

- Multi-domain Knowledge Graph Collaborative Pre-training and Prompt Tuning for Diverse Downstream Tasks. In arXiv, [Paper] [Code].

- Can Large Language Models Empower Molecular Property Prediction? In arXiv, [Paper] [Code].

- GIMLET: A Unified Graph-Text Model for Instruction-Based Molecule Zero-Shot Learning. In NeurIPS'2023, [Paper] [Code].

- MolCA: Molecular Graph-Language Modeling with Cross-Modal Projector and Uni-Modal Adapter. In EMNLP'2023, [Paper] [Code].

- ReLM: Leveraging Language Models for Enhanced Chemical Reaction Prediction. In EMNLP'2023, [Paper] [Code].

- MolCPT: Molecule Continuous Prompt Tuning to Generalize Molecular Representation Learning. In WSDM'2024, [Paper].

- Protein Multimer Structure Prediction via PPI-guided Prompt Learning. In ICLR'2024, [Paper].

- DDIPrompt: Drug-Drug Interaction Event Prediction based on Graph Prompt Learning. In CIKM'2024, [Paper].

- A Data-centric Framework to Endow Graph Neural Networks with Out-Of-Distribution Detection Ability. In KDD'2023, [Paper] [Code]. (OOD Detection)

- MMGPL: Multimodal Medical Data Analysis with Graph Prompt Learning. In arXiv, [Paper].

- Instruction-based Hypergraph Pretraining. In SIGIR'2024, [Paper]. (Hypergraph Prompt)

- Cross-Context Backdoor Attacks against Graph Prompt Learning. In KDD'2024, [Paper] [Code]. (Cross-Context Backdoor Attacks)

- Urban Region Pre-training and Prompting: A Graph-based Approach. In arXiv, [Paper]. (Urban Region Representation)

- ProG: A Unified Library for Graph Prompting [Website] [Code]

ProG (Prompt Graph) is a library built upon PyTorch for easily conducting single- or multi-task prompting on pre-trained Graph Neural Networks (GNNs).

- ProG: A Graph Prompt Learning Benchmark [Paper]

The ProG benchmark integrates SIX pre-training methods and FIVE state-of-the-art graph prompt techniques, evaluated across FIFTEEN diverse datasets to assess performance, flexibility, and efficiency.

Datasets that are commonly used in GNN prompting papers.

Citation Networks

| Dataset | #Node | #Edge | #Feature | #Class |
|---|---|---|---|---|
| Cora | 2708 | 5429 | 1433 | 7 |
| CoraFull | 19793 | 63421 | 8710 | 70 |
| Citeseer | 3327 | 4732 | 3703 | 6 |
| DBLP | 17716 | 105734 | 1639 | 4 |
| Pubmed | 19717 | 44338 | 500 | 3 |
| Coauthor-CS | 18333 | 81894 | 6805 | 15 |
| Coauthor-Physics | 34493 | 247962 | 8415 | 5 |
| ogbn-arxiv | 169343 | 1166243 | 128 | 40 |

Purchase Networks

| Dataset | #Node | #Edge | #Feature | #Class |
|---|---|---|---|---|
| Amazon-Computers | 13752 | 245861 | 767 | 10 |
| Amazon-Photo | 7650 | 119081 | 745 | 8 |
| ogbn-products | 2449029 | 61859140 | 100 | 47 |

Social Networks

| Dataset | #Node | #Edge | #Feature | #Class |
|---|---|---|---|---|
| Reddit | 232965 | 11606919 | 602 | 41 |
| Flickr | 89250 | 899756 | 500 | 7 |

Molecular Graphs

| Dataset | #Graph | #Node (Avg.) | #Edge (Avg.) | #Feature | #Class |
|---|---|---|---|---|---|
| COX2 | 467 | 41.22 | 43.45 | 3 | 2 |
| ENZYMES | 600 | 32.63 | 62.14 | 18 | 6 |
| MUTAG | 188 | 17.93 | 19.79 | 7 | 2 |
| MUV | 93087 | 24.23 | 26.28 | - | 17 |
| HIV | 41127 | 25.53 | 27.48 | - | 2 |
| SIDER | 1427 | 33.64 | 35.36 | - | 27 |

- Official Presentation of All in One [Link]

- A Chinese blog that provides a comprehensive introduction to all graph prompting works [Zhihu]

👍 Contributions to this repository are welcome!

If you have come across relevant resources, feel free to open an issue or submit a pull request.

If you find this repo helpful, please feel free to cite these works:

@article{sun2023graph,

title = {Graph Prompt Learning: A Comprehensive Survey and Beyond},

author = {Sun, Xiangguo and Zhang, Jiawen and Wu, Xixi and Cheng, Hong and Xiong, Yun and Li, Jia},

year = {2023},

journal = {arXiv:2311.16534},

eprint = {2311.16534},

archiveprefix = {arxiv}

}

@inproceedings{li2024graph,

title={Graph Intelligence with Large Language Models and Prompt Learning},

author={Li, Jia and Sun, Xiangguo and Li, Yuhan and Li, Zhixun and Cheng, Hong and Yu, Jeffrey Xu},

booktitle={Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining},

pages={6545--6554},

year={2024}

}

@inproceedings{sun2023all,

title={All in One: Multi-Task Prompting for Graph Neural Networks},

author={Sun, Xiangguo and Cheng, Hong and Li, Jia and Liu, Bo and Guan, Jihong},

booktitle={Proceedings of the 26th ACM SIGKDD international conference on knowledge discovery \& data mining (KDD'23)},

year={2023},

pages = {2120--2131},

location = {Long Beach, CA, USA},

isbn = {9798400701030},

url = {https://doi.org/10.1145/3580305.3599256},

doi = {10.1145/3580305.3599256}

}

@article{wang2024does,

title={Does Graph Prompt Work? A Data Operation Perspective with Theoretical Analysis},

author={Qunzhong Wang and Xiangguo Sun and Hong Cheng},

year={2024},

journal = {arXiv preprint arXiv:2410.01635},

url={https://arxiv.org/abs/2410.01635}

}

Other Representative Works:

🔥 All in One: A Representative GNN Prompting Framework

@inproceedings{sun2023all,

title={All in One: Multi-Task Prompting for Graph Neural Networks},

author={Sun, Xiangguo and Cheng, Hong and Li, Jia and Liu, Bo and Guan, Jihong},

booktitle={Proceedings of the 26th ACM SIGKDD international conference on knowledge discovery \& data mining (KDD'23)},

year={2023},

pages = {2120--2131},

location = {Long Beach, CA, USA},

isbn = {9798400701030},

url = {https://doi.org/10.1145/3580305.3599256},

doi = {10.1145/3580305.3599256}

}

🔥 All in One and One for All: A Cross-domain Graph Pre-training Framework

@article{zhao2024all,

title={All in One and One for All: A Simple yet Effective Method towards Cross-domain Graph Pretraining},

author={Haihong Zhao and Aochuan Chen and Xiangguo Sun and Hong Cheng and Jia Li},

year={2024},

eprint={2402.09834},

archivePrefix={arXiv}

}

🔥 TIGPrompt: A Temporal Interaction Graph Prompting Framework

@article{chen2024prompt,

title={Prompt Learning on Temporal Interaction Graphs},

author={Xi Chen and Siwei Zhang and Yun Xiong and Xixi Wu and Jiawei Zhang and Xiangguo Sun and Yao Zhang and Yinglong Zhao and Yulin Kang},

year={2024},

eprint={2402.06326},

archivePrefix={arXiv},

journal = {arXiv:2402.06326}

}

🔥 Graph Prompting Works on Biology Domain

@inproceedings{gao2024protein,

title={Protein Multimer Structure Prediction via {PPI}-guided Prompt Learning},

author={Ziqi Gao and Xiangguo Sun and Zijing Liu and Yu Li and Hong Cheng and Jia Li},

booktitle={The Twelfth International Conference on Learning Representations (ICLR)},

year={2024},

url={https://openreview.net/forum?id=OHpvivXrQr}

}

@article{wang2024ddiprompt,

title={DDIPrompt: Drug-Drug Interaction Event Prediction based on Graph Prompt Learning},

author={Yingying Wang and Yun Xiong and Xixi Wu and Xiangguo Sun and Jiawei Zhang},

year={2024},

eprint={2402.11472},

archivePrefix={arXiv},

journal = {arXiv:2402.11472}

}

🔥 Graph Prompting Works on Urban Computing

@article{jin2024urban,

title={Urban Region Pre-training and Prompting: A Graph-based Approach},

author={Jin, Jiahui and Song, Yifan and Kan, Dong and Zhu, Haojia and Sun, Xiangguo and Li, Zhicheng and Sun, Xigang and Zhang, Jinghui},

journal={arXiv preprint arXiv:2408.05920},

year={2024}

}