Peng Hu, Xi Peng, Hongyuan Zhu, Liangli Zhen, Jie Lin, Learning Cross-modal Retrieval with Noisy Labels, IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Jun. 19-25, 2021. (PyTorch Code)

Recently, cross-modal retrieval has been emerging with the help of deep multimodal learning. However, even for unimodal data, collecting large-scale, well-annotated data is expensive and time-consuming, not to mention the additional challenges posed by multiple modalities. Although crowd-sourcing annotation, e.g., Amazon's Mechanical Turk, can be used to reduce the labeling cost, it inevitably introduces label noise because the annotators are non-experts. To tackle this challenge, this paper presents a general Multimodal Robust Learning framework (MRL) for learning with multimodal noisy labels, which mitigates noisy samples and correlates distinct modalities simultaneously. Specifically, we propose a Robust Clustering loss (RC) that makes the deep networks focus on clean samples instead of noisy ones. In addition, a simple yet effective multimodal loss function, called Multimodal Contrastive loss (MC), is proposed to maximize the mutual information between different modalities, thus alleviating the interference of noisy samples and the cross-modal discrepancy. Extensive experiments on four widely used multimodal datasets, in comparison with 14 state-of-the-art methods, demonstrate the effectiveness of the proposed approach.

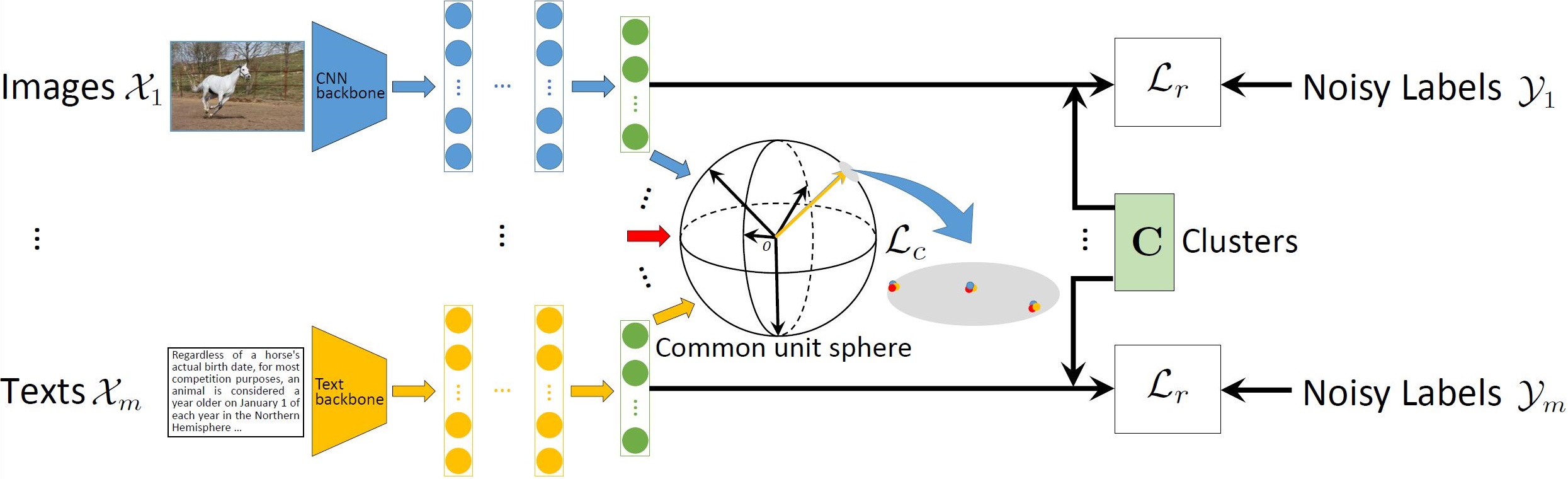

Figure 1: The pipeline of the proposed method for 𝓂 modalities, e.g., images 𝒳₁ with noisy labels 𝒴₁ and texts 𝒳𝓂 with noisy labels 𝒴𝓂. The modality-specific networks learn common representations for the 𝓂 modalities. The Robust Clustering loss ℒ𝓇 is adopted to mitigate label noise while learning discriminative representations and narrowing the heterogeneous gap. The outputs of the networks interact with each other through instance- and pair-level contrast, i.e., multimodal contrastive learning (ℒ𝒸), further mitigating noisy labels and the cross-modal discrepancy. ℒ𝒸 tries to maximally scatter inter-modal samples while compacting intra-modal points over the common unit sphere/space.
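The contrastive idea in the caption can be illustrated with a generic cross-modal InfoNCE loss, a standard contrastive objective whose minimization raises a lower bound on the mutual information between paired embeddings. This is a minimal sketch under stated assumptions, not the paper's ℒ𝒸: it assumes two modalities whose matched pairs share a batch index, treats every other item in the batch as a negative, compares embeddings by cosine similarity on the unit sphere, and uses a hypothetical temperature of 0.1. It is written in plain Python to stay dependency-free; the repository itself uses PyTorch.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def l2_normalize(v: Vector) -> List[float]:
    """Project a vector onto the unit sphere."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def log_softmax_at(logits: List[float], index: int) -> float:
    """Numerically stable log-softmax of logits[index]."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return logits[index] - log_z


def cross_modal_info_nce(images: List[Vector], texts: List[Vector],
                         temperature: float = 0.1) -> float:
    """Symmetric InfoNCE where image i and text i are the only positive pair.

    temperature=0.1 is a hypothetical default, not a value taken from MRL.
    """
    if len(images) != len(texts) or not images:
        raise ValueError("need the same, non-zero number of images and texts")
    img = [l2_normalize(v) for v in images]
    txt = [l2_normalize(v) for v in texts]
    n = len(img)
    # sim[i][j]: cosine similarity of image i and text j, divided by the temperature.
    sim = [[sum(a * b for a, b in zip(img[i], txt[j])) / temperature
            for j in range(n)] for i in range(n)]
    loss = 0.0
    for i in range(n):
        # Image -> text: text i must win against every other text in the batch.
        loss -= log_softmax_at(sim[i], i)
        # Text -> image: image i must win against every other image (column i).
        loss -= log_softmax_at([sim[j][i] for j in range(n)], i)
    return loss / (2 * n)
```

Pulling matched pairs together and pushing mismatched ones apart lowers this loss: with images `[[1, 0], [0, 1]]`, the aligned texts `[[0.9, 0.1], [0.1, 0.9]]` give a smaller loss than the swapped texts `[[0.1, 0.9], [0.9, 0.1]]`.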

To train a model with a noise rate of 0.6 on Wikipedia, run `main_noisy.py`:

```bash
python main_noisy.py --max_epochs 30 --log_name noisylabel_mce --loss MCE --lr 0.0001 --train_batch_size 100 --beta 0.7 --noisy_ratio 0.6 --data_name wiki
```

The output should look like this:

```text
Epoch: 24 / 30
[================= 22/22 ==================>..] Step: 12ms | Tot: 277ms | Loss: 2.365 | LR: 1.28428e-05
Validation: Img2Txt: 0.480904 Txt2Img: 0.436563 Avg: 0.458733
Test: Img2Txt: 0.474708 Txt2Img: 0.440001 Avg: 0.457354
Saving..
Epoch: 25 / 30
[================= 22/22 ==================>..] Step: 12ms | Tot: 275ms | Loss: 2.362 | LR: 9.54915e-06
Validation: Img2Txt: 0.48379 Txt2Img: 0.437549 Avg: 0.460669
Test: Img2Txt: 0.475301 Txt2Img: 0.44056 Avg: 0.45793
Saving..
Epoch: 26 / 30
[================= 22/22 ==================>..] Step: 12ms | Tot: 276ms | Loss: 2.361 | LR: 6.69873e-06
Validation: Img2Txt: 0.482946 Txt2Img: 0.43729 Avg: 0.460118
Epoch: 27 / 30
[================= 22/22 ==================>..] Step: 12ms | Tot: 273ms | Loss: 2.360 | LR: 4.32273e-06
Validation: Img2Txt: 0.480506 Txt2Img: 0.437512 Avg: 0.459009
Epoch: 28 / 30
[================= 22/22 ==================>..] Step: 12ms | Tot: 269ms | Loss: 2.360 | LR: 2.44717e-06
Validation: Img2Txt: 0.481429 Txt2Img: 0.437096 Avg: 0.459263
Epoch: 29 / 30
[================= 22/22 ==================>..] Step: 12ms | Tot: 275ms | Loss: 2.359 | LR: 1.09262e-06
Validation: Img2Txt: 0.482126 Txt2Img: 0.437257 Avg: 0.459691
Evaluation on Last Epoch:
Img2Txt: 0.475 Txt2Img: 0.440
Evaluation on Best Validation:
Img2Txt: 0.475 Txt2Img: 0.441
```
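To pull the numbers out of a saved copy of this output, the sketch below parses it with regular expressions written against the line formats in the example log (an assumption; other versions of the script may print differently). It also makes two properties of the example easy to check: each `Avg` is the mean of `Img2Txt` and `Txt2Img`, and the best validation `Avg` occurs at epoch 25 (0.460669), whose `Test` line (0.475301 / 0.44056) rounds to the reported best-validation result of 0.475 / 0.441. Finally, the printed `LR` values are consistent with cosine annealing from `--lr 0.0001` to zero over `--max_epochs 30`, evaluated at step `epoch - 1`; for example, 1e-4 × (1 + cos(24π/30)) / 2 ≈ 9.54915e-06 at epoch 25. This schedule is inferred from the log alone, not from the training code.

```python
import math
import re
from typing import Dict, Tuple

# Regexes for the line formats shown in the example output.
EPOCH_RE = re.compile(r"Epoch:\s*(\d+)\s*/\s*(\d+)")
LR_RE = re.compile(r"LR:\s*([0-9.eE+-]+)")
METRIC_RE = re.compile(
    r"(Validation|Test):\s*Img2Txt:\s*([0-9.]+)\s*Txt2Img:\s*([0-9.]+)\s*Avg:\s*([0-9.]+)"
)

Record = Dict[str, Tuple[float, ...]]


def parse_log(text: str) -> Dict[int, Record]:
    """Map each epoch to its LR and its (Img2Txt, Txt2Img, Avg) Validation/Test scores."""
    epochs: Dict[int, Record] = {}
    current = None
    for line in text.splitlines():
        m = EPOCH_RE.search(line)
        if m:
            current = int(m.group(1))
            epochs[current] = {}
            continue
        if current is None:
            continue  # ignore anything printed before the first epoch header
        m = METRIC_RE.search(line)
        if m:
            epochs[current][m.group(1)] = tuple(float(x) for x in m.group(2, 3, 4))
            continue
        m = LR_RE.search(line)
        if m:
            epochs[current]["lr"] = (float(m.group(1)),)
    return epochs


def best_validation_epoch(epochs: Dict[int, Record]) -> int:
    """Epoch with the highest validation Avg; on ties, the earliest such epoch."""
    candidates = [(e, rec["Validation"][2])
                  for e, rec in sorted(epochs.items()) if "Validation" in rec]
    return max(candidates, key=lambda item: item[1])[0]


def cosine_lr(base_lr: float, step: int, total_steps: int) -> float:
    """Cosine annealing from base_lr down to 0 over total_steps."""
    return base_lr * (1.0 + math.cos(math.pi * step / total_steps)) / 2.0
```

For example, after redirecting the output of the training command to a file such as `train.log` (a hypothetical name), `parse_log(open("train.log").read())` returns a dict keyed by epoch, and `best_validation_epoch` on the example log returns 25.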

Table 1: Performance comparison in terms of MAP scores under the symmetric noise rates of 0.2, 0.4, 0.6 and 0.8 on the Wikipedia and INRIA-Websearch datasets (I→T: Image → Text; T→I: Text → Image). The highest MAP score is shown in bold.

| Method | Wikipedia I→T 0.2 | Wikipedia I→T 0.4 | Wikipedia I→T 0.6 | Wikipedia I→T 0.8 | Wikipedia T→I 0.2 | Wikipedia T→I 0.4 | Wikipedia T→I 0.6 | Wikipedia T→I 0.8 | INRIA-Websearch I→T 0.2 | INRIA-Websearch I→T 0.4 | INRIA-Websearch I→T 0.6 | INRIA-Websearch I→T 0.8 | INRIA-Websearch T→I 0.2 | INRIA-Websearch T→I 0.4 | INRIA-Websearch T→I 0.6 | INRIA-Websearch T→I 0.8 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MCCA | 0.202 | 0.202 | 0.202 | 0.202 | 0.189 | 0.189 | 0.189 | 0.189 | 0.275 | 0.275 | 0.275 | 0.275 | 0.277 | 0.277 | 0.277 | 0.277 |
| PLS | 0.337 | 0.337 | 0.337 | 0.337 | 0.320 | 0.320 | 0.320 | 0.320 | 0.387 | 0.387 | 0.387 | 0.387 | 0.398 | 0.398 | 0.398 | 0.398 |
| DCCA | 0.281 | 0.281 | 0.281 | 0.281 | 0.260 | 0.260 | 0.260 | 0.260 | 0.188 | 0.188 | 0.188 | 0.188 | 0.182 | 0.182 | 0.182 | 0.182 |
| DCCAE | 0.308 | 0.308 | 0.308 | 0.308 | 0.286 | 0.286 | 0.286 | 0.286 | 0.167 | 0.167 | 0.167 | 0.167 | 0.164 | 0.164 | 0.164 | 0.164 |
| GMA | 0.200 | 0.178 | 0.153 | 0.139 | 0.189 | 0.160 | 0.141 | 0.136 | 0.425 | 0.372 | 0.303 | 0.245 | 0.437 | 0.378 | 0.315 | 0.251 |
| MvDA | 0.379 | 0.285 | 0.217 | 0.144 | 0.350 | 0.270 | 0.207 | 0.142 | 0.286 | 0.269 | 0.234 | 0.186 | 0.285 | 0.265 | 0.233 | 0.185 |
| MvDA-VC | 0.389 | 0.330 | 0.256 | 0.162 | 0.355 | 0.304 | 0.241 | 0.153 | 0.288 | 0.272 | 0.241 | 0.192 | 0.286 | 0.268 | 0.238 | 0.190 |
| GSS-SL | 0.444 | 0.390 | 0.309 | 0.174 | 0.398 | 0.353 | 0.287 | 0.169 | 0.487 | 0.424 | 0.272 | 0.075 | 0.510 | 0.451 | 0.307 | 0.085 |
| ACMR | 0.276 | 0.231 | 0.198 | 0.135 | 0.285 | 0.194 | 0.183 | 0.138 | 0.175 | 0.096 | 0.055 | 0.023 | 0.157 | 0.114 | 0.048 | 0.021 |
| deep-SM | 0.441 | 0.387 | 0.293 | 0.178 | 0.392 | 0.364 | 0.248 | 0.177 | 0.495 | 0.422 | 0.238 | 0.046 | 0.509 | 0.421 | 0.258 | 0.063 |
| FGCrossNet | 0.403 | 0.322 | 0.233 | 0.156 | 0.358 | 0.284 | 0.205 | 0.147 | 0.278 | 0.192 | 0.105 | 0.027 | 0.261 | 0.189 | 0.096 | 0.025 |
| SDML | 0.464 | 0.406 | 0.299 | 0.170 | 0.448 | 0.398 | 0.311 | 0.184 | 0.506 | 0.419 | 0.283 | 0.024 | 0.512 | 0.412 | 0.241 | 0.066 |
| DSCMR | 0.426 | 0.331 | 0.226 | 0.142 | 0.390 | 0.300 | 0.212 | 0.140 | 0.500 | 0.413 | 0.225 | 0.055 | 0.536 | 0.464 | 0.237 | 0.052 |
| SMLN | 0.449 | 0.365 | 0.275 | 0.251 | 0.403 | 0.319 | 0.246 | 0.237 | 0.331 | 0.291 | 0.262 | 0.214 | 0.391 | 0.349 | 0.292 | 0.254 |
| Ours | **0.514** | **0.491** | **0.464** | **0.435** | **0.461** | **0.453** | **0.421** | **0.400** | **0.559** | **0.543** | **0.512** | **0.417** | **0.587** | **0.571** | **0.533** | **0.424** |
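Tables 1 and 2 are evaluated under symmetric label noise. A common way to simulate a symmetric noise rate r, sketched here as an assumption rather than the repository's code, is to pick a fraction r of the training samples and replace each chosen label with a different class drawn uniformly at random. Another widespread convention resamples the label uniformly over all classes, including the true one, so only about r(K-1)/K of the labels end up wrong for K classes; this README does not state which convention `--noisy_ratio` follows.

```python
import random
from typing import List, Optional


def add_symmetric_noise(labels: List[int], num_classes: int, noise_rate: float,
                        seed: Optional[int] = 0) -> List[int]:
    """Flip exactly round(noise_rate * len(labels)) labels to a different, uniformly chosen class."""
    if not 0.0 <= noise_rate <= 1.0:
        raise ValueError("noise_rate must be in [0, 1]")
    if noise_rate > 0.0 and num_classes < 2:
        raise ValueError("need at least two classes to flip a label")
    rng = random.Random(seed)  # seeded so the corrupted subset is reproducible
    noisy = list(labels)
    n_noisy = round(noise_rate * len(labels))
    # Choose n_noisy distinct positions to corrupt.
    for i in rng.sample(range(len(labels)), n_noisy):
        wrong_classes = [c for c in range(num_classes) if c != labels[i]]
        noisy[i] = rng.choice(wrong_classes)
    return noisy
```

With 1,000 labels and `noise_rate=0.6`, exactly 600 labels differ from the originals under this convention.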

Table 2: Performance comparison in terms of MAP scores under the symmetric noise rates of 0.2, 0.4, 0.6 and 0.8 on the NUS-WIDE and XMediaNet datasets (I→T: Image → Text; T→I: Text → Image). The highest MAP score is shown in bold.

| Method | NUS-WIDE I→T 0.2 | NUS-WIDE I→T 0.4 | NUS-WIDE I→T 0.6 | NUS-WIDE I→T 0.8 | NUS-WIDE T→I 0.2 | NUS-WIDE T→I 0.4 | NUS-WIDE T→I 0.6 | NUS-WIDE T→I 0.8 | XMediaNet I→T 0.2 | XMediaNet I→T 0.4 | XMediaNet I→T 0.6 | XMediaNet I→T 0.8 | XMediaNet T→I 0.2 | XMediaNet T→I 0.4 | XMediaNet T→I 0.6 | XMediaNet T→I 0.8 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MCCA | 0.523 | 0.523 | 0.523 | 0.523 | 0.539 | 0.539 | 0.539 | 0.539 | 0.233 | 0.233 | 0.233 | 0.233 | 0.249 | 0.249 | 0.249 | 0.249 |
| PLS | 0.498 | 0.498 | 0.498 | 0.498 | 0.517 | 0.517 | 0.517 | 0.517 | 0.276 | 0.276 | 0.276 | 0.276 | 0.266 | 0.266 | 0.266 | 0.266 |
| DCCA | 0.527 | 0.527 | 0.527 | 0.527 | 0.537 | 0.537 | 0.537 | 0.537 | 0.152 | 0.152 | 0.152 | 0.152 | 0.162 | 0.162 | 0.162 | 0.162 |
| DCCAE | 0.529 | 0.529 | 0.529 | 0.529 | 0.538 | 0.538 | 0.538 | 0.538 | 0.149 | 0.149 | 0.149 | 0.149 | 0.159 | 0.159 | 0.159 | 0.159 |
| GMA | 0.545 | 0.515 | 0.488 | 0.469 | 0.547 | 0.517 | 0.491 | 0.475 | 0.400 | 0.380 | 0.344 | 0.276 | 0.376 | 0.364 | 0.336 | 0.277 |
| MvDA | 0.590 | 0.551 | 0.568 | 0.471 | 0.609 | 0.585 | 0.596 | 0.498 | 0.329 | 0.318 | 0.301 | 0.256 | 0.324 | 0.314 | 0.296 | 0.254 |
| MvDA-VC | 0.531 | 0.491 | 0.512 | 0.421 | 0.567 | 0.525 | 0.550 | 0.434 | 0.331 | 0.319 | 0.306 | 0.274 | 0.322 | 0.310 | 0.296 | 0.265 |
| GSS-SL | 0.639 | 0.639 | 0.631 | 0.567 | 0.659 | 0.658 | 0.650 | 0.592 | 0.431 | 0.381 | 0.256 | 0.044 | 0.417 | 0.361 | 0.221 | 0.031 |
| ACMR | 0.530 | 0.433 | 0.318 | 0.269 | 0.547 | 0.476 | 0.304 | 0.241 | 0.181 | 0.069 | 0.018 | 0.010 | 0.191 | 0.043 | 0.012 | 0.009 |
| deep-SM | 0.693 | 0.680 | 0.673 | 0.628 | 0.690 | 0.681 | 0.669 | 0.629 | 0.557 | 0.314 | 0.276 | 0.062 | 0.495 | 0.344 | 0.021 | 0.014 |
| FGCrossNet | 0.661 | 0.641 | 0.638 | 0.594 | 0.669 | 0.669 | 0.636 | 0.596 | 0.372 | 0.280 | 0.147 | 0.053 | 0.375 | 0.281 | 0.160 | 0.052 |
| SDML | 0.694 | 0.677 | 0.633 | 0.389 | 0.693 | 0.681 | 0.644 | 0.416 | 0.534 | 0.420 | 0.216 | 0.009 | 0.563 | 0.445 | 0.237 | 0.011 |
| DSCMR | 0.665 | 0.661 | 0.653 | 0.509 | 0.667 | 0.665 | 0.655 | 0.505 | 0.461 | 0.224 | 0.040 | 0.008 | 0.477 | 0.224 | 0.028 | 0.010 |
| SMLN | 0.676 | 0.651 | 0.646 | 0.525 | 0.685 | 0.650 | 0.639 | 0.520 | 0.520 | 0.445 | 0.070 | 0.070 | 0.514 | 0.300 | 0.303 | 0.226 |
| Ours | **0.696** | **0.690** | **0.686** | **0.669** | **0.697** | **0.695** | **0.688** | **0.673** | **0.625** | **0.581** | **0.384** | **0.334** | **0.623** | **0.587** | **0.408** | **0.359** |
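All tables report MAP (mean average precision). For one query, average precision averages precision@k over the ranks k at which a relevant item appears, and MAP averages that over all queries; for Img2Txt the queries are images and the retrieval set is texts, and vice versa for Txt2Img. The sketch below assumes an item is relevant when it shares the query's class label, ranks by cosine similarity and scores the full ranking; the repository's evaluation may differ in these details (for example, a top-R cutoff or a different similarity).

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def average_precision(relevant: List[bool]) -> float:
    """AP of a ranked list: mean of precision@k over the ranks k that hit a relevant item."""
    hits, total = 0, 0.0
    for k, rel in enumerate(relevant, start=1):
        if rel:
            hits += 1
            total += hits / k
    return total / hits if hits else 0.0


def cross_modal_map(queries: List[Vector], query_labels: List[int],
                    gallery: List[Vector], gallery_labels: List[int]) -> float:
    """MAP over all queries; a gallery item is relevant if it shares the query's label."""
    aps = []
    for q, q_label in zip(queries, query_labels):
        # Rank gallery items (e.g. texts for Img2Txt) by decreasing similarity to the query.
        order = sorted(range(len(gallery)), key=lambda j: -cosine(q, gallery[j]))
        aps.append(average_precision([gallery_labels[j] == q_label for j in order]))
    return sum(aps) / len(aps)
```

For instance, a ranking whose relevance pattern is `[True, False, True]` has AP = (1/1 + 2/3) / 2 = 5/6.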

Table 3: Comparison between our MRL (full version) and its three counterparts (CE and two variants of MRL) under the symmetric noise rates of 0.2, 0.4, 0.6 and 0.8 on the Wikipedia dataset (I→T: Image → Text; T→I: Text → Image). The highest score is shown in bold.

| Method | I→T 0.2 | I→T 0.4 | I→T 0.6 | I→T 0.8 | T→I 0.2 | T→I 0.4 | T→I 0.6 | T→I 0.8 |
|---|---|---|---|---|---|---|---|---|
| CE | 0.441 | 0.387 | 0.293 | 0.178 | 0.392 | 0.364 | 0.248 | 0.177 |
| MRL (with ℒ𝓇 only) | 0.482 | 0.434 | 0.363 | 0.239 | 0.429 | 0.389 | 0.320 | 0.202 |
| MRL (with ℒ𝒸 only) | 0.412 | 0.412 | 0.412 | 0.412 | 0.383 | 0.382 | 0.383 | 0.383 |
| Full MRL | **0.514** | **0.491** | **0.464** | **0.435** | **0.461** | **0.453** | **0.421** | **0.400** |
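The README shows only the 0.6 run on Wikipedia, while Tables 1 and 3 report Wikipedia results at noise rates of 0.2, 0.4, 0.6 and 0.8. One way to launch all four is a small driver that reuses the flags of the example command and varies only `--noisy_ratio`. This is a sketch under assumptions: the per-rate `--log_name` values are hypothetical, and whether these flags match the settings behind every table entry is not stated here.

```python
import subprocess
import sys
from typing import Iterable, List

# Flags copied from the example Wikipedia command; only --noisy_ratio and --log_name vary.
BASE_ARGS = ["--max_epochs", "30", "--loss", "MCE", "--lr", "0.0001",
             "--train_batch_size", "100", "--beta", "0.7", "--data_name", "wiki"]


def build_command(noise_rate: float) -> List[str]:
    """Command line for one run of main_noisy.py at the given noise rate."""
    # Hypothetical naming scheme so that each run gets a distinct --log_name.
    log_name = f"noisylabel_mce_{noise_rate}"
    return [sys.executable, "main_noisy.py", "--log_name", log_name,
            "--noisy_ratio", str(noise_rate), *BASE_ARGS]


def run_sweep(rates: Iterable[float] = (0.2, 0.4, 0.6, 0.8), dry_run: bool = True) -> None:
    """Print each command; also execute it (from the repo root) when dry_run is False."""
    for rate in rates:
        cmd = build_command(rate)
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises CalledProcessError if a run fails
```

Calling `run_sweep()` only prints the four commands; `run_sweep(dry_run=False)` from the repository root runs them one after another.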

If you find MRL useful in your research, please consider citing:

```bibtex
@inproceedings{hu2021MRL,
  title={Learning Cross-modal Retrieval with Noisy Labels},
  author={Peng Hu and Xi Peng and Hongyuan Zhu and Liangli Zhen and Jie Lin},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month={June},
  year={2021}
}
```