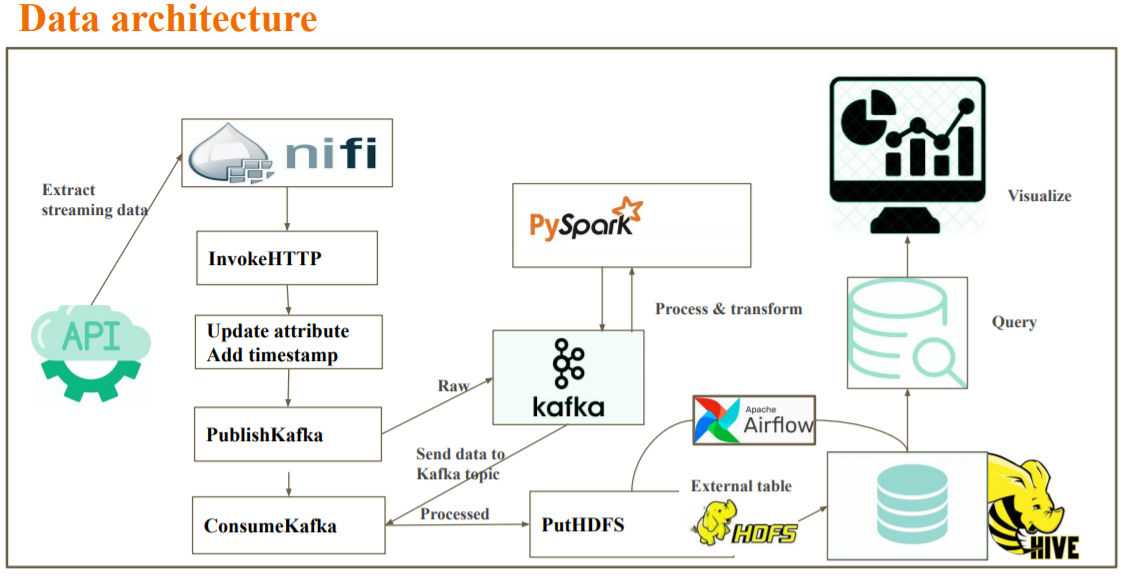

- The purpose is to collect streaming data from the COVID-19 open API every 5 minutes using NiFi, process it, and store it in a data lake on AWS. Processing includes parsing the data from a complex JSON format into CSV and publishing it to Kafka, which delivers the messages persistently to PySpark for further processing. The processed data is then written to an output Kafka topic, which is in turn consumed by NiFi and stored in HDFS. A Hive external table is created on top of the processed data in HDFS, and the whole process is orchestrated with Airflow to run at each scheduled interval. Finally, the KPIs are visualized in Tableau.
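The JSON-to-CSV parsing step described above can be illustrated with a minimal Python sketch. This is not the project's actual NiFi flow; it only shows the general idea of flattening nested JSON records into CSV rows. The function names `flatten` and `json_to_csv` and the sample payload are hypothetical, and the real API response shape may differ.

```python
import csv
import io
import json


def flatten(record, parent_key="", sep="_"):
    """Flatten nested dicts into a single-level dict with joined keys."""
    flat = {}
    for key, value in record.items():
        full_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, full_key, sep))
        else:
            flat[full_key] = value
    return flat


def json_to_csv(payload):
    """Convert a JSON array of (possibly nested) objects to CSV text."""
    rows = [flatten(item) for item in json.loads(payload)]
    # Union of keys in first-seen order, so records with missing fields still fit.
    fieldnames = list(dict.fromkeys(k for row in rows for k in row))
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical sample payload (assumed shape, not taken from the real API).
sample = json.dumps([
    {"country": "India", "cases": {"confirmed": 100, "recovered": 40}},
    {"country": "Italy", "cases": {"confirmed": 200, "recovered": 90}},
])
csv_text = json_to_csv(sample)
print(csv_text)
```

Nested keys are joined with an underscore (for example `cases_confirmed`), which keeps the CSV header flat and easy to map onto Hive table columns later in the pipeline.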

- Tools used

  - NiFi: nifi-1.10.0

  - Hadoop: hadoop_2.7.3

  - Hive: apache-hive-2.1.0

  - Spark: spark-2.4.5

  - Zookeeper: zookeeper-2.3.5

  - Kafka: kafka_2.11-2.4.0

  - Airflow: airflow-1.8.1

  - Tableau

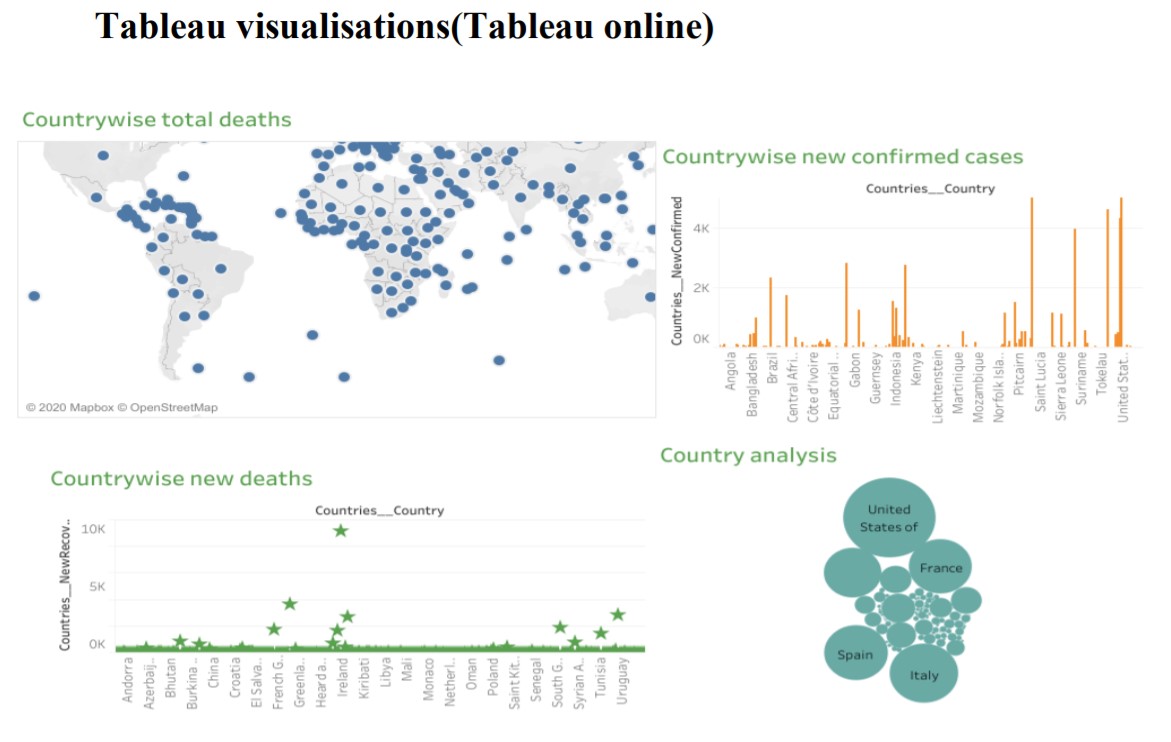

- Visualization using Tableau