This repository is the official PyTorch implementation of our paper *PanBench: Towards High-Resolution and High-Performance Pansharpening*.

To install dependencies:

    pip install -r requirements.txt

The PanBench dataset is organized as follows:

    PanBench
    ├─GF1
    │ ├─NIR_256
    │ ├─PAN_1024
    │ └─RGB_256
    ├─GF2
    │ ├─NIR_256
    │ ├─PAN_1024
    │ └─RGB_256
    ├─GF6
    │ ├─NIR_256
    │ ├─PAN_1024
    │ └─RGB_256
    ├─IN
    │ ├─NIR_256
    │ ├─PAN_1024
    │ └─RGB_256
    ├─LC7
    │ ├─NIR_256
    │ ├─PAN_1024
    │ └─RGB_256
    ├─LC8
    │ ├─NIR_256
    │ ├─PAN_1024
    │ └─RGB_256
    ├─QB
    │ ├─NIR_256
    │ ├─PAN_1024
    │ └─RGB_256
    ├─WV2
    │ ├─NIR_256
    │ ├─PAN_1024
    │ └─RGB_256
    ├─WV3
    │ ├─NIR_256
    │ ├─PAN_1024
    │ └─RGB_256
    └─WV4
      ├─NIR_256
      ├─PAN_1024
      └─RGB_256

To train the models in the paper, run this command:

    python src/train.py experiment=cmfnet

To test the models in the paper, run this command:

    python src/train.py experiment=cmfnet

To evaluate the models in the paper, run this command:

    python src/eval.py experiment=cmfnet

You can download the pretrained models here: CMFNet.
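In the dataset tree, each satellite folder holds `PAN_1024`, `RGB_256` and `NIR_256` sub-folders. If you want to gather those tiles in your own scripts, the sketch below walks that layout and returns matching file triples. It assumes something the repository does not state: a PAN tile and its RGB/NIR counterparts share the same file name across the three sub-folders. `collect_samples` is a hypothetical helper, not part of the codebase, and the repository's own data module may load the data differently.

```python
from pathlib import Path

# Sub-folder names taken from the PanBench directory tree.
PAN_DIR, RGB_DIR, NIR_DIR = "PAN_1024", "RGB_256", "NIR_256"


def collect_samples(root):
    """Return (satellite, pan_path, rgb_path, nir_path) tuples under `root`.

    Hypothetical helper. Assumption: matching PAN/RGB/NIR tiles share the
    same file name. Files missing from any of the three sub-folders are skipped.
    """
    samples = []
    for sat_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        pan, rgb, nir = (sat_dir / d for d in (PAN_DIR, RGB_DIR, NIR_DIR))
        if not (pan.is_dir() and rgb.is_dir() and nir.is_dir()):
            continue  # not a satellite folder with the expected layout
        # Keep only file names present in all three sub-folders.
        names = ({f.name for f in pan.iterdir() if f.is_file()}
                 & {f.name for f in rgb.iterdir() if f.is_file()}
                 & {f.name for f in nir.iterdir() if f.is_file()})
        for name in sorted(names):
            samples.append((sat_dir.name, pan / name, rgb / name, nir / name))
    return samples
```

For example, `collect_samples("PanBench")` would list every complete PAN/RGB/NIR triple across the satellite folders. Intersecting the file-name sets keeps the sketch from silently pairing tiles that exist at only one resolution.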

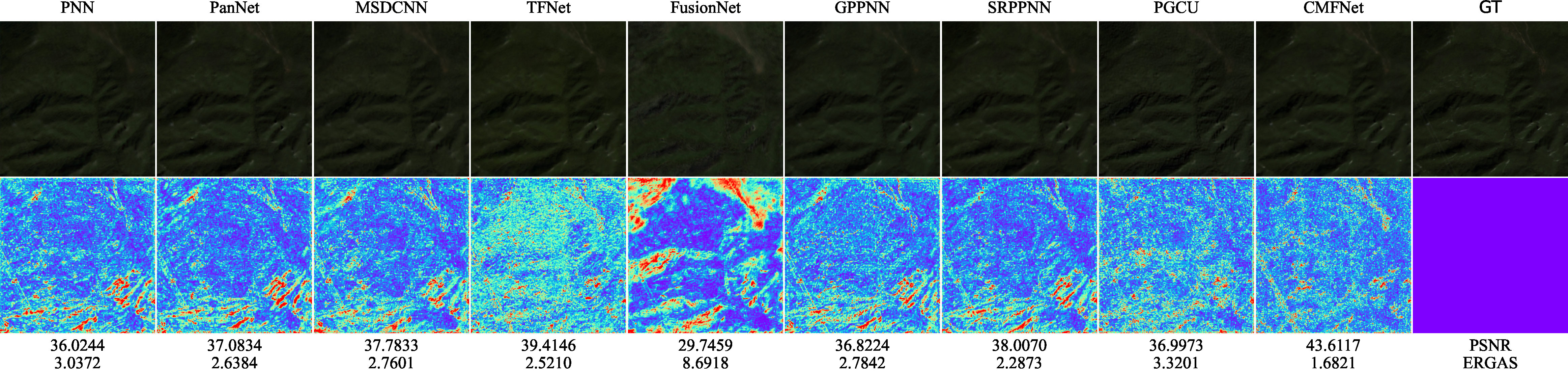

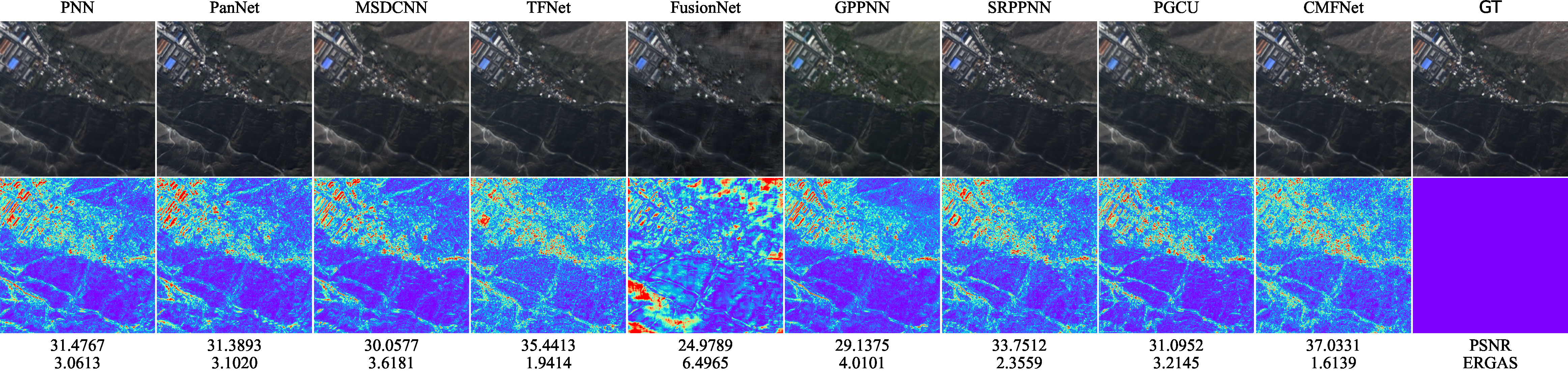

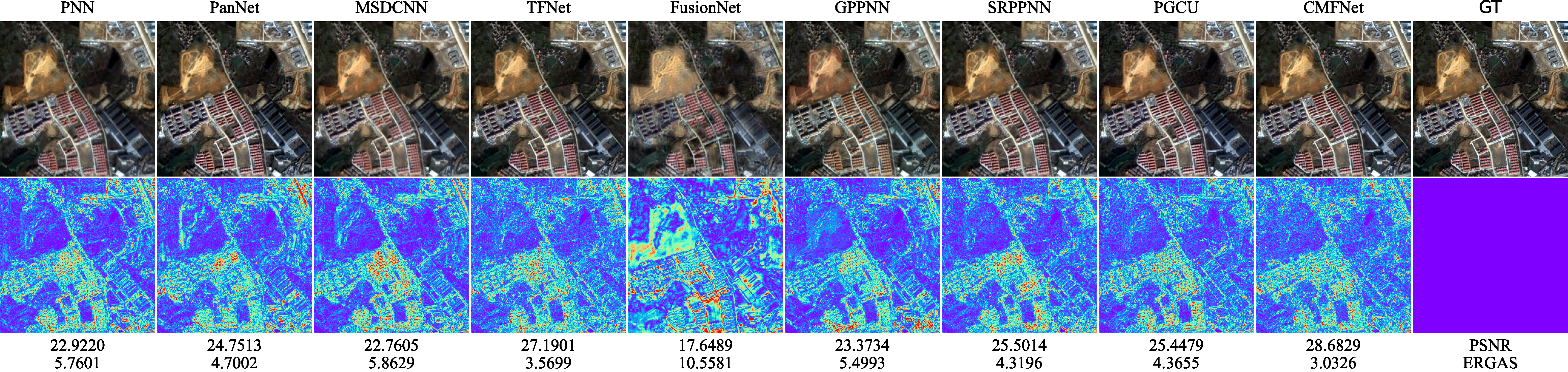

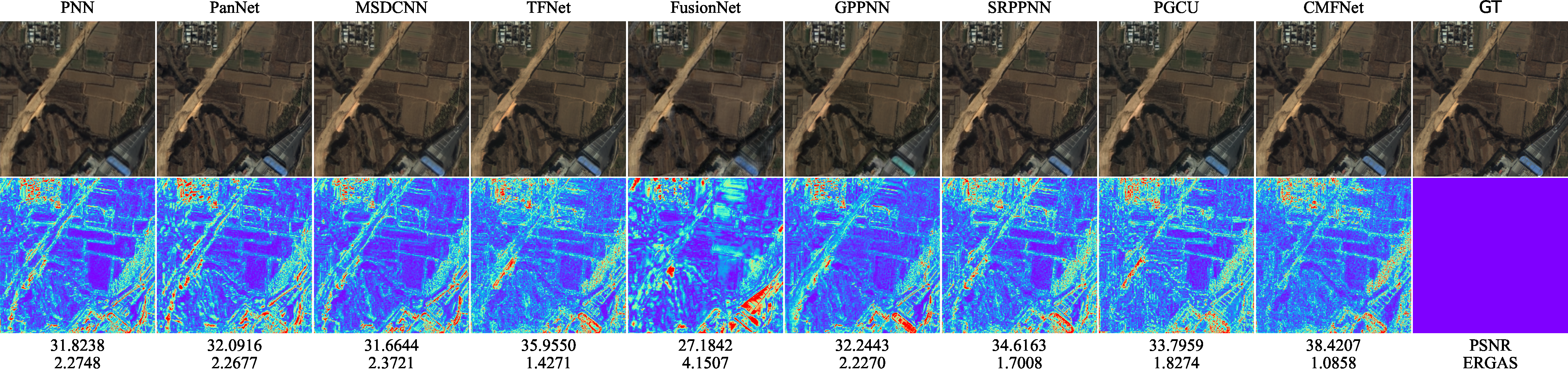

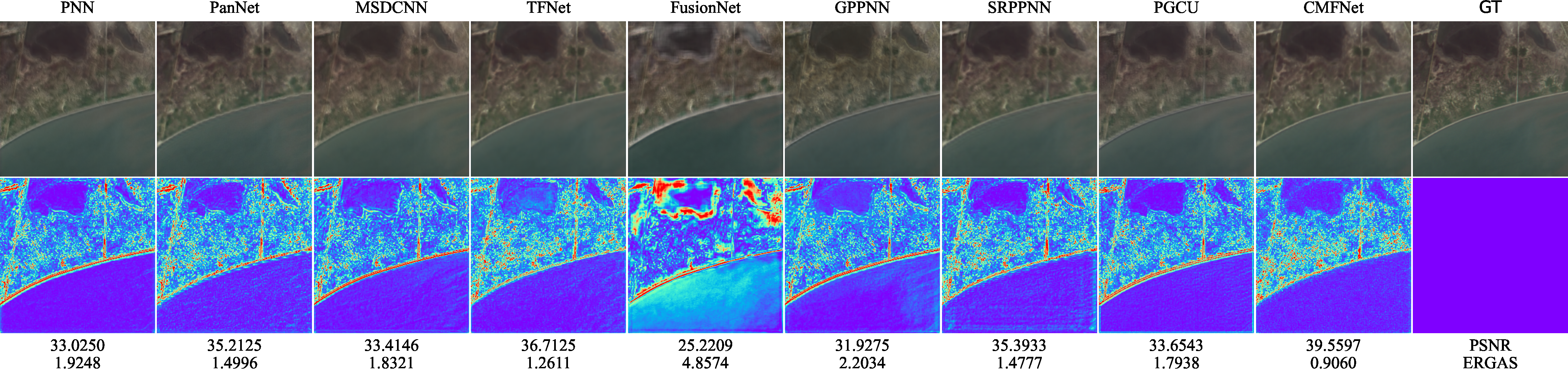

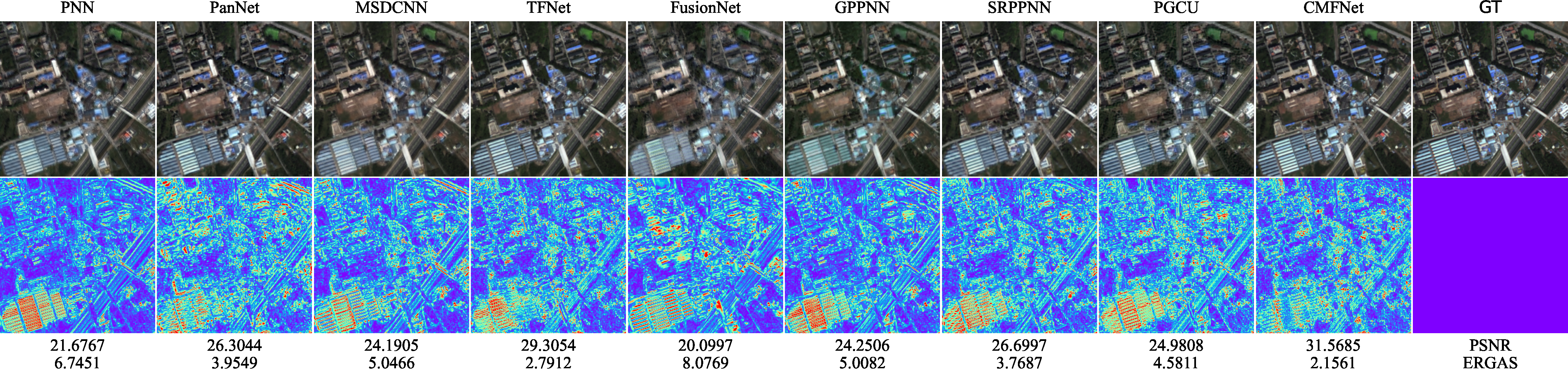

We support various pansharpening methods and satellites, and we are working on adding new methods and collecting experimental results.

- Currently supported methods.
- Currently supported satellites:
  - GaoFen1
  - GaoFen2
  - GaoFen6
  - Landsat7
  - Landsat8
  - WorldView2
  - WorldView3
  - WorldView4
  - QuickBird
  - IKONOS
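The satellite folders in the dataset tree use short abbreviations. Matching them against the list of supported satellites gives the mapping sketched below. The pairings are inferred by comparing the two lists (for example, `IN` for IKONOS and `LC7`/`LC8` for Landsat7/Landsat8). `SATELLITES` and `satellite_name` are hypothetical names, not identifiers from the repository.

```python
# Hypothetical mapping, inferred by matching the dataset folder names
# against the list of supported satellites in this README.
SATELLITES = {
    "GF1": "GaoFen1",
    "GF2": "GaoFen2",
    "GF6": "GaoFen6",
    "IN": "IKONOS",
    "LC7": "Landsat7",
    "LC8": "Landsat8",
    "QB": "QuickBird",
    "WV2": "WorldView2",
    "WV3": "WorldView3",
    "WV4": "WorldView4",
}


def satellite_name(folder: str) -> str:
    """Return the full satellite name for a PanBench folder abbreviation."""
    try:
        return SATELLITES[folder]
    except KeyError:
        # Fail loudly on folders outside the ten listed satellites.
        raise ValueError(f"unknown PanBench satellite folder: {folder!r}") from None
```

Raising `ValueError` for unknown folders makes a misspelled or unsupported satellite directory visible immediately instead of producing a silent `None`.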

We provide a visualization tool to help you understand the training process. Start it with the following command:

    python visualize.py

If you use our code or models in your research, please cite:

    @misc{wang2023panbench,
      title={PanBench: Towards High-Resolution and High-Performance Pansharpening},
      author={Shiying Wang and Xuechao Zou and Kai Li and Junliang Xing and Pin Tao},
      year={2023},
      eprint={2311.12083},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
    }