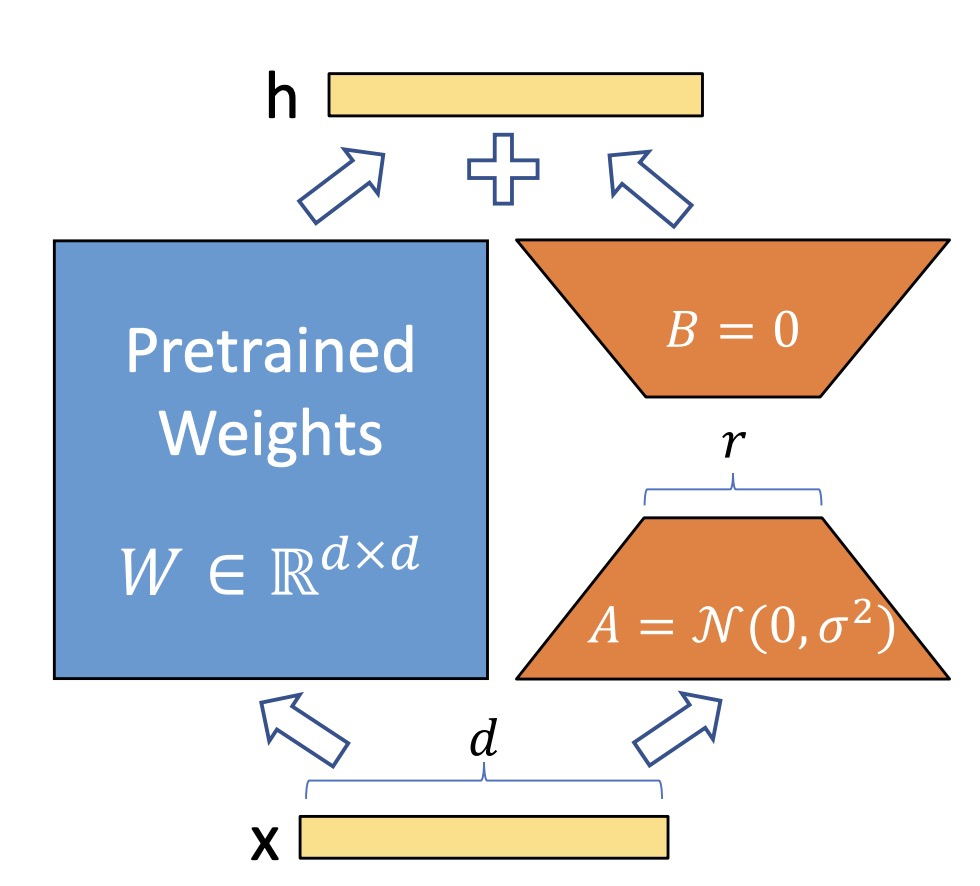

From the LoRA paper (Hu et al., 2021):

> "LoRA, which freezes the pretrained model weights and injects trainable rank decomposition matrices into each layer of the Transformer architecture, greatly reducing the number of trainable parameters for downstream tasks."


Only the low-rank matrices A and B are trainable; the pretrained weights stay frozen.
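To make the mechanism in the quote concrete, here is a toy, pure-Python sketch of a single LoRA-adapted linear layer. It is an illustration only, not the code used in the linked examples, and `LoRALinear`, `lora_param_counts` and `matmul` are hypothetical names. Following the LoRA paper, it keeps the pretrained weight W0 frozen, computes h = W0 x + (alpha / r) B A x, initializes A with small random values and B with zeros, so the adapted layer starts out behaving exactly like the pretrained one.

```python
import random

# Toy sketch of LoRA; all names here are hypothetical, not from this repository.


def matmul(a, b):
    """Multiply matrix a (n x m) by b (m x p); matrices are lists of rows."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_param_counts(d_out, d_in, r):
    """Return (frozen, trainable) parameter counts for one adapted weight."""
    frozen = d_out * d_in             # pretrained W0, never updated
    trainable = r * d_in + d_out * r  # A is (r x d_in), B is (d_out x r)
    return frozen, trainable


class LoRALinear:
    """Toy LoRA layer computing h = W0 x + (alpha / r) * B A x."""

    def __init__(self, w0, r, alpha, seed=0):
        rng = random.Random(seed)
        self.w0 = w0                                  # frozen pretrained weight
        self.d_out, self.d_in = len(w0), len(w0[0])
        self.r = r
        self.scale = alpha / r                        # LoRA scaling factor
        # A gets small random values; B starts at zero, so the adapter
        # contributes nothing until B is trained.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(self.d_in)] for _ in range(r)]
        self.b = [[0.0] * r for _ in range(self.d_out)]

    def forward(self, x):
        """Apply the layer to x, a list of d_in floats."""
        col = [[v] for v in x]                        # column vector
        base = matmul(self.w0, col)                   # frozen path: W0 x
        delta = matmul(self.b, matmul(self.a, col))   # low-rank path: B (A x)
        return [base[i][0] + self.scale * delta[i][0] for i in range(self.d_out)]


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1, alpha=2)
    print(layer.forward([1.0, 1.0]))          # [3.0, 7.0]: B is zero, so h == W0 x
    print(lora_param_counts(4096, 4096, 8))   # (16777216, 65536)
```

For a 4096 × 4096 weight with rank r = 8, the adapter trains 65,536 parameters instead of 16,777,216, i.e. 1/256 of the full matrix, which is the reduction the quote refers to. A real fine-tuning run would use a tensor library and attach adapters to many layers at once.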

| LLM | Parameters | Task | LoRA/QLoRA | Code |
|---|---|---|---|---|
| Gemma-IT | 2B | Text-to-Text Generation | QLoRA | Link |
| Qwen 2 | 1.5B | Named Entity Recognition | LoRA | Link |
| Llama 3 | 8B | Cross-Linguistic Adaptation | LoRA | Link |

Note

LoRA is an elegant technique, yet fine-tuning LLMs with it still demands considerable engineering effort: getting the best performance means carefully tuning choices such as the rank, the scaling factor, the learning rate, and which layers receive adapters. The examples in this repository are foundational; treat them as a starting point rather than finished recipes. There is plenty of room to improve on them, and we encourage you to apply your own skills and creativity to achieve better results.