Project Page | Video | Paper

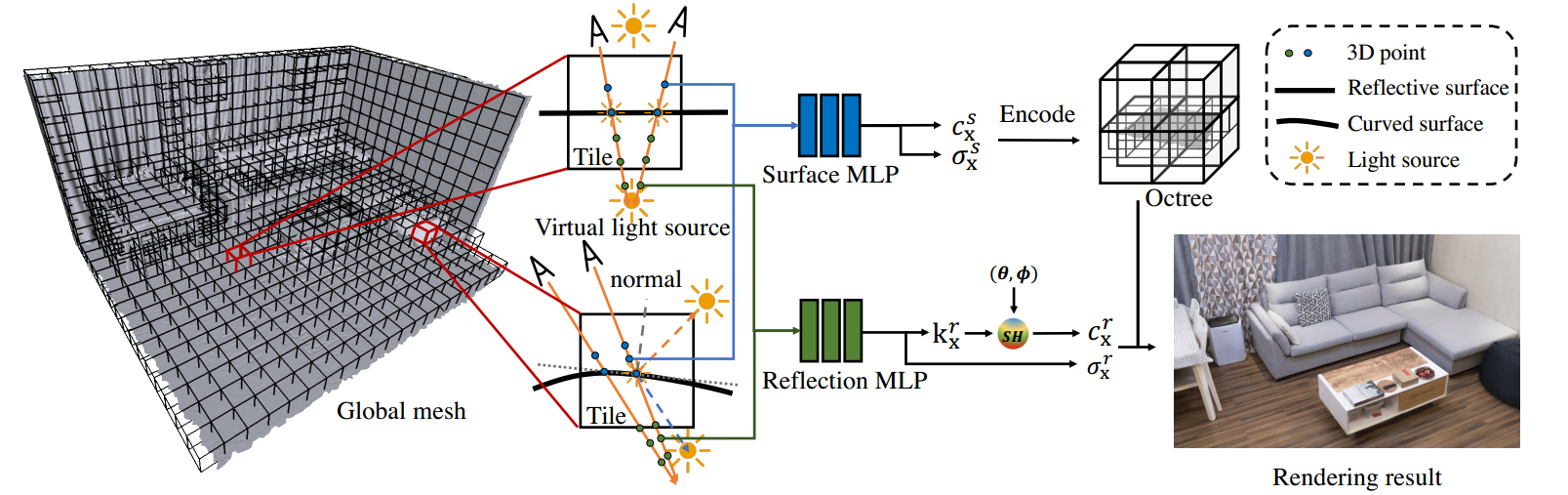

We propose a scalable neural scene reconstruction and rendering method to support distributed training and interactive rendering of large indoor scenes.

- System: Ubuntu 16.04 or 18.04

- GCC/G++: 7.5.0

- GPU: our method was implemented and run on an RTX 3090.

- CUDA version: 11.1 or higher

- Python: 3.8
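As a rough pre-install check against the list above, the following sketch reports whether the running interpreter is Python 3.8 and whether `gcc`, `g++`, and `nvcc` are on `PATH`. The function names `check_environment` and `format_report` are hypothetical helpers, not part of this repository, and the sketch only checks that the tools are present; it does not verify the GCC or CUDA versions.

```python
import shutil
import sys


def check_environment(required_python=(3, 8), tools=("gcc", "g++", "nvcc")):
    """Return a dict mapping each check name to True (ok) or False."""
    # Compare only major.minor of the interpreter running this script.
    results = {"python": sys.version_info[:2] == tuple(required_python)}
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH.
        results[tool] = shutil.which(tool) is not None
    return results


def format_report(results):
    """Render one line per check for printing."""
    return "\n".join(
        f"{name}: {'ok' if ok else 'missing or mismatched'}"
        for name, ok in results.items()
    )
```

Calling `print(format_report(check_environment()))` prints one line per check.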

To install the required Python packages:

conda env create -f env.yaml

C dependencies: cnpy, tqdm, tinyply,

Our method can render images at a resolution of 1280 x 720 at 20 FPS.

For interactive rendering, you should also install imgui and glfw-3.3.6.

For TensorRT acceleration, please first follow the TensorRT Installation Guide, then install torch2trt.

To build the rendering project:

cd rendering

bash build.sh

We provide a demo for interactive rendering.

You can download the necessary rendering data here. Unzip the file:

unzip data.zip

Then, replace the scene path and the cnn path in rendering/config/base.yaml with data/renderData.npz and data/cnn.pth, and run:

bash demo.sh

Set your own Python directory and dependency path in ./Scalable-Neural-Indoor-Scene-Rendering/training/src/make.sh.

Then compile:

./Scalable-Neural-Indoor-Scene-Rendering/training/src$ bash make.sh

To train a single tile, run:

python train.py -c {config_file} -t {tileIdx} -g {gpu_idx}

To train a group of tiles, first create a file group.txt as follows:

tileIdx1

tileIdx2

...

tileIdxN

Then, run:

python train.py -c {config_file} -ts group.txt -g {gpu_idx}
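When several GPUs are available, one way to use the group mode is to split the tile indices into one group file per GPU and start one `train.py` run per file. The sketch below only prepares the group files and the matching commands. It assumes one tile index per line in each group file and one training process per GPU. The helper names, the `group_gpu{N}.txt` file names, and the round-robin split are illustrative assumptions, not part of this repository. Only the `-c`, `-ts`, and `-g` flags come from the commands above.

```python
import shlex
from pathlib import Path


def split_round_robin(tile_indices, num_gpus):
    """Assign tile indices to GPUs in round-robin order."""
    groups = [[] for _ in range(num_gpus)]
    for position, tile_idx in enumerate(tile_indices):
        groups[position % num_gpus].append(tile_idx)
    return groups


def write_group_file(path, tile_indices):
    """Write one tile index per line (assumed group.txt layout)."""
    Path(path).write_text("".join(f"{idx}\n" for idx in tile_indices))


def build_train_command(config_file, group_file, gpu_idx):
    """Build the argument list for one group-training run."""
    return ["python", "train.py", "-c", str(config_file),
            "-ts", str(group_file), "-g", str(gpu_idx)]


def plan_runs(tile_indices, num_gpus, config_file, out_dir="."):
    """Write one group file per GPU and return the commands to launch."""
    commands = []
    groups = split_round_robin(tile_indices, num_gpus)
    for gpu_idx, group in enumerate(groups):
        # Hypothetical file name; any path accepted by -ts would do.
        group_file = Path(out_dir) / f"group_gpu{gpu_idx}.txt"
        write_group_file(group_file, group)
        commands.append(build_train_command(config_file, group_file, gpu_idx))
    return commands


def format_commands(commands):
    """Render the planned commands as shell lines for inspection."""
    return "\n".join(shlex.join(cmd) for cmd in commands)
```

For example, `print(format_commands(plan_runs([0, 1, 2, 3, 4], 2, "{config_file}")))` writes two group files in the current directory and prints the two commands, which can then be started in separate terminals or passed to `subprocess.Popen`.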