This is a PyTorch implementation of the EmotionCLIP paper:

@inproceedings{zhang2023learning,

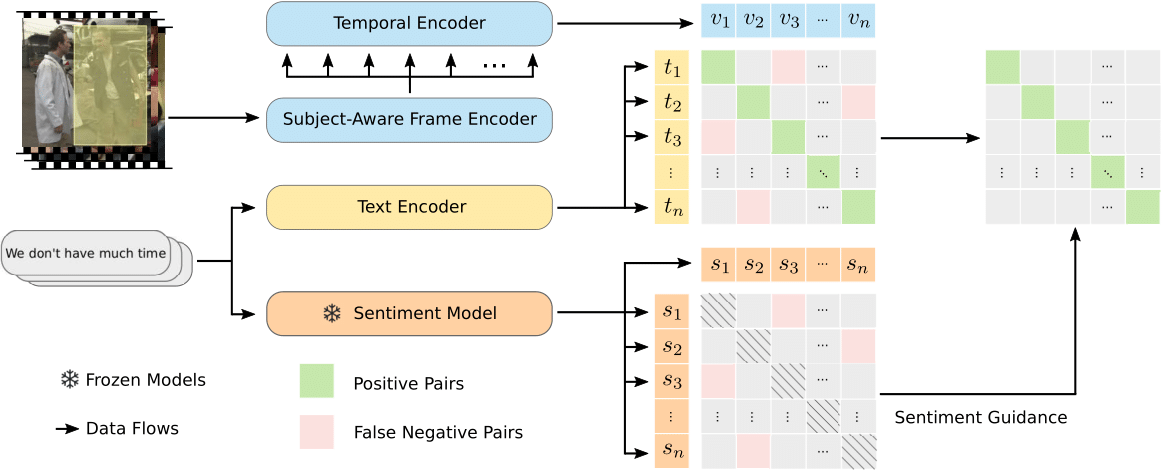

title={Learning Emotion Representations from Verbal and Nonverbal Communication},

author={Zhang, Sitao and Pan, Yimu and Wang, James Z},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={18993--19004},

year={2023}

}

The code is built with the following libraries:

- pytorch

- scikit-learn

- einops

- ftfy

- regex

- pandas

- orjson

- h5py

- wandb

- tqdm

- rich

- termcolor
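
Before running anything, it can help to confirm that the libraries listed above are importable. The following is a minimal sketch and not part of the repository: the helper name `missing_modules` is hypothetical, and the module list assumes the usual import names, two of which differ from the package names (`torch` for pytorch and `sklearn` for scikit-learn).

```python
from importlib.util import find_spec

# Import names for the libraries listed above (an assumption based on how
# these packages are normally imported, not taken from the repository).
REQUIRED_MODULES = [
    "torch",      # pytorch
    "sklearn",    # scikit-learn
    "einops",
    "ftfy",
    "regex",
    "pandas",
    "orjson",
    "h5py",
    "wandb",
    "tqdm",
    "rich",
    "termcolor",
]


def missing_modules(modules=REQUIRED_MODULES):
    """Return the names in `modules` that cannot be found in this environment."""
    return [name for name in modules if find_spec(name) is None]


missing = missing_modules()
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All listed dependencies are importable.")
```

The check only looks up each top-level module without importing it, so it says nothing about whether the installed versions are compatible with the code.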

Extra setup is required for data preprocessing. Please refer to preprocessing.

The pre-trained EmotionCLIP model can be downloaded here. We follow the linear-probe evaluation protocol employed in CLIP. To evaluate the pre-trained model on a specific dataset, run:

    python linear_eval.py \
        --dataset <dataset_name> \
        --ckpt-path <path_to_the_pretrained_model>
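
For readers unfamiliar with linear probing, the idea is to freeze the encoder, extract one feature vector per sample, and fit a linear classifier on those features. The sketch below illustrates this with scikit-learn's `LogisticRegression`. It is an illustration under stated assumptions, not the code in `linear_eval.py`: the function names `linear_probe` and `make_fake_features` are hypothetical, the regularization strength `c` is an arbitrary default, and the random features stand in for real encoder outputs.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def linear_probe(train_x, train_y, test_x, test_y, c=1.0, max_iter=1000):
    """Fit a logistic-regression classifier on frozen features.

    Returns the accuracy on the held-out features. The value of `c`
    (inverse regularization strength) is an assumption; in practice it
    is usually tuned on a validation split.
    """
    clf = LogisticRegression(C=c, max_iter=max_iter)
    clf.fit(train_x, train_y)
    return float(clf.score(test_x, test_y))


def make_fake_features(n, dim, n_classes, rng):
    """Generate synthetic features as a placeholder for encoder outputs."""
    labels = rng.integers(0, n_classes, size=n)
    # One well-separated center per class, plus unit Gaussian noise.
    centers = np.eye(n_classes, dim) * 5.0
    feats = centers[labels] + rng.normal(size=(n, dim))
    return feats, labels


rng = np.random.default_rng(0)
train_x, train_y = make_fake_features(300, 16, 3, rng)
test_x, test_y = make_fake_features(100, 16, 3, rng)
accuracy = linear_probe(train_x, train_y, test_x, test_y)
print(f"linear-probe accuracy: {accuracy:.3f}")
```

In a real evaluation, `train_x` and `test_x` would be features produced by the frozen pre-trained model on the training and test splits of the chosen dataset.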

We use the weights provided by OpenCLIP as the starting point for our training. Please download the weights here and put them under src/pretrained.

To train on the YouTube video dataset with the default settings, run:

    python main.py \
        --video-path <path_to_the_video_frames_folder> \
        --caption-path <path_to_the_video_caption_folder> \
        --sentiment-path <path_to_the_sentiment_logits_file> \
        --index-path <path_to_the_index_file>