👋 Welcome to the Support Repository for the DeepLearningAI Event: Building with Instruction-Tuned LLMs: A Step-by-Step Guide

Here is a collection of resources you can use to fine-tune your LLMs and build a few simple LLM-powered applications!

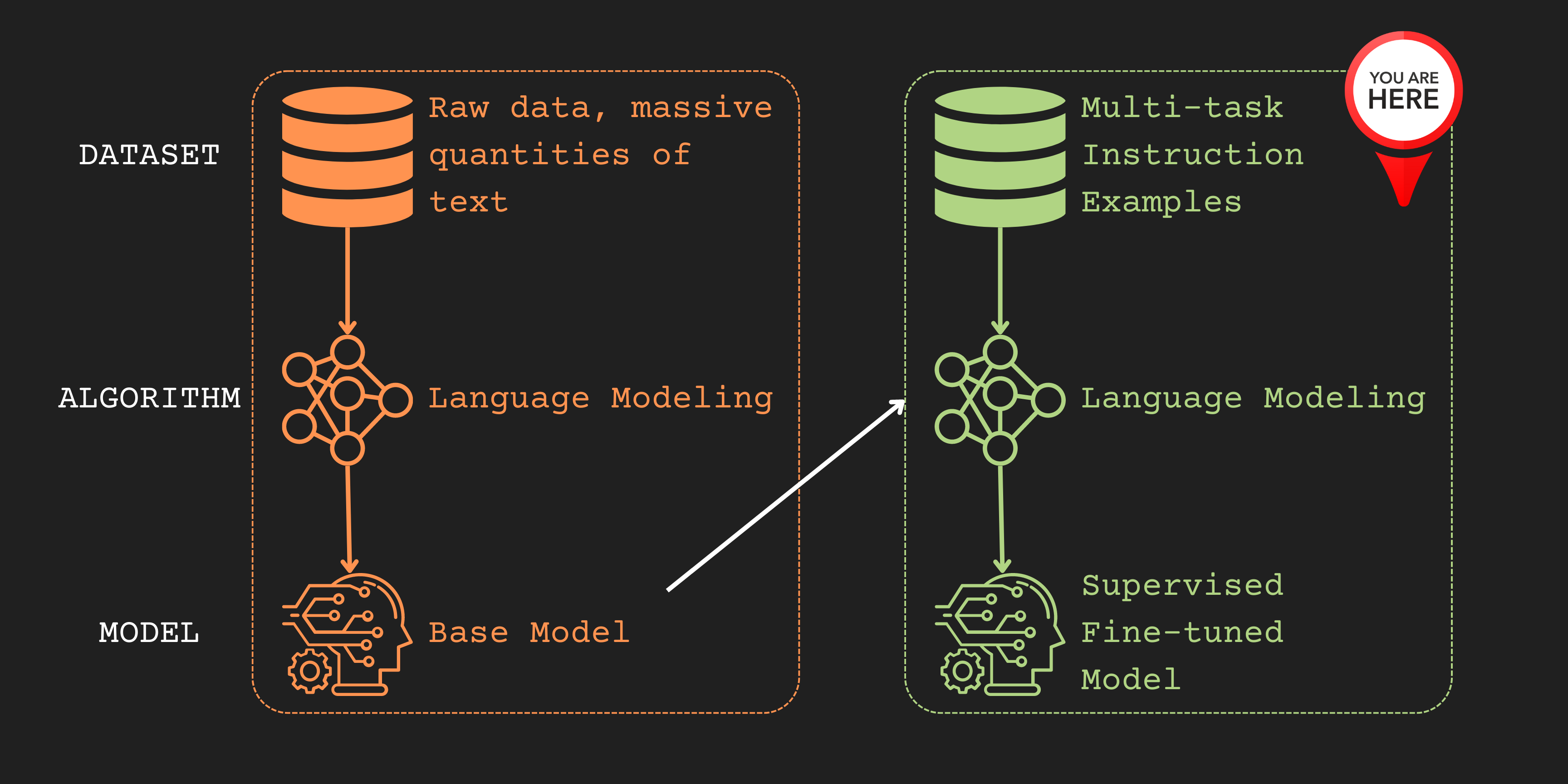

🪡 Fine-tuning Approaches:

Instruct-tuning OpenLM's OpenLLaMA on the Dolly-15k Dataset Notebooks:

| Notebook | Purpose | Link |
| --- | --- | --- |
| Instruct-tuning Leveraging QLoRA | Supervised fine-tuning! | Here |
| Instruct-tuning Leveraging Lit-LLaMA | Using Lightning-AI's Lit-LLaMA framework for supervised fine-tuning | Here |
| Natural Language to SQL fine-tuning using Lit-LLaMA | Using Lightning-AI's Lit-LLaMA framework for supervised fine-tuning on the Natural Language to SQL task | Here |

MarketMail Using BLOOMz Resources:

| Notebook | Purpose | Link |
| --- | --- | --- |
| BLOOMz-LoRA Unsupervised Fine-tuning Notebook | Fine-tuning BLOOMz with an unsupervised approach using synthetic data! | Here |
| Creating Synthetic Data with GPT-3.5-turbo | Generate data for your model! | Here |

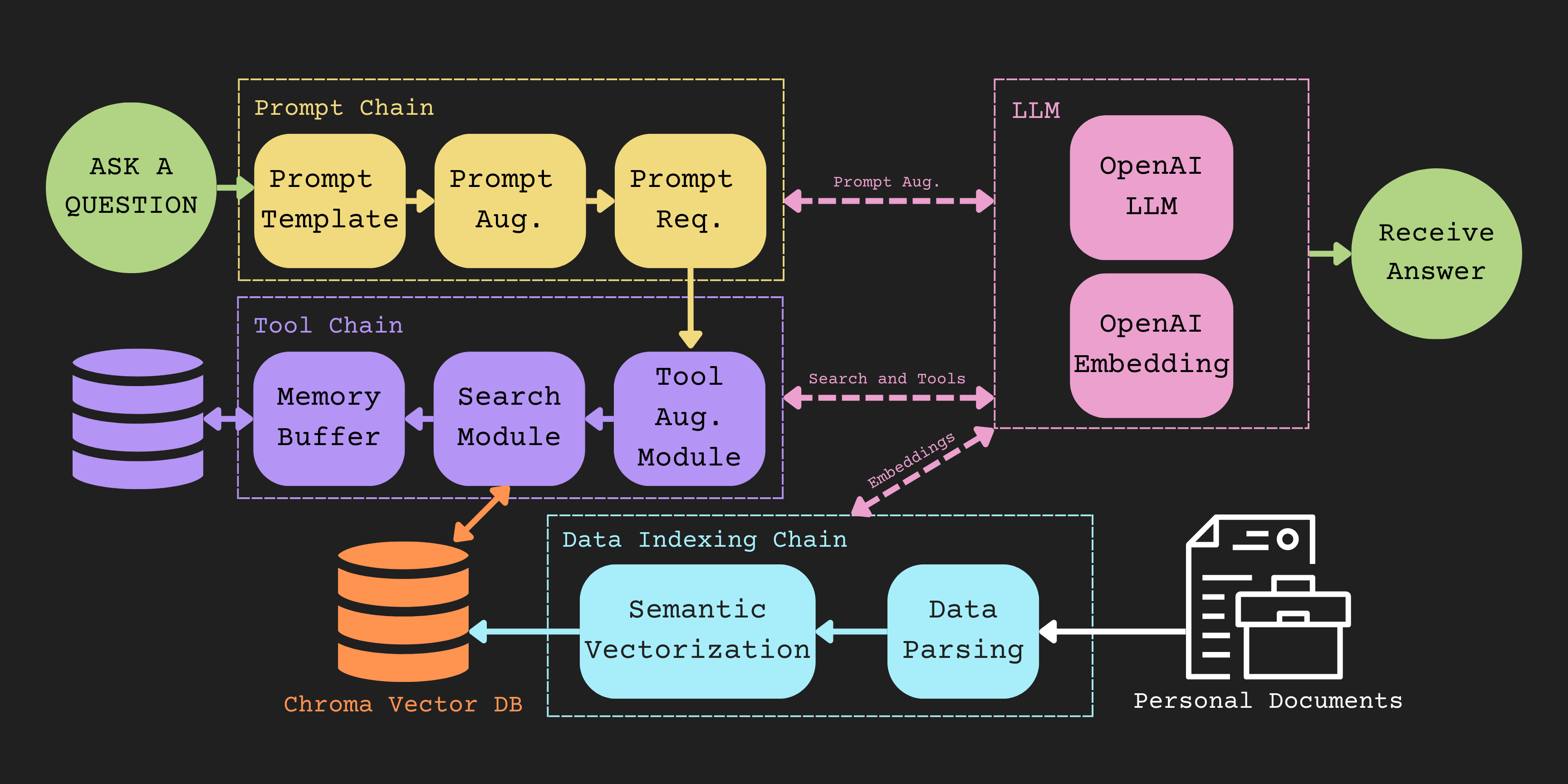

🏗️ Converting Models into Applications:

| Notebook | Purpose | Link |
| --- | --- | --- |
| Open-source LangChain Example | Leveraging LangChain to build a Hugging Face 🤗 powered application | Here |
| OpenAI LangChain Example | Building an OpenAI-powered application | Here |

| Demo | Info | Link |
| --- | --- | --- |
| Instruct-tuned Chatbot Leveraging QLoRA | This demo is currently powered by the Guanaco model; it will be updated once our instruct-tuned model finishes training! | Here |
| TalkToMyDoc | Query the first Hitchhiker's Guide book! | Here |