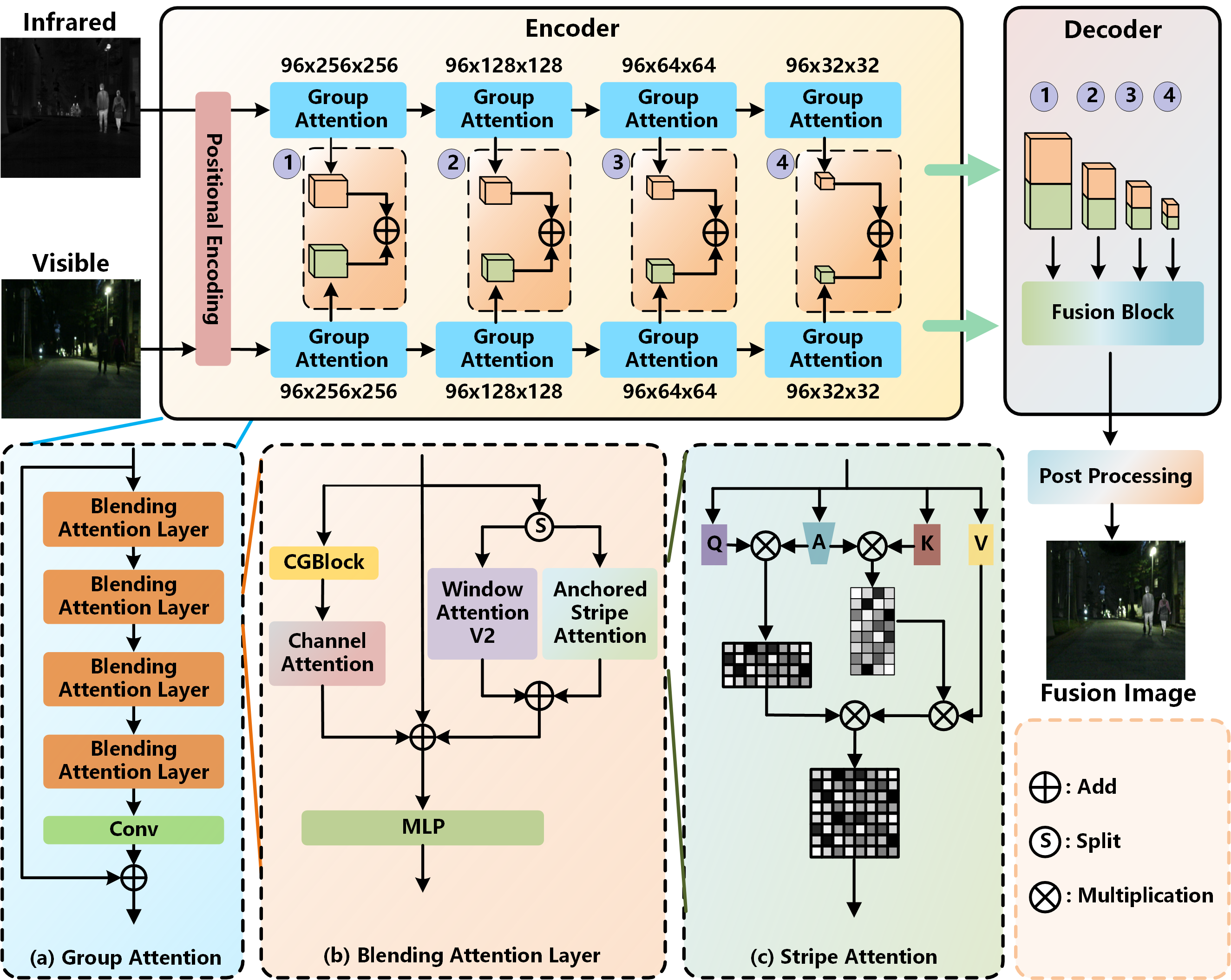

GTMFuse: Group-attention transformer-driven multiscale dense feature-enhanced network for infrared and visible image fusion

⭐ The code has been fully released ⭐

⭐ Our article ⭐

If our code is helpful to you, please cite:

@article{mei2024gtmfuse,
  title={GTMFuse: Group-attention transformer-driven multiscale dense feature-enhanced network for infrared and visible image fusion},
  author={Mei, Liye and Hu, Xinglong and Ye, Zhaoyi and Tang, Linfeng and Wang, Ying and Li, Di and Liu, Yan and Hao, Xin and Lei, Cheng and Xu, Chuan and others},
  journal={Knowledge-Based Systems},
  volume={293},
  pages={111658},
  year={2024},
  publisher={Elsevier}
}

To train the model, run `python train.py`.

To test with the pre-trained model:

- Download the pre-trained checkpoint (best_model.pth) and put it in `./checkpoints`.

- Run `python test.py`.
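The test steps rely on the checkpoint being in place before `test.py` runs. The following is a minimal sketch of a pre-flight check, assuming the file ends up at `./checkpoints/best_model.pth`; the helper name `checkpoint_ready` is hypothetical and not part of this repository.

```python
from pathlib import Path


def checkpoint_ready(path="./checkpoints/best_model.pth"):
    """Return True if the checkpoint file exists and is non-empty.

    The default path is an assumption based on the README instructions;
    adjust it if test.py expects a different location.
    """
    p = Path(path)
    return p.is_file() and p.stat().st_size > 0


if __name__ == "__main__":
    if checkpoint_ready():
        print("Checkpoint found; you can now run: python test.py")
    else:
        print("Checkpoint missing; download best_model.pth into ./checkpoints first.")
```

Running this before `python test.py` catches a missing or empty download early; it does not verify that the weights themselves are valid.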

Download the HBUT dataset from HBUT.

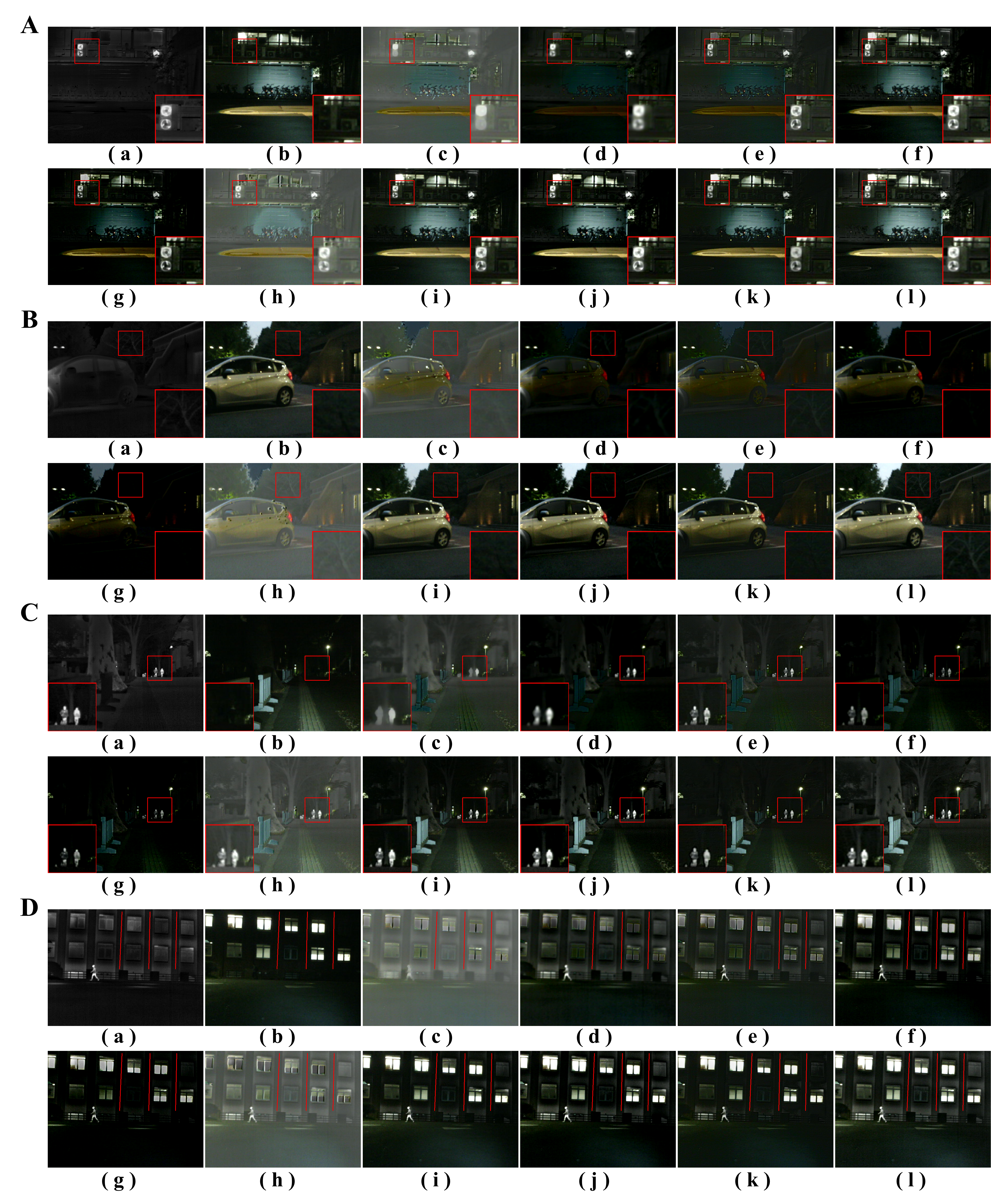

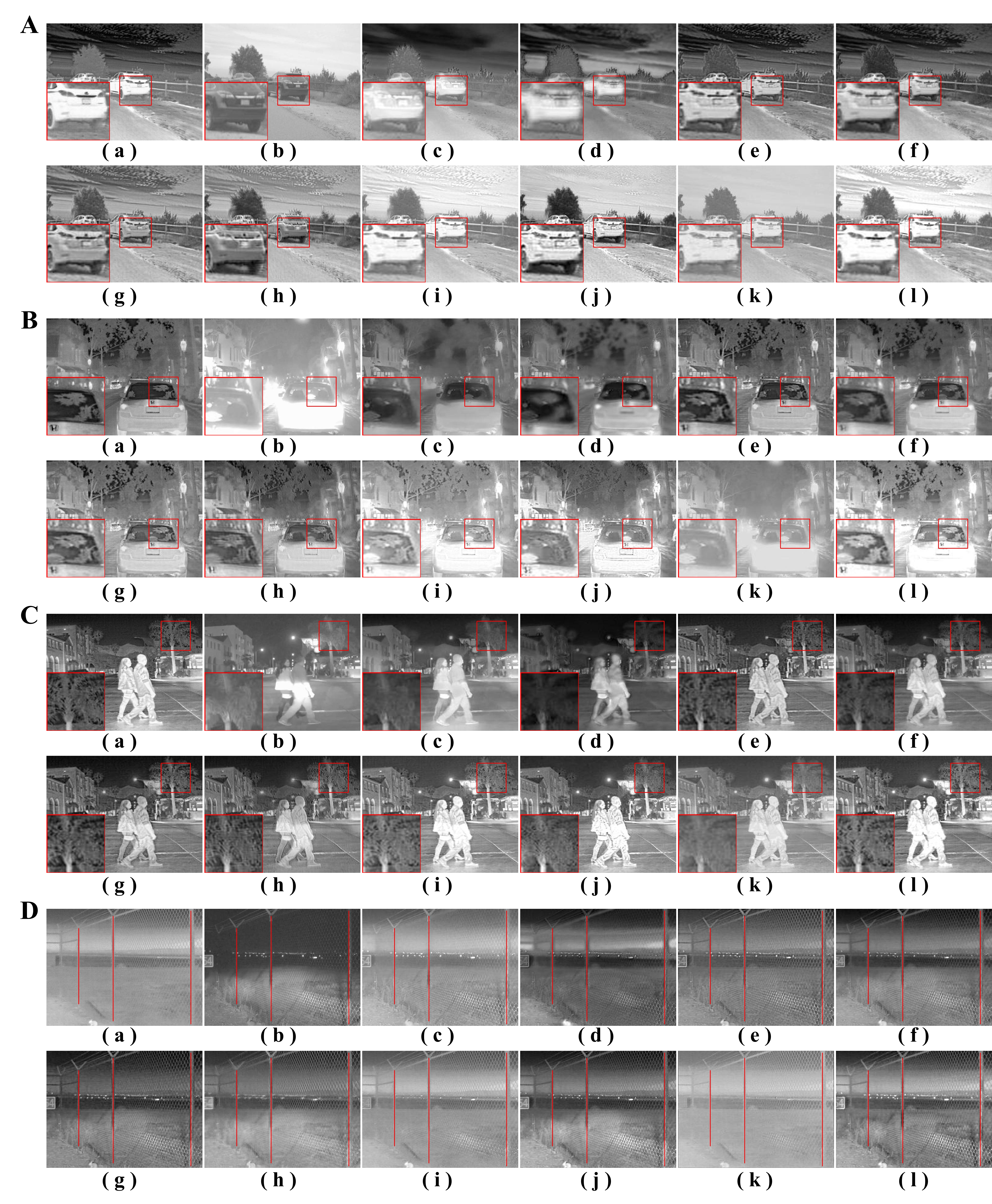

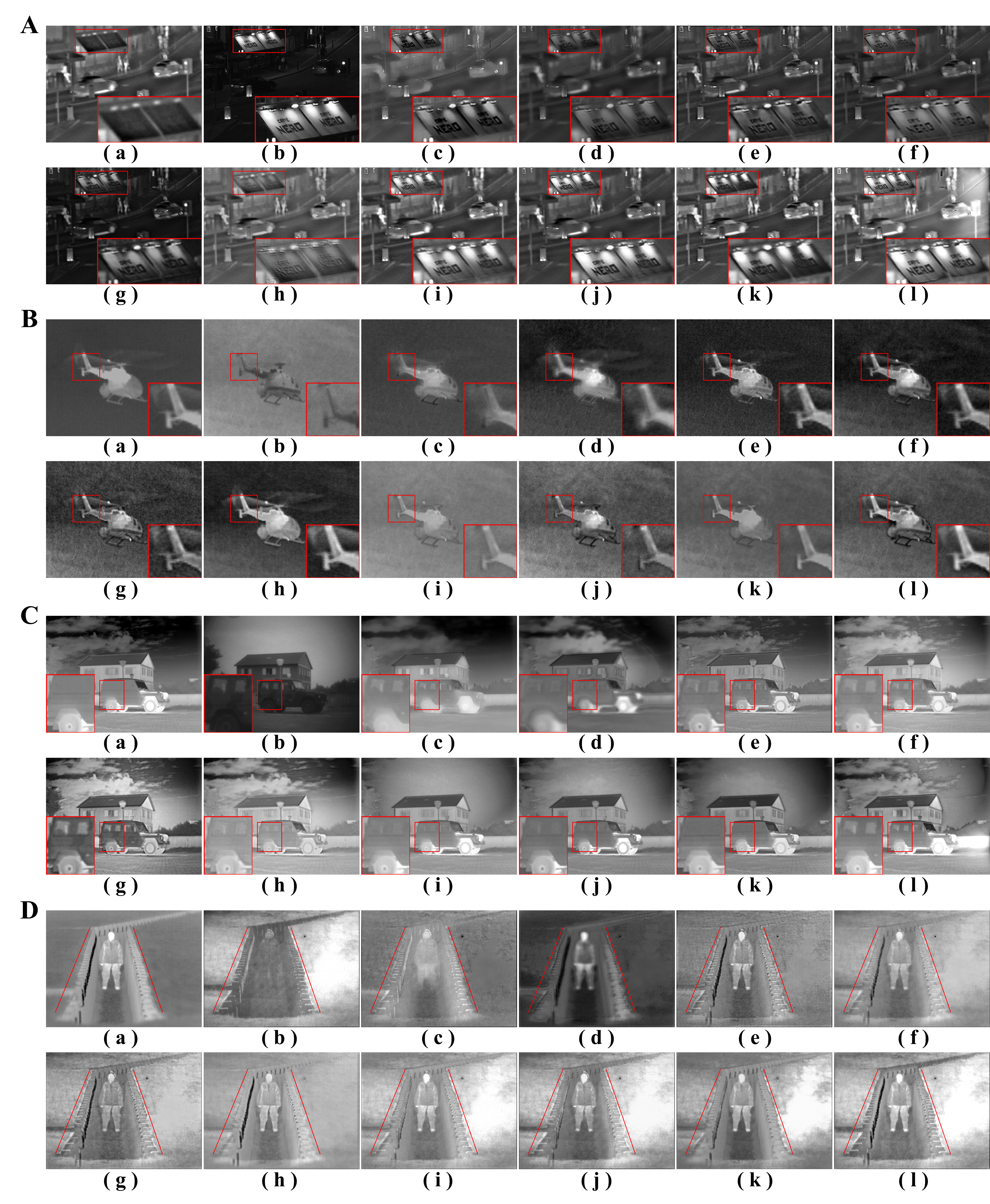

- Four representative images of the MSRS test set. In alphabetical order of the panel labels, they are the infrared image, visible image, GTF, FusionGAN, SDNet, RFN-Nest, U2Fusion, LRRNet, SwinFusion, CDDFuse, DATFuse, and GTMFuse.

- Four representative images of the RoadScene test set.

- Four representative images of the RoadScene test set.

If you have any questions, please contact me by email (hux18943@gmail.com).