End-to-End Optimization of LiDAR Beam Configuration

arXiv | IEEE Xplore | Video

This repository is the official implementation of the paper:

End-to-End Optimization of LiDAR Beam Configuration for 3D Object Detection and Localization

Niclas Vödisch, Ozan Unal, Ke Li, Luc Van Gool, and Dengxin Dai.

IEEE Robotics and Automation Letters (RA-L), vol. 7, no. 2, pp. 2242-2249, April 2022

If you find our work useful, please consider citing our paper:

@ARTICLE{Voedisch_2022_RAL,
  author={Vödisch, Niclas and Unal, Ozan and Li, Ke and Van Gool, Luc and Dai, Dengxin},
  journal={IEEE Robotics and Automation Letters},
  title={End-to-End Optimization of LiDAR Beam Configuration for 3D Object Detection and Localization},
  year={2022},
  volume={7},
  number={2},
  pages={2242-2249},
  doi={10.1109/LRA.2022.3142738}}

📔 Abstract

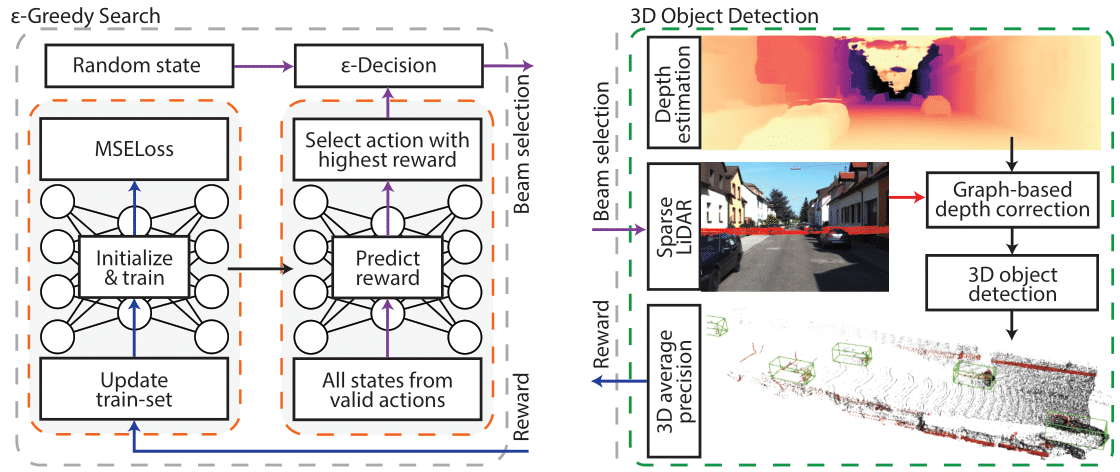

Pre-determined beam configurations of low-resolution LiDARs are task-agnostic, hence simply using them can result in non-optimal performance. In this work, we propose to optimize the beam distribution for a given target task via a reinforcement learning-based learning-to-optimize (RL-L2O) framework. We design our method in an end-to-end fashion, leveraging the final performance of the task to guide the search process. Due to the simplicity of our approach, our work can be integrated with any LiDAR-based application as a simple drop-in module. In this repository, we provide the code for the exemplary task of 3D object detection.
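The abstract describes the search only at a high level. As a rough illustration of the idea (this is not the code in rl_l2o/eps_greedy_search.py, whose details may differ), here is a generic epsilon-greedy loop that selects a fixed number of beams and is guided only by a task reward. The function name, the per-beam value estimates, and the toy reward are assumptions made for this sketch.

```python
import random
from typing import Callable, FrozenSet, List, Tuple

BeamConfig = FrozenSet[int]


def eps_greedy_beam_search(
    num_beams: int,
    budget: int,
    reward_fn: Callable[[BeamConfig], float],
    iterations: int = 50,
    epsilon: float = 0.2,
    seed: int = 0,
) -> Tuple[BeamConfig, float]:
    """Generic epsilon-greedy search for `budget` beams out of `num_beams` (sketch).

    Each beam keeps a running mean of the rewards of all evaluated configurations
    that contained it. With probability `epsilon` a random configuration is tried
    (exploration); otherwise the `budget` beams with the highest estimates are
    selected (exploitation).
    """
    rng = random.Random(seed)
    value: List[float] = [0.0] * num_beams  # running mean reward per beam
    count: List[int] = [0] * num_beams      # how often each beam was evaluated
    best_cfg: BeamConfig = frozenset()
    best_reward = float("-inf")

    for step in range(iterations):
        if step == 0 or rng.random() < epsilon:
            # Explore: sample `budget` distinct beams uniformly at random.
            cfg = frozenset(rng.sample(range(num_beams), budget))
        else:
            # Exploit: pick the beams with the highest estimated value.
            ranked = sorted(range(num_beams), key=lambda b: value[b], reverse=True)
            cfg = frozenset(ranked[:budget])

        reward = reward_fn(cfg)  # in practice: task performance using only `cfg`
        for b in cfg:
            count[b] += 1
            value[b] += (reward - value[b]) / count[b]  # incremental mean update

        if reward > best_reward:
            best_cfg, best_reward = cfg, reward

    return best_cfg, best_reward


if __name__ == "__main__":
    # Toy task: 16 beams, keep 4; the made-up reward counts hits on a target set.
    target = {1, 5, 9, 13}
    cfg, reward = eps_greedy_beam_search(
        16, 4, lambda c: float(len(c & target)), iterations=200, epsilon=0.3
    )
    print(sorted(cfg), reward)
```

In a real setting every call to `reward_fn` means training and evaluating a model, so caching rewards per configuration (as the repository does with its intermediate results) is what keeps such a loop affordable.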

🏗️ Setup

To clone this repository and all submodules, run:

git clone --recurse-submodules -j8 git@github.com:vniclas/lidar_beam_selection.git

⚙️ Installation

To install this code, please follow the steps below:

- Create a conda environment:
  conda create -n beam_selection python=3.8
- Activate the environment:
  conda activate beam_selection
- Install dependencies:
  pip install -r requirements.txt
- Install cudatoolkit (adjust to your CUDA version):
  conda install cudnn cudatoolkit=10.2
- Install spconv (adjust to your CUDA version):
  pip install spconv-cu102
- Install OpenPCDet (linked as submodule):
  cd third_party/OpenPCDet && python setup.py develop && cd ../..
- Install Pseudo-LiDAR++ (linked as submodule):
  pip install -r third_party/Pseudo_Lidar_V2/requirements.txt
  Then pin Pillow to avoid runtime warnings:
  pip install pillow==8.3.2
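Both CUDA-dependent steps above encode the same version, once as `cudatoolkit=10.2` and once in the package name `spconv-cu102`. The small helper below derives both commands from one version string so they stay consistent. It is a sketch: `install_commands` and `detect_torch_cuda` are hypothetical names, and spconv only publishes wheels for certain CUDA versions, so check the spconv release notes before installing.

```python
from typing import Optional, Tuple


def install_commands(cuda_version: str) -> Tuple[str, str]:
    """Build the cudatoolkit and spconv install commands for a CUDA version like '10.2'."""
    # Only major and minor matter; a patch level such as '11.3.1' is ignored.
    major, minor = (int(part) for part in cuda_version.strip().split(".")[:2])
    conda_cmd = f"conda install cudnn cudatoolkit={major}.{minor}"
    pip_cmd = f"pip install spconv-cu{major}{minor}"  # e.g. spconv-cu102 for 10.2
    return conda_cmd, pip_cmd


def detect_torch_cuda() -> Optional[str]:
    """Return the CUDA version PyTorch was built with, or None if unavailable."""
    try:
        import torch
    except ImportError:
        return None
    return torch.version.cuda  # None for CPU-only builds


if __name__ == "__main__":
    version = detect_torch_cuda() or "10.2"  # fall back to the version used above
    for cmd in install_commands(version):
        print(cmd)
```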

💾 Data Preparation

- Download the KITTI 3D Object Detection dataset and extract the files:
  - Left color images: image_2
  - Right color images: image_3
  - Velodyne point clouds: velodyne
  - Camera calibration matrices: calib
  - Training labels: label_2

- Predict the depth maps:
  - Download the pretrained model (trained on training+validation).
  - Generate the data:
    cd third_party/Pseudo_Lidar_V2
    python ./src/main.py -c src/configs/sdn_kitti_train.config \
        --resume PATH_TO_CHECKPOINTS/sdn_kitti_object_trainval.pth --datapath PATH_TO_KITTI/training/ \
        --data_list ./split/trainval.txt --generate_depth_map --data_tag trainval \
        --save_path PATH_TO_DATA/sdn_kitti_train_set
    Note: Please adjust the paths PATH_TO_CHECKPOINTS, PATH_TO_KITTI, and PATH_TO_DATA to match your setup.
- Rename training/velodyne to training/velodyne_original in PATH_TO_KITTI.
- Symlink the KITTI folders to PCDet (from the repository root):
  ln -s PATH_TO_KITTI/training third_party/OpenPCDet/data/kitti/training
  ln -s PATH_TO_KITTI/testing third_party/OpenPCDet/data/kitti/testing
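The folder check, rename, and symlink steps above (run after the depth maps have been generated) can also be scripted. This is a minimal pathlib sketch assuming the standard KITTI layout listed above; `prepare_kitti` and `REQUIRED_DIRS` are hypothetical names, and the function is written so that re-running it skips steps that are already done.

```python
import tempfile
from pathlib import Path
from typing import List

# Folders this README asks you to extract into PATH_TO_KITTI/training.
REQUIRED_DIRS = ("image_2", "image_3", "calib", "label_2")


def prepare_kitti(kitti_root: Path, pcdet_kitti_dir: Path) -> List[Path]:
    """Check the KITTI layout, rename velodyne, and symlink the splits into OpenPCDet.

    Safe to call repeatedly: finished steps are skipped. Returns the symlinks.
    """
    training = kitti_root / "training"
    velodyne = training / "velodyne"
    original = training / "velodyne_original"

    missing = [d for d in REQUIRED_DIRS if not (training / d).is_dir()]
    if not (velodyne.is_dir() or original.is_dir()):
        missing.append("velodyne")
    if missing:
        raise FileNotFoundError(f"missing folders in {training}: {missing}")

    # Rename training/velodyne -> training/velodyne_original (only once).
    if velodyne.is_dir() and not original.exists():
        velodyne.rename(original)

    # Equivalent of `ln -s PATH_TO_KITTI/<split> .../data/kitti/<split>`.
    pcdet_kitti_dir.mkdir(parents=True, exist_ok=True)
    links = []
    for split in ("training", "testing"):
        target, link = kitti_root / split, pcdet_kitti_dir / split
        if not target.is_dir():
            continue  # e.g. the testing split was not downloaded
        if not (link.exists() or link.is_symlink()):
            link.symlink_to(target.resolve(), target_is_directory=True)
        links.append(link)
    return links


if __name__ == "__main__":
    # Dry run on a fake directory tree; with real data, call e.g.
    # prepare_kitti(Path("PATH_TO_KITTI"), Path("third_party/OpenPCDet/data/kitti"))
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp) / "kitti"
        for name in REQUIRED_DIRS + ("velodyne",):
            (root / "training" / name).mkdir(parents=True)
        print(prepare_kitti(root, Path(tmp) / "pcdet" / "data" / "kitti"))
```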

🏃 Running 3D Object Detection

- Adjust the paths in main.py. Further available parameters are listed in rl_l2o/eps_greedy_search.py and can be added in main.py.
- Adjust the number of epochs of the 3D object detector (we used 40 epochs) in:
  - object_detection/compute_reward.py --> above the class definition
  - third_party/OpenPCDet/tools/cfgs/kitti_models/pointpillar.yaml --> search for NUM_EPOCHS

Note: If you use another detector, modify the respective configuration file.

- Adjust the training scripts of the detector you use to match your setup, e.g., object_detection/scripts/train_pointpillar.sh.
- Initiate the search:

python main.py

Note: Since we keep intermediate results so that they can be re-used in later iterations, running the script will create a large amount of data in the output_dir specified in main.py. You may want to delete some of these folders manually from time to time.
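For the NUM_EPOCHS change in the OpenPCDet config, a plain text substitution keeps the YAML comments and layout intact, which a load-and-dump round trip through a YAML library would not. The sketch below assumes the config contains exactly one `NUM_EPOCHS: <int>` line (OpenPCDet's KITTI configs usually place it under `OPTIMIZATION`); `set_num_epochs` and `patch_config` are hypothetical helpers, and the epoch value in object_detection/compute_reward.py still has to be edited by hand.

```python
import re
from pathlib import Path

# Matches a line such as "    NUM_EPOCHS: 80" and keeps its indentation.
_NUM_EPOCHS = re.compile(r"^([ \t]*NUM_EPOCHS:[ \t]*)\d+", re.MULTILINE)


def set_num_epochs(cfg_text: str, epochs: int) -> str:
    """Return `cfg_text` with the single NUM_EPOCHS value replaced by `epochs`."""
    new_text, n = _NUM_EPOCHS.subn(lambda m: f"{m.group(1)}{epochs}", cfg_text)
    if n != 1:
        raise ValueError(f"expected exactly one NUM_EPOCHS entry, found {n}")
    return new_text


def patch_config(path: Path, epochs: int = 40) -> None:
    """Rewrite the config file in place; comments and key order are preserved."""
    path.write_text(set_num_epochs(path.read_text(), epochs))


if __name__ == "__main__":
    cfg = Path("third_party/OpenPCDet/tools/cfgs/kitti_models/pointpillar.yaml")
    if cfg.is_file():
        patch_config(cfg, epochs=40)  # 40 epochs, as used in this README
        print(f"patched {cfg}")
```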

🔧 Adding more Tasks

Due to the design of the RL-L2O framework, it can be used as a simple drop-in module for many LiDAR applications.

To apply the search algorithm to another task, implement a custom RewardComputer; see object_detection/compute_reward.py for an example.
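The actual interface is defined in object_detection/compute_reward.py and is not reproduced here. The following is only a sketch of the general shape such a component might take, assuming a reward computer maps a beam configuration to a scalar where higher is better. `RewardComputerSketch`, `evaluate`, and the toy elevation-coverage reward are hypothetical and stand in for training and evaluating a real downstream model.

```python
from abc import ABC, abstractmethod
from typing import Dict, Iterable, Sequence, Tuple

Beams = Tuple[int, ...]


class RewardComputerSketch(ABC):
    """Hypothetical stand-in for the repository's RewardComputer interface."""

    def __init__(self) -> None:
        self._cache: Dict[Beams, float] = {}

    def __call__(self, beams: Iterable[int]) -> float:
        # Normalize the configuration so {3, 1} and [1, 3] share one cache entry;
        # re-using results matters when every reward requires training a model.
        key = tuple(sorted(set(beams)))
        if key not in self._cache:
            self._cache[key] = self.evaluate(key)
        return self._cache[key]

    @abstractmethod
    def evaluate(self, beams: Beams) -> float:
        """Train and evaluate the downstream model using only `beams`; higher is better."""


class ToyElevationCoverage(RewardComputerSketch):
    """Toy task: reward evenly spread beams (negative largest elevation gap)."""

    def __init__(self, elevations: Sequence[float]) -> None:
        super().__init__()
        self.elevations = elevations
        self.num_evaluations = 0

    def evaluate(self, beams: Beams) -> float:
        self.num_evaluations += 1
        angles = sorted(self.elevations[b] for b in beams)
        gaps = [hi - lo for lo, hi in zip(angles, angles[1:])]
        return -max(gaps) if gaps else float("-inf")


if __name__ == "__main__":
    reward = ToyElevationCoverage([float(i) for i in range(8)])  # made-up 1 degree spacing
    print(reward([0, 2, 4, 6]), reward([6, 4, 2, 0]), reward.num_evaluations)
```

Because instances are callable on any iterable of beam indices, a reward computer of this shape can be passed directly as the reward function of a search loop.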

Additionally, you will have to prepare a set of features for each LiDAR beam.

For the KITTI 3D Object Detection dataset, we provide the features presented in the paper in object_detection/data/features_pcl.pkl.
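The internal structure of features_pcl.pkl is not documented in this README, so load it and inspect it before mirroring its layout for a new task. As a sketch only, the code below stores one feature vector per beam in a dictionary and pickles it; the choice of features (elevation angle and relative point count) and the helper names `build_beam_features`, `save_features`, and `load_features` are hypothetical.

```python
import pickle
import tempfile
from pathlib import Path
from typing import Dict, List, Sequence


def build_beam_features(
    points_per_beam: Sequence[int], elevations: Sequence[float]
) -> Dict[int, List[float]]:
    """Map each beam index to a small feature vector (hypothetical feature choice)."""
    if len(points_per_beam) != len(elevations):
        raise ValueError("need one point count and one elevation per beam")
    peak = max(points_per_beam, default=0) or 1  # avoid dividing by zero
    return {
        beam: [float(elev), count / peak]  # [elevation in degrees, relative point count]
        for beam, (count, elev) in enumerate(zip(points_per_beam, elevations))
    }


def save_features(features: Dict[int, List[float]], path: Path) -> None:
    with open(path, "wb") as f:
        pickle.dump(features, f)


def load_features(path: Path) -> Dict[int, List[float]]:
    # Only unpickle files you trust: pickle can execute arbitrary code on load.
    with open(path, "rb") as f:
        return pickle.load(f)


if __name__ == "__main__":
    feats = build_beam_features([100, 50, 0], [2.0, 1.5, 1.0])
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "features_custom.pkl"
        save_features(feats, path)
        print(load_features(path) == feats)
```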

👩‍⚖️ License

This software is made available for non-commercial use under a Creative Commons Attribution-NonCommercial 4.0 International License. A summary of the license can be found on the Creative Commons website.