See the develop branch for what is coming in pyannote.audio 2.0:

- a much smaller and cleaner codebase

- Python-first API (the good old pyannote-audio CLI will still be available, though)

- multi-GPU and TPU training thanks to pytorch-lightning

- data augmentation with torch-audiomentations

- huggingface model hosting

- prodigy recipes for audio annotations

- online demo based on streamlit

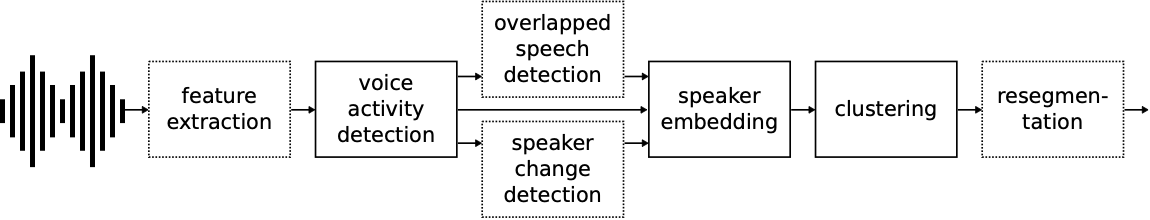

pyannote.audio is an open-source toolkit written in Python for speaker diarization. Based on the PyTorch machine learning framework, it provides a set of trainable end-to-end neural building blocks that can be combined and jointly optimized to build speaker diarization pipelines:

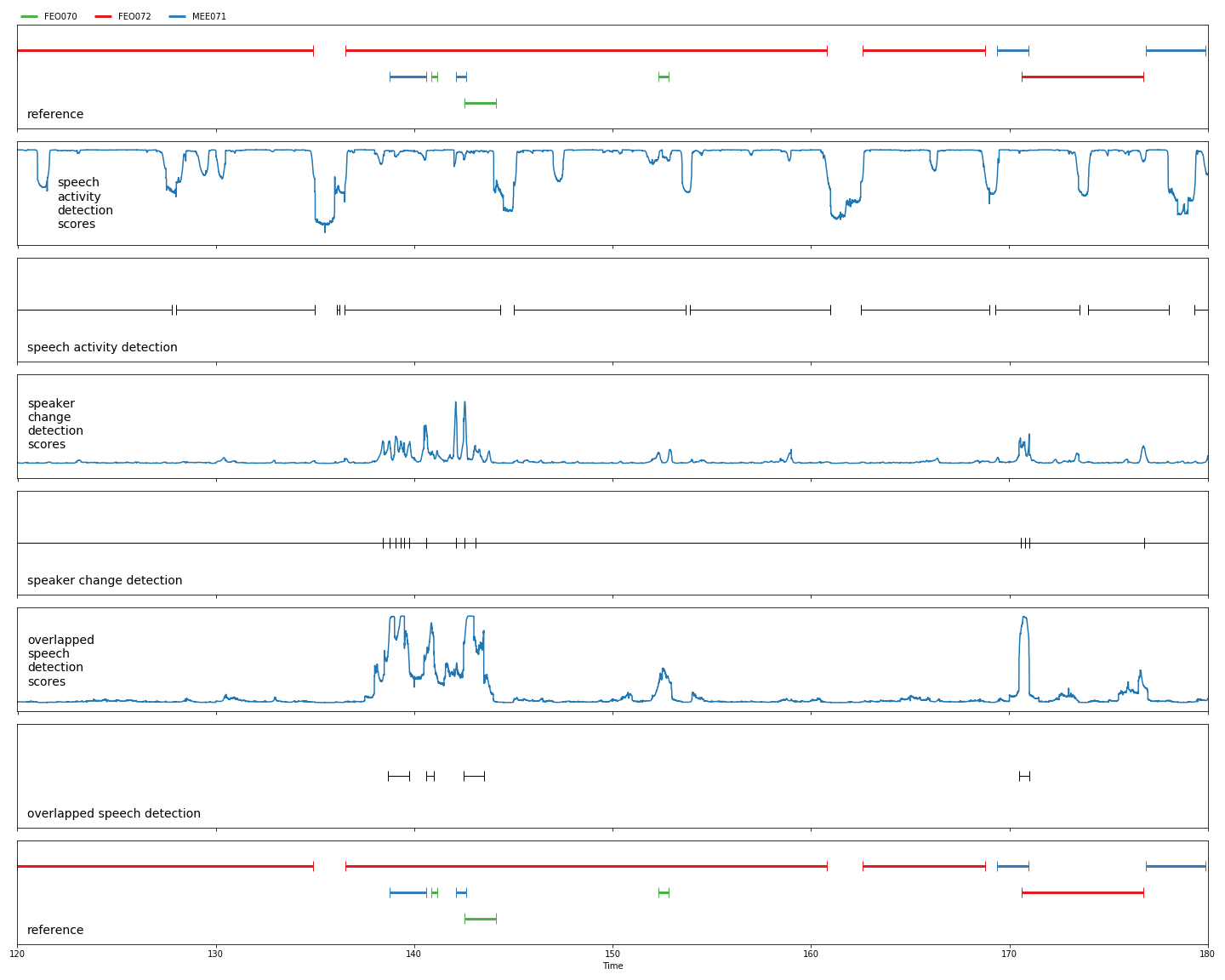
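To give a feel for how a building block feeds a pipeline, here is a toy sketch of one glue step: turning per-frame speech scores (such as those a voice activity detection model might output) into speech regions. The function name `binarize`, the threshold, and the frame duration are illustrative assumptions; this is not pyannote.audio's own implementation.

```python
def binarize(scores, threshold=0.5, frame_duration=0.01):
    """Turn per-frame speech scores into (start, end) regions, in seconds.

    Toy illustration only: `threshold` and `frame_duration` are assumed
    values, not defaults taken from pyannote.audio.
    """
    regions = []
    start = None  # index of the first frame of the current speech region
    for i, score in enumerate(scores):
        is_speech = score >= threshold
        if is_speech and start is None:
            # a speech region begins at this frame
            start = i
        elif not is_speech and start is not None:
            # the current speech region ends just before this frame
            regions.append((start * frame_duration, i * frame_duration))
            start = None
    if start is not None:
        # the signal ended while speech was still active
        regions.append((start * frame_duration, len(scores) * frame_duration))
    return regions
```

Calling `binarize(scores)` on a list of scores between 0 and 1 returns a list of `(start, end)` tuples that a later stage (for example, speaker embedding and clustering) could consume.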

pyannote.audio also comes with pretrained models covering a wide range of domains for voice activity detection, speaker change detection, overlapped speech detection, and speaker embedding.
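As a rough sketch of how a pretrained pipeline might be applied in the 1.x series, the snippet below loads one through `torch.hub`. The repository string, the `"dia"` entry-point name, and the `{"audio": ...}` input format are assumptions based on the 1.x release and may not resolve against the current state of the repository; `format_turn` and `diarize` are hypothetical helper names introduced here for illustration.

```python
def format_turn(start, end, speaker):
    """Render one speaker turn as a line of text."""
    return f"{start:.1f}s - {end:.1f}s: {speaker}"


def diarize(audio_path, pipeline_name="dia"):
    """Apply a pretrained diarization pipeline to one audio file.

    Assumption: "pyannote/pyannote-audio" exposes a torch.hub entry point
    called `pipeline_name`, as in the 1.x release. Calling this function
    downloads the model, so it needs network access.
    """
    # torch is imported lazily so that format_turn can be used without it
    import torch

    pipeline = torch.hub.load("pyannote/pyannote-audio", pipeline_name)
    diarization = pipeline({"audio": audio_path})
    # each track is a (segment, track identifier, speaker label) triple
    return [
        format_turn(turn.start, turn.end, speaker)
        for turn, _, speaker in diarization.itertracks(yield_label=True)
    ]
```

`diarize("/path/to/audio.wav")` would then return one formatted line per speaker turn.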

pyannote.audio only supports Python 3.7 (or later) on Linux and macOS. It might work on Windows, but there is no guarantee that it does, nor any plan to add official Windows support.
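If it helps, the requirement above can be checked before installing with a few lines of standard-library Python. This is only a convenience sketch; `is_supported` is a hypothetical helper, not something shipped with pyannote.audio.

```python
import platform
import sys


def is_supported(version_info=None, system=None):
    """Return True for Python 3.7 or later on Linux or macOS.

    Both arguments default to the running interpreter and operating system;
    they can be overridden to check another environment.
    """
    version_info = sys.version_info if version_info is None else version_info
    system = platform.system() if system is None else system
    # platform.system() reports macOS as "Darwin"
    return tuple(version_info[:2]) >= (3, 7) and system in {"Linux", "Darwin"}


if __name__ == "__main__":
    print("supported" if is_supported() else "not officially supported")
```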

The instructions below assume that PyTorch has already been installed as described at https://pytorch.org.

$ pip install pyannote.audio==1.1.1

- Use pretrained models and pipelines

- Prepare your own data

- Train models on your own data

- Tune pipelines on your own data

Until proper documentation is released, note that part of the API is described in this tutorial.

If you use pyannote.audio, please cite it as follows:

@inproceedings{Bredin2020,

Title = {{pyannote.audio: neural building blocks for speaker diarization}},

Author = {{Bredin}, Herv{\'e} and {Yin}, Ruiqing and {Coria}, Juan Manuel and {Gelly}, Gregory and {Korshunov}, Pavel and {Lavechin}, Marvin and {Fustes}, Diego and {Titeux}, Hadrien and {Bouaziz}, Wassim and {Gill}, Marie-Philippe},

Booktitle = {ICASSP 2020, IEEE International Conference on Acoustics, Speech, and Signal Processing},

Address = {Barcelona, Spain},

Month = {May},

Year = {2020},

}