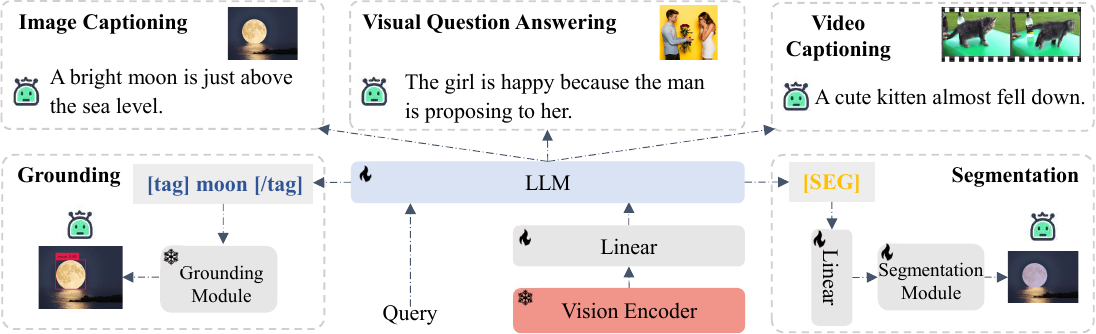

Multi-modal, multi-task LLM


Structure:

Examples

- Visual Understanding
  - Image Captioning
  - Video Captioning
  - Visual Question Answering (VQA)

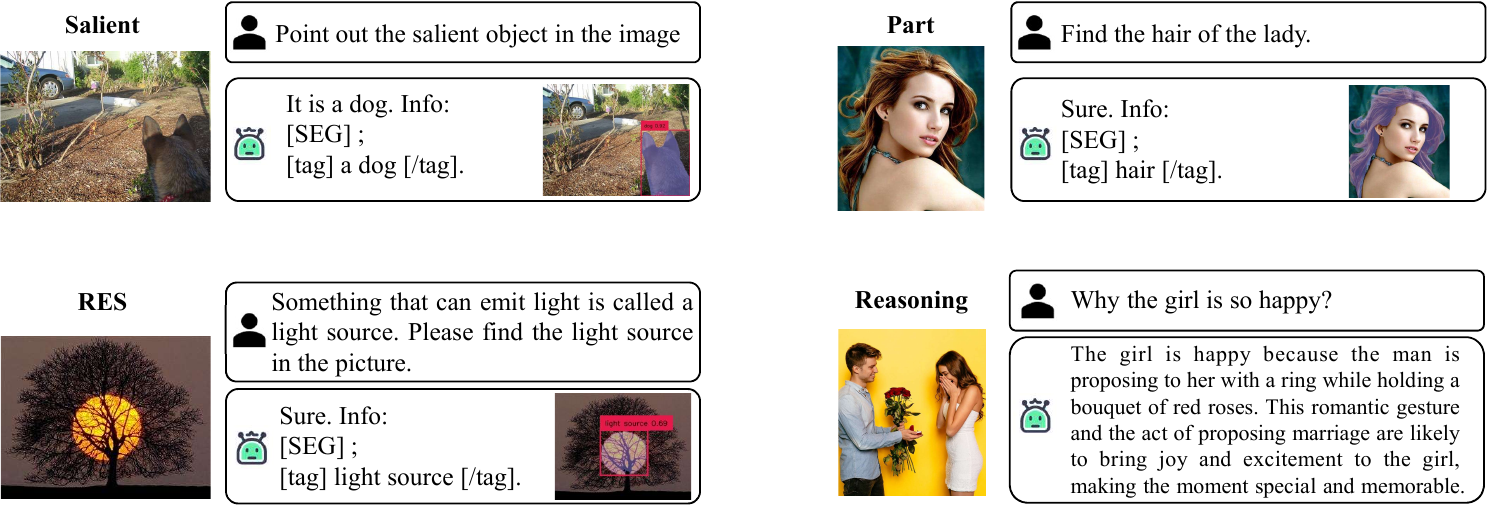

- Visual Segmentation
  - Referring Expression Segmentation (RES)
  - Salient Object Segmentation
  - Semantic Segmentation
- Visual Grounding
  - Referring Expression Comprehension (REC)

To get a local copy up and running, follow the steps below.

Run the following commands in terminal:

pip install -r ./shells/requirements.txt

cd ./models/GroundingDINO && ./install.sh && cd ../..

The second command installs the GroundingDINO requirements, which are needed for grounding. If you do not need grounding, you can disable the grounding module instead.

Why are these steps needed?

- Install requirements:

pip install -r requirements.txt

- Build the CUDA ops for GroundingDINO:

cd ./models/GroundingDINO && ./install.sh && cd ../..

If this build step is skipped, the following warning may appear:

UserWarning: Failed to load custom C++ ops. Running on CPU mode Only! warnings.warn("Failed to load custom C++ ops. Running on CPU mode Only!")

# save to requirements.txt

polyglot

shortuuid

fire

fastapi

uvicorn

gradio

omegaconf

decord

einops

ninja

accelerate

torch==1.13.1

torchvision==0.14.1

transformers==4.29.1

tokenizers==0.13.3

deepspeed==0.8.3

imageio==2.31.1

SentencePiece

pydantic==1.10.12

peft==0.4.0

yacs

addict

yapf

supervision

opencv-python-headless==4.2.0.34
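Because several packages above are pinned to exact versions, it can help to confirm what is actually installed before training. The following is a minimal sketch, not part of the repository: `PINNED`, `installed_version`, and `find_mismatches` are hypothetical names, and only a subset of the pins is listed. It relies on the standard-library `importlib.metadata` module.

```python
from importlib import metadata

# A subset of the pins from the requirements list above.
PINNED = {
    "torch": "1.13.1",
    "torchvision": "0.14.1",
    "transformers": "4.29.1",
    "tokenizers": "0.13.3",
    "deepspeed": "0.8.3",
    "peft": "0.4.0",
}


def installed_version(package):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(pinned, lookup=installed_version):
    """Map package -> (wanted, found) for every pin that is not satisfied."""
    mismatches = {}
    for package, wanted in pinned.items():
        found = lookup(package)
        # Ignore local build suffixes such as "+cu117" when comparing.
        if found is None or found.split("+")[0] != wanted:
            mismatches[package] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for package, (wanted, found) in find_mismatches(PINNED).items():
        print(f"{package}: expected {wanted}, found {found or 'not installed'}")
```

Running the script prints one line per unsatisfied pin and nothing when the environment matches.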

| Dataset | Images/Videos | Annotations |
|---|---|---|
| LLaVA CC3M | LLaVA-CC3M-Pretrain-595K/image.zip | chat.json |
| TGIF | tgif | ullava_tgif.json |

Note that the TGIF videos must be downloaded from the URLs listed in data/tgif-v1.0.tsv.
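Fetching the TGIF files can be scripted. The sketch below is illustrative only and assumes that each row of `data/tgif-v1.0.tsv` starts with the file URL in the first tab-separated column; verify this against your copy of the file. The helper names and the `tgif` output directory (taken from the table above) are assumptions, not part of the repository.

```python
import csv
from pathlib import Path
from urllib.parse import urlparse
from urllib.request import urlretrieve


def read_tgif_urls(tsv_path):
    """Collect the first column of every row that looks like a URL."""
    urls = []
    with open(tsv_path, newline="", encoding="utf-8") as handle:
        # QUOTE_NONE keeps quote characters in descriptions from confusing the parser.
        reader = csv.reader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
        for row in reader:
            if row and row[0].startswith("http"):
                urls.append(row[0])
    return urls


def target_path(url, out_dir="tgif"):
    """Derive the local file path from the last component of the URL path."""
    return Path(out_dir) / Path(urlparse(url).path).name


def download_all(tsv_path, out_dir="tgif"):
    """Download every listed file, skipping those that already exist locally."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    for url in read_tgif_urls(tsv_path):
        target = target_path(url, out_dir)
        if not target.exists():
            urlretrieve(url, str(target))
```

Calling `download_all("data/tgif-v1.0.tsv")` would populate the `tgif` directory; because existing files are skipped, an interrupted run can simply be restarted.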

| Dataset | Images | Annotations |
|---|---|---|
| LLaVA Instruction 150K | coco2017 | llava_instruct_150k.json |
| RefCOCO | coco2014 | ullava_refcoco.json |
| RefCOCOg | coco2014 | ullava_refcocog.json |
| RefCOCO+ | coco2014 | ullava_refcoco+.json |
| RefCLEF | saiapr_tc-12 | ullava_refclef.json |
| ADE20K | ade20k | ullava_ade20k.json |
| COCO Stuff | cocostuff | ullava_cocostuff.json |
| VOC2010 | voc2010 | ullava_pascal_part.json |
| PACO LVIS | paco | ullava_paco_lvis.json |
| Salient 15K | salient15k | ullava_salient15k.json |

Dataset config example

dataset:
  llava:
    data_type: 'image'
    image_token_len: 256
    build_info:
      anno_dir: './data_annotations/llava_instruct_150k.json'
      image_dir: './coco2017/train2017'
      portion: 1.0
    vis_processor: 'clip_image'
  refcoco+:
    data_type: 'image'
    image_token_len: 256
    build_info:
      anno_dir: './annotations/ullava_refcoco+_train.json'
      image_dir: './coco2014'
      template_root: './datasets/templates/SEG.json'
      portion: 1.0
    vis_processor: 'clip_image'

Note:

- We reorganized most of the dataset annotations for easier training, but all users must still follow the rules that the original datasets require.
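Wrong dataset paths are a common cause of failed runs, so it may be worth checking them before launching training. The sketch below is illustrative only: it assumes the config has already been loaded into a nested dict (for example with a YAML loader), and that `anno_dir`, `image_dir`, and `template_root` sit under `build_info`, which is an assumption about the nesting. `missing_dataset_paths` is a hypothetical helper, not a repository function.

```python
from pathlib import Path


def missing_dataset_paths(dataset_cfg):
    """Return (dataset, key, path) triples whose path does not exist on disk."""
    missing = []
    for name, entry in dataset_cfg.items():
        build_info = entry.get("build_info", {})
        for key in ("anno_dir", "image_dir", "template_root"):
            path = build_info.get(key)
            if path is not None and not Path(path).exists():
                missing.append((name, key, path))
    return missing


# The "dataset" section of the example config, written as a Python dict.
example = {
    "llava": {
        "data_type": "image",
        "build_info": {
            "anno_dir": "./data_annotations/llava_instruct_150k.json",
            "image_dir": "./coco2017/train2017",
        },
    },
}

for name, key, path in missing_dataset_paths(example):
    print(f"[{name}] {key} not found: {path}")
```

Each printed line names the dataset and the key whose path needs fixing; no output means every listed path exists.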

- Prepare Open-Source LLaMA models

| Foundation model | Version | Path |
|---|---|---|
| Vicuna 7B HF | V1.1 | vicuna_7b_v1.1 |
| LLaMA2 7B HF | - | meta-llama/Llama-2-7b-hf |
| SAM | ViT-H | sam_vit_h_4b8939.pth |
| GroundingDINO | swint_ogc | groundingdino_swint_ogc.pth |

Note:

- LLaMA2 was trained with bf16, so convergence problems may occur if stage 1 is trained with fp16.

- The default tokenizer.legacy of Llama-2 is False, which may raise a tokenization mismatch error with some conversation templates.

- Errata: The base LLM used in the paper is Vicuna-v1.1, not LLaMA2. Sorry about the mistake.
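The bf16 note above can be turned into a small pre-flight check. This is a sketch under stated assumptions: `precision_flags` and `warn_if_risky` are hypothetical helpers, and the `bf16`/`fp16` keys are illustrative names that may not match the repository's config fields.

```python
def precision_flags(bf16_supported):
    """Prefer bf16 when the hardware supports it; fall back to fp16 otherwise."""
    return {"bf16": bf16_supported, "fp16": not bf16_supported}


def warn_if_risky(base_model, flags):
    """Return a warning string for the LLaMA2 + fp16 combination, else None."""
    if "llama-2" in base_model.lower() and flags["fp16"]:
        return "LLaMA2 was trained with bf16; fp16 may not converge in stage 1."
    return None


flags = precision_flags(bf16_supported=False)
message = warn_if_risky("meta-llama/Llama-2-7b-hf", flags)
if message:
    print(message)
```

With PyTorch installed, `torch.cuda.is_available() and torch.cuda.is_bf16_supported()` is one way to obtain the `bf16_supported` value.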

- Prepare datasets

- Set config in

configs/train/ullava_core_stage1.yaml

Note: set all dataset paths and the output path according to your experiments.

- Train Stage I with multiple GPUs

./shells/pretrain.sh

or, for 1 GPU:

python train_ullava_core.py --cfg_path './configs/train/ullava_core_stage1.yaml'

With 4 A100 80G GPUs and bf16, the first stage takes about 6 hours per epoch. The trained model is then saved to output_dir, for example './exp/ullava_core_7b'.

After Stage I training finishes, proceed to the next step: fine-tuning.

- Prepare datasets

- Set config in

configs/train/ullava_stage2_lora.yaml (for LoRA)

configs/train/ullava_stage2.yaml (without LoRA)

- Train Stage II with multiple GPUs

./shells/finetune.sh

or, for 1 GPU:

python train_ullava_core.py --cfg_path './configs/train/ullava_stage2_lora.yaml'
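The single-GPU commands for both stages differ only in the config file, so they can be assembled programmatically. The sketch below is a hypothetical helper, not part of the repository. The config paths and the script name are copied from the commands above, and passing `script` as a parameter is a design choice in case your checkout uses a different entry point for Stage II.

```python
# (stage, uses LoRA) -> config file, as listed in the steps above.
STAGE_CONFIGS = {
    ("stage1", False): "./configs/train/ullava_core_stage1.yaml",
    ("stage2", True): "./configs/train/ullava_stage2_lora.yaml",
    ("stage2", False): "./configs/train/ullava_stage2.yaml",
}


def single_gpu_command(stage, lora=False, script="train_ullava_core.py"):
    """Build the argument list for a single-GPU training run."""
    key = (stage, lora)
    if key not in STAGE_CONFIGS:
        raise ValueError(f"no config for stage={stage!r} with lora={lora}")
    return ["python", script, "--cfg_path", STAGE_CONFIGS[key]]


print(" ".join(single_gpu_command("stage2", lora=True)))
```

The returned list can be passed directly to `subprocess.run(cmd, check=True)`. Stage I with LoRA raises `ValueError`, since no such config is listed.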

Q1: Which conv_type is used in training?

A1: Stage I: 'conv_simple'. Stage II: 'conv_sep2'

Q2: When is LoRA used?

A2: Stage I: LoRA is not used in this stage. Stage II: optional, depending on your hardware.

- Set config

configs/eval/eval_res.yaml (for the RES task)

configs/eval/eval_rec.yaml (for the REC task)

configs/eval/eval_salient.yaml (for the salient segmentation task)

- Run

python evaluation/eval_ullava.py --cfg_path './configs/eval/eval_res.yaml' (for RES)

python evaluation/eval_ullava_grounding.py --cfg_path './configs/eval/eval_rec.yaml' (for REC)

python evaluation/eval_ullava.py --cfg_path './configs/eval/eval_salient.yaml' (for salient segmentation)
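Each task pairs a script with a config file, so a misspelled config name is easy to miss until the run fails. The sketch below checks that the config exists before building the command. `EVAL_TASKS` and `eval_command` are hypothetical names, with the script and config paths taken from the commands above.

```python
from pathlib import Path

# task -> (evaluation script, config file), as listed above.
EVAL_TASKS = {
    "res": ("evaluation/eval_ullava.py", "configs/eval/eval_res.yaml"),
    "rec": ("evaluation/eval_ullava_grounding.py", "configs/eval/eval_rec.yaml"),
    "salient": ("evaluation/eval_ullava.py", "configs/eval/eval_salient.yaml"),
}


def eval_command(task, repo_root="."):
    """Return the evaluation command for a task, failing early if its config is missing."""
    script, cfg = EVAL_TASKS[task]
    cfg_path = Path(repo_root) / cfg
    if not cfg_path.is_file():
        raise FileNotFoundError(f"config for task '{task}' not found: {cfg_path}")
    return ["python", script, "--cfg_path", str(cfg_path)]
```

Calling `eval_command("rec")` from the repository root returns the REC command when the config is present and raises `FileNotFoundError` otherwise.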

Modify the parser in evaluation/inference_ullava_core.py (for stage I) or evaluation/inference_ullava.py (for stage II), then run:

python evaluation/eval_ullava.py

python evaluation/eval_ullava_grounding.py

Distributed under the Apache License. See LICENSE for more information.

@article{xu2023ullava,
  title={u-LLaVA: Unifying Multi-Modal Tasks via Large Language Model},
  author={Xu, Jinjin and Xu, Liwu and Yang, Yuzhe and Li, Xiang and Xie, Yanchun and Huang, Yi-Jie and Li, Yaqian},
  journal={arXiv preprint arXiv:2311.05348},
  year={2023}
}

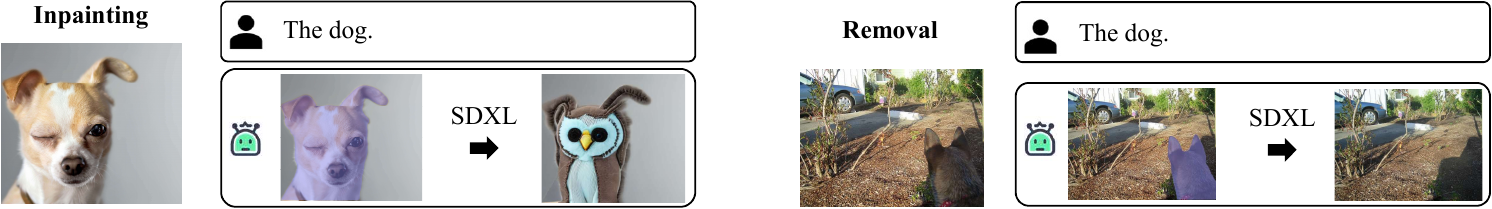

- Visual Segmentation
  - Instance Segmentation

We sincerely thank the open source community for their contributions.

See the open issues for a full list of proposed features (and known issues).