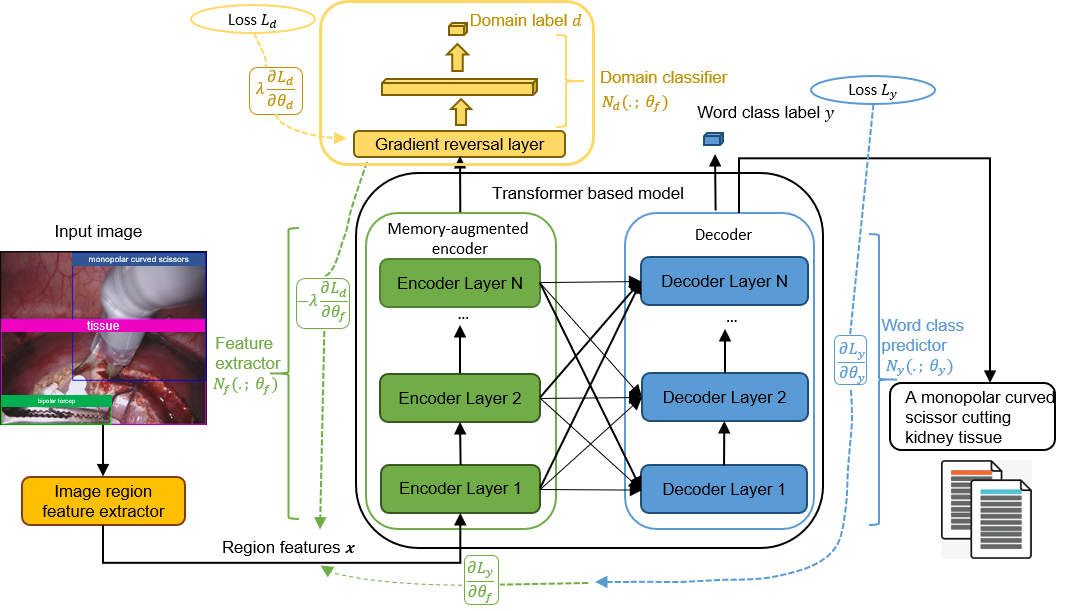

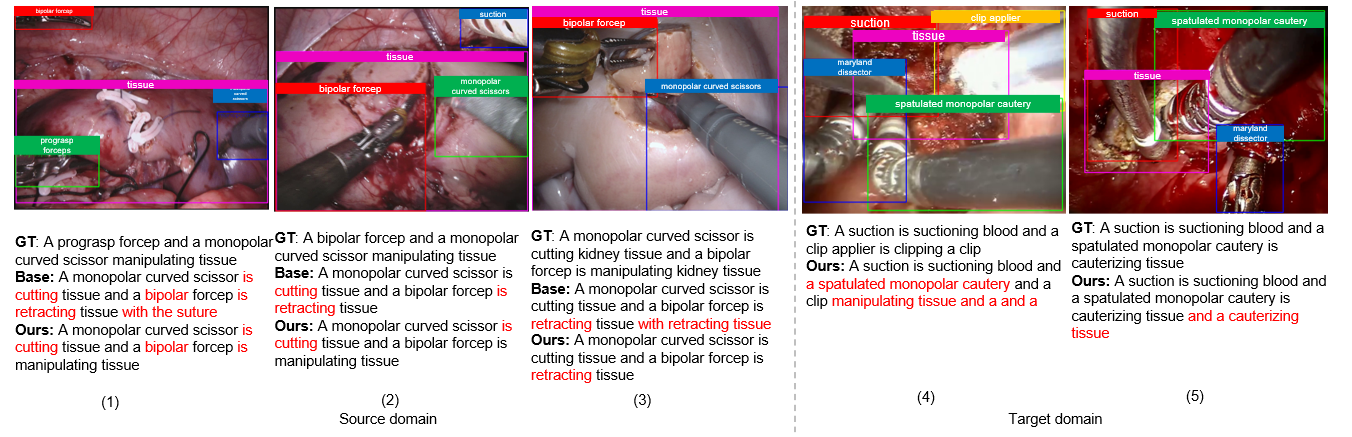

This repository contains the reference code for the paper "Learning Domain Adaptation with Model Calibration for Surgical Report Generation in Robotic Surgery".

Clone the repository and create the m2release conda environment using the environment.yml file:

conda env create -f environment.yml

conda activate m2release

Then download spacy data by executing the following command:

python -m spacy download en

Note: Python 3.6 is required to run our code.

The scripts in the Preprocessing folder prepare the annotation files, which store the feature path of each surgical image and its corresponding caption. This step is optional: you can skip it and download the annotations folder directly, as described in the next step.

We split the train / val sets for the In-domain dataset (MICCAI EndoVisSub2018-RoboticSceneSegmentation) in CaptionCollection.py, and for the Out-of-domain dataset (SGH+NUH Transoral robotic surgery) in DACaptionCollection.py. To produce both the train and val files, set dir_root_gt to the location of your data, switch between the two seq_set definitions, and change the output file name accordingly.

For each surgical image, we create a .xml file that stores the caption and the coordinates of the bounding boxes. The xml folders are available at https://drive.google.com/file/d/1b9OGl8aqyzeOua6kCjCln9yvPZ3OSX8f/view?usp=sharing. CaptionCollection.py and DACaptionCollection.py read these .xml files and collect all captions and their corresponding feature paths into a .json file.

Run python Preprocessing/CaptionCollection.py to get the train / val annotation files for the In-domain dataset.

Run python Preprocessing/DACaptionCollection.py to get the train / val annotation files for the Out-of-domain dataset.
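The collection step can be pictured with the minimal sketch below. It is not the repository's CaptionCollection.py: the `caption` tag name, the `.npy` feature extension, and the `id_path` / `caption` output keys are assumptions made for illustration, so check the actual scripts and .xml files before relying on them.

```python
import json
import xml.etree.ElementTree as ET
from pathlib import Path


def collect_captions(xml_dir, feature_dir, caption_tag="caption"):
    """Collect one record per .xml file: a feature path plus the caption text.

    Assumptions (not taken from the repository): the caption is stored in a
    <caption> element, and the features of each frame are saved under the
    frame name with a .npy extension.
    """
    records = []
    for xml_file in sorted(Path(xml_dir).glob("*.xml")):
        root = ET.parse(xml_file).getroot()
        node = root.find(f".//{caption_tag}")
        if node is None or not (node.text or "").strip():
            continue  # skip frames that carry no caption
        records.append({
            "id_path": str(Path(feature_dir) / f"{xml_file.stem}.npy"),
            "caption": node.text.strip(),
        })
    return records


def write_annotations(records, out_file):
    """Write the collected records to a .json file."""
    with open(out_file, "w", encoding="utf-8") as f:
        json.dump(records, f, indent=2)
```

Calling `collect_captions` once per sequence set and writing each result to its own file would mirror the train / val split described above.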

To run the code, you need the annotations folder and the features folders for the datasets. Download the annotations folder from https://drive.google.com/drive/folders/1cR5Wt_mKqn4qF45-hTnKzhG97pQL7ttU?usp=sharing. Download the In-domain features folder from https://drive.google.com/file/d/1Vt95T_lLg_IhmttNP51B58DJ8iF3FP84/view?usp=sharing and the Out-of-domain features folder from https://drive.google.com/file/d/1bNZZSmX5CXxngazBpPdE9MVb7hF2RDeU/view?usp=sharing, then extract them.
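Before launching a run, it can help to confirm that the extracted folders are where the commands expect them. The helper below is a small sketch for that purpose; `missing_inputs` is a hypothetical name and is not part of the repository.

```python
from pathlib import Path


def missing_inputs(features_path, annotation_folder):
    """Return the required directories that do not exist yet (hypothetical helper)."""
    required = [Path(features_path), Path(annotation_folder)]
    return [str(p) for p in required if not p.is_dir()]
```

For example, `missing_inputs("/path/to/features/instruments18_caption/", "annotations/annotations_resnet")` returns an empty list once both folders are in place.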

- To reproduce the results of our model reported in our paper, download the pretrained model file saved_best_checkpoints/7_saved_models_final_3outputs/m2_transformer_best.pth

Run python val_ours.py --exp_name m2_transformer --batch_size 50 --m 40 --head 8 --warmup 10000 --features_path /path/to/features/instruments18_caption/ --annotation_folder annotations/annotations_resnet --features_path_DA /path/to/features/DomainAdaptation/ --annotation_folder_DA annotations/annotations_DA

You will get the validation results on the In-domain (MICCAI) dataset and the UDA (unsupervised domain adaptation) results on the Out-of-domain (SGH NUH) dataset.

- To reproduce the results of the base model (M² Transformer), download the pretrained model file saved_best_checkpoints/MICCAI_SGH_Without_LS/ResNet/m2_transformer_best.pth

Run python val_base.py --exp_name m2_transformer --batch_size 50 --m 40 --head 8 --warmup 10000 --features_path /path/to/features/instruments18_caption/ --annotation_folder annotations/annotations_resnet

- To reproduce the Zero-shot, One-shot, and Few-shot results on Out-of-domain with our model, download our pretrained model files [saved_best_checkpoints/3_DA_saved_models/zero_shot/Base_GRL_LS/m2_transformer_best.pth] [saved_best_checkpoints/3_DA_saved_models/one_shot/Base_GRL_LS/m2_transformer_best.pth] [saved_best_checkpoints/3_DA_saved_models/few_shot/Base_GRL_LS/m2_transformer_best.pth]

from https://drive.google.com/drive/folders/12Gckx3fDW5ekFxHKpWPnSvNz4hvFafN2?usp=sharing

Then change the load path of the pretrained model in val_base.py to the corresponding path above.

Run python val_base.py --exp_name m2_transformer --batch_size 5 --m 40 --head 8 --warmup 10000 --features_path /path/to/features/DomainAdaptation/ --annotation_folder annotations/annotations_DA_xxx_shot

Here xxx is one of {zero, one, few}. For xxx = zero, also change "--batch_size 5" to "--batch_size 1" in the command.

- To reproduce the Zero-shot, One-shot, and Few-shot results on Out-of-domain with the base model, download the pretrained base model files [saved_best_checkpoints/3_DA_saved_models/zero_shot/Base/m2_transformer_best.pth] [saved_best_checkpoints/3_DA_saved_models/one_shot/Base/m2_transformer_best.pth] [saved_best_checkpoints/3_DA_saved_models/few_shot/Base/m2_transformer_best.pth]

from https://drive.google.com/drive/folders/12Gckx3fDW5ekFxHKpWPnSvNz4hvFafN2?usp=sharing

Then change the load path in val_base.py accordingly and run the same val_base.py commands as for our model in the previous step.
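The three out-of-domain evaluation runs differ only in the annotation folder and the batch size, so the commands can be generated rather than edited by hand. The sketch below assumes the flags shown in the val_base.py command above; `val_command` and `features_root` are hypothetical names introduced for illustration, and the checkpoint load path still has to be set inside val_base.py as described.

```python
SHOTS = ("zero", "one", "few")


def val_command(shot, features_root="/path/to/features"):
    """Build the val_base.py argument list for one out-of-domain setting."""
    if shot not in SHOTS:
        raise ValueError(f"shot must be one of {SHOTS}, got {shot!r}")
    batch_size = 1 if shot == "zero" else 5  # zero-shot uses batch size 1
    return [
        "python", "val_base.py",
        "--exp_name", "m2_transformer",
        "--batch_size", str(batch_size),
        "--m", "40",
        "--head", "8",
        "--warmup", "10000",
        "--features_path", f"{features_root}/DomainAdaptation/",
        "--annotation_folder", f"annotations/annotations_DA_{shot}_shot",
    ]


# Print the three commands so they can be inspected or copied.
for shot in SHOTS:
    print(" ".join(val_command(shot)))
```

Each returned list can also be passed to `subprocess.run` from the repository root.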

- Run python train_ours.py --exp_name m2_transformer --batch_size 50 --m 40 --head 8 --warmup 10000 --features_path /path/to/features/instruments18_caption/ --annotation_folder annotations/annotations_resnet --features_path_DA /path/to/features/DomainAdaptation/ --annotation_folder_DA annotations/annotations_DA to reproduce the results from our model.

- Run python train_base.py --exp_name m2_transformer --batch_size 50 --m 40 --head 8 --warmup 10000 --features_path /path/to/features/instruments18_caption/ --annotation_folder annotations/annotations_resnet to reproduce the results from the base model (M² Transformer).

- To fine-tune our pretrained model saved_best_checkpoints/4_save_models_oldfeatures_baseGRLLS/m2_transformer_best.pth on Out-of-domain in the Zero-shot, One-shot, and Few-shot settings, first change the load path of the pretrained model to 'saved_best_checkpoints/4_save_models_oldfeatures_baseGRLLS/m2_transformer_best.pth' in each DA_xxx_shot.py.

Run python DA.py --exp_name m2_transformer --batch_size 5 --m 40 --head 8 --warmup 10000 --features_path /path/to/features/DomainAdaptation/ --annotation_folder annotations/annotations_DA_xxx_shot to reproduce the results from our model.

Here xxx is one of {zero, one, few}. For xxx = zero, also change "--batch_size 5" to "--batch_size 1" in the command.

- To fine-tune the pretrained base model saved_best_checkpoints/MICCAI_SGH_Without_LS/ResNet/m2_transformer_best.pth on Out-of-domain in the Zero-shot, One-shot, and Few-shot settings, first change the load path of the pretrained model to 'saved_best_checkpoints/MICCAI_SGH_Without_LS/ResNet/m2_transformer_best.pth' in each DA_xxx_shot.py, then run the same commands as in the previous step to reproduce the results from the base model.

Explanation of arguments:

| Argument | Possible values |
|---|---|
| --exp_name | Experiment name |
| --batch_size | Batch size (default: 10) |
| --workers | Number of workers (default: 0) |
| --m | Number of memory vectors (default: 40) |
| --head | Number of heads (default: 8) |
| --warmup | Warmup value for learning rate scheduling (default: 10000) |
| --features_path | Path to detection features |
| --annotation_folder | Path to folder with annotations |

(In our case, /path/to/features/ is /media/mmlab/data_2/mengya/)
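For reference, the arguments above map naturally onto an argparse parser. The sketch below mirrors the defaults listed in the table; it is an illustration and may differ from the parsers in the training and validation scripts. The `--exp_name` default is an assumption taken from the example commands, and the two path arguments are left without defaults.

```python
import argparse


def build_parser():
    """Argument parser mirroring the documented arguments and their defaults."""
    parser = argparse.ArgumentParser(description="Surgical report generation")
    # Assumption: default taken from the example commands, not from the table.
    parser.add_argument("--exp_name", type=str, default="m2_transformer",
                        help="Experiment name")
    parser.add_argument("--batch_size", type=int, default=10, help="Batch size")
    parser.add_argument("--workers", type=int, default=0,
                        help="Number of workers")
    parser.add_argument("--m", type=int, default=40,
                        help="Number of memory vectors")
    parser.add_argument("--head", type=int, default=8, help="Number of heads")
    parser.add_argument("--warmup", type=int, default=10000,
                        help="Warmup value for learning rate scheduling")
    parser.add_argument("--features_path", type=str,
                        help="Path to detection features")
    parser.add_argument("--annotation_folder", type=str,
                        help="Path to folder with annotations")
    return parser
```

Parsing `["--batch_size", "50"]` with this parser overrides only the batch size and leaves the other values at the defaults from the table.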