This is the official project page of the paper "Towards Surveillance Video-and-Language Understanding: New Dataset, Baselines, and Challenges"(CVPR 2024)

We are excited to introduce the UCA (UCF-Crime Annotation) dataset, meticulously crafted based on the UCF-Crime dataset.

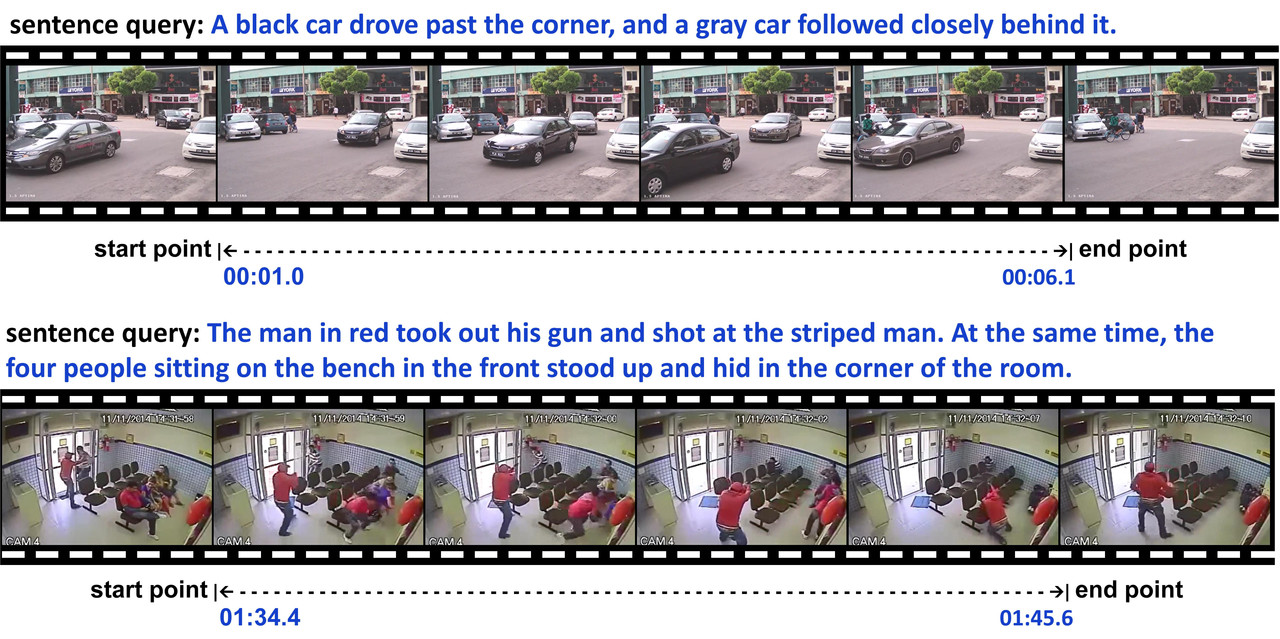

The UCA dataset is extensive, featuring 1,854 videos and 23,542 sentences that span 110.7 hours, each averaging 20 words in length. Each video in the dataset has been meticulously annotated with event descriptions, providing precise start and end times down to 0.1 seconds. This level of detail and annotation density makes UCA a valuable resource for exploring and advancing multi-modal learning techniques, especially in the field of intelligent security.

In addition to constructing this comprehensive dataset, we benchmark SOTA models for four multimodal tasks on UCA. These tasks serve as new baselines for the understanding of surveillance video in conjunction with language. Our experiments reveal that mainstream models, which performed well on publicly available datasets, face new challenges in the surveillance video context. This underscores the unique complexities of surveillance video-and-language understanding.

The following table provides a statistical comparison between the UCA dataset and other traditional video datasets in multimodal learning tasks. Our dataset is specifically designed for the surveillance domain, featuring the longest average word count per sentence.

During the video collection process for UCA, we conducted a meticulous screening of the original UCF-Crime dataset to filter out videos of lower quality. This ensures the quality and fairness of our UCA dataset. The low-quality videos identified had issues like repetitions, severe obstructions, or excessively fast playback speeds, which impeded the clarity of manual annotations and the precision of event time localization.

Consequently, we removed 46 videos from the original UCF-Crime dataset, resulting in a total of 1,854 videos for UCA. The data split in UCA is outlined in the table below.

The UCA dataset is available in two formats: txt and json.

-

txt format:

VideoName StartTime EndTime ##Video event description -

json format:

"VideoName": { "duration": xx.xx, "timestamps": [ [StartTime 1, EndTime 1], [StartTime 2, EndTime 2] ], "sentences": ["Video event description 1", "Video event description 2"] }

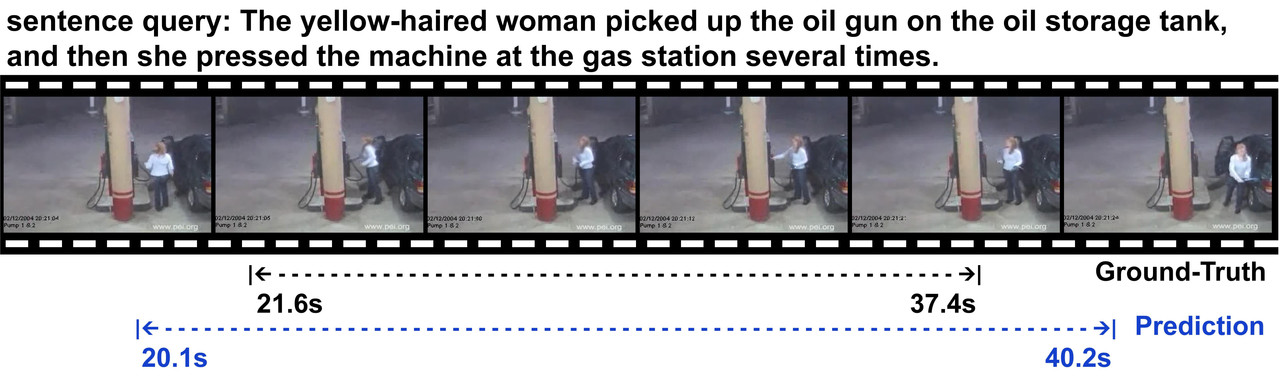

Annotation Examples: The following image shows fine-grained sentence queries and their corresponding timing in our UCA dataset.

txt: Contains annotation data in txt format.json: Contains annotation data in json format.txt_mask: Contains gender-neutral annotation data.

We conducted four types of experiments on our dataset:

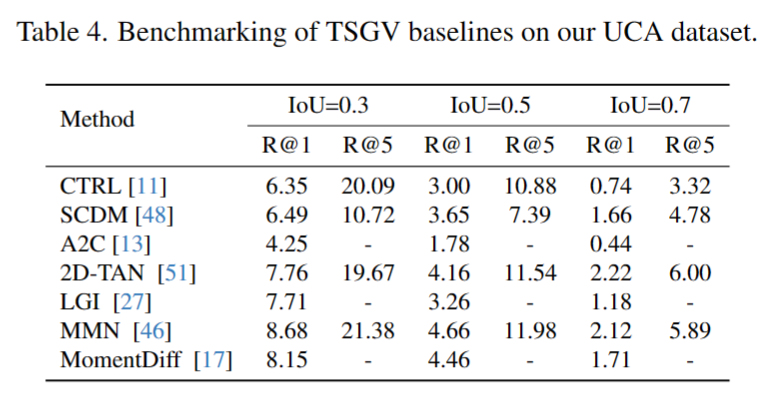

- Temporal Sentence Grounding in Videos (TSGV): This task focuses on temporal activity localization in a video based on a language query.

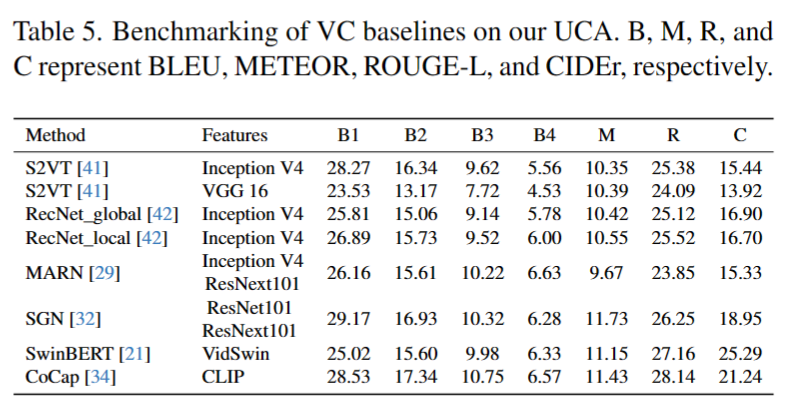

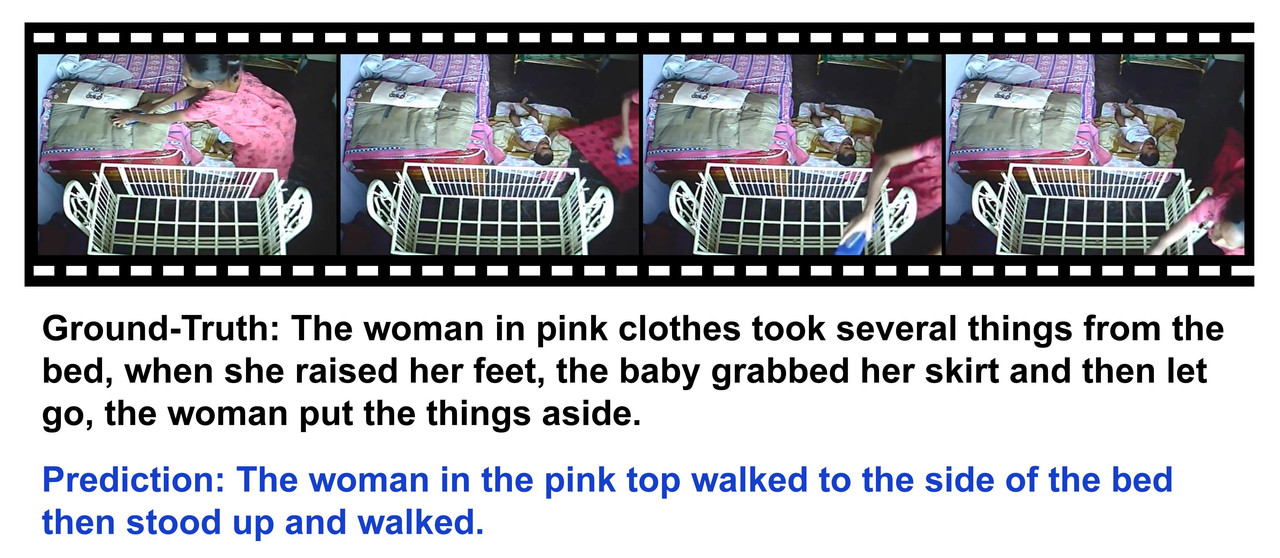

- Video Captioning (VC): Understanding a video clip and describing it with language.

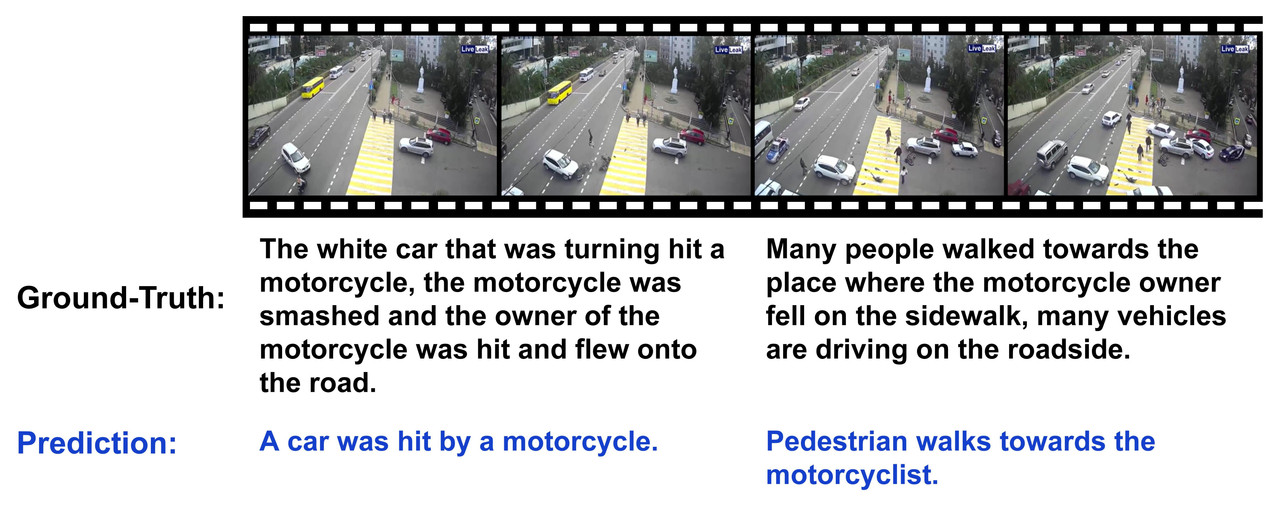

- Dense Video Captioning (DVC): Involves generating the temporal localization and captioning of dense events in an untrimmed video.

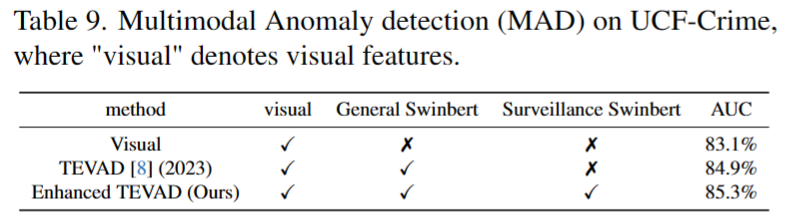

- Multimodal Anomaly Detection (MAD): Utilizes captions as a text feature source to enhance traditional anomaly detection in complex surveillance videos.

For each task, multiple models were evaluated. Here, we present some experiment results as examples.

To better understand the dataset and the experimental outcomes, the following visualizations are included:

Our UCA dataset is built upon the foundational UCF-Crime dataset. For those interested in exploring the original data, the UCF-Crime dataset can be downloaded directly from this link: Download zip.

Additionally, further details about the UCF-Crime project are available on their official website: Visit here.

If you wish to reference the UCF-Crime dataset in your work, please cite the following paper:

@inproceedings{sultani2018real,

title={Real-world anomaly detection in surveillance videos},

author={Sultani, Waqas and Chen, Chen and Shah, Mubarak},

booktitle={Proceedings of the IEEE conference on computer vision and pattern recognition},

pages={6479--6488},

year={2018}

}

Each annotation in our UCA dataset is associated with a corresponding video in the original UCF-Crime dataset. Users interested in this dataset can easily match the videos to the annotation information after downloading.

Our dataset is exclusively available for academic and research purposes. Please feel free to contact the original authors for inquiries, suggestions, or collaboration proposals.

The dataset is available under the Apache-2.0 license.

@misc{yuan2023surveillance,

title={Towards Surveillance Video-and-Language Understanding: New Dataset, Baselines, and Challenges},

author={Tongtong Yuan and Xuange Zhang and Kun Liu and Bo Liu and Chen Chen and Jian Jin and Zhenzhen Jiao},

year={2023},

eprint={2309.13925},

archivePrefix={arXiv},

primaryClass={cs.CV}

}