[CVPR2023] [Project Page] [arXiv] [Demo][BibTex] [中文简介]

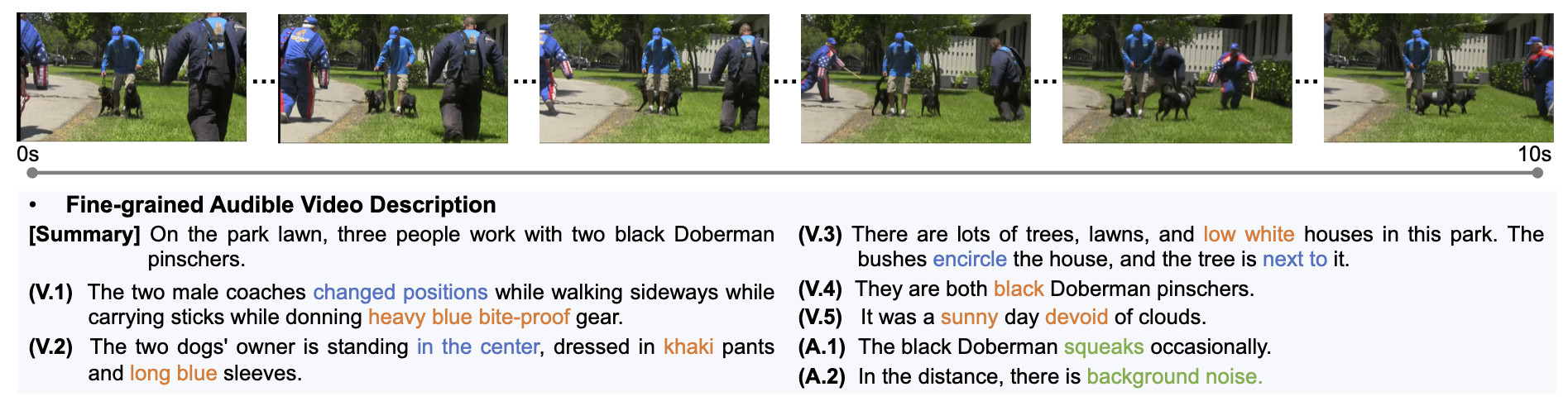

This repository provides the official implementation for the CVPR2023 paper "Fine-grained Audible Video Description". We build a novel task: FAVD and a new dataset: FAVDBench in this paper.

😃 This repository offers the code necessary to replicate the results outlined in the paper. However, we encourage you to explore additional tasks using the FAVDBench dataset.

You can access the FAVDBench dataset by visiting the OpenNLPLab/Download webpage. To obtain the dataset, please complete the corresponding Google Forms. Once we receive your application, we will respond promptly. Alternatively, if you encounter any issues with the form, you can also submit your application (indicating your Name, Affiliation) via email to opennlplab@gmail.com.

- FAVDBench Dataset Google Forms: https://forms.gle/5S3DWpBaV1UVczkf8

These downloaded data can be placed or linked to the directory AVLFormer/datasets.

In general, the code requires python>=3.7, as well as pytorch>=1.10 and torchvision>=0.8. You can follow recommend_env.sh to configure a recommend conda environment:

-

Create virtual env

conda create -n FAVDBench; conda activate FAVDBench -

Install pytorch-related packages:

conda install pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=11.3 -c pytorch

-

Install basic packages:

pip install fairscale opencv-python pip install deepspeed PyYAML fvcore ete3 transformers pandas timm h5py pip install tensorboardX easydict progressbar matplotlib future deprecated scipy av scikit-image boto3 einops addict yapf

-

Install mmcv-full

pip install mmcv-full==1.6.1 -f https://download.openmmlab.com/mmcv/dist/cu113/torch1.12/index.html

-

Install apex

git clone https://github.com/NVIDIA/apex cd apex pip install -v --disable-pip-version-check --no-cache-dir ./ -

Clone related repo for eval

cd ./AVLFormer/src/evalcap git clone https://github.com/xiaoweihu/cider.git git clone https://github.com/LuoweiZhou/coco-caption.git mv ./coco-caption ./coco_caption -

Install ffmpeg & ffprobe

-

Use

ffmpeg -versionandffprobe -versionto check whether ffmpeg and ffprobe are installed. -

Installation guideline:

# For ubuntu sudo apt update sudo apt install ffmpeg # For mac brew update brew install ffmpeg

📝Note:

- Please finish the above installation before the subsequent steps.

- Check Quick Links for Dataset Preparation to download the processed files may help you to quickly enter the exp part.

-

Refer to the Apply for Dataset section to download the raw video files directly into the datasets folder.

-

Retrieve the metadata.zip file into the datasets folder, then proceed to unzip it.

-

Activate conda env

conda activate FAVDBench. -

Extract the frames from videos and convert them into a single TSV (Tab-Separated Values) file.

# check the path pwd >>> FAVDBench/AVLFormer # check the preparation ls datasets >>> audios metadata videos audios # data pre-processing bash data_prepro/run.sh # validate the data pre-processing ls datasets >>> audios frames frame_tsv metadata videos ls datasets/frames >>> train-32frames test-32frames val-32frames ls datasets/frame_tsv test_32frames.img.lineidx test_32frames.img.tsv test_32frames.img.lineidx.8b val_32frames.img.lineidx val_32frames.img.tsv val_32frames.img.lineidx.8b train_32frames.img.lineidx train_32frames.img.tsv train_32frames.img.lineidx.8b

📝Note

- The contents within

datasets/framesserve as intermediate files for training, although they hold utility for inference and scoring. datasets/frame_tsvfiles are specifically designed for training purposes.- Should you encounter any problems, access Quick Links for Dataset Preparation to download the processed files or initiate a new issue in GitHub.

- The contents within

-

Convert the audio files in

mp3format to theh5pyformat by archiving them.python data_prepro/convert_h5py.py train python data_prepro/convert_h5py.py val python data_prepro/convert_h5py.py test# check the preparation ls datasets/audio_hdf >>> test_mp3.hdf train_mp3.hdf val_mp3.hdf📝Note

- Should you encounter any problems, access Quick Links for Dataset Preparation to download the processed files or initiate a new issue in GitHub.

| URL | md5sum | |

|---|---|---|

| metadata | 💻 metadata.zip | f03e61e48212132bfd9589c2d8041cb1 |

| audio_mp3 | 🎵 audio_mp3.tar | e2a3eb49edbb21273a4bad0abc32cda7 |

| audio_hdf | 🎵 audio_hdf.tar | 79f09f444ce891b858cb728d2fdcdc1b |

| frame_tsv | 🎆 Dropbox / 百度网盘 | 6c237a72d3a2bbb9d6b6d78ac1b55ba2 |

📝Note:

- Please finish the above installation and data preparation before the subsequent steps.

- Check Quick Links for Experiments to download the pretrained weights may help your exps.

Please visit Video Swin Transformer to download pre-trained weights models.

Download swin_base_patch244_window877_kinetics400_22k.pth and swin_base_patch244_window877_kinetics600_22k.pth, and place them under models/video_swin_transformer directory.

FAVDBench/AVLFormer

|-- models

| |--captioning/bert-base-uncased

| |-- video_swin_transformer

| | |-- swin_base_patch244_window877_kinetics600_22k.pth

| | |-- swin_base_patch244_window877_kinetics400_22k.pth- The run.sh file provides training scripts catered for single GPU, multiple GPUs, and distributed across multiple nodes with GPUs.

- The hyperparameters could be beneficial.

- For detailed instructions, refer to this guide.

- The inference.sh file offers scripts for inferences.

- Attention: The baseline for inference necessitates both raw video and audio data, which could be found here.

- For detailed instructions, refer to this guide.

| URL | md5sum | |

|---|---|---|

| weight | 🔒 GitHub / 百度网盘 | 5d6579198373b79a21cfa67958e9af83 |

| hyperparameters | 🧮 args.json | - |

| prediction | ☀️ prediction_coco_fmt.json | - |

| metrics | 🔢 metrics.log | - |

- For detailed instructions, refer to this guide.

cd Metrics/AudioScore

python score.py \

--pred_path PATH_to_PREDICTION_JSON_in_COCO_FORMAT \📝Note:

- Additional weights is requried to download, please refer to the installation.

-

URL md5sum TriLip 👍 TriLip.bin 6baef8a9b383fa7c94a4c56856b0af6d

- For detailed instructions, refer to this guide.

cd Metrics/EntityScore

python score.py \

--pred_path PATH_to_PREDICTION_JSON_in_COCO_FORMAT \

--refe_path AVLFormer/datasets/metadata/test.caption_coco_format.json \

--model_path t5-base- Please refer to CLIPScore to evaluate the model.

The community usage of FAVDBench model & code requires adherence to Apache 2.0. The FAVDBench model & code supports commercial use.

Our project is developed based on the following open source projects:

- SwinBERT for the code baseline.

- Video Swin Transformer for video model.

- PaSST for audio model.

- CLIP for AudioScore model.

If you use FAVD or FAVDBench in your research, please use the following BibTeX entry.

@InProceedings{Shen_2023_CVPR,

author = {Shen, Xuyang and Li, Dong and Zhou, Jinxing and Qin, Zhen and He, Bowen and Han, Xiaodong and Li, Aixuan and Dai, Yuchao and Kong, Lingpeng and Wang, Meng and Qiao, Yu and Zhong, Yiran},

title = {Fine-Grained Audible Video Description},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2023},

pages = {10585-10596}

}