Note: this project is written as Jupyter notebooks (.ipynb) rather than standard Python scripts (.py), so it only runs with Jupyter Notebook and Visdom. Install both before running the project. Alternatively, you can convert the whole project to standard Python scripts (.py), which should not take much time.

This is a PyTorch implementation of Fast Neural Style. For more details on the network, please refer to the original paper:

Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. "Perceptual losses for real-time style transfer and super-resolution." European Conference on Computer Vision. Springer, Cham, 2016.

- PyTorch 0.4.0+

- Jupyter Notebook

- Visdom

- ipdb, tqdm

- Download the MS COCO dataset and unzip it into the "datasets" folder.

- Open "config.ipynb" in Jupyter Notebook and change the settings to match your hardware configuration. Choose the ratio of w_content to w_style carefully, since it controls the balance between preserving the content image and applying the style.

- Open "train.ipynb" in Jupyter Notebook and click "Cell - Run all" to start training.

- During training, you can view the output in Visdom. The training results (.pth) are saved in "saves".

- After training, use "test.ipynb" to run the trained network.
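
To illustrate why the ratio of w_content to w_style matters, here is a minimal sketch of a weighted perceptual loss in the spirit of the paper. Only `w_content` and `w_style` come from this project's config; the function names, the `content_layer` argument, and the dummy feature maps are assumptions made for illustration and may not match the code in the notebooks.

```python
import torch
import torch.nn.functional as F


def gram_matrix(features):
    """Return the normalised Gram matrix of a (B, C, H, W) feature map."""
    b, c, h, w = features.size()
    flat = features.reshape(b, c, h * w)
    # (B, C, HW) x (B, HW, C) -> (B, C, C), divided by the element count
    return torch.bmm(flat, flat.transpose(1, 2)) / (c * h * w)


def perceptual_loss(out_feats, content_feats, style_grams,
                    w_content, w_style, content_layer=1):
    """Weighted sum of a content loss and a style loss.

    out_feats / content_feats: lists of feature maps from a loss network
    (hypothetical inputs here). style_grams: precomputed Gram matrices of
    the style image, one per layer.
    """
    # Content loss: feature distance at a single chosen layer
    content_loss = F.mse_loss(out_feats[content_layer],
                              content_feats[content_layer])
    # Style loss: Gram-matrix distance summed over all layers
    style_loss = sum(F.mse_loss(gram_matrix(f), g)
                     for f, g in zip(out_feats, style_grams))
    return w_content * content_loss + w_style * style_loss


# Dummy tensors standing in for real loss-network activations
out_feats = [torch.rand(1, 8, 16, 16), torch.rand(1, 16, 8, 8)]
content_feats = [torch.rand(1, 8, 16, 16), torch.rand(1, 16, 8, 8)]
style_grams = [gram_matrix(torch.rand(1, 8, 16, 16)),
               gram_matrix(torch.rand(1, 16, 8, 8))]
loss = perceptual_loss(out_feats, content_feats, style_grams,
                       w_content=1.0, w_style=1e5)
print(loss.item())
```

Because the normalised Gram matrices tend to produce a much smaller raw loss than the content term, the style weight usually has to be several orders of magnitude larger than the content weight. The values above are placeholders, not recommended settings.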

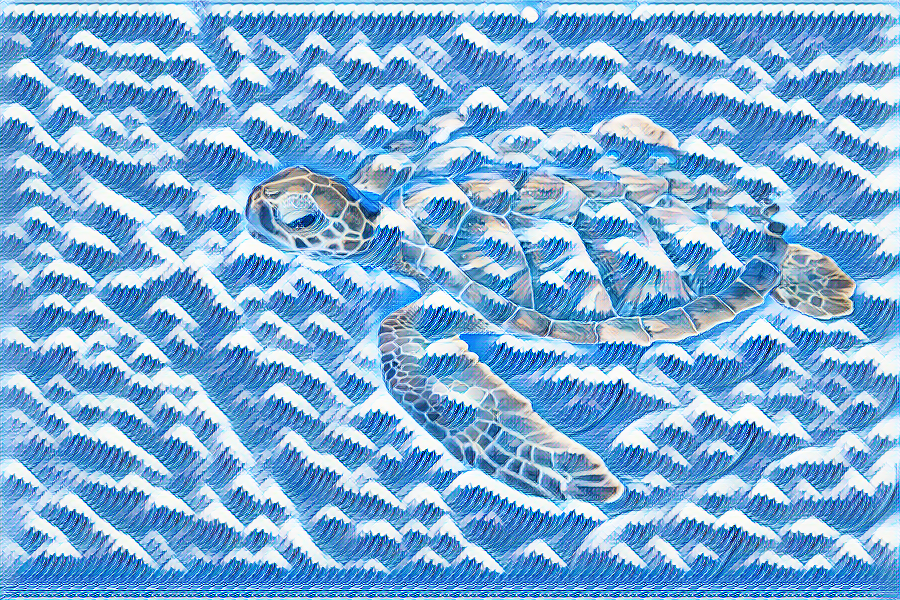

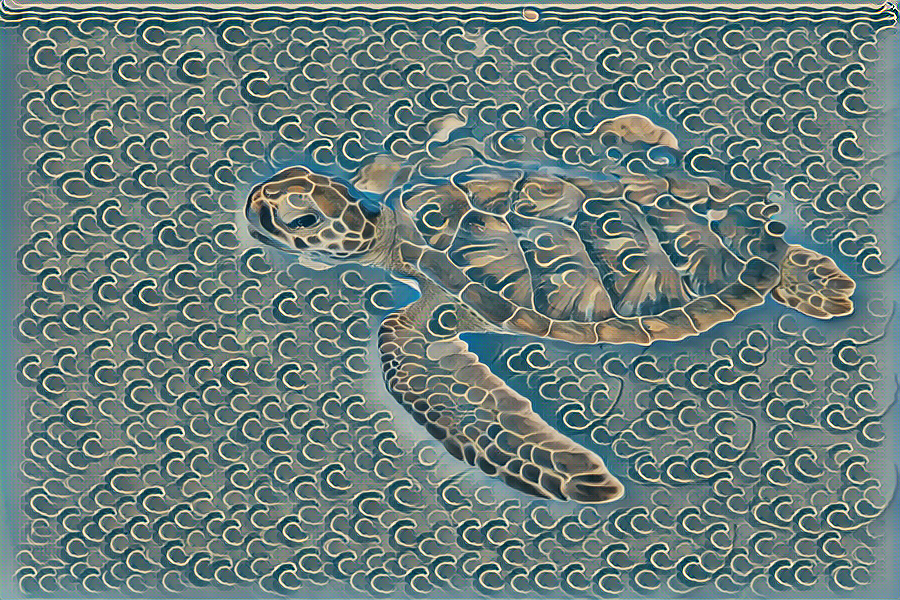


This project uses the network structure proposed in the original paper without modification, so the output quality may sometimes be poor. Improved versions are available online, for example ones that add reflection padding or replace transposed convolution with upsampling followed by convolution.
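
As a rough sketch of those two improvements, the block below combines nearest-neighbour upsampling, reflection padding, and a regular convolution as a drop-in alternative to a transposed convolution. The class name `UpsampleConv` and its default arguments are hypothetical and are not part of this project.

```python
import torch
import torch.nn as nn


class UpsampleConv(nn.Module):
    """Upsample, reflection-pad, then convolve (hypothetical helper)."""

    def __init__(self, in_channels, out_channels, kernel_size=3, scale_factor=2):
        super().__init__()
        # Nearest-neighbour upsampling enlarges the feature map first
        self.upsample = nn.Upsample(scale_factor=scale_factor, mode="nearest")
        # Reflection padding keeps the spatial size after the convolution
        # and avoids the border artifacts that zero padding can cause
        self.pad = nn.ReflectionPad2d(kernel_size // 2)
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride=1)

    def forward(self, x):
        return self.conv(self.pad(self.upsample(x)))


# Example: double the resolution while halving the channel count
x = torch.rand(1, 64, 32, 32)
y = UpsampleConv(64, 32)(x)
print(y.shape)
```

Upsampling before a stride-1 convolution is commonly used to reduce the checkerboard patterns that transposed convolutions can produce. Whether it improves results for a given style would need to be checked by retraining.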