BERT (BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding) yields pretrained token (i.e., subword) embeddings. Let's extract them and save them in the word2vec format so that they can be used in downstream tasks.
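For reference, the plain-text word2vec format is simple: a header line `<vocab_size> <dim>`, then one line per token holding the token followed by its vector components, all separated by spaces. Below is a minimal sketch of a writer for that format, assuming the embeddings are already available as `(token, vector)` pairs; `save_word2vec_text` is a hypothetical helper, not necessarily what this repo's script does. A file written this way should be loadable with gensim's `KeyedVectors.load_word2vec_format(path, binary=False)`.

```python
from typing import Sequence, Tuple


def save_word2vec_text(path: str, pairs: Sequence[Tuple[str, Sequence[float]]]) -> None:
    """Write (token, vector) pairs in the plain-text word2vec format.

    Line 1 is "<vocab_size> <dim>"; each following line is the token and
    its vector components, separated by single spaces.
    """
    if not pairs:
        raise ValueError("nothing to save")
    dim = len(pairs[0][1])
    with open(path, "w", encoding="utf-8") as f:
        f.write(f"{len(pairs)} {dim}\n")
        for token, vec in pairs:
            if len(vec) != dim:
                raise ValueError(f"{token!r} has {len(vec)} dims, expected {dim}")
            if any(ch.isspace() for ch in token):
                # The format is whitespace-delimited, so such a token would corrupt the file.
                raise ValueError(f"token {token!r} contains whitespace")
            # Six decimal places keeps files readable; raise it if more precision is needed.
            f.write(token + " " + " ".join(f"{x:.6f}" for x in vec) + "\n")
```

Subword pieces such as `##ing` are written like any other token, since they contain no whitespace.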

- pytorch_pretrained_bert

- NumPy

- tqdm

- Check `extract.py`.
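The core of the extraction step can be sketched as pairing every vocabulary entry with the row of the input embedding matrix at that entry's id. This sketch assumes the pytorch_pretrained_bert objects `BertTokenizer.from_pretrained(name).vocab` (an ordered token-to-id mapping) and `BertModel.from_pretrained(name).embeddings.word_embeddings.weight` (the `vocab_size x dim` matrix, convertible to nested lists with `.detach().tolist()`). The helper `pair_tokens_with_rows` is hypothetical and works on plain Python data so it runs without downloading a model.

```python
from typing import Dict, List, Sequence, Tuple


def pair_tokens_with_rows(
    vocab: Dict[str, int], matrix: Sequence[Sequence[float]]
) -> List[Tuple[str, List[float]]]:
    """Return (token, vector) pairs ordered by token id.

    `vocab` maps each (sub)word to its row index in the embedding matrix,
    as a BERT tokenizer's vocab does; `matrix` has one row per id.
    """
    if len(vocab) != len(matrix):
        raise ValueError(f"vocab has {len(vocab)} entries but matrix has {len(matrix)} rows")
    # Sort by id so the output order matches the rows of the embedding matrix.
    ordered = sorted(vocab.items(), key=lambda item: item[1])
    return [(token, list(matrix[idx])) for token, idx in ordered]
```

The resulting pairs are exactly what a word2vec text writer needs as input.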

| Models | Vocab size | Dim | Notes |
|---|---|---|---|
| bert-base-uncased | 30,522 | 768 | |
| bert-large-uncased | 30,522 | 1024 | |
| bert-base-cased | 28,996 | 768 | |
| bert-large-cased | 28,996 | 1024 | |
| bert-base-multilingual-cased | 119,547 | 768 | Recommended |
| bert-base-multilingual-uncased | 30,522 | 768 | Not recommended |
| bert-base-chinese | 21,128 | 768 | |
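The vocabulary size and dimension in the table determine how large each embedding matrix is. As a rough sketch, assuming 4-byte float32 values, the count is simply `vocab x dim`; for bert-base-uncased that is 30,522 x 768 = 23,440,896 values, about 89.4 MiB. `embedding_stats` is a hypothetical helper for this arithmetic.

```python
# (vocab size, embedding dim) for each model in the table above
MODELS = {
    "bert-base-uncased": (30_522, 768),
    "bert-large-uncased": (30_522, 1024),
    "bert-base-cased": (28_996, 768),
    "bert-large-cased": (28_996, 1024),
    "bert-base-multilingual-cased": (119_547, 768),
    "bert-base-multilingual-uncased": (30_522, 768),
    "bert-base-chinese": (21_128, 768),
}


def embedding_stats(vocab: int, dim: int, bytes_per_value: int = 4):
    """Return the number of values in a vocab x dim matrix and its raw size in MiB."""
    n = vocab * dim
    return n, n * bytes_per_value / 2**20


for name, (v, d) in MODELS.items():
    n, mib = embedding_stats(v, d)
    print(f"{name:32s} {n:>12,d} values {mib:7.1f} MiB as float32")
```

The word2vec text files will be larger than these raw figures, since every value is written out as decimal text.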

-

Check

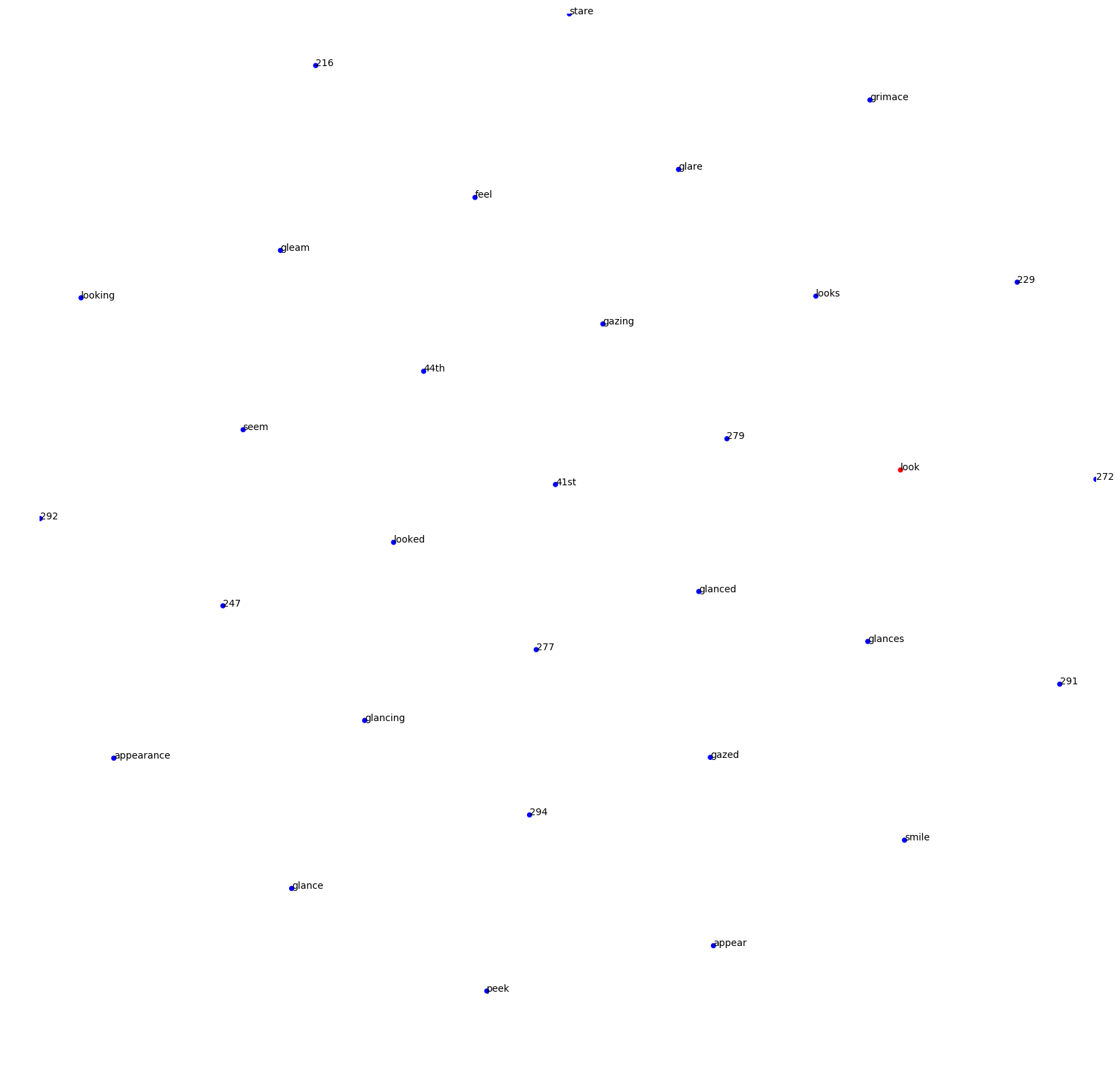

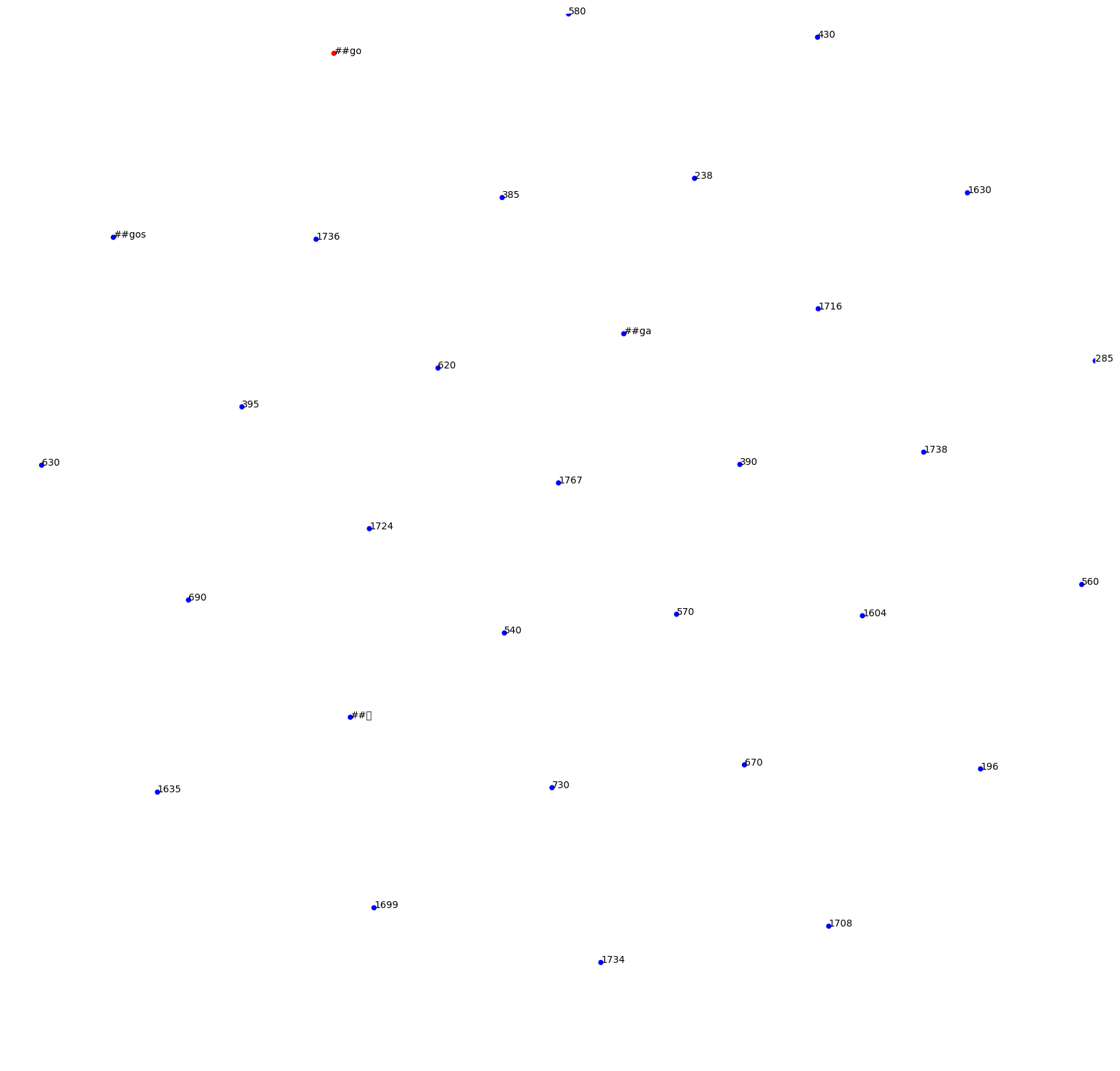

example.ipynbto see how to load (sub-)word vectors with gensim and plot them in 2d space using tSNE. -
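With gensim, loading and querying the vectors would typically go through `KeyedVectors.load_word2vec_format(path, binary=False)` and `most_similar(token, topn=...)`, which ranks tokens by cosine similarity. The dependency-free sketch below mirrors that idea for a small plain-text word2vec file; `load_word2vec_text` and `most_similar` here are hypothetical stand-ins, not gensim's implementation, and the brute-force scan is only practical for small vocabularies.

```python
import math
from typing import Dict, List, Tuple


def load_word2vec_text(path: str) -> Dict[str, List[float]]:
    """Read a plain-text word2vec file into a token -> vector dict."""
    vectors: Dict[str, List[float]] = {}
    with open(path, encoding="utf-8") as f:
        count, dim = map(int, f.readline().split())
        for line in f:
            parts = line.split()
            if not parts:
                continue  # tolerate blank lines
            token, values = parts[0], [float(x) for x in parts[1:]]
            if len(values) != dim:
                raise ValueError(f"row for {token!r} has {len(values)} values, expected {dim}")
            vectors[token] = values
    if len(vectors) != count:
        raise ValueError(f"header says {count} rows, read {len(vectors)}")
    return vectors


def most_similar(vectors: Dict[str, List[float]], query: str, topn: int = 5) -> List[Tuple[str, float]]:
    """Rank all other tokens by cosine similarity to `query` (brute force)."""
    q = vectors[query]
    q_norm = math.sqrt(sum(x * x for x in q))
    scores = []
    for token, v in vectors.items():
        if token == query:
            continue
        v_norm = math.sqrt(sum(x * x for x in v))
        if q_norm == 0 or v_norm == 0:
            continue  # cosine similarity is undefined for zero vectors
        dot = sum(a * b for a, b in zip(q, v))
        scores.append((token, dot / (q_norm * v_norm)))
    scores.sort(key=lambda item: item[1], reverse=True)
    return scores[:topn]
```

For the full BERT vocabularies, gensim's vectorized implementation is the better choice; this version only makes the ranking rule explicit.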

- Related tokens to look