The mlrun/demos repository provides end-to-end ML use-case demo applications built with MLRun.


The MLRun demos are end-to-end use-case applications that leverage MLRun to implement complete machine-learning (ML) pipelines — including data collection and preparation, model training, and deployment automation.

The demos demonstrate how you can:

- Run ML pipelines locally from a web notebook such as Jupyter Notebook.

- Run some or all tasks on an elastic Kubernetes cluster by using serverless functions.

- Create automated ML workflows using Kubeflow Pipelines.

The demo applications are tested on the Iguazio Data Science Platform ("the platform") and use its shared data fabric, which is accessible via the v3io file-system mount; if you're not already a platform user, request a free trial.

You can also modify the code to work with any shared file storage by replacing the `apply(v3io_mount())` calls with any other Kubeflow volume modifier.
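The modifier pattern behind this swap can be sketched in plain Python. This is a minimal illustration only, not the MLRun or Kubeflow Pipelines API: the `Task` class, the `nfs_mount` factory, the field names, and the server address are all hypothetical stand-ins. They show that a modifier is simply a callable that `apply()` passes the task through.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Task:
    """Hypothetical stand-in for a pipeline step; not an MLRun or KFP class."""
    name: str
    volumes: List[Dict[str, str]] = field(default_factory=list)

    def apply(self, modifier: Callable[["Task"], "Task"]) -> "Task":
        # A modifier receives the task, changes it, and returns it.
        return modifier(self)


def nfs_mount(server: str, path: str, mount_path: str) -> Callable[[Task], Task]:
    """Hypothetical modifier factory that attaches a shared NFS volume."""
    def _modifier(task: Task) -> Task:
        task.volumes.append(
            {"type": "nfs", "server": server, "path": path, "mount_path": mount_path}
        )
        return task
    return _modifier


# Assumed server address and paths, for illustration only.
train = Task("train").apply(nfs_mount("nfs.example.local", "/exports/data", "/data"))
```

In a real project, the equivalent change would use whichever volume modifier your storage backend and Kubeflow Pipelines version provide.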

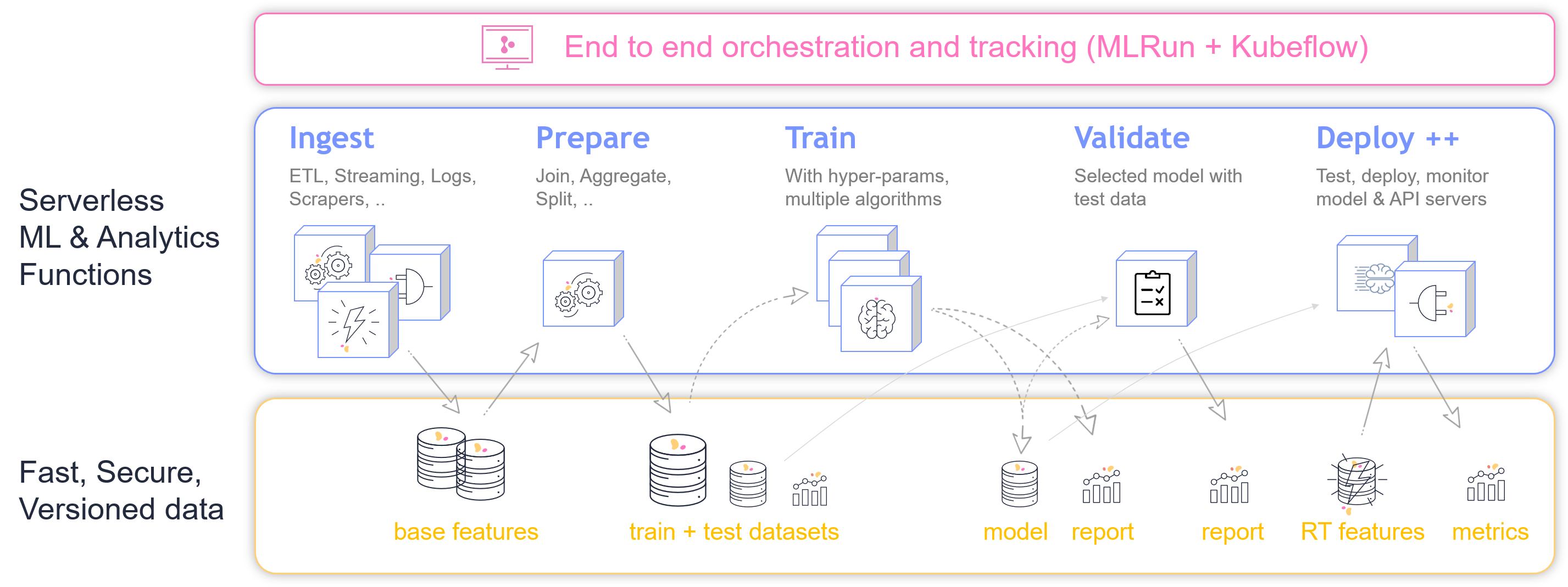

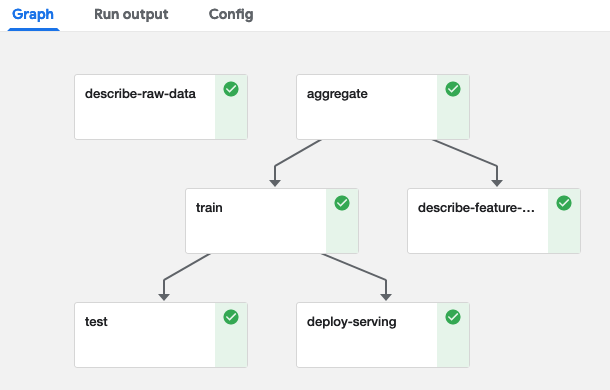

The provided demos implement some or all of the ML workflow steps illustrated in the following image:

To run the MLRun demos, first do the following:

- Prepare a Kubernetes cluster with preinstalled operators or custom resources (CRDs) for Horovod and/or Nuclio, depending on the demos that you wish to run.

- Install an MLRun service on your cluster. See the instructions in the MLRun documentation.

- Ensure that your cluster has a shared file or object storage for storing the data (artifacts).

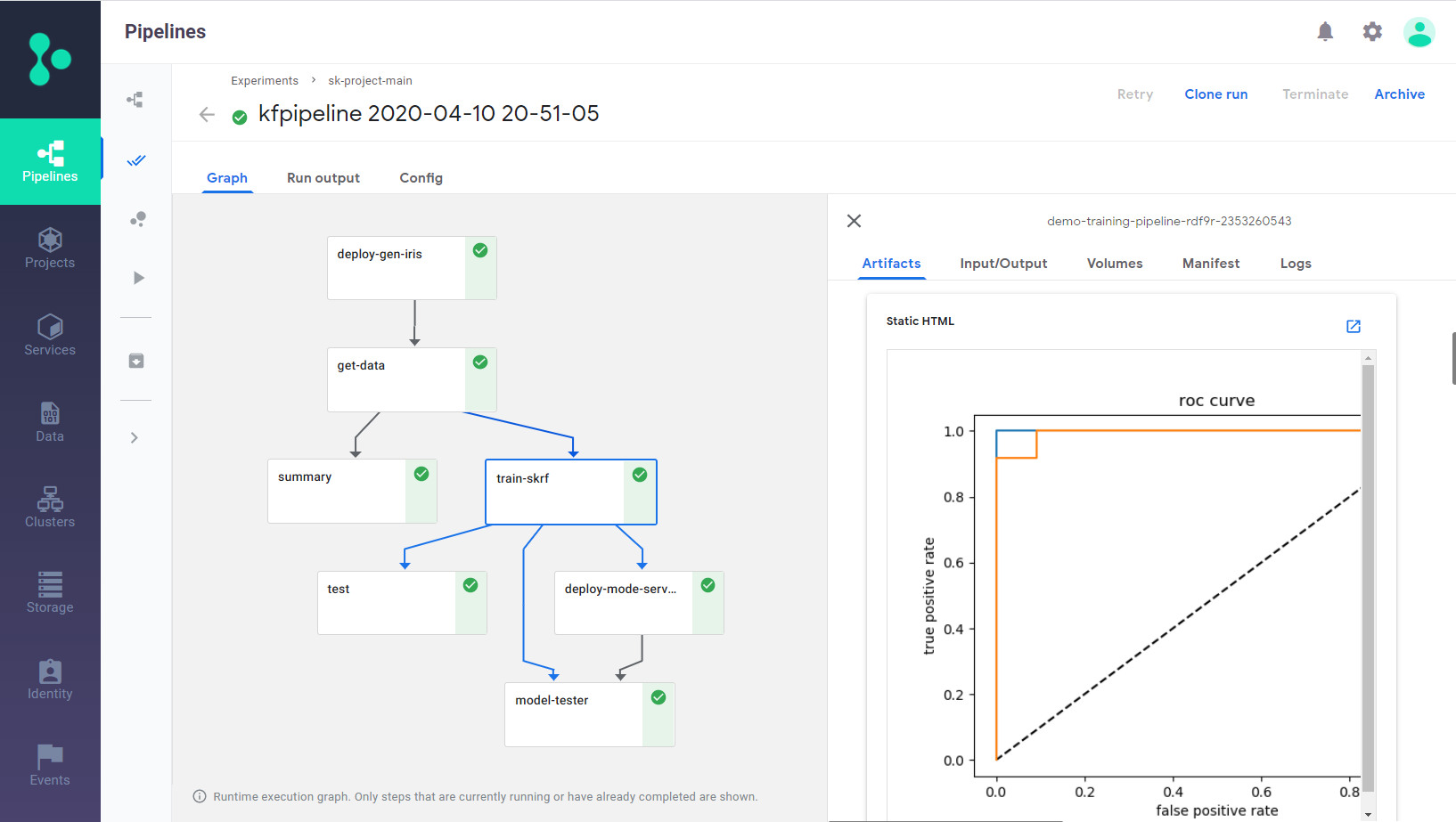

The scikit-learn-pipeline demo demonstrates how to build a full end-to-end automated-ML (AutoML) pipeline using scikit-learn and the UCI Iris data set.

The combined CI/data/ML pipeline includes the following steps:

- Create an Iris data-set generator (ingestion) function.

- Ingest the Iris data set.

- Analyze the data-set features.

- Train and test the model using multiple algorithms (AutoML).

- Deploy the model as a real-time serverless function.

- Test the serverless function's REST API with a test data set.
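The "train and test using multiple algorithms" step can be sketched as a small selection loop. The following is a dependency-free illustration under stated assumptions, not the demo's code: the two toy classifiers stand in for the scikit-learn estimators the demo uses, and the data points are synthetic placeholders rather than Iris measurements.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]
Model = Callable[[Sequence[float]], str]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train_nearest_centroid(train: List[Sample]) -> Model:
    """Fit one centroid per class and predict the closest centroid's label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    centroids = {lbl: [s / counts[lbl] for s in acc] for lbl, acc in sums.items()}
    return lambda x: min(centroids, key=lambda lbl: sq_dist(x, centroids[lbl]))


def train_one_nn(train: List[Sample]) -> Model:
    """Predict the label of the single nearest training sample."""
    return lambda x: min(train, key=lambda s: sq_dist(x, s[0]))[1]


def accuracy(model: Model, test: List[Sample]) -> float:
    """Fraction of test samples that the model labels correctly."""
    return sum(model(f) == lbl for f, lbl in test) / len(test)


def select_best(trainers: Dict[str, Callable[[List[Sample]], Model]],
                train: List[Sample],
                test: List[Sample]) -> Tuple[str, Dict[str, float]]:
    """Train every candidate, score it on the test set, and pick the top scorer."""
    scores = {name: accuracy(fit(train), test) for name, fit in trainers.items()}
    best = max(scores, key=lambda name: scores[name])
    return best, scores


# Synthetic two-class data, for illustration only.
train_set = [([1.0, 1.0], "a"), ([1.2, 0.8], "a"), ([3.0, 3.0], "b"), ([3.2, 2.9], "b")]
test_set = [([0.9, 1.1], "a"), ([3.1, 3.1], "b")]

best, scores = select_best(
    {"nearest_centroid": train_nearest_centroid, "one_nn": train_one_nn},
    train_set,
    test_set,
)
```

The same shape applies when the candidates are real estimators: each one is trained on the same split, scored with the same metric, and only the winner moves on to deployment.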

To run the demo, download the sklearn-project.ipynb notebook into an empty directory and execute the cells sequentially.

The output plots can be viewed as static HTML files in the scikit-learn-pipeline/plots directory.

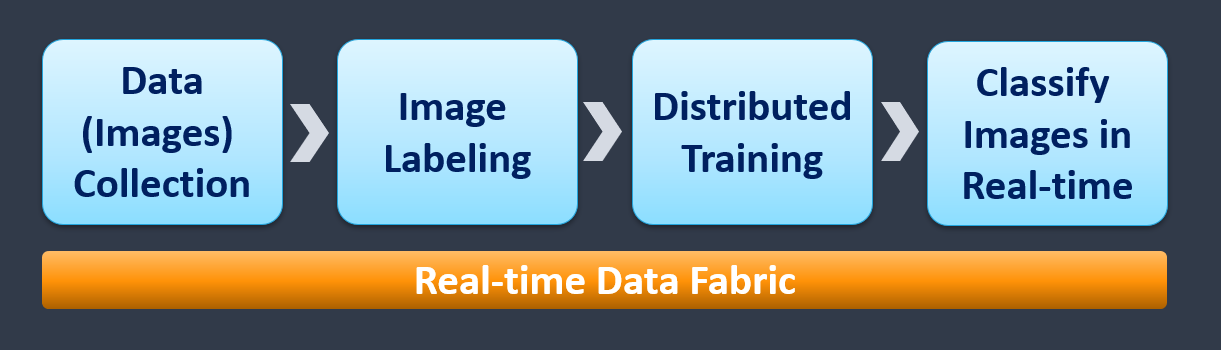

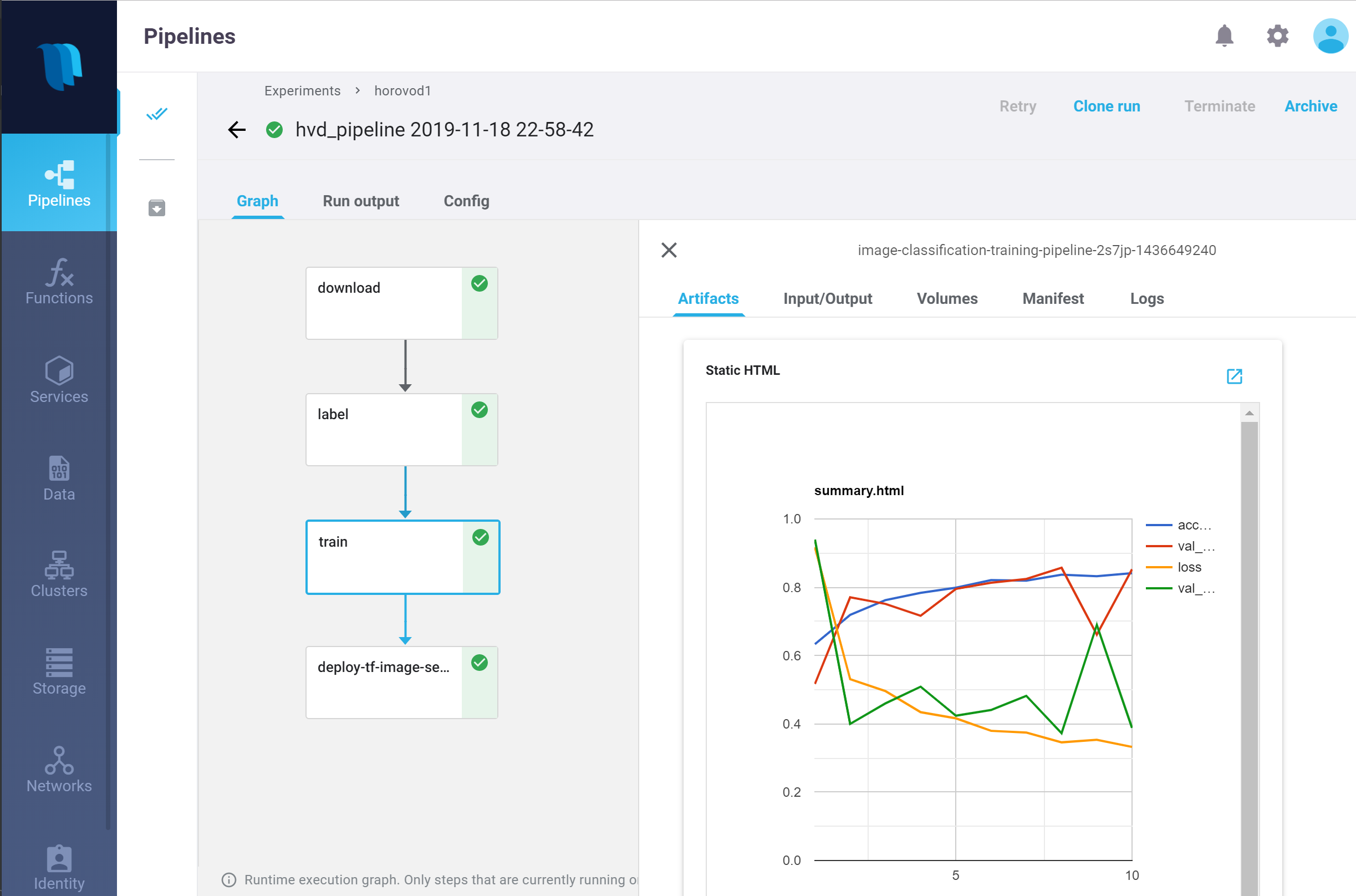

The image-classification-with-distributed-training demo demonstrates an end-to-end image-classification solution using TensorFlow (versions 1 or 2), Keras, Horovod, and Nuclio.

The demo consists of four MLRun and Nuclio functions and a Kubeflow Pipelines orchestration:

- Download: Import an image archive from AWS S3 to your cluster's data store.

- Label: Tag the images based on their name structure.

- Training: Perform distributed training using TensorFlow, Keras, and Horovod.

- Inference: Automate deployment of a Nuclio model-serving function.

Note: The demo supports both TensorFlow versions 1 and 2. There's one shared notebook and two code files — one for each TensorFlow version.
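The labeling step derives each image's tag from its file name. A minimal sketch of that idea follows, assuming a `<label>.<index>.<extension>` naming scheme. The scheme, the function names, and the sample paths are assumptions for illustration and are not taken from the demo's code.

```python
from pathlib import PurePosixPath
from typing import Dict, Iterable


def label_from_name(filename: str, sep: str = ".") -> str:
    """Return the label prefix of a file name, e.g. 'cat' for 'cat.0.jpg'."""
    base = PurePosixPath(filename).name  # drop any directory components
    return base.split(sep, 1)[0].lower()


def label_images(filenames: Iterable[str]) -> Dict[str, str]:
    """Map every file path to the label encoded in its name."""
    return {name: label_from_name(name) for name in filenames}


# Hypothetical file names following the assumed naming scheme.
labels = label_images(["train/cat.0.jpg", "train/dog.12.jpg"])
```

If your archive encodes labels differently (for example, in directory names), only `label_from_name` needs to change.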

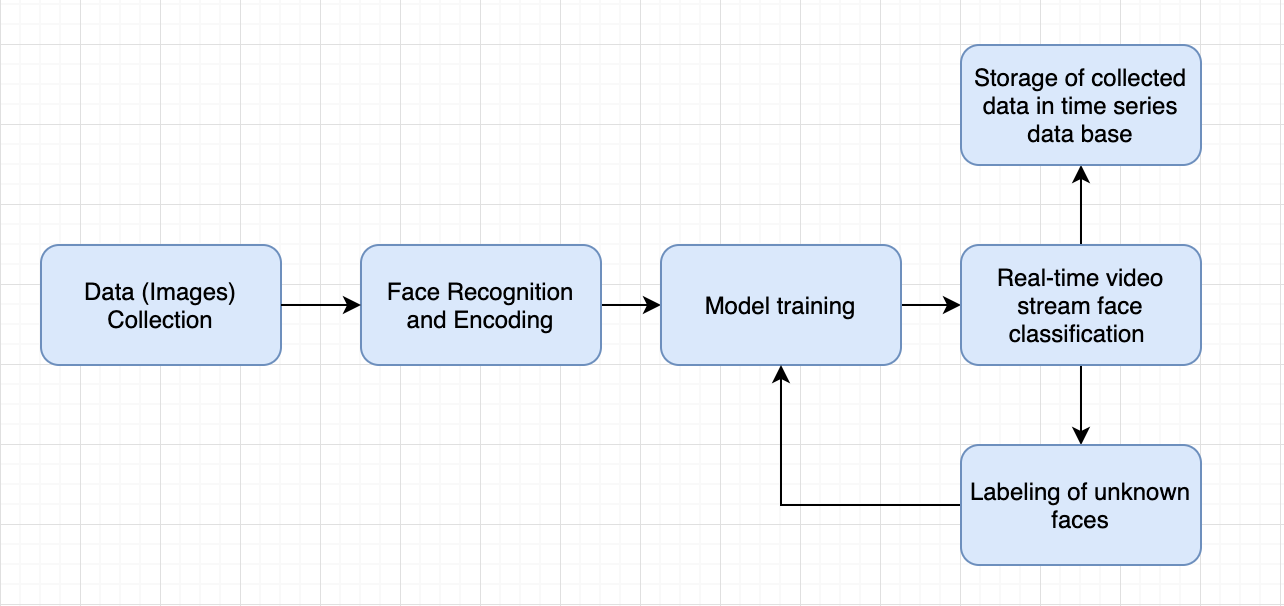

The faces demo demonstrates real-time capture, recognition, and classification of face images over a video stream, as well as location tracking of identities.

This comprehensive demonstration includes multiple components:

- A live image-capture utility.

- Image identification and tracking using OpenCV.

- A labeling application for tagging unidentified faces using Streamlit.

- Model training using PyTorch.

- Automated model deployment using Nuclio.

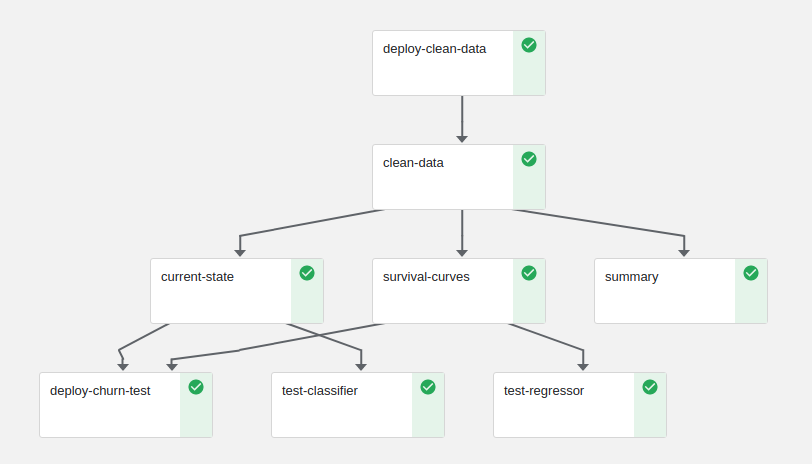

The churn demo demonstrates analysis of customer-churn data using the Kaggle Telco Customer Churn data set, model training and validation using XGBoost, and model serving using real-time Nuclio serverless functions.

The demo consists of a few MLRun and Nuclio functions and a Kubeflow Pipelines orchestration:

- Write custom data encoders for processing raw data and categorizing or "binarizing" various features.

- Summarize the data, examining parameters such as class balance and variable distributions.

- Define parameters and hyperparameters for a generic XGBoost training function.

- Train and test several models using XGBoost.

- Identify the best model for your needs, and deploy it into production as a real-time Nuclio serverless function.

- Test the model server.
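The "binarizing" encoder from the first step can be sketched as a one-hot transform over a categorical column. This is a minimal, dependency-free illustration, not the demo's encoder: the `binarize` helper and the `customer` and `contract` column names and values are placeholders chosen for the example.

```python
from typing import Dict, List


def binarize(rows: List[Dict[str, str]], column: str) -> List[Dict[str, object]]:
    """Replace a categorical column with one 0/1 indicator column per category."""
    categories = sorted({row[column] for row in rows})
    encoded: List[Dict[str, object]] = []
    for row in rows:
        # Copy every other column unchanged.
        out: Dict[str, object] = {k: v for k, v in row.items() if k != column}
        for category in categories:
            out[f"{column}_{category}"] = int(row[column] == category)
        encoded.append(out)
    return encoded


# Placeholder records, for illustration only.
rows = [
    {"customer": "c1", "contract": "monthly"},
    {"customer": "c2", "contract": "yearly"},
]
encoded = binarize(rows, "contract")
```

Each output row keeps its other columns and gains one indicator column per distinct category, which is the numeric form that a gradient-boosting trainer such as XGBoost expects.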

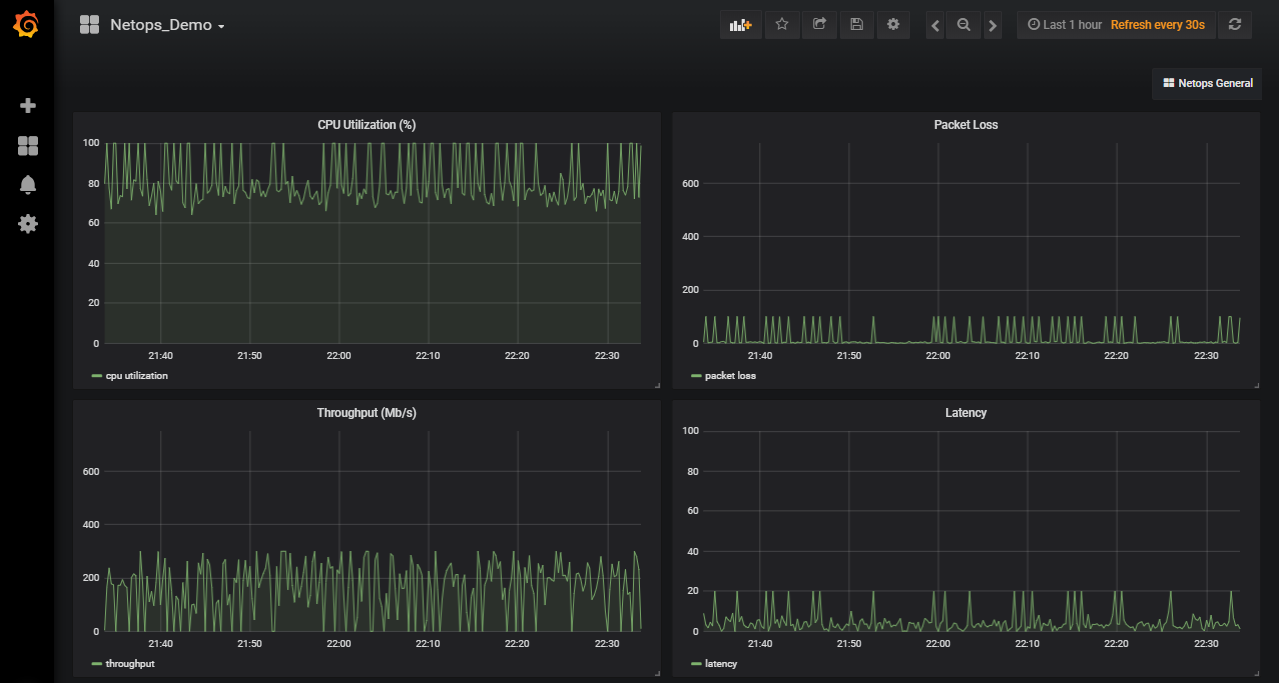

The NetOps demo demonstrates ingestion of telemetry/Network Operations (NetOps) data from a simulator or live stream, feature exploration, data preparation (aggregation), model training, and automated model deployment.

The demo is maintained in a separate Git repository and also demonstrates how to manage a project life cycle using Git.
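The data-preparation (aggregation) step mentioned above typically smooths raw telemetry over a sliding window before training. Here is a minimal sketch of a rolling mean, assuming a single numeric metric sampled at regular intervals. The function name, window size, and sample values are illustrative and do not come from the NetOps demo.

```python
from collections import deque
from typing import Deque, Iterable, Iterator


def rolling_mean(values: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the last `window` values after each new sample."""
    if window < 1:
        raise ValueError("window must be at least 1")
    buf: Deque[float] = deque(maxlen=window)
    total = 0.0
    for value in values:
        if len(buf) == window:
            total -= buf[0]  # the oldest sample is evicted by the append below
        buf.append(value)
        total += value
        yield total / len(buf)


# Made-up latency samples, for illustration only.
latency = [10.0, 20.0, 30.0, 40.0]
smoothed = list(rolling_mean(latency, window=2))
```

Because the function is a generator, it works equally well on a finite batch from a simulator and on an unbounded live stream.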