This repository is based on alpha-omok by RLKorea, which is the original code for the Omok version.

AlphaZero is a reinforcement learning algorithm that effectively combines MCTS (Monte Carlo Tree Search) with Actor-Critic. The RL model is implemented in PyTorch.
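To illustrate how the tree search and the network interact, here is a minimal sketch of the PUCT selection rule commonly used in AlphaZero-style MCTS: each child is scored by its mean value (the critic side) plus an exploration bonus weighted by the policy prior (the actor side). The function name `puct_select`, the argument layout, and the default `c_puct` are assumptions made for this example and are not taken from this repository.

```python
import math


def puct_select(priors, visit_counts, value_sums, c_puct=5.0):
    """Return the index of the child with the highest Q + U score.

    priors:       policy-network probability for each child
    visit_counts: number of times each child has been visited
    value_sums:   sum of backed-up values for each child
    c_puct:       exploration constant (hypothetical default)
    """
    total_visits = sum(visit_counts)
    best_index, best_score = -1, -math.inf
    for i, (p, n, w) in enumerate(zip(priors, visit_counts, value_sums)):
        # Q: mean value of the child, 0 if it has never been visited.
        q = w / n if n > 0 else 0.0
        # U: prior-weighted exploration bonus. The +1 under the square root
        # is an assumption so that priors still matter before any visit.
        u = c_puct * p * math.sqrt(total_visits + 1) / (1 + n)
        if q + u > best_score:
            best_index, best_score = i, q + u
    return best_index
```

Before any child has been visited the choice follows the prior, and as visit counts grow the bonus shrinks so the mean value dominates.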

Usage

- Install PyTorch by following the instructions on the PyTorch website.

- pip install pygame

- python main.py (for training, default: 9x9 board)

- python eval_main.py (play the game against the trained AlphaZero agent)

This project has two objectives:

- Implement AlphaZero on Connect6

- Optimize the code

- ID-based implementation

- Description of the parameters

- How to change the environment

- How to load the saved model

- How to use eval_main

- Log file

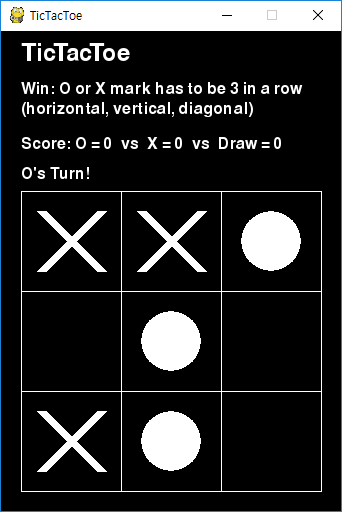

This folder is for implementing MCTS in Tic-Tac-Toe. If you want to study MCTS only, please check the files in this folder.

The files in the folder are described below (files in bold contain the implementation code).

- env: Tic-Tac-Toe environment code (made with pygame)

- mcts_guide: MCTS does not play the game itself; it only recommends moves.

- mcts_vs: The user can play against the MCTS algorithm.

- utils: helper functions used by the algorithm.
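For readers who want to study MCTS only, the sketch below shows a plain MCTS (UCB1 selection, random rollouts) that recommends a move on a Tic-Tac-Toe board, in the spirit of mcts_guide. It is independent of the files in this folder: the board encoding (a flat sequence of nine cells holding 1, -1 or 0) and all names such as `Node` and `mcts_recommend` are assumptions made for this example.

```python
import math
import random

WIN_LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
             (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 1 or -1 if that player has three in a row, otherwise 0."""
    for a, b, c in WIN_LINES:
        if board[a] != 0 and board[a] == board[b] == board[c]:
            return board[a]
    return 0


def legal_moves(board):
    return [i for i, v in enumerate(board) if v == 0]


class Node:
    def __init__(self, board, player, parent=None, move=None):
        self.board = board      # tuple of 9 cells: 1, -1 or 0
        self.player = player    # player to move at this node
        self.parent = parent
        self.move = move        # move that led to this node
        self.children = []
        # Terminal positions have nothing left to expand.
        self.untried = [] if winner(board) else legal_moves(board)
        self.visits = 0
        self.wins = 0.0         # score for the player who moved into this node


def ucb1(child, parent_visits, c=1.4):
    exploit = child.wins / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore


def rollout(board, player):
    """Play uniformly random moves to the end; return the winner (0 = draw)."""
    board = list(board)
    while winner(board) == 0 and legal_moves(board):
        board[random.choice(legal_moves(board))] = player
        player = -player
    return winner(board)


def mcts_recommend(board, player, n_iter=2000):
    """Recommend a move for `player` on a non-terminal board."""
    root = Node(tuple(board), player)
    for _ in range(n_iter):
        node = root
        # 1. Selection: descend while the node is fully expanded.
        while not node.untried and node.children:
            node = max(node.children, key=lambda ch: ucb1(ch, node.visits))
        # 2. Expansion: add one untried child.
        if node.untried:
            move = node.untried.pop()
            new_board = list(node.board)
            new_board[move] = node.player
            child = Node(tuple(new_board), -node.player, node, move)
            node.children.append(child)
            node = child
        # 3. Simulation: random playout from the new position.
        result = rollout(node.board, node.player)
        # 4. Backpropagation: credit the player who moved into each node.
        while node is not None:
            node.visits += 1
            if result == -node.player:
                node.wins += 1.0
            elif result == 0:
                node.wins += 0.5
            node = node.parent
    # The most visited child is the recommended move.
    return max(root.children, key=lambda ch: ch.visits).move
```

Calling `mcts_recommend([1, 1, 0, -1, -1, 0, 0, 0, 0], player=1)` asks for a recommendation in a position where player 1 can complete the top row; the function only returns a move index and leaves playing it to the caller.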

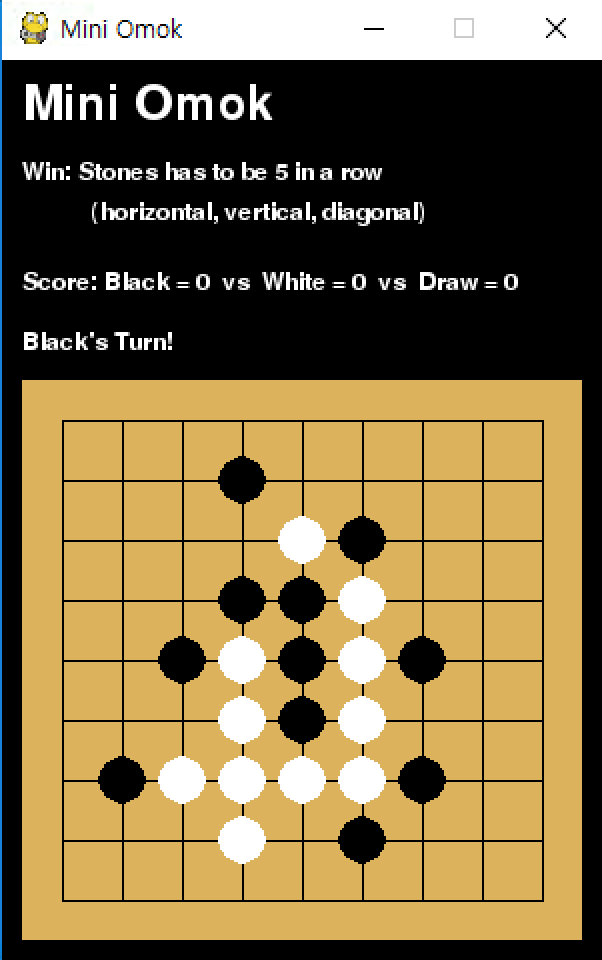

This folder implements the AlphaZero algorithm in the omok environment. There are two versions of omok (env_small: 9x9, env_regular: 15x15). The image above shows a sample 9x9 omok game.
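As a rough illustration of what an omok environment has to decide on either board size, the sketch below checks whether the last stone placed completes a line of five. It is not the code from env_small or env_regular: the function name `has_five`, the list-of-lists board encoding, and the choice to count lines longer than five as a win are all assumptions made for this example.

```python
def has_five(board, row, col):
    """Return True if the stone at (row, col) is part of a line of 5 or more.

    board is a square list of lists holding 0 (empty), 1 or -1 (stones).
    Counting lines longer than five as a win is an assumption; some omok
    rule sets treat them differently.
    """
    size = len(board)
    stone = board[row][col]
    if stone == 0:
        return False
    # Horizontal, vertical, and the two diagonal directions.
    for dr, dc in ((0, 1), (1, 0), (1, 1), (1, -1)):
        count = 1
        # Walk both ways from the stone along this direction.
        for sign in (1, -1):
            r, c = row + sign * dr, col + sign * dc
            while 0 <= r < size and 0 <= c < size and board[r][c] == stone:
                count += 1
                r += sign * dr
                c += sign * dc
        if count >= 5:
            return True
    return False


# Usage on a 9x9 board: five stones in row 4, columns 2 to 6.
board = [[0] * 9 for _ in range(9)]
for c in range(2, 7):
    board[4][c] = 1
print(has_five(board, 4, 4))  # True
```

Because the board size is read from the board itself, the same check works for both 9x9 and 15x15 boards.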

The files in the folder are described below (files in bold contain the implementation code).

- eval_main: code for evaluating the algorithm, both on a local PC and on the web

- main: main training code for AlphaZero

- model: Network model (PyTorch)

- agents: Agent and MCTS algorithm

- utils: helper functions used by the algorithm

- WebAPI: implementation of the web API