IROS Paper | arXiv | Project Website

```bibtex
@article{zha2021contrastively,
  title={Contrastively Learning Visual Attention as Affordance Cues from Demonstrations for Robotic Grasping},
  author={Zha, Yantian and Bhambri, Siddhant and Guan, Lin},
  journal={The IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  year={2021}
}
```

This repository includes code for reproducing the experiments in Table 1 of the paper:

- Ours (Full Model): Siamese + Coupled Triplet Loss

- Ours (Ablation): Siamese + Normal Triplet Loss [To Add]

- Ours (Ablation): Without Contrastive Learning [To Add]

- Baseline [To Add]
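The first two variants above share the Siamese network and differ in the triplet loss. For reference, here is a minimal sketch of the standard triplet margin loss, assuming that is what "Normal Triplet Loss" refers to. The coupled triplet loss used by the full model is implemented in this repository and is not reproduced here; the function name and the default margin below are hypothetical.

```python
import math


def triplet_margin_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss for a single (anchor, positive, negative) triple.

    The loss is zero once the anchor is closer to the positive than to the
    negative by at least `margin` (Euclidean distance); otherwise it grows
    linearly with the violation.
    """
    d_pos = math.dist(anchor, positive)
    d_neg = math.dist(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


if __name__ == "__main__":
    anchor, positive = (0.0, 0.0), (1.0, 0.0)
    # Negative far enough away: the margin is satisfied.
    print(triplet_margin_loss(anchor, positive, (3.0, 0.0)))  # prints 0.0
    # Negative too close: the loss is positive.
    print(triplet_margin_loss(anchor, positive, (1.5, 0.0)))  # prints 0.5
```

In PyTorch, `torch.nn.TripletMarginLoss` computes essentially the same quantity over batches of embeddings (averaged by default).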

Dependencies:

- pybullet

- PyTorch (make sure that the torch version matches your CUDA version; otherwise, PyTorch may still install, but learning performance could be abnormal)

- ray

- opencv-python==4.5.2.52 (similar versions around 4.5 may also be fine)

- easydict

- matplotlib

- wandb (removable dependency)

- visdom (removable dependency)
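A quick sanity check of the environment can be sketched as below. The script name is up to you and the helper names are hypothetical. It only reports which packages from the list above are importable and which CUDA version your PyTorch build targets; compare that version with your local CUDA setup yourself, since the script does not verify compatibility.

```python
import importlib.util

# Import name -> package name as listed in this README.
REQUIRED = {
    "pybullet": "pybullet",
    "torch": "PyTorch",
    "ray": "ray",
    "cv2": "opencv-python",
    "easydict": "easydict",
    "matplotlib": "matplotlib",
}
OPTIONAL = {"wandb": "wandb", "visdom": "visdom"}


def missing(modules):
    """Return the package names whose import name cannot be found."""
    return [pkg for mod, pkg in modules.items()
            if importlib.util.find_spec(mod) is None]


def report_torch_cuda():
    """Print the CUDA version PyTorch was built for and whether a GPU is usable."""
    import torch
    print("torch", torch.__version__,
          "| built for CUDA:", torch.version.cuda,
          "| CUDA available:", torch.cuda.is_available())


if __name__ == "__main__":
    print("missing required:", missing(REQUIRED) or "none")
    print("missing optional:", missing(OPTIONAL) or "none")
    if importlib.util.find_spec("torch") is not None:
        report_torch_cuda()
```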

Please download the data here, then change the paths correspondingly in `src/grasp_bc_13_a.json`.

1. Modify `trainFolderDir`, `testFolderDir`, and `obj_folder` in `src/grasp_bc_13_a.json`; modify `result_path` and `model_path` in all training scripts (e.g., `trainGrasp_full.py`).

2. Train the full model with the coupled triplet loss by running `python3 trainGrasp_full.py src/grasp_bc_13_a`. If you train the model on a multi-GPU server, you can run `CUDA_VISIBLE_DEVICES=1 nohup python3 trainGrasp_full.py src/grasp_bc_13_a > nohup.out &` and monitor training with `tail -f nohup.out`.

3. To visualize grasping rollouts, go to the `result_path` you set in step 1, where you can check results by training epoch or mug ID.
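If you prefer to set the dataset paths from step 1 programmatically, a minimal sketch follows. It assumes `src/grasp_bc_13_a.json` is a JSON object with `trainFolderDir`, `testFolderDir`, and `obj_folder` as top-level keys; the helper names and example paths are hypothetical. `result_path` and `model_path` live in the training scripts and still need to be edited by hand.

```python
import json
from pathlib import Path

# Keys named in this README; assumed to sit at the top level of the JSON file.
DATASET_KEYS = ("trainFolderDir", "testFolderDir", "obj_folder")


def set_dataset_paths(config, train_dir, test_dir, obj_dir):
    """Return a copy of `config` with the three dataset paths replaced.

    All other settings are left untouched.
    """
    updated = dict(config)
    for key, value in zip(DATASET_KEYS, (train_dir, test_dir, obj_dir)):
        updated[key] = value
    return updated


def update_config_file(config_file, train_dir, test_dir, obj_dir):
    """Hypothetical helper: rewrite the JSON config in place with new paths."""
    path = Path(config_file)
    config = json.loads(path.read_text())
    config = set_dataset_paths(config, train_dir, test_dir, obj_dir)
    path.write_text(json.dumps(config, indent=4))
    return config


if __name__ == "__main__":
    # Placeholder paths: point them at wherever you unpacked the data.
    update_config_file(
        "src/grasp_bc_13_a.json",
        train_dir="/data/grasp/train",
        test_dir="/data/grasp/test",
        obj_dir="/data/grasp/objects",
    )
```

Note that `json.dumps` rewrites the file's whitespace, although key order is preserved.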

Frequently asked questions:

- Where is the implementation of the coupled triplet loss?

  Please check this line.

-

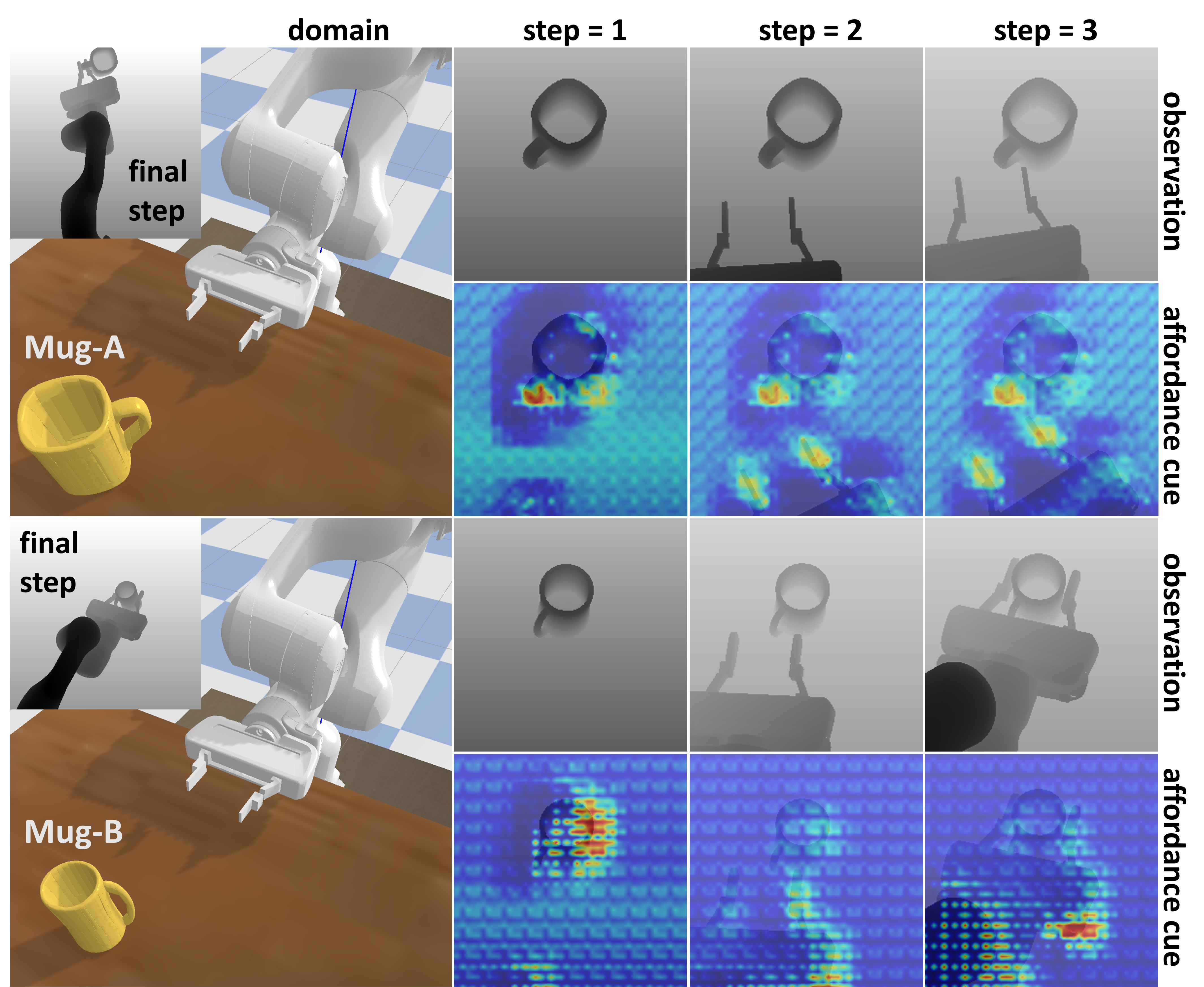

What are the mugs used and how they are different from each other?

Please check this line, which lists three sets of mug model indices that we used for the three affordance categories: body-graspable, handle-left-right-sides-graspable, and handle-front-back-sides-graspable. Note that we consider a challenging case that the three sets of mug indices can be overlapping.

- Why do the observation images in the demonstration trajectory folders sometimes differ in brightness?

  While collecting those trajectories, we added a grayscale normalization step to the code that visualizes recorded demonstrations, so images saved before and after that change look different. Since we only changed the visualization code, the trajectory data are fine to use.
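The effect described in the last answer can be illustrated with a minimal sketch, assuming the normalization is a per-image min-max stretch (the exact normalization used in this repository may differ). The stretch changes only what is shown, so a dim observation is displayed much brighter after the change while the stored values stay the same. `minmax_normalize` and `mean` are hypothetical names.

```python
def minmax_normalize(gray, out_max=255):
    """Linearly stretch a grayscale image (list of rows) to span [0, out_max].

    Returns a new image; the input observation is not modified.
    """
    flat = [p for row in gray for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: nothing to stretch
        return [[0] * len(row) for row in gray]
    scale = out_max / (hi - lo)
    return [[round((p - lo) * scale) for p in row] for row in gray]


def mean(gray):
    """Average pixel value, used here as a crude brightness measure."""
    flat = [p for row in gray for p in row]
    return sum(flat) / len(flat)


# A dim observation, as it might be stored in a trajectory.
observation = [[10, 20], [30, 40]]
shown_before = observation                   # visualized as-is
shown_after = minmax_normalize(observation)  # visualized after the change

print(mean(shown_before), mean(shown_after))  # prints 25.0 127.5
```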

The first author is sincerely grateful for permission to use some code from this repo.