Link to article -> Article

This setup uses three roles: k8s_master_conf, k8s_worker_conf, and launch_instances.

$ sudo chmod 400 <PRIVATE-KEY.pem>

$ sudo mkdir -p /etc/ansible && sudo mv ansible.cfg /etc/ansible/

$ ansible-playbook setup.yml

Variables used in the launch_instances role:

Variable |

description |

|---|---|

| reg | region |

| img_id | AMI id |

| i_type | EC2 instance type |

| vpcsi | vpc-subnet id |

| key | private key name for instance EC2 |

| sg_group | security group name |

| kube_master_name | name of EC2 instance for master node |

| aws_ak | AWS access key |

| aws_sk | AWS secret key |

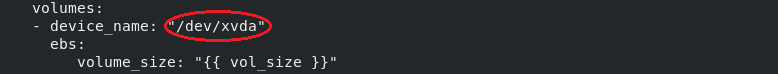

| vol_size | volume size for EC2 instance (in Gib) |

| kube_worker_name1 | name of EC2 instance for worker node-1 |

| kube_worker_name2 | name of EC2 instance for worker node-2 |
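
As an illustration, the variables listed for the launch_instances role might be set in a vars file along these lines. This is only a sketch: the file path is an assumption about the role layout, and every value is a placeholder to replace with your own.

```yaml
# roles/launch_instances/vars/main.yml (assumed location, not confirmed by the role)
# All values below are illustrative placeholders.
reg: "ap-south-1"
img_id: "ami-xxxxxxxxxxxxxxxxx"
i_type: "t2.medium"
vpcsi: "subnet-xxxxxxxx"
key: "<PRIVATE-KEY>"
sg_group: "k8s-sg"
kube_master_name: "k8s-master"
kube_worker_name1: "k8s-worker-1"
kube_worker_name2: "k8s-worker-2"
vol_size: 8                   # GiB
aws_ak: "<AWS-ACCESS-KEY>"
aws_sk: "<AWS-SECRET-KEY>"
```

Since `aws_ak` and `aws_sk` are credentials, it may be worth keeping this file out of version control or encrypting it with `ansible-vault encrypt`.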

In the launch_instances role, set the device name that matches the AMI you are using.

Allocate at least 8 GiB of volume for each node.
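
For reference, a volume definition could look roughly like the task below. It is a sketch that assumes the role launches instances with the `amazon.aws.ec2_instance` module, which may not be what the role actually uses. The device name `/dev/xvda` is only an example (it is the usual root device on Amazon Linux 2, while many Ubuntu and RHEL AMIs use `/dev/sda1`), so check the root device name of your AMI first.

```yaml
# Sketch only: assumes the amazon.aws.ec2_instance module is used by the role.
- name: Launch the master node
  amazon.aws.ec2_instance:
    name: "{{ kube_master_name }}"
    region: "{{ reg }}"
    image_id: "{{ img_id }}"
    instance_type: "{{ i_type }}"
    vpc_subnet_id: "{{ vpcsi }}"
    key_name: "{{ key }}"
    security_group: "{{ sg_group }}"
    aws_access_key: "{{ aws_ak }}"
    aws_secret_key: "{{ aws_sk }}"
    volumes:
      - device_name: /dev/xvda        # example; must match the AMI's root device
        ebs:
          volume_size: "{{ vol_size }}"   # in GiB, at least 8
          delete_on_termination: true
    state: running
```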

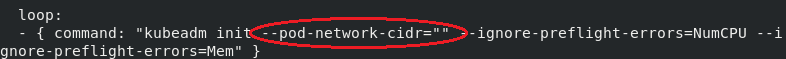

Inside the k8s_master_config role, specify your pod network CIDR.
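
A minimal sketch of how the CIDR might be wired in, assuming the role initializes the control plane with `kubeadm init`. The variable name `pod_network_cidr` is hypothetical, and the value must match what your network add-on expects (for example, Flannel defaults to `10.244.0.0/16` and Calico to `192.168.0.0/16`).

```yaml
# vars (hypothetical variable name, not taken from the role)
pod_network_cidr: "10.244.0.0/16"

# task (sketch): pass the CIDR to kubeadm via its --pod-network-cidr flag
- name: Initialize the control plane
  ansible.builtin.command: >
    kubeadm init --pod-network-cidr={{ pod_network_cidr }}
  args:
    creates: /etc/kubernetes/admin.conf   # skip if the node is already initialized
```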

$ ansible-playbook setup-master.yml

$ ansible-playbook setup-worker.yml

Log in to the master node and get the join command:

$ sudo kubeadm token create --print-join-command

Now log in to each worker node and run the join command there.
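
If you would rather not run the join by hand, the same two steps can be sketched as a short playbook. This is not part of the roles described here. The inventory group names `master` and `workers` are assumptions, so adjust them to match your inventory.

```yaml
# Sketch only: group names "master" and "workers" are assumed.
- hosts: master
  become: true
  tasks:
    - name: Generate the join command on the master
      ansible.builtin.command: kubeadm token create --print-join-command
      register: join_cmd
      changed_when: false

- hosts: workers
  become: true
  tasks:
    - name: Join each worker to the cluster
      ansible.builtin.command: "{{ hostvars[groups['master'][0]].join_cmd.stdout }}"
      args:
        creates: /etc/kubernetes/kubelet.conf   # skip nodes that have already joined
```

Afterwards, `kubectl get nodes` on the master should list the workers once they have registered.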