Lip2Vec: Efficient and Robust Visual Speech Recognition via Latent-to-Latent Visual to Audio Representation Mapping

This repository contains a PyTorch implementation and pretrained models for Lip2Vec, a novel method for Visual Speech Recognition. For a deeper understanding of the method, refer to the paper Lip2Vec: Efficient and Robust Visual Speech Recognition via Latent-to-Latent Visual to Audio Representation Mapping.

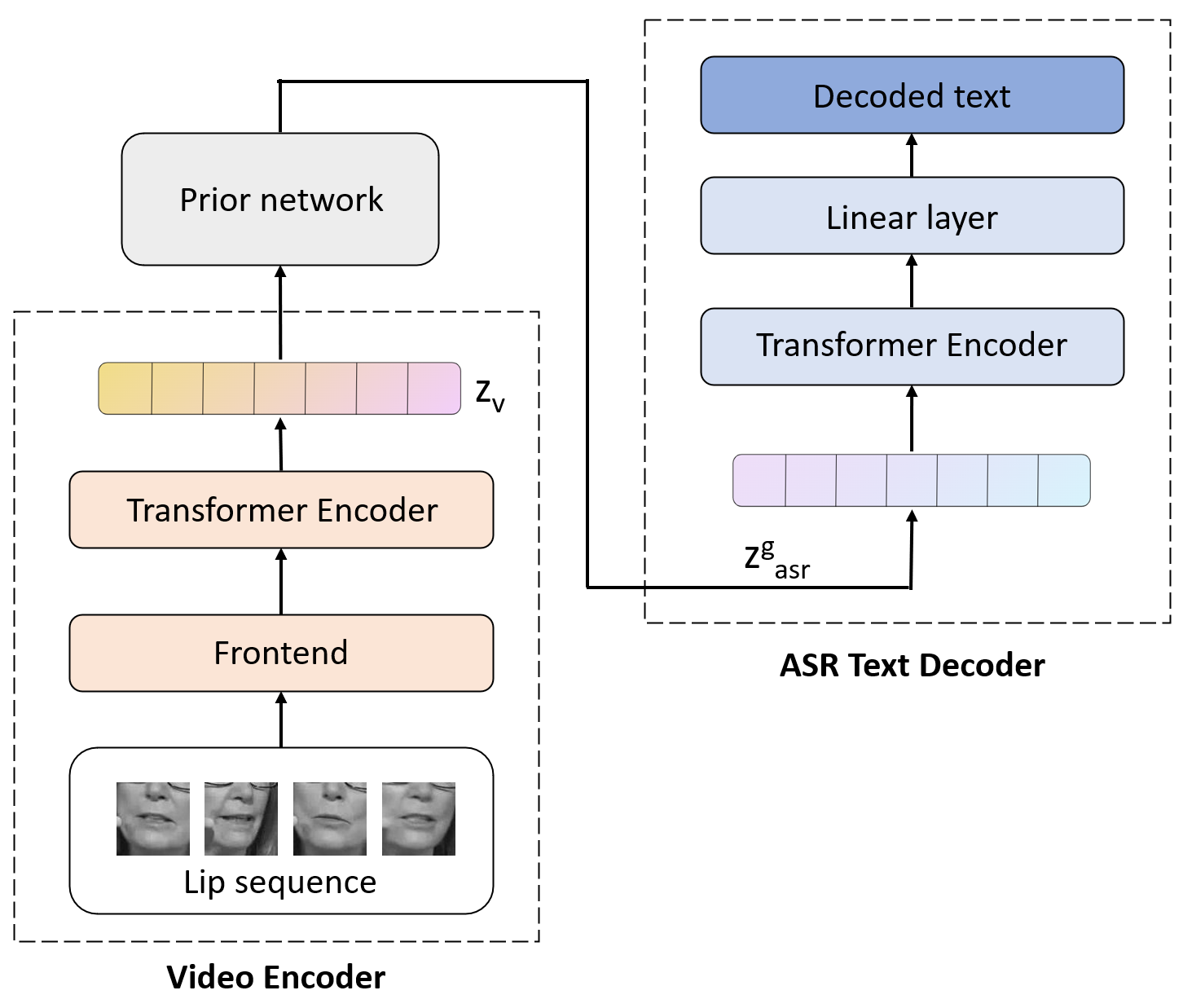

The video representations computed by the video encoder are fed to our learned prior network, which maps them to synthesized audio representations. These representations are then passed through the encoder and linear layer of the wav2vec 2.0 model to predict the text. Note that no audio input is needed at test time: the audio representations are synthesized from video alone.
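The last step of the pipeline above, turning the per-frame outputs of the linear layer into text, can be illustrated with a minimal sketch. It assumes CTC-style greedy decoding, as commonly used with fine-tuned wav2vec 2.0 models. The vocabulary, the blank symbol `<pad>`, the word separator `|`, and the function name `ctc_greedy_decode` are hypothetical and are not taken from this repository.

```python
from itertools import groupby
from typing import Sequence

BLANK = "<pad>"   # assumed CTC blank symbol (hypothetical)
WORD_SEP = "|"    # assumed word-boundary symbol (hypothetical)


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]],
                      vocab: Sequence[str]) -> str:
    """Greedy CTC decoding: argmax per frame, collapse repeats, drop blanks."""
    # 1. Pick the highest-scoring vocabulary index for every frame.
    best = [max(range(len(row)), key=row.__getitem__) for row in frame_scores]
    # 2. Collapse consecutive repeats of the same index.
    collapsed = [idx for idx, _ in groupby(best)]
    # 3. Remove blanks and map the remaining indices to characters.
    chars = [vocab[i] for i in collapsed if vocab[i] != BLANK]
    return "".join(chars).replace(WORD_SEP, " ").strip()


# Toy vocabulary and scores, for illustration only.
VOCAB = ["<pad>", "|", "H", "I"]
scores = [
    [0.1, 0.0, 0.8, 0.1],    # H
    [0.1, 0.0, 0.8, 0.1],    # H (repeat, collapsed)
    [0.9, 0.0, 0.05, 0.05],  # blank
    [0.1, 0.0, 0.1, 0.8],    # I
    [0.1, 0.7, 0.1, 0.1],    # word separator
]
print(ctc_greedy_decode(scores, VOCAB))  # prints "HI"
```

In the actual model the scores would come from the linear layer applied to the encoder outputs; here they are hard-coded so the decoding logic can be read in isolation.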

| arch | params | WER (%) | Video backbone | download |
|---|---|---|---|---|
| Lip2Vec-Large Low-Resource | 43M | 30.2 | AV-HuBERT Large | weights |
| Lip2Vec-Base Low-Resource | 43M | 42.6 | AV-HuBERT Base | weights |
| Lip2Vec-Large High-Resource | 76M | 26.0 | AV-HuBERT Large | weights |
| Lip2Vec-Base High-Resource | 76M | 34.9 | AV-HuBERT Base | weights |
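For readers unfamiliar with the metric in the table, word error rate (WER) is the word-level edit distance between the reference and the predicted transcript, divided by the number of reference words. The sketch below is a generic illustration of that definition, not the evaluation code of this repository. The function name `word_error_rate` and the example sentences are made up for the example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Levenshtein distance over words, keeping only one row of the DP table.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(f"{100 * wer:.1f}%")  # prints "33.3%"
```

Corpus-level WER is usually computed by summing the errors and the reference word counts over all utterances before dividing, rather than by averaging per-utterance values.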

Clone the repository:

    git clone https://github.com/YasserdahouML/Lip2Vec.git
    cd Lip2Vec

Create and activate the conda environment:

    conda create -y -n lip2vec python=3.9.5
    conda activate lip2vec

Install AV-HuBERT and fairseq:

    git clone https://github.com/facebookresearch/av_hubert.git
    cd av_hubert
    git submodule init
    git submodule update
    cd fairseq
    pip install --editable ./

Install the Python requirements:

    pip install -r requirements.txt

To download the AV-HuBERT weights, use this repo. Available weights:

- AV-HuBERT Large: LRS3 + VoxCeleb2 (En), No finetuning

- AV-HuBERT Base: LRS3 + VoxCeleb2 (En), No finetuning

Use the following command to perform inference on the LRS3 dataset.

    torchrun --nproc_per_node=4 main_test.py \
        --lrs3_path=[data_path] \
        --model_path=[prior_path] \
        --hub_path=[av-hubert_path]

- data_path: Path to the directory containing the LRS3 test set videos
- prior_path: Path to the prior network checkpoint
- av-hubert_path: Path to the AV-HuBERT weights
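If you prefer to launch inference from a script, the command can be assembled programmatically. The sketch below only builds the argument list from the flags documented above. The helper name `build_inference_command` and the example paths are hypothetical placeholders, not files shipped with this repository. `--nproc_per_node` sets how many processes `torchrun` starts on the node, typically one per GPU.

```python
import shlex
from typing import List


def build_inference_command(lrs3_path: str, model_path: str, hub_path: str,
                            nproc_per_node: int = 4) -> List[str]:
    """Assemble the torchrun invocation for main_test.py as an argument list."""
    if nproc_per_node < 1:
        raise ValueError("nproc_per_node must be at least 1")
    return [
        "torchrun",
        f"--nproc_per_node={nproc_per_node}",
        "main_test.py",
        f"--lrs3_path={lrs3_path}",    # directory with the LRS3 test set videos
        f"--model_path={model_path}",  # prior network checkpoint
        f"--hub_path={hub_path}",      # AV-HuBERT weights
    ]


# Placeholder paths, for illustration only.
cmd = build_inference_command(
    lrs3_path="data/lrs3/test",
    model_path="checkpoints/prior.pt",
    hub_path="checkpoints/av_hubert_large.pt",
)
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the repository root to start the run.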

This repository builds on av-hubert, vsr, and detr.

    @inproceedings{djilali2023lip2vec,
      title={Lip2Vec: Efficient and Robust Visual Speech Recognition via Latent-to-Latent Visual to Audio Representation Mapping},
      author={Djilali, Yasser Abdelaziz Dahou and Narayan, Sanath and Boussaid, Haithem and Almazrouei, Ebtessam and Debbah, Merouane},
      booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
      pages={13790--13801},
      year={2023}
    }