
Python

Python is a programming language that lets you work quickly and integrate systems more effectively.

DroneKit

The DroneKit-Python API allows developers to create Python apps that communicate with vehicles over MAVLink. It provides programmatic access to a connected vehicle's telemetry, state and parameter information, and enables both mission management and direct control over vehicle movement and operations.

OpenCV

OpenCV (Open Source Computer Vision Library) is a library of programming functions mainly aimed at real-time computer vision.

Pymavlink

A Python implementation of the MAVLink communication protocol.

Pyrealsense2

The Python wrapper for the Intel RealSense SDK, used to access and extract data from the Intel RealSense D435i depth camera.

Tkinter

Python's standard GUI package, used to build the graphical interface for navigation monitoring.

The following elements were used to assemble the drone:

- Readytosky 40A ESC 2-4S brushless ESC electronic speed controller 5V/3A BEC

- Pixhawk 5.3V BEC XT60 Power Module Connectors for APM2.8 2.6 Quadcopter

- Lutions Damping Plate Fiberglass Flight Controller Anti-vibration

The following YouTube link shows a tutorial on how this drone was assembled.

YouTube

Before being integrated with the navigation system, the assembled drone looks as follows:

These YouTube videos show how it behaves in flight.

The following components were used for the navigation system:

- NVIDIA Jetson Nano Developer Kit

- Wireless-AC8265 - Dual-mode wireless NIC module for Jetson Nano Developer Kit M.2 NGFF

- Intel RealSense - Depth camera D435i

An MDF structure was built to mount these components on the drone, integrating all these technologies into a single system.

The experimental drone with the complete system looks as follows.

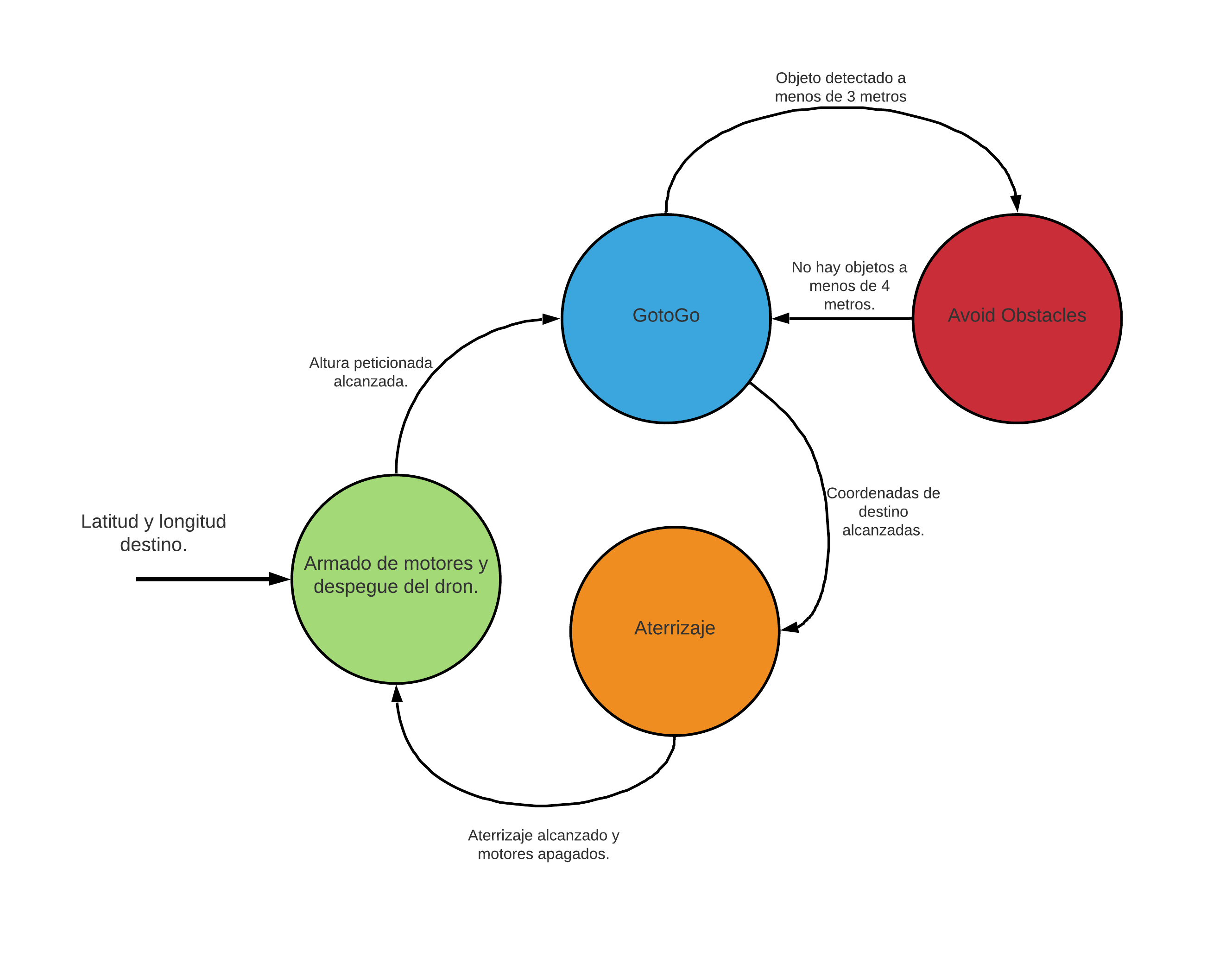

A simple navigation system was developed to direct the UAV to a previously defined geographic coordinate. The navigation script is in charge of taking off, orienting the drone, moving it to the destination point and landing it there.

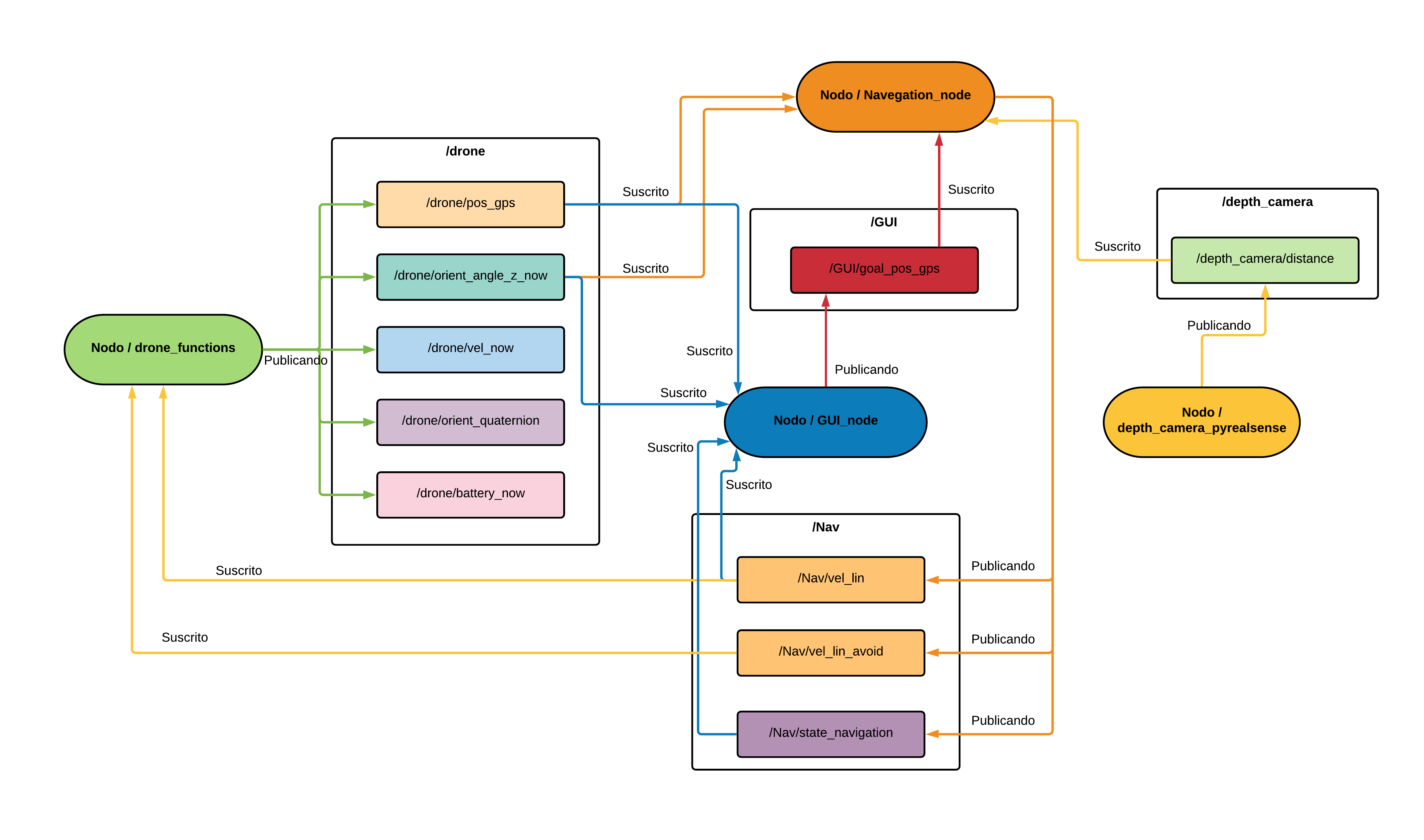

This algorithm is the core of the drone navigation. Like the other scripts, it is implemented as a ROS node in Python 2.7, and it subscribes to all the topics published by the other nodes, since its operation depends on all the information it receives.

The program has two navigation methods. The first, "GotoGo", orients the drone and directs it to a point on Earth (latitude and longitude) previously requested by the user. This method depends on the drone's current orientation and GPS position, both delivered by the drone functions node.
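The project does not show how GotoGo computes the direction to the goal. A common approach, assumed here, is to compute the great-circle distance and initial bearing from the current GPS fix to the target with the haversine formula, then compare that bearing with the drone's current heading to get the yaw correction. The function names below are hypothetical; this is a sketch, not the project's code.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in metres


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle distance (m) and initial bearing (deg, 0 = north, clockwise)
    from point 1 to point 2, using the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)

    # Haversine distance
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    # Initial bearing, normalised to [0, 360)
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def heading_error(current_heading_deg, target_bearing_deg):
    """Signed yaw correction in degrees, wrapped to [-180, 180).
    Positive means turn clockwise (towards the east)."""
    return (target_bearing_deg - current_heading_deg + 180.0) % 360.0 - 180.0
```

Under this assumption, GotoGo could rotate the drone until the heading error is within a few degrees and then fly forward until the distance drops below an arrival threshold. Wrapping the error to [-180, 180) makes the drone turn the short way round, e.g. from a heading of 350° to a bearing of 10° it turns +20° rather than -340°.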

The "obstacle avoidance" method (AvoidObstacles in the script) sends the corresponding velocities along the X, Y and Z axes to avoid hitting any object within the depth camera's visual range. This method uses the messages arriving from the depth camera node, each of which contains 9 distances.
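The project does not spell out the avoidance rule, so the following is only a hypothetical policy showing how 9 distances can be turned into X, Y and Z velocities. It assumes the distances arrive in row-major order of the 3×3 grid (top-left first) and uses a body frame with x forward, y right and z down; the threshold and speeds are made-up values.

```python
SAFE_DISTANCE_M = 1.5   # hypothetical clearance threshold
FORWARD_SPEED = 1.0     # m/s, hypothetical
LATERAL_SPEED = 0.5     # m/s, hypothetical
CLIMB_SPEED = 0.3       # m/s, hypothetical


def avoid_obstacles(distances):
    """Return body-frame velocities (vx, vy, vz) from 9 grid distances.

    distances: 9 values in metres, row-major 3x3 grid
    (index 0 = top-left, 4 = centre, 8 = bottom-right).
    Frame: x forward, y right, z down (NED body frame).
    """
    if len(distances) != 9:
        raise ValueError("expected 9 distances")
    grid = [distances[0:3], distances[3:6], distances[6:9]]
    # Closest obstacle in each column of the image
    left = min(row[0] for row in grid)
    centre = min(row[1] for row in grid)
    right = min(row[2] for row in grid)

    if centre > SAFE_DISTANCE_M:
        return (FORWARD_SPEED, 0.0, 0.0)      # path ahead is clear
    if max(left, right) > SAFE_DISTANCE_M:
        # Sidestep towards the column with more clearance
        vy = LATERAL_SPEED if right >= left else -LATERAL_SPEED
        return (0.0, vy, 0.0)
    return (0.0, 0.0, -CLIMB_SPEED)           # boxed in: climb (negative z is up)
```

Taking the minimum per column makes the rule conservative: a single close cell is enough to block that direction.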

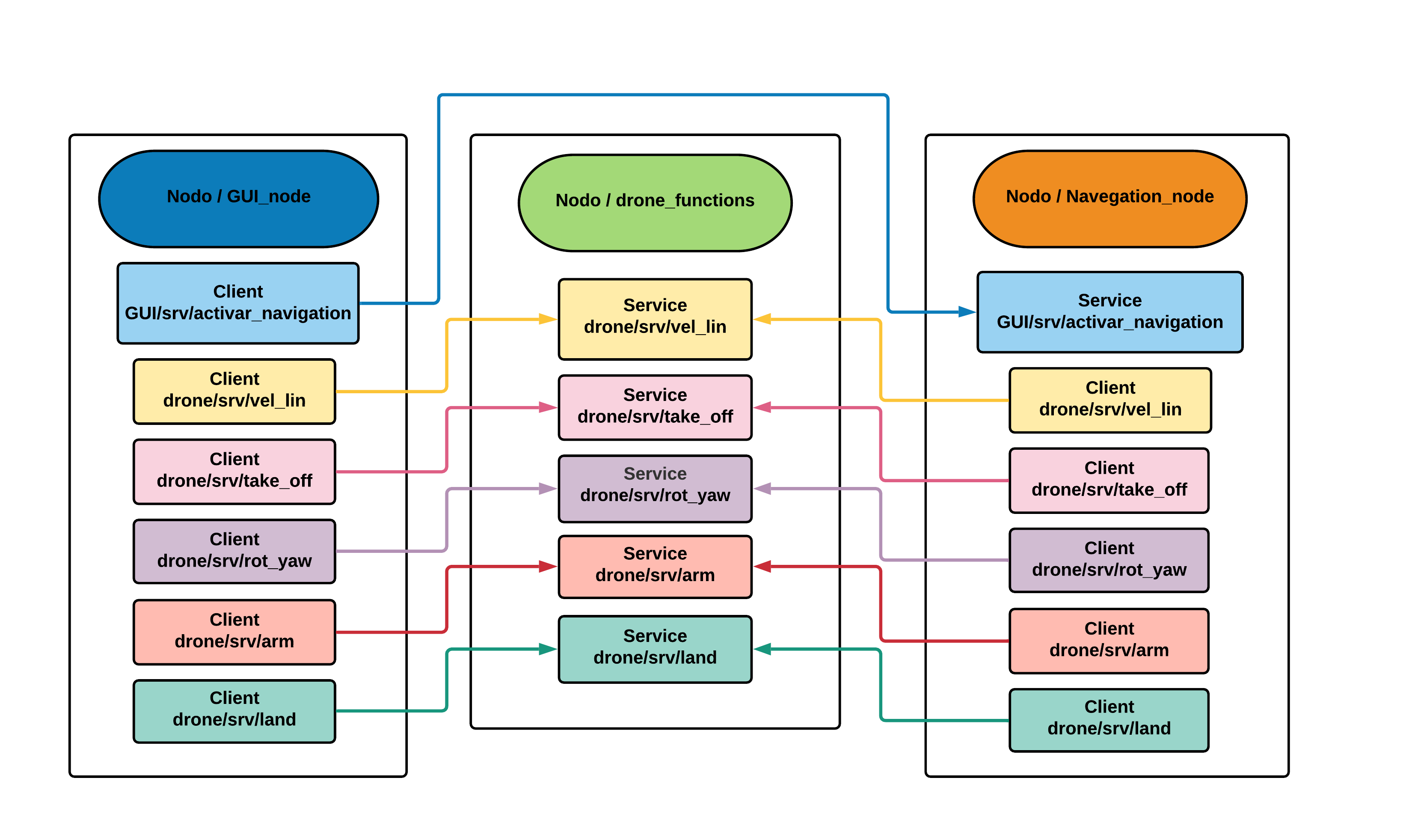

For the navigation to run, other scripts were created that run in parallel as ROS nodes written in Python. These are:

The drone functions node handles communication between the Jetson Nano and the Pixhawk flight controller: it receives the sensor and flight controller data and sends the navigation commands.
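The source does not say which MAVLink message the drone functions node uses to send movement commands. One common option, assumed here, is SET_POSITION_TARGET_LOCAL_NED, whose `type_mask` marks the fields the autopilot should ignore. The sketch below only builds the message fields for a pure body-frame velocity command, so it runs without a vehicle attached; `velocity_target_fields` is a hypothetical helper.

```python
# Bits of MAVLink's POSITION_TARGET_TYPEMASK: a set bit means "ignore this field".
IGNORE_X, IGNORE_Y, IGNORE_Z = 1, 2, 4
IGNORE_VX, IGNORE_VY, IGNORE_VZ = 8, 16, 32
IGNORE_AX, IGNORE_AY, IGNORE_AZ = 64, 128, 256
IGNORE_YAW, IGNORE_YAW_RATE = 1024, 2048

# MAV_FRAME value: x forward, y right, z down, relative to the vehicle's heading
MAV_FRAME_BODY_OFFSET_NED = 9

# Keep only vx, vy, vz; ignore position, acceleration, yaw and yaw rate
VELOCITY_ONLY_MASK = (IGNORE_X | IGNORE_Y | IGNORE_Z |
                      IGNORE_AX | IGNORE_AY | IGNORE_AZ |
                      IGNORE_YAW | IGNORE_YAW_RATE)


def velocity_target_fields(vx, vy, vz):
    """Arguments of SET_POSITION_TARGET_LOCAL_NED that follow
    (time_boot_ms, target_system, target_component), commanding only a velocity."""
    return (MAV_FRAME_BODY_OFFSET_NED, VELOCITY_ONLY_MASK,
            0, 0, 0,        # position x, y, z (ignored)
            vx, vy, vz,     # velocity in m/s
            0, 0, 0,        # acceleration (ignored)
            0, 0)           # yaw, yaw_rate (ignored)
```

With DroneKit, these fields could be passed to the message factory, for example `msg = vehicle.message_factory.set_position_target_local_ned_encode(0, 0, 0, *velocity_target_fields(1.0, 0.0, 0.0))`, followed by `vehicle.send_mavlink(msg)`. ArduCopter stops the vehicle if velocity commands are not resent within a few seconds, so they are usually sent in a loop.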

The depth camera node obtains the depth data from the camera, divides the captured image into 9 fragments (a 3×3 grid) and averages the depths of the pixels in each fragment, which partly mitigates the noise caused by lighting changes. The 9 resulting distances are then sent to the navigation node through a ROS topic.
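A minimal sketch of the 3×3 averaging, in plain Python so it runs without a camera. It assumes the depth frame has already been converted to a 2-D array of metres (with pyrealsense2 this typically means multiplying the raw 16-bit values by the sensor's depth scale) and that 0 marks pixels with no depth data, which are excluded from the mean; the original node may instead average every pixel. The function name is hypothetical, and on full-resolution frames a NumPy version would be much faster.

```python
def grid_mean_depths(depth, rows=3, cols=3):
    """Split a depth image into rows x cols blocks and return each block's mean depth.

    depth: 2-D list (height x width) of depths in metres; 0 marks an invalid pixel
    (an assumption) and is excluded from the mean.
    Returns a flat, row-major list of rows*cols means (None if a block has no valid pixel).
    """
    height, width = len(depth), len(depth[0])
    means = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            # Collect the valid pixels of this block
            valid = [depth[y][x] for y in range(y0, y1) for x in range(x0, x1)
                     if depth[y][x] > 0]
            means.append(sum(valid) / len(valid) if valid else None)
    return means
```

The flat, row-major output matches the 9-value message the navigation node expects, with index 4 corresponding to the centre of the image.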

The main function of the GUI is to let the user visualize the UAV's current position, orientation, altitude and X-axis velocity, as well as the current navigation status (for example, whether the UAV is taking off, avoiding obstacles or landing). It has a panel of 7 buttons that call the services of the drone functions node directly, so the user can control the UAV manually and, in particular, debug each of its functions. There is also a section where the destination coordinates and the takeoff height are entered; these are published on the topic "GUI/goal_pos_gps", and navigation is started through the service "GUI/srv/activate_navigation", which is called when the start navigation button is clicked.
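Before the coordinates are published on "GUI/goal_pos_gps", the GUI has to turn the text typed into the entry fields into numbers. A hedged sketch of that validation step, kept free of Tkinter so it runs headless; the function name and the 30 m height limit are hypothetical, not taken from the project.

```python
def parse_goal(lat_text, lon_text, height_text, max_height_m=30.0):
    """Validate the GUI entry fields and return (latitude, longitude, takeoff_height).

    Raises ValueError with a readable message if any field is invalid.
    max_height_m is a hypothetical safety limit.
    """
    try:
        lat, lon, height = float(lat_text), float(lon_text), float(height_text)
    except ValueError:
        raise ValueError("latitude, longitude and height must be numbers")
    # Range checks also reject NaN, since every comparison with NaN is False
    if not -90.0 <= lat <= 90.0:
        raise ValueError("latitude must be between -90 and 90 degrees")
    if not -180.0 <= lon <= 180.0:
        raise ValueError("longitude must be between -180 and 180 degrees")
    if not 0.0 < height <= max_height_m:
        raise ValueError("takeoff height must be between 0 and %.1f m" % max_height_m)
    return lat, lon, height
```

In the Tkinter callback of the start navigation button, a `ValueError` could be shown to the user with `tkinter.messagebox.showerror` (`tkMessageBox` in Python 2.7) instead of publishing the goal.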

The GUI does not run directly on the Jetson Nano, as this would increase the computational load and make the processes take longer to run. To use the GUI together with the rest of the navigation system, a ROS setup called "Multiple Remote Machine" is needed: it lets several machines connect remotely to the same ROS Master through an SSH connection, with all the machines sharing the same public IP (Łukuzzi). In this project, the GUI node runs on a computer on the ground and the rest of the nodes run on the Jetson Nano. Since both computers belong to the same ROS system, they can exchange messages without any problem.
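In a ROS 1 multi-machine setup, each machine typically needs two environment variables: `ROS_MASTER_URI`, pointing at the single machine running `roscore` (port 11311 by default), and `ROS_IP`, the address that machine advertises to the others. The sketch below assumes the master runs on the Jetson Nano; the helper name and the IP addresses in the usage note are hypothetical.

```python
ROS_MASTER_PORT = 11311  # default port of the ROS master (roscore)


def ros_network_env(master_ip, own_ip):
    """Environment variables for one machine in a multi-machine ROS 1 setup.

    Every machine points ROS_MASTER_URI at the single master and advertises
    its own reachable address through ROS_IP.
    """
    return {
        "ROS_MASTER_URI": "http://%s:%d" % (master_ip, ROS_MASTER_PORT),
        "ROS_IP": own_ip,
    }
```

For example, with the Jetson Nano at 192.168.1.10 and the ground computer at 192.168.1.20, the ground computer would use `ros_network_env("192.168.1.10", "192.168.1.20")`. In practice these values are usually exported in each machine's shell (or `.bashrc`) before starting the nodes.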

Communication between these nodes is as follows.

Its logic follows this finite state machine.
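The state machine diagram itself is not reproduced in this text. As a minimal sketch only, its transition logic could be written as a lookup table; the state and event names below are hypothetical, loosely based on the navigation statuses the GUI displays (taking off, avoiding obstacles, landing), and the real diagram may contain more states. The code targets Python 3; on Python 2.7 the `enum34` backport provides `Enum`.

```python
from enum import Enum


class NavState(Enum):
    IDLE = "idle"
    TAKEOFF = "taking off"
    GOTO = "going to goal"
    AVOID = "avoiding obstacles"
    LAND = "landing"
    LANDED = "landed"


# (current state, event) -> next state; all names are hypothetical
TRANSITIONS = {
    (NavState.IDLE, "start"): NavState.TAKEOFF,
    (NavState.TAKEOFF, "altitude_reached"): NavState.GOTO,
    (NavState.GOTO, "obstacle_detected"): NavState.AVOID,
    (NavState.AVOID, "path_clear"): NavState.GOTO,
    (NavState.GOTO, "goal_reached"): NavState.LAND,
    (NavState.LAND, "touchdown"): NavState.LANDED,
}


def step(state, event):
    """Return the next state; unknown (state, event) pairs leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)
```

Keeping the transitions in a table means the navigation node's main loop only has to detect events and call `step`, and the enum values double as the status text the GUI could display.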

The following videos show how the navigation system works in simulation and in real life.

- Live testing of the drone's function node and the GUI manual mode

- Coordinate 1 - GotoGo navigation test in real life

- Coordinate 2 - GotoGo navigation test in real life

Final semester student of mechatronics engineering at EIA University.