RNNPose: Recurrent 6-DoF Object Pose Refinement with Robust Correspondence Field Estimation and Pose Optimization

Yan Xu, Kwan-Yee Lin, Guofeng Zhang, Xiaogang Wang, Hongsheng Li.

Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

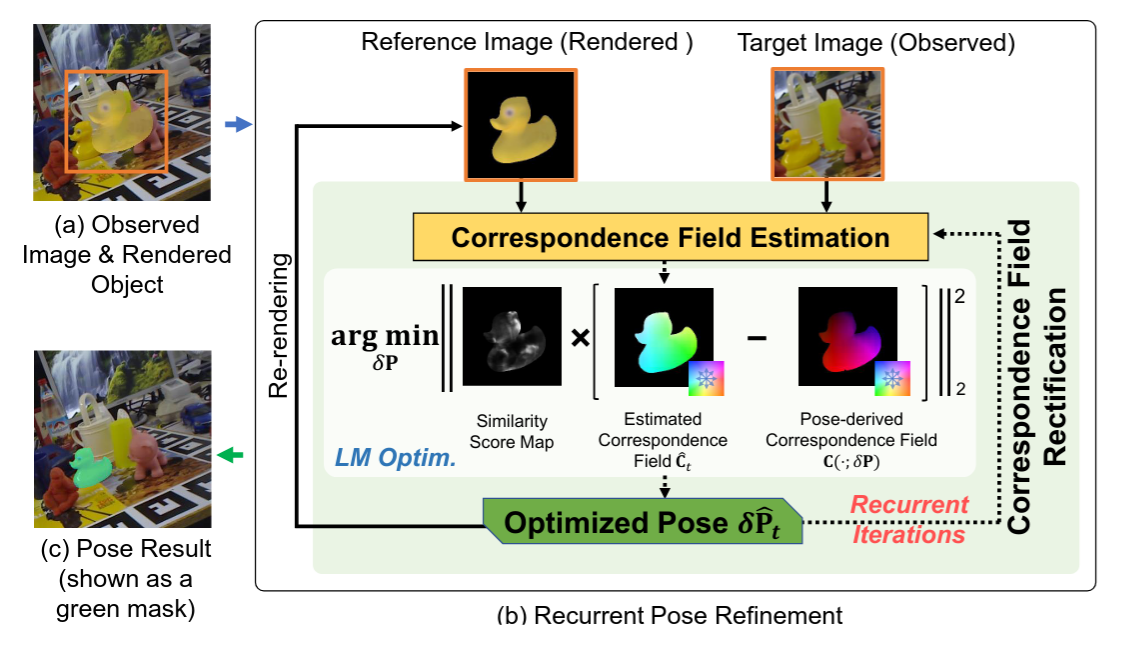

The basic pipeline of our proposed RNNPose. (a) Before refinement, a reference image is rendered according to the object's initial pose (shown in a fused view). (b) Our RNN-based framework recurrently refines the object pose based on the estimated correspondence field between the reference and target images. The pose is optimized via differentiable LM optimization to be consistent with the reliable correspondence estimates, which are highlighted by the similarity score map (built from learned 3D-2D descriptors). (c) The output refined pose.

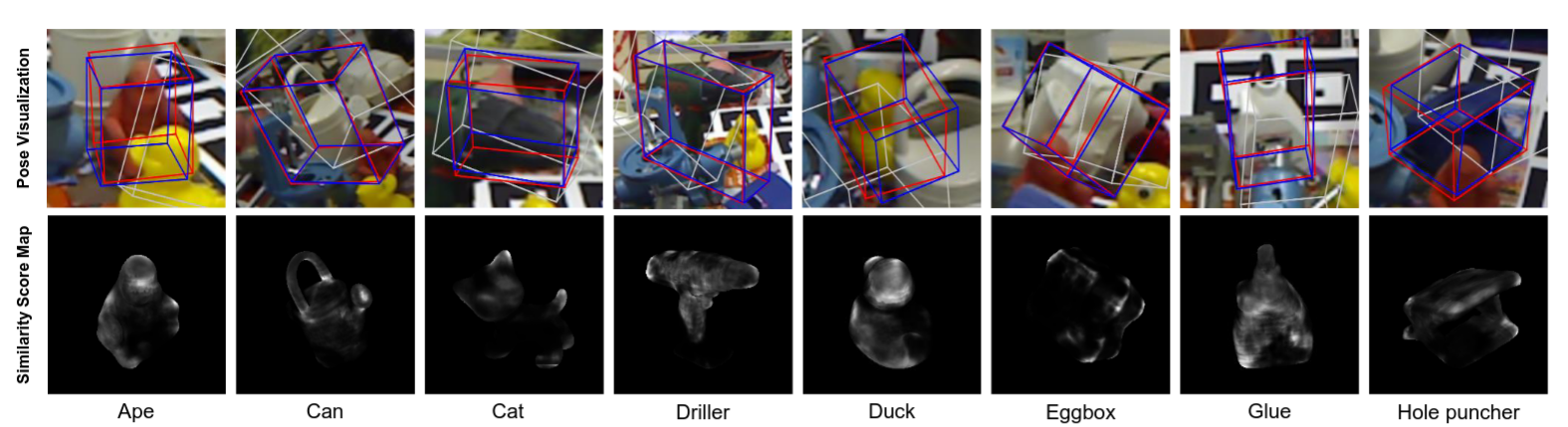

Visualization of our pose estimations (first row) on the Occlusion LINEMOD dataset and the similarity score maps (second row) used to downweight unreliable correspondences during pose optimization. In the pose visualization, the white boxes show the erroneous initial poses, the red boxes are estimated by our algorithm, and the blue boxes are the ground truth. The initial poses for refinement come from PVNet, with significant disturbances added to test robustness.

A Dockerfile is provided to help with environment setup. Install docker and nvidia-docker2 first, then build the docker image and start a container with the following commands:

cd RNNPose/docker

sudo docker build -t rnnpose .

sudo docker run -it --runtime=nvidia --ipc=host --volume="HOST_VOLUME_YOU_WANT_TO_MAP:DOCKER_VOLUME" -e DISPLAY=$DISPLAY -e QT_X11_NO_MITSHM=1 rnnpose bash
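The `HOST_VOLUME_YOU_WANT_TO_MAP:DOCKER_VOLUME` placeholder in the command above is a host directory and a mount point inside the container, separated by a colon. As a minimal sketch of filling it in, the snippet below composes that argument; the helper name `volume_arg` and both example paths are hypothetical and are not part of the repository.

```shell
# Hypothetical helper: join a host directory and a container mount point
# into the HOST:CONTAINER form expected by docker's --volume option.
volume_arg() {
    printf '%s:%s\n' "$1" "$2"
}

# Example usage (commented out so the snippet can be sourced without
# starting a container; both paths are placeholders):
#   sudo docker run -it --runtime=nvidia --ipc=host \
#       --volume="$(volume_arg /data/LINEMOD /workspace/data)" \
#       -e DISPLAY=$DISPLAY -e QT_X11_NO_MITSHM=1 rnnpose bash
```

Mounting the dataset directory this way keeps the downloaded data on the host while making it visible at the chosen path inside the container.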

If you are not familiar with docker, you can also install the dependencies manually by following the provided Dockerfile.

cd RNNPose/scripts

bash compile_3rdparty.sh

We follow DeepIM and PVNet to preprocess the training data for LINEMOD. You could follow the steps here for data preparation.

We train our model on a mixture of real and synthetic data from the LINEMOD dataset.

The trained models for the LINEMOD dataset have been uploaded to OneDrive. You can download them and put them into the directory weight/ for testing.

An example bash script is provided below for reference.

export PYTHONPATH="$PROJECT_ROOT_PATH:$PYTHONPATH"
export PYTHONPATH="$PROJECT_ROOT_PATH/thirdparty:$PYTHONPATH"

seq=cat          # LINEMOD object to evaluate
gpu=1            # number of GPUs to use
start_gpu_id=0   # index of the first GPU
# model_dir is not defined in this script; set it to your output directory first.
mkdir -p "$model_dir"

# Adjust the two paths below to your own checkout.
train_file=/home/yxu/Projects/Works/RNNPose_release/tools/eval.py
config_path=/mnt/workspace/Works/RNNPose_release/config/linemod/"$seq"_fw0.5.yml
pretrain=$PROJECT_ROOT_PATH/weights/trained_models/"$seq".tckpt

python -u $train_file multi_proc_train \
    --config_path $config_path \
    --model_dir $model_dir/results \
    --use_dist True \
    --dist_port 10000 \
    --gpus_per_node $gpu \
    --optim_eval True \
    --use_apex True \
    --world_size $gpu \
    --start_gpu_id $start_gpu_id \
    --pretrained_path $pretrain

Note that before executing the commands you need to set PROJECT_ROOT_PATH, i.e. the absolute path of the project folder RNNPose, and change the data paths in the configuration files to the locations of the downloaded data. You can also refer to the commands below for evaluation with our provided scripts.
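The example script expects `PROJECT_ROOT_PATH` and `model_dir` to be set and builds its config and checkpoint paths from the object name in `seq`. The following is a minimal pre-flight sketch, not part of the repository: the function names `require_var`, `config_for` and `weights_for` are hypothetical, and it assumes the configuration files sit under `config/linemod/` inside the project root.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helpers; these functions are not part of RNNPose.

# Return non-zero (with a message on stderr) if the named variable
# is unset or empty.
require_var() {
    eval "_value=\${$1:-}"
    if [ -z "$_value" ]; then
        echo "error: $1 is not set" >&2
        return 1
    fi
}

# Config path for one LINEMOD object.
# Assumption: configs live under <root>/config/linemod/.
config_for() {
    printf '%s/config/linemod/%s_fw0.5.yml\n' "$1" "$2"
}

# Checkpoint path for one object, following the layout used by the
# example script (<root>/weights/trained_models/<seq>.tckpt).
weights_for() {
    printf '%s/weights/trained_models/%s.tckpt\n' "$1" "$2"
}

# Example usage (placeholder paths; replace with your own):
#   export PROJECT_ROOT_PATH=/absolute/path/to/RNNPose
#   model_dir=/absolute/path/to/output
#   require_var PROJECT_ROOT_PATH && require_var model_dir || exit 1
#   mkdir -p "$model_dir"
#   config_path=$(config_for "$PROJECT_ROOT_PATH" cat)
#   pretrain=$(weights_for "$PROJECT_ROOT_PATH" cat)
```

Checking the variables up front avoids a run that fails only after `mkdir` or `python` receives an empty path.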

bash scripts/eval.sh

bash scripts/eval_lmocc.sh

An example training script is provided.

bash scripts/train.sh

If you find our code useful, please cite our paper.

@inproceedings{xu2022rnnpose,
  title={RNNPose: Recurrent 6-DoF Object Pose Refinement with Robust Correspondence Field Estimation and Pose Optimization},
  author={Xu, Yan and Kwan-Yee Lin and Zhang, Guofeng and Wang, Xiaogang and Li, Hongsheng},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2022}
}

The skeleton of this code is borrowed from RSLO. We would also like to thank the authors of the public codebases PVNet, RAFT, SuperGlue and DeepV2D.