page | paper | video | poster | slides

We developed a PyTorch framework for kinematics-based auto-regressive motion generation models that supports both training and inference. The framework also includes implementations of real-time inpainting and reinforcement-learning-based interactive control. If you have any questions about A-MDM, feel free to open an issue or reach out by email.

- July 28 2024, framework released.

- Aug 24 2024, LAFAN1 15-step checkpoint released.

- Sep 5 2024, 100STYLE 25-step checkpoint released.

- Stay tuned for support for more datasets.

Download the checkpoints, unzip them, and merge them into your output directory.

For each dataset, our dataloader automatically parses the data into a sequence of 1D frames, saving the frames as data.npz and the normalization statistics as stats.npz in your dataset directory. We provide stats.npz so that users can run inference without downloading the full dataset; a single file from the dataset is enough instead.
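The preprocessing described above can be pictured with a minimal, hypothetical NumPy sketch. The function names, the npz keys (`frames`, `lengths`, `mean`, `std`) and the per-feature z-score normalization are assumptions for illustration, not the repository's actual format. It also shows why stats.npz alone is enough at inference time: normalizing an input frame and denormalizing model outputs only need the mean and standard deviation.

```python
import os

import numpy as np


def build_dataset_cache(clips, dataset_dir, eps=1e-8):
    """Concatenate clips into 1D frames and save data.npz / stats.npz.

    clips: list of arrays shaped (num_frames_i, frame_dim); every row is one
    flattened frame. Key names are hypothetical.
    """
    frames = np.concatenate(clips, axis=0).astype(np.float32)
    mean = frames.mean(axis=0)
    std = frames.std(axis=0) + eps  # guard against constant features
    # Clip lengths let a sampler avoid pairing frames across clip boundaries.
    lengths = np.array([len(c) for c in clips], dtype=np.int64)
    np.savez(os.path.join(dataset_dir, "data.npz"), frames=frames, lengths=lengths)
    np.savez(os.path.join(dataset_dir, "stats.npz"), mean=mean, std=std)
    return frames


def normalize(frames, stats_path):
    # Only stats.npz is needed here, not the full dataset.
    with np.load(stats_path) as stats:
        return (frames - stats["mean"]) / stats["std"]


def denormalize(frames, stats_path):
    # Map model outputs back to the original feature scale.
    with np.load(stats_path) as stats:
        return frames * stats["std"] + stats["mean"]
```

In this sketch, inference would call `normalize` on the single starting frame and `denormalize` on every generated frame.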

Download LAFAN1 and extract it under the ./data/LAFAN directory.

BEWARE: files whose names start with 'obstacle' were not included in our experiments.
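To reproduce this exclusion when preparing LAFAN1 yourself, a small filter is enough. This is a minimal sketch assuming the .bvh files sit directly in ./data/LAFAN; `list_lafan_files` is a hypothetical helper, and the repository's own dataloader may already handle this step.

```python
import os


def list_lafan_files(data_dir="./data/LAFAN", exclude_prefix="obstacle"):
    """Return sorted .bvh file names in data_dir, skipping any whose name
    starts with exclude_prefix (these were left out of the experiments)."""
    return sorted(
        name
        for name in os.listdir(data_dir)
        if name.endswith(".bvh") and not name.startswith(exclude_prefix)
    )
```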

Download 100STYLE and extract it under the ./data/100STYLE directory.

Download the dataset and extract it under the ./data/ directory, then create a YAML config file for it in ./config/model/.

Follow the procedure described in the HuMoR repository and extract the result under the ./data/AMASS directory.

Follow the procedure described in the HumanML3D repository and extract the result under the ./data/HumanML3D directory.

conda create -n amdm python=3.7

conda activate amdm

pip install -r requirement.txt

mkdir output

python run_base.py --arg_file args/amdm_DATASET_train.txt

or

python run_base.py \
    --model_config config/model/amdm_lafan1.yaml \
    --log_file output/base/amdm_lafan1/log.txt \
    --int_output_dir output/base/amdm_lafan1/ \
    --out_model_file output/base/amdm_lafan1/model_param.pth \
    --mode train \
    --master_port 0 \
    --rand_seed 122

Visualizations produced during training are saved to the directory given by --int_output_dir.
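The --arg_file form and the explicit-flag form of the training command are meant to be interchangeable. A minimal sketch of how an arg file can be merged with explicit flags is shown below, assuming the file simply lists the same `--flag value` pairs separated by whitespace or newlines. The parser, its defaults and `parse_with_arg_file` are hypothetical and not the repository's code; only the flag names come from the command above.

```python
import argparse
import shlex


def build_parser():
    # Flag names mirror the explicit training command; defaults are hypothetical.
    p = argparse.ArgumentParser()
    p.add_argument("--arg_file", default="")
    p.add_argument("--model_config")
    p.add_argument("--log_file")
    p.add_argument("--int_output_dir")
    p.add_argument("--out_model_file")
    p.add_argument("--mode", default="train")
    p.add_argument("--master_port", type=int, default=0)
    p.add_argument("--rand_seed", type=int, default=0)
    return p


def parse_with_arg_file(argv):
    parser = build_parser()
    # First pass: only find out whether an arg file was given.
    args, _ = parser.parse_known_args(argv)
    if not args.arg_file:
        return parser.parse_args(argv)
    with open(args.arg_file) as f:
        # Assumed format: whitespace-separated tokens, '#' starts a comment.
        file_tokens = shlex.split(f.read(), comments=True)
    # Explicit command-line flags come last, so they override the file.
    return parser.parse_args(file_tokens + argv)
```

Putting the explicit flags after the file contents is a design choice: it lets you reuse an arg file and still tweak a single value such as --rand_seed on the command line.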

python run_env.py --arg_file args/RP_amdm_DATASET.txt

python run_env.py --arg_file args/PI_amdm_DATASET.txt

python run_env.py --arg_file args/ENV_train_amdm_DATASET.txt

python run_env.py --arg_file args/ENV_test_amdm_DATASET.txt
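The framework advertises real-time inpainting. As a hedged illustration only, and not necessarily the scheme A-MDM implements, one common way to constrain a diffusion sampler is replacement-based inpainting (as in RePaint, without its resampling loop): after every reverse step, the constrained dimensions are overwritten with the target noised to the matching level, and at the last step with the target itself. `inpaint_frame`, `eps_model`, `target`, `mask` and the placeholder noise schedule are all hypothetical.

```python
import numpy as np


def inpaint_frame(eps_model, prev_frame, target, mask, num_steps=15, seed=0):
    """Sample one frame whose mask==True dimensions are forced to `target`."""
    rng = np.random.default_rng(seed)
    # Placeholder linear beta schedule; a trained model defines its own.
    betas = np.linspace(1e-4, 0.2, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(prev_frame.shape)  # start from Gaussian noise
    for t in reversed(range(num_steps)):
        eps = eps_model(x, t, prev_frame)  # hypothetical noise predictor
        # Mean of the DDPM reverse step x_t -> x_{t-1}.
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            x = x + np.sqrt(betas[t]) * rng.standard_normal(x.shape)
            # Known dims: sample q(x_{t-1} | target) and overwrite them.
            noised = (np.sqrt(alpha_bars[t - 1]) * target
                      + np.sqrt(1.0 - alpha_bars[t - 1]) * rng.standard_normal(x.shape))
            x = np.where(mask, noised, x)
        else:
            # Final step: constrained dims take the clean target exactly.
            x = np.where(mask, target, x)
    return x
```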

- Train the base model.

- Following the main function in gen_base_bvh.py, you can generate diverse motions from any starting pose.
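Generation from a starting pose can be pictured as an autoregressive loop: each new frame is sampled by a short reverse-diffusion chain conditioned on the previous frame, and is then fed back in as the condition for the next one. The sketch below is a hedged, framework-free illustration of that loop with standard DDPM sampling; `eps_model`, the placeholder noise schedule and all function names are hypothetical and are not the API of gen_base_bvh.py. The default of 15 denoising steps only echoes the LAFAN1 "15-step" checkpoint, assuming that name refers to the number of diffusion steps.

```python
import numpy as np


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.2):
    # Placeholder linear beta schedule; a trained model defines its own.
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def sample_next_frame(eps_model, prev_frame, schedule, rng):
    """Run a DDPM reverse chain for one frame, conditioned on prev_frame."""
    betas, alphas, alpha_bars = schedule
    x = rng.standard_normal(prev_frame.shape)  # start from Gaussian noise
    for t in reversed(range(len(betas))):
        eps = eps_model(x, t, prev_frame)  # hypothetical noise predictor
        # Mean of the reverse step x_t -> x_{t-1} from the predicted noise.
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Add noise with variance beta_t, except at the final step.
            x = x + np.sqrt(betas[t]) * rng.standard_normal(x.shape)
    return x


def rollout(eps_model, start_frame, num_frames, num_steps=15, seed=0):
    """Autoregressively generate num_frames frames starting from start_frame."""
    rng = np.random.default_rng(seed)
    schedule = make_schedule(num_steps)
    frames = [np.asarray(start_frame, dtype=np.float64)]
    for _ in range(num_frames - 1):
        # Each sampled frame becomes the condition for the next one.
        frames.append(sample_next_frame(eps_model, frames[-1], schedule, rng))
    return np.stack(frames)
```

Keeping `start_frame` fixed and changing `seed` yields different continuations, which is the sense in which the sampled motion is diverse.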

Parts of the RL modules in our framework are based on the MotionVAE codebase. Please cite their work if you find using RL to guide autoregressive motion generative models helpful in your research.

@article{shi2024amdm,

author = {Shi, Yi and Wang, Jingbo and Jiang, Xuekun and Lin, Bingkun and Dai, Bo and Peng, Xue Bin},

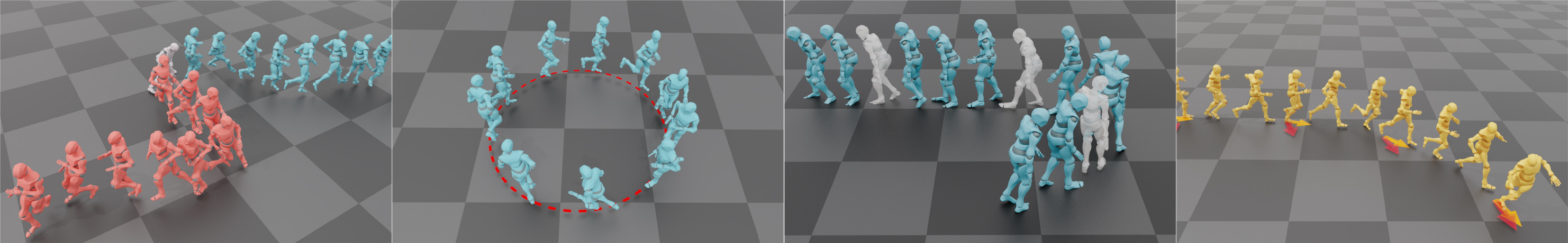

title = {Interactive Character Control with Auto-Regressive Motion Diffusion Models},

year = {2024},

issue_date = {August 2024},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {43},

journal = {ACM Trans. Graph.},

month = {jul},

keywords = {motion synthesis, diffusion model, reinforcement learning}

}