RWTH Aachen University

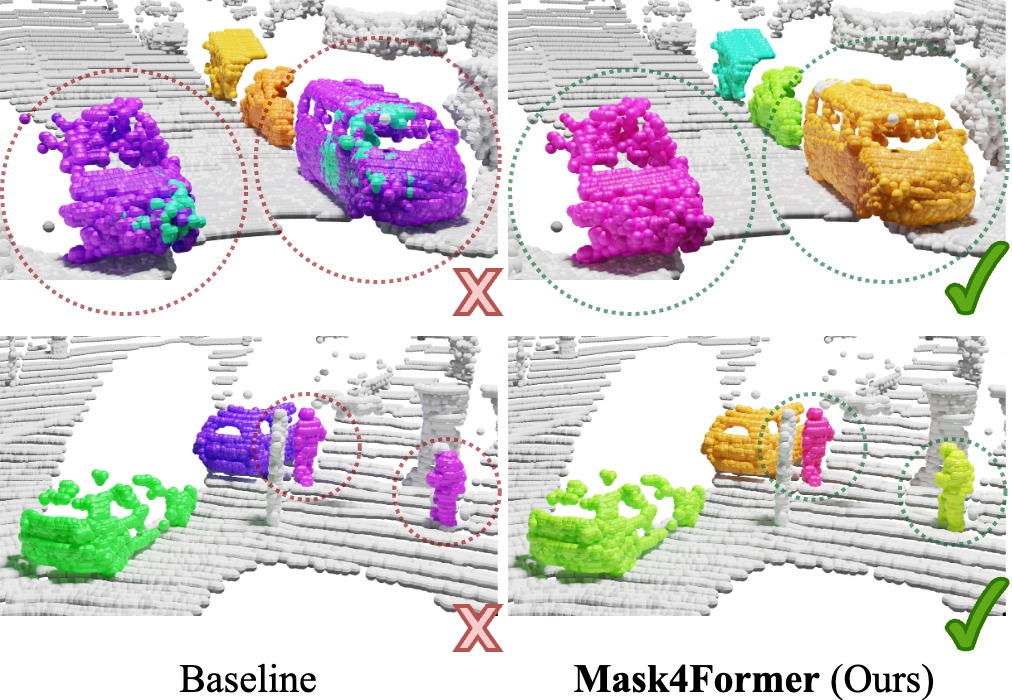

Mask4Former is a transformer-based model for 4D panoptic segmentation that achieves new state-of-the-art performance on the SemanticKITTI test set.

[Project Webpage] [arXiv]

- 2024-01-29: Mask4Former accepted to ICRA 2024
- 2023-09-28: Mask4Former on arXiv

The main dependencies of the project are the following:

python: 3.8

cuda: 11.7

You can set up a conda environment as follows:

conda create --name mask4former python=3.8

conda activate mask4former

pip install torch==1.13.0+cu117 torchvision==0.14.0+cu117 --extra-index-url https://download.pytorch.org/whl/cu117

pip install -r requirements.txt --no-deps

pip install git+https://github.com/NVIDIA/MinkowskiEngine.git -v --no-deps

pip install git+https://github.com/facebookresearch/pytorch3d.git@v0.7.5 --no-deps
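After the installation finishes, it can help to confirm that the interpreter and the key packages are the ones listed above. The following is a minimal sketch, not part of the repository: the helper names `installed_versions` and `python_matches` are hypothetical, and the distribution names in `PACKAGES` are assumptions based on the install commands.

```python
import sys
from importlib import metadata

# Python version from the dependency list above.
EXPECTED_PYTHON = (3, 8)
# Assumed distribution names, taken from the pip commands above.
PACKAGES = ("torch", "torchvision", "MinkowskiEngine", "pytorch3d")


def installed_versions(packages=PACKAGES):
    """Return {package: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def python_matches(expected=EXPECTED_PYTHON):
    """Check that the running interpreter has the expected major.minor version."""
    return sys.version_info[:2] == tuple(expected)


if __name__ == "__main__":
    print("python 3.8:", python_matches())
    for package, version in installed_versions().items():
        print(f"{package}: {version or 'not installed'}")
```

With the pinned install, `torch` should report a version starting with `1.13.0` and `torchvision` one starting with `0.14.0`.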

After installing the dependencies, we preprocess the SemanticKITTI dataset.

python -m datasets.preprocessing.semantic_kitti_preprocessing preprocess \
--data_dir "PATH_TO_RAW_SEMKITTI_DATASET" \
--save_dir "data/semantic_kitti"

python -m datasets.preprocessing.semantic_kitti_preprocessing make_instance_database \
--data_dir "PATH_TO_RAW_SEMKITTI_DATASET" \
--save_dir "data/semantic_kitti"
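For orientation, the raw SemanticKITTI `.label` files store one unsigned 32-bit integer per point, with the semantic class in the lower 16 bits and the instance id in the upper 16 bits. The sketch below decodes that layout with the standard library only. It illustrates the raw dataset format and is not the repository's preprocessing code; `decode_labels` is a hypothetical helper, and little-endian byte order is assumed.

```python
import struct
from typing import List, Tuple


def decode_labels(raw: bytes) -> List[Tuple[int, int]]:
    """Split each uint32 label into a (semantic_id, instance_id) pair."""
    if len(raw) % 4 != 0:
        raise ValueError("label buffer length must be a multiple of 4 bytes")
    pairs = []
    for (value,) in struct.iter_unpack("<I", raw):  # assumed little-endian
        semantic_id = value & 0xFFFF  # lower 16 bits
        instance_id = value >> 16     # upper 16 bits
        pairs.append((semantic_id, instance_id))
    return pairs


# Synthetic example: semantic id 10 combined with instance id 3.
example = struct.pack("<I", (3 << 16) | 10)
print(decode_labels(example))  # → [(10, 3)]
```

For a real scan, the same masks can be applied to the bytes read from a `.label` file, for example `decode_labels(open(path, "rb").read())`.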

Train Mask4Former:

python main_panoptic.py

In the simplest case, the inference command looks as follows:

python main_panoptic.py \
general.mode="validate" \
general.ckpt_path='PATH_TO_CHECKPOINT.ckpt'

Alternatively, you can apply DBSCAN post-processing to improve the scores further:

python main_panoptic.py \
general.mode="validate" \
general.ckpt_path='PATH_TO_CHECKPOINT.ckpt' \
general.dbscan_eps=1.0

The provided model, trained after the submission, achieves 71.1 LSTQ without DBSCAN and 71.5 with DBSCAN post-processing.
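To give a feel for what a DBSCAN radius of 1.0 means, here is a small pure-Python sketch of the DBSCAN algorithm applied to 3D points. It is an illustration only: how the repository applies DBSCAN to the predicted masks is not described here, the `dbscan` function below is hypothetical, and `min_samples=1` is an assumed value. Only `eps=1.0` comes from the command above.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
NOISE = -1


def _neighbors(points: Sequence[Point], i: int, eps: float) -> List[int]:
    """Indices of all points within distance eps of point i (including i)."""
    px, py, pz = points[i]
    eps_sq = eps * eps
    return [
        j
        for j, (x, y, z) in enumerate(points)
        if (x - px) ** 2 + (y - py) ** 2 + (z - pz) ** 2 <= eps_sq
    ]


def dbscan(points: Sequence[Point], eps: float = 1.0, min_samples: int = 1) -> List[int]:
    """Return one cluster id per point; NOISE (-1) marks points in no cluster."""
    labels: List[Optional[int]] = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        nbrs = _neighbors(points, i, eps)
        if len(nbrs) < min_samples:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(nbrs)
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: join, but do not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_nbrs = _neighbors(points, j, eps)
            if len(j_nbrs) >= min_samples:
                queue.extend(j_nbrs)  # core point: keep growing the cluster
    return [label if label is not None else NOISE for label in labels]


# Two groups of points that are 10 m apart end up in separate clusters.
points = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (10.0, 0.0, 0.0), (10.5, 0.0, 0.0)]
print(dbscan(points, eps=1.0))  # → [0, 0, 1, 1]
```

Under this reading, points that share a predicted instance but lie more than `eps` apart, with no chain of neighbors connecting them, would be split into separate instances. The quadratic neighbor search is fine for a demonstration; a real point cloud would call for a spatial index or a library implementation.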

@inproceedings{yilmaz24mask4former,
  title = {{Mask4Former: Mask Transformer for 4D Panoptic Segmentation}},
  author = {Yilmaz, Kadir and Schult, Jonas and Nekrasov, Alexey and Leibe, Bastian},
  booktitle = {{International Conference on Robotics and Automation (ICRA)}},
  year = {2024}
}