DIC-Net: Upgrade the Performance of Traditional DIC with Hermite Dataset and Convolution Neural Network

This repository provides the Hermite dataset generation code (MATLAB) and the DIC-Net CNN implementation (PyTorch).

Our method is currently the state of the art (SOTA) among 2D deep-learning DIC (DeepDIC) methods in terms of accuracy and spatial resolution (SR).

On the experimental test set of 3216 samples, DIC-Net-d achieves a mean absolute error (MAE) of 0.0130 pixels in the x direction and 0.0126 pixels in the y direction, only 48.5% and 47.9% of the errors of the best prior method. Its spatial resolution (SR) is 17.25 pixels at a noise level of 0.0136, and its metrological performance indicator α is 0.234 (lower is better), outperforming existing traditional and non-traditional methods.
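
As a rough illustration of the MAE and RMSE figures, the sketch below computes per-sample errors of the u (x) and v (y) displacement components and averages them over a set of samples. It assumes predictions and ground truth are NumPy arrays of shape (2, H, W) with u and v stacked along the first axis; the function names and array layout are hypothetical, and the paper's exact evaluation (for example, any border cropping) may differ.

```python
import numpy as np


def sample_errors(pred, gt):
    """Per-sample MAE and RMSE (pixels) of the u and v displacement components.

    Assumed layout (hypothetical): arrays of shape (2, H, W), where
    channel 0 is u (x direction) and channel 1 is v (y direction).
    """
    err = np.asarray(pred, dtype=float) - np.asarray(gt, dtype=float)
    mae = np.abs(err).mean(axis=(1, 2))           # [MAE_u, MAE_v]
    rmse = np.sqrt((err ** 2).mean(axis=(1, 2)))  # [RMSE_u, RMSE_v]
    return mae, rmse


def average_errors(preds, gts):
    """Average the per-sample MAE and RMSE over a test set."""
    maes, rmses = zip(*(sample_errors(p, g) for p, g in zip(preds, gts)))
    return np.mean(maes, axis=0), np.mean(rmses, axis=0)


# Usage with synthetic data: ground truth plus small Gaussian noise
rng = np.random.default_rng(0)
gts = [rng.uniform(-1.0, 1.0, size=(2, 64, 64)) for _ in range(4)]
preds = [g + rng.normal(0.0, 0.015, size=g.shape) for g in gts]
mae, rmse = average_errors(preds, gts)
print("average MAE (u, v):", mae, "average RMSE (u, v):", rmse)
```

Because RMSE weights large errors more heavily, it is never smaller than MAE for the same sample, which is consistent with every row of the error table.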

For more details, please refer to our paper: https://doi.org/10.1016/j.optlaseng.2022.107278

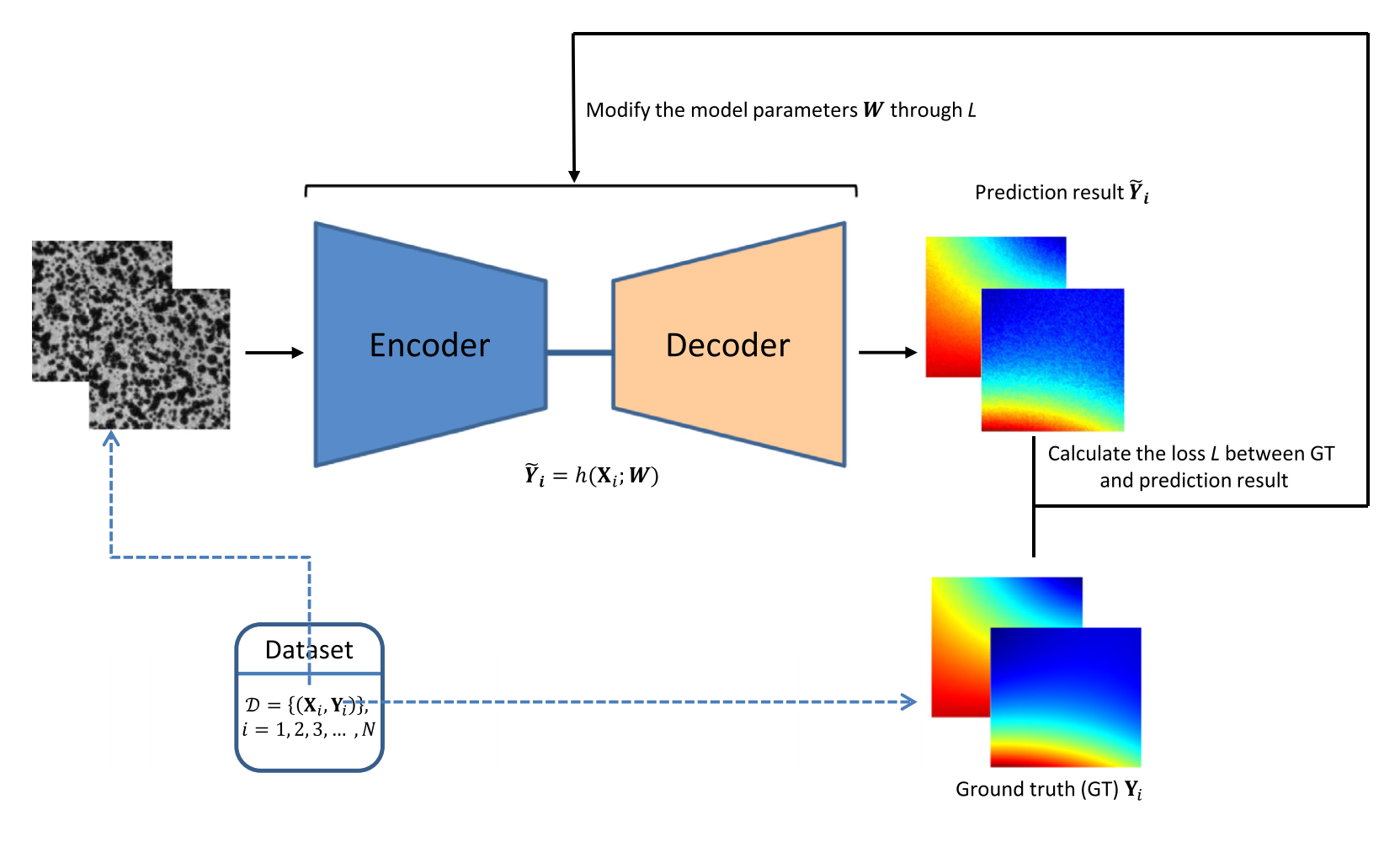

- Framework of DIC-Net

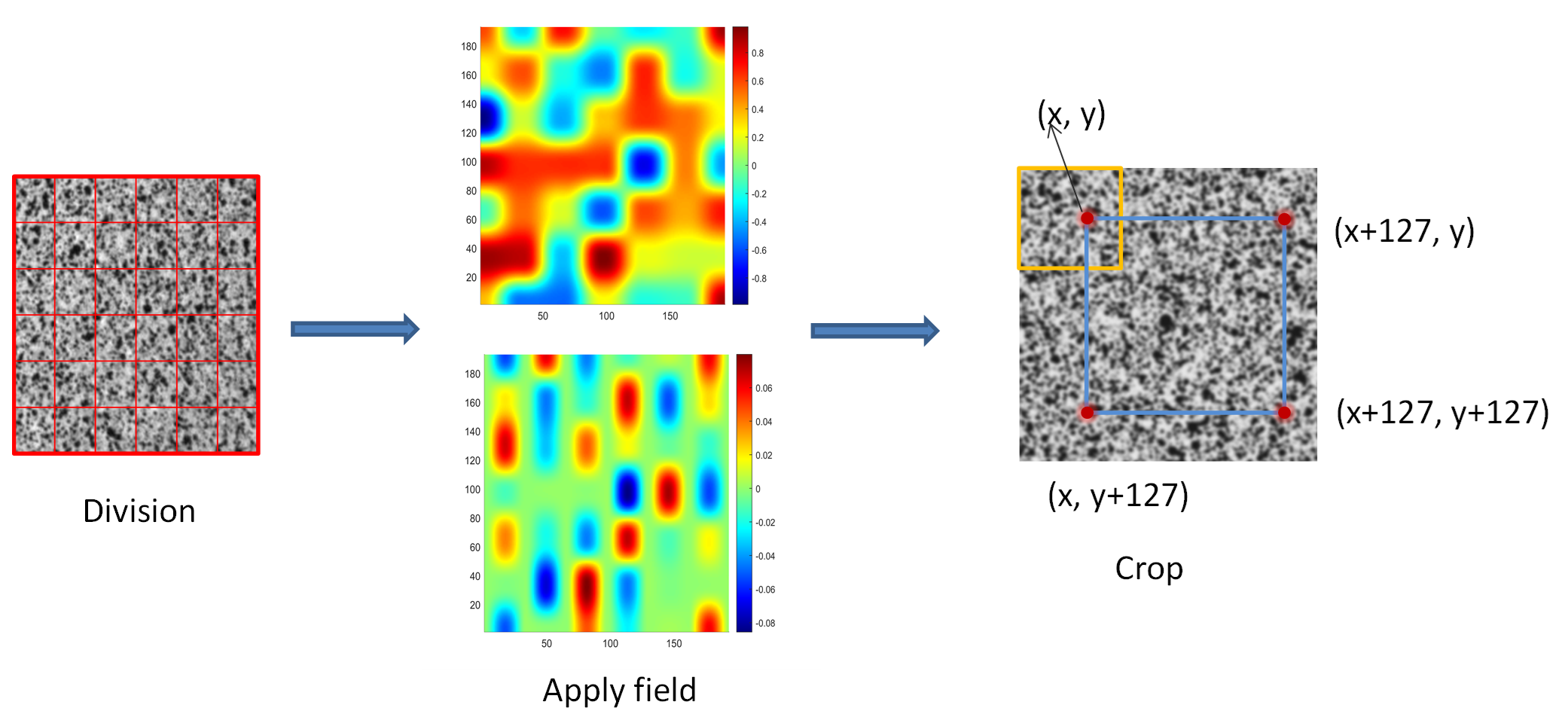

- Framework of the Hermite dataset

- Prediction error statistics of the three networks on the 3216 test samples

| Displacement mode (number of samples) | Network | Average MAE, u (pixels) | Average MAE, v (pixels) | Average RMSE, u (pixels) | Average RMSE, v (pixels) |
|---|---|---|---|---|---|
| Pure translations (785) | DIC-Net-d | 0.0124 | 0.0115 | 0.0155 | 0.0147 |
| | DisplacementNet | 0.0182 | 0.0154 | 0.0197 | 0.0172 |
| | StrainNet-f | 0.0265 | 0.0255 | 0.0442 | 0.0418 |
| Gaussian (445) | DIC-Net-d | 0.0128 | 0.0122 | 0.0161 | 0.0156 |
| | DisplacementNet | 0.0171 | 0.0164 | 0.0199 | 0.0194 |
| | StrainNet-f | 0.0256 | 0.0265 | 0.0441 | 0.0433 |
| Periodic displacement (497) | DIC-Net-d | 0.0157 | 0.0169 | 0.0201 | 0.0217 |
| | DisplacementNet | 0.1191 | 0.1539 | 0.1395 | 0.1783 |
| | StrainNet-f | 0.0288 | 0.0295 | 0.0467 | 0.0458 |
| Strain concentrations (418) | DIC-Net-d | 0.0137 | 0.0122 | 0.0175 | 0.0157 |
| | DisplacementNet | 0.0410 | 0.0252 | 0.0529 | 0.0315 |
| | StrainNet-f | 0.0301 | 0.0280 | 0.0504 | 0.0457 |
| Linear displacement (357) | DIC-Net-d | 0.0120 | 0.0117 | 0.0151 | 0.0148 |
| | DisplacementNet | 0.0169 | 0.0125 | 0.0187 | 0.0144 |
| | StrainNet-f | 0.0254 | 0.0251 | 0.0435 | 0.0408 |
| Quadratic displacement (714) | DIC-Net-d | 0.0121 | 0.0117 | 0.0153 | 0.0149 |
| | DisplacementNet | 0.0143 | 0.0152 | 0.0165 | 0.0174 |
| | StrainNet-f | 0.0250 | 0.0243 | 0.0427 | 0.0405 |
| Total (3216) | DIC-Net-d | 0.0130 | 0.0126 | 0.0165 | 0.0161 |
| | DisplacementNet | 0.0356 | 0.0378 | 0.0417 | 0.0440 |
| | StrainNet-f | 0.0268 | 0.0263 | 0.0450 | 0.0427 |
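
The Total row is consistent with a sample-weighted average of the per-mode rows. The sketch below checks this for the DIC-Net-d MAE columns, using values copied from the table, and reproduces the 48.5% and 47.9% ratios relative to StrainNet-f, the best prior method in the Total row. Weighting by sample count is an inference from the numbers, not something stated in this README.

```python
# (number of samples, MAE u, MAE v) for DIC-Net-d, copied from the table above
DIC_NET_D_MAE = {
    "Pure translations": (785, 0.0124, 0.0115),
    "Gaussian": (445, 0.0128, 0.0122),
    "Periodic displacement": (497, 0.0157, 0.0169),
    "Strain concentrations": (418, 0.0137, 0.0122),
    "Linear displacement": (357, 0.0120, 0.0117),
    "Quadratic displacement": (714, 0.0121, 0.0117),
}


def weighted_total(rows):
    """Sample-weighted mean of the per-mode MAE values (assumed aggregation)."""
    n_total = sum(n for n, _, _ in rows.values())
    u = sum(n * mae_u for n, mae_u, _ in rows.values()) / n_total
    v = sum(n * mae_v for n, _, mae_v in rows.values()) / n_total
    return n_total, u, v


n, mae_u, mae_v = weighted_total(DIC_NET_D_MAE)
print(n, round(mae_u, 4), round(mae_v, 4))

# DIC-Net-d total MAE relative to StrainNet-f total MAE
ratio_u = 0.0130 / 0.0268
ratio_v = 0.0126 / 0.0263
print(f"{ratio_u:.1%} {ratio_v:.1%}")
```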

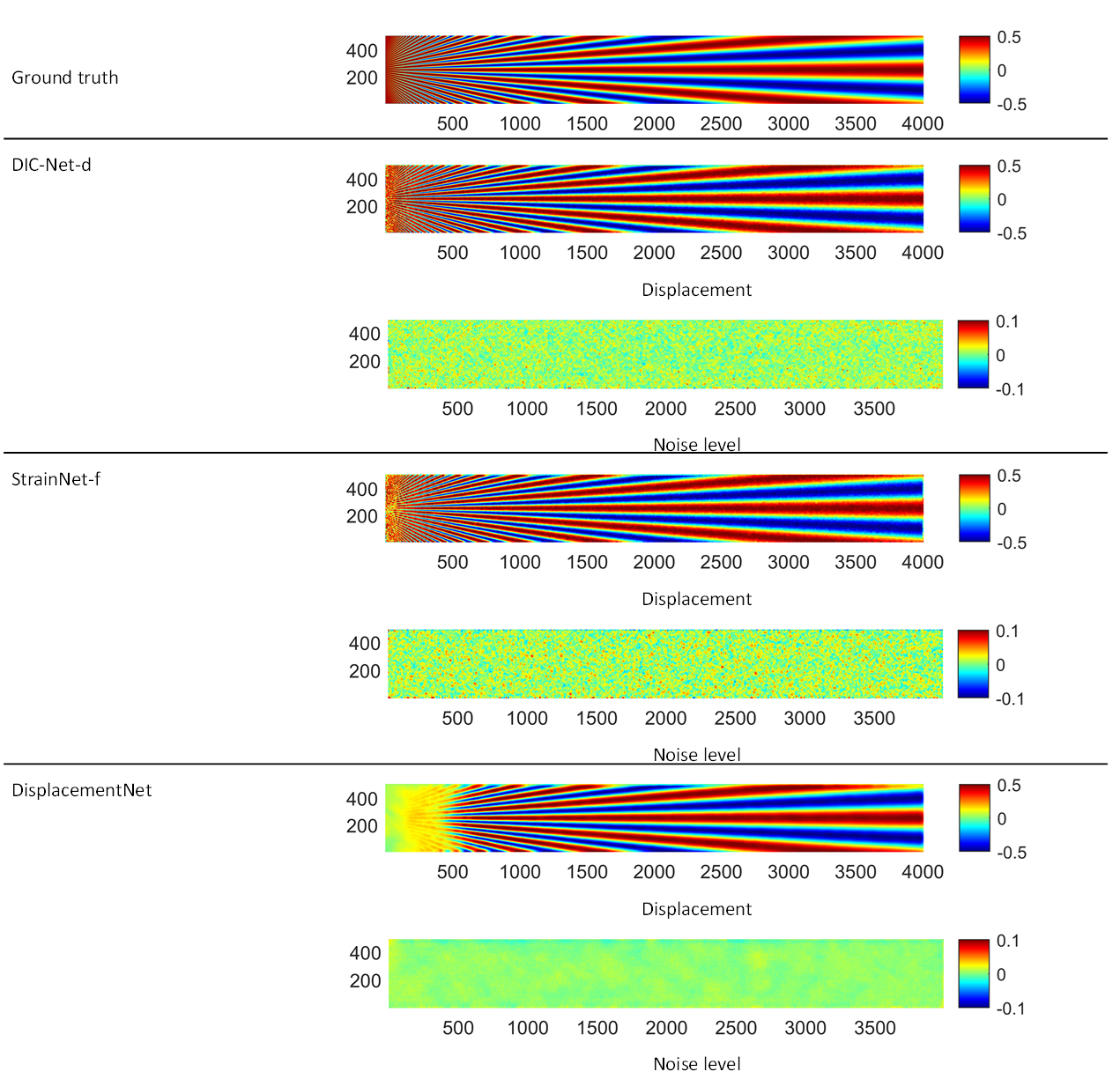

- Spatial resolution performance on the Star5 image set

| Network | SR (pixels) | Noise level | α |
|---|---|---|---|
| DIC-Net-d | 17.25 | 0.0136 | 0.234 |
| StrainNet-f | 26.93 | 0.0163 | 0.439 |
| DisplacementNet | 52.66 | 0.0041 | 0.216 |
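
The α column agrees, to within rounding, with the product of SR and noise level. The snippet below checks this against the tabulated values; treating α as SR × noise level is an assumption inferred from the numbers, so refer to the paper for the exact definition.

```python
# (SR in pixels, noise level, tabulated alpha), copied from the table above
STAR5 = {
    "DIC-Net-d": (17.25, 0.0136, 0.234),
    "StrainNet-f": (26.93, 0.0163, 0.439),
    "DisplacementNet": (52.66, 0.0041, 0.216),
}

for name, (sr, noise, alpha) in STAR5.items():
    product = sr * noise  # assumed definition: alpha = SR x noise level
    print(f"{name}: SR * noise = {product:.4f}, tabulated alpha = {alpha}")
```

Because α is a product, DisplacementNet's much lower noise gives it a slightly smaller α (0.216) even though its SR (52.66 pixels) is about three times coarser than that of DIC-Net-d.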

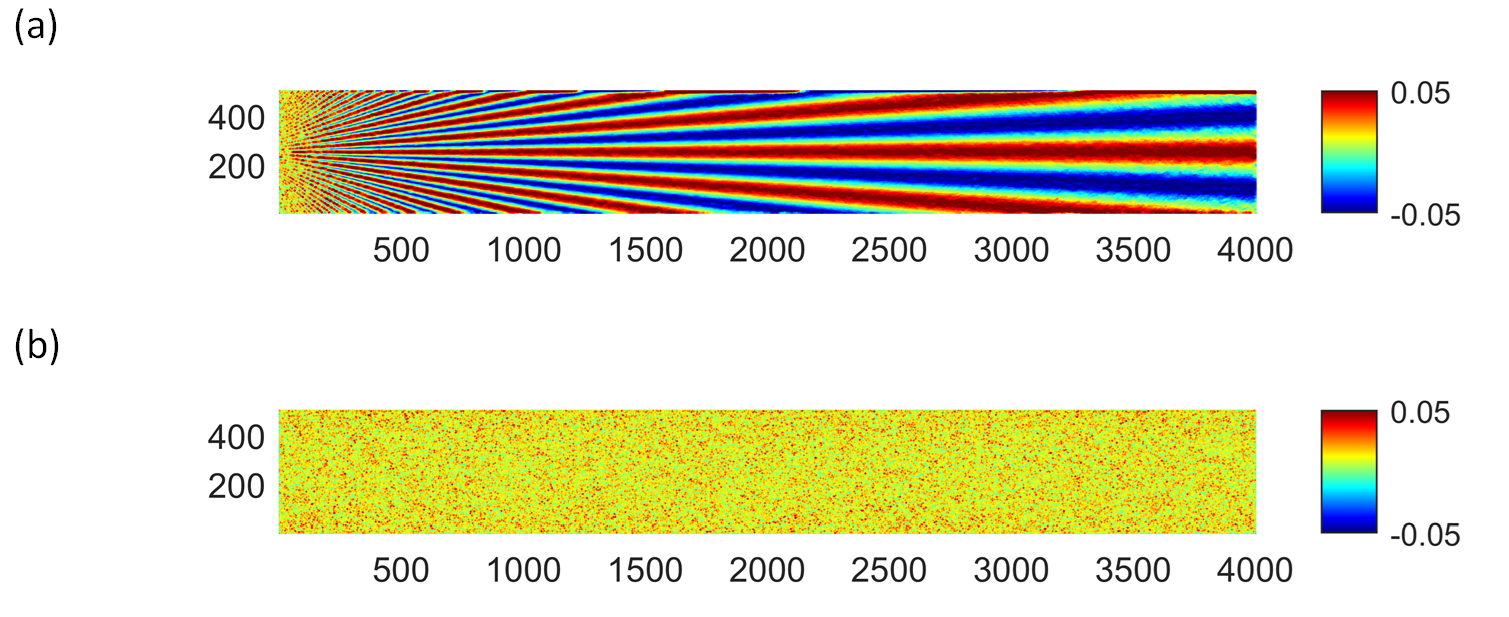

- Star5 displacement and noise level predicted by the three networks

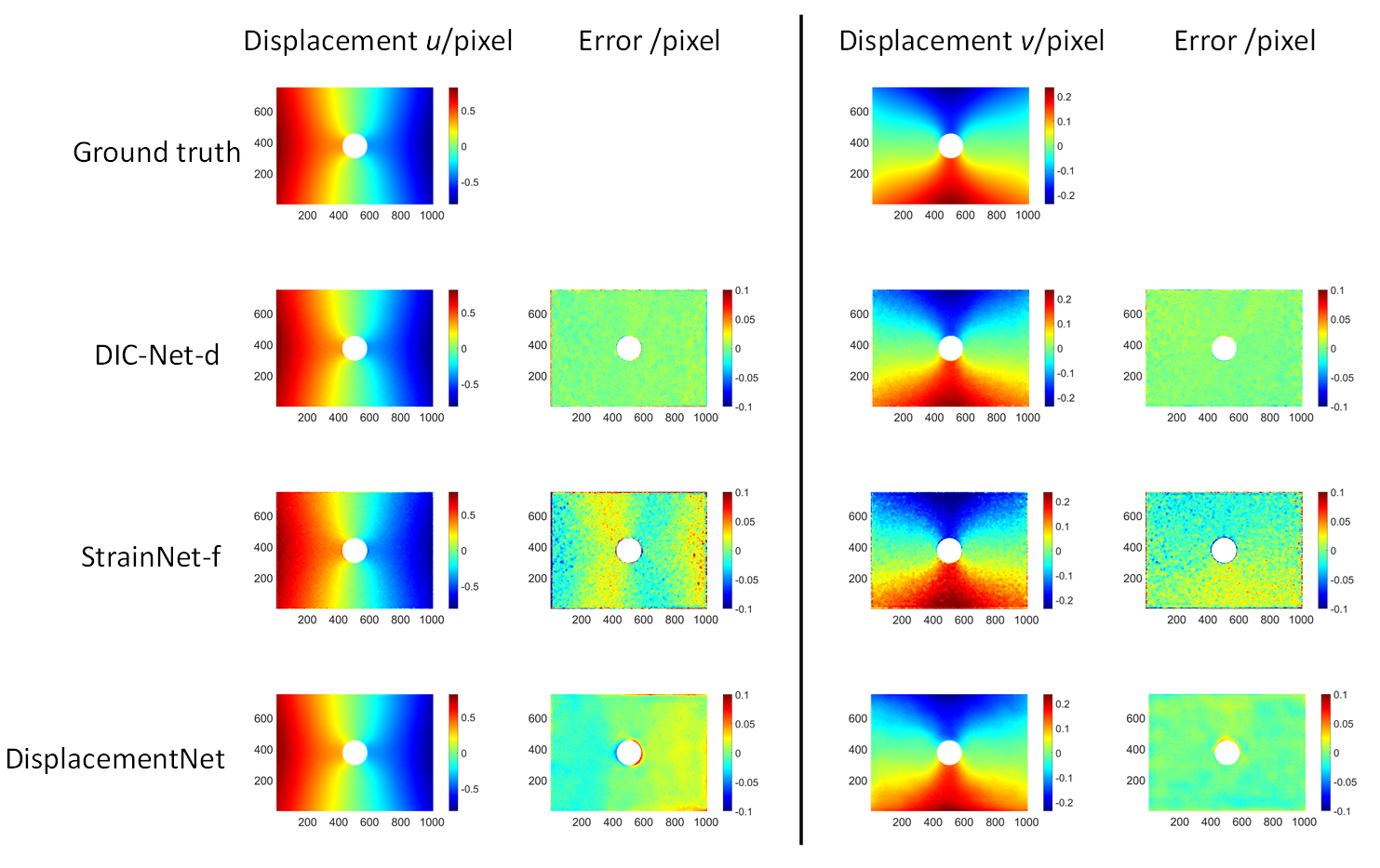

- Results for a tensile plate with a hole predicted by the three networks

- Star6 strain predicted by DIC-Net-s

MATLAB

- R2020b

- MATLAB Support for MinGW-w64 C/C++ Compiler

Python

- Python 3

- CUDA 11.5

- PyTorch 1.10

- NumPy 1.20.3
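
To compare a local environment with the versions listed above, the following standard-library sketch reports installed package versions next to the ones used in the paper. It only reads package metadata (Python 3.8+), so it runs even when PyTorch is not installed; the function name is hypothetical. The CUDA build of an installed PyTorch can be checked separately with `torch.version.cuda` and `torch.cuda.is_available()`.

```python
import sys
from importlib.metadata import PackageNotFoundError, version

# Versions listed in this README (PyPI distribution names "torch" and "numpy")
PAPER_VERSIONS = {"torch": "1.10", "numpy": "1.20.3"}


def installed_versions(packages=PAPER_VERSIONS):
    """Return {package: (installed version or None, version used in the paper)}."""
    report = {}
    for pkg, used in packages.items():
        try:
            report[pkg] = (version(pkg), used)
        except PackageNotFoundError:
            report[pkg] = (None, used)
    return report


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for pkg, (got, used) in installed_versions().items():
        print(f"{pkg}: installed={got}, paper={used}")
```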

The versions used in the paper are available here:

DIC-Net-d

- Google Drive: https://drive.google.com/file/d/12bO8bZ3cX_3K3mPxer_IHWVJHx16mWJx/view?usp=share_link

- Baidu Netdisk: https://pan.baidu.com/s/1bGbVPY6piE1XHQX7FPtR_A (extraction code: 7cqj)

DIC-Net-s

- Google Drive: https://drive.google.com/file/d/19aZQrc-mnd5W8jYfn6DZmhDSZTKxdxaP/view?usp=share_link

- Baidu Netdisk: https://pan.baidu.com/s/1GfvhWms_5mLhN7zP1q0AXg (extraction code: br61)

Hermite Dataset

- Google Drive:

- Baidu Netdisk: https://pan.baidu.com/s/14KE8gOk81ETEEtKnFoc8Zw (extraction code: 90w1)

- The Hermite dataset provided here contains 85,700 image pairs in total; the first 42,900 pairs were used in our paper. Adjust the subset as needed.

Note: A more powerful DIC-Net can be obtained by modifying the parameters of the Hermite element and retraining, so our model parameters will be updated continuously. Refer to https://github.com/YinWang20/DIC-Net-pretrained-Models for updates.
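
The note above refers to the parameters of the Hermite element used to build the dataset. As a hedged illustration only, the sketch below shows 1D piecewise cubic Hermite interpolation, which turns random nodal displacements and slopes into a profile that is continuous in both value and slope. It is not the authors' MATLAB generator: the node spacing, value ranges, and function names are hypothetical, and a 2D field can be built from tensor products of these 1D basis functions.

```python
import random


def hermite_basis(t):
    """Cubic Hermite basis functions for a local coordinate t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    h00 = 2 * t3 - 3 * t2 + 1  # weights the left nodal value
    h10 = t3 - 2 * t2 + t      # weights the left nodal slope
    h01 = -2 * t3 + 3 * t2     # weights the right nodal value
    h11 = t3 - t2              # weights the right nodal slope
    return h00, h10, h01, h11


def hermite_profile(x, nodes, values, slopes):
    """Evaluate a piecewise cubic Hermite displacement profile at x.

    nodes: strictly increasing element boundaries (pixels)
    values: nodal displacements; slopes: nodal derivatives du/dx.
    The result is continuous in both value and slope (C1).
    """
    if not nodes[0] <= x <= nodes[-1]:
        raise ValueError("x lies outside the element mesh")
    # Index of the element [nodes[i], nodes[i + 1]] containing x
    i = max(j for j in range(len(nodes) - 1) if nodes[j] <= x)
    length = nodes[i + 1] - nodes[i]
    t = (x - nodes[i]) / length
    h00, h10, h01, h11 = hermite_basis(t)
    # Slopes are scaled by the element length because t is a local coordinate
    return (h00 * values[i] + h10 * length * slopes[i]
            + h01 * values[i + 1] + h11 * length * slopes[i + 1])


# Hypothetical parameters: four 64-pixel elements with random nodal data
rng = random.Random(0)
nodes = [0.0, 64.0, 128.0, 192.0, 256.0]
values = [rng.uniform(-1.0, 1.0) for _ in nodes]    # nodal u (pixels)
slopes = [rng.uniform(-0.02, 0.02) for _ in nodes]  # nodal du/dx
profile = [hermite_profile(float(x), nodes, values, slopes) for x in range(257)]
```

Changing the element size or the ranges of the nodal values and slopes changes how smooth or how strongly varying the generated displacements are, which is the kind of parameter the note suggests tuning before retraining.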

@article{WANG2023107278,
  title = {DIC-Net: Upgrade the performance of traditional DIC with Hermite dataset and convolution neural network},
  journal = {Optics and Lasers in Engineering},
  volume = {160},
  pages = {107278},
  year = {2023},
  issn = {0143-8166},
  doi = {10.1016/j.optlaseng.2022.107278},
  url = {https://www.sciencedirect.com/science/article/pii/S0143816622003311},
  author = {Yin Wang and Jiaqing Zhao}
}

We are still tidying up the code. If you need any help, please don't hesitate to contact the corresponding author (jqzhao@mail.tsinghua.edu.cn).