This repository is the official PyTorch implementation for SFC introduced in the paper:

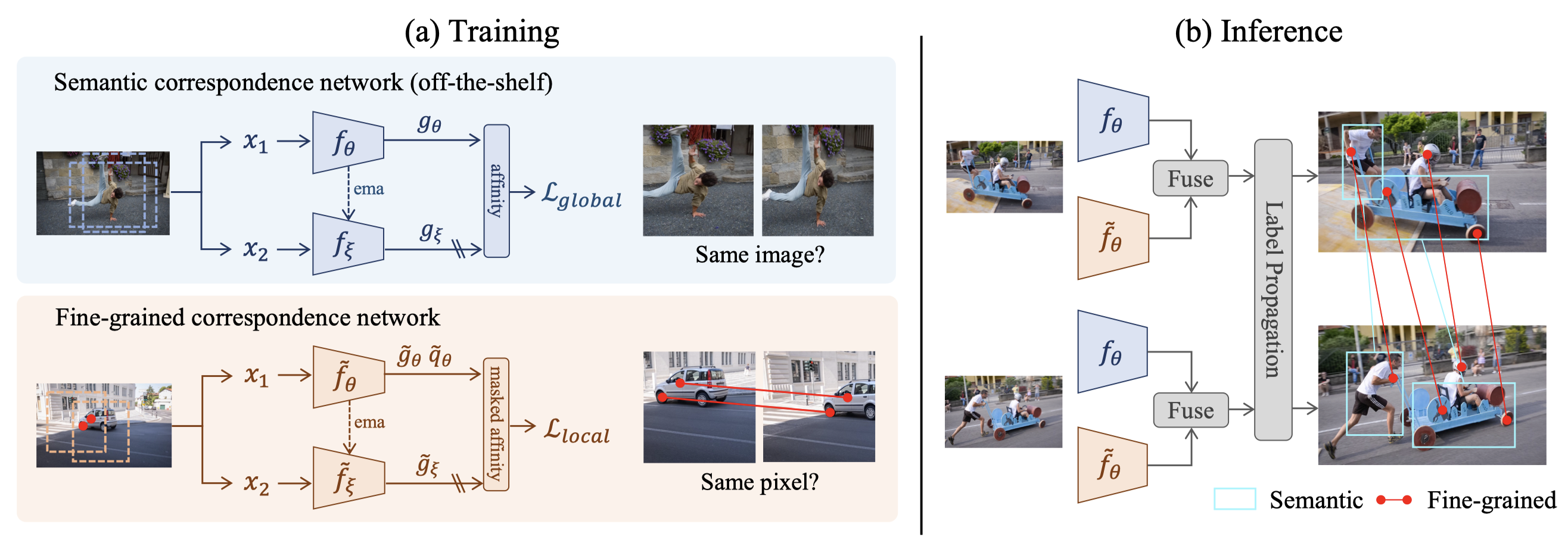

Semantic-Aware Fine-Grained Correspondence. ECCV 2022 (Oral)

Yingdong Hu, Renhao Wang, Kaifeng Zhang, and Yang Gao

- Python 3.8

- PyTorch 1.7.1

- Other dependencies

Create a new conda environment.

conda create -n sfc python=3.8 -y

conda activate sfc

Install PyTorch 1.7.1 and torchvision 0.8.2 following the official instructions. For example:

conda install pytorch==1.7.1 torchvision==0.8.2 torchaudio==0.7.2 cudatoolkit=11.0 -c pytorch

Clone this repo and install required packages:

git clone https://github.com/Alxead/SFC.git

pip install opencv-python matplotlib scikit-image imageio pandas tqdm wandb

We use YouTube-VOS to pre-train the fine-grained correspondence network.

Download the raw image frames (train_all_frames.zip), then move ytvos.csv from code/data/ to the YouTube-VOS dataset directory.

The overall file structure should look like:

youtube-vos
├── train_all_frames
│   └── JPEGImages
└── ytvos.csv
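Before launching pre-training, it can help to confirm that the layout above is in place. The following is a minimal sketch and not part of the repository: `check_ytvos_layout` is a hypothetical helper name, and it only checks the two paths shown in the tree.

```python
from pathlib import Path


def check_ytvos_layout(root):
    """Return the expected entries that are missing under `root`.

    Hypothetical helper (not part of this repo): it only checks the
    paths shown in the directory tree above.
    """
    root = Path(root)
    expected = [
        root / "train_all_frames" / "JPEGImages",  # extracted raw frames
        root / "ytvos.csv",                        # moved from code/data/
    ]
    return [p.relative_to(root).as_posix() for p in expected if not p.exists()]


if __name__ == "__main__":
    missing = check_ytvos_layout("/path/to/youtube-vos")
    print("layout OK" if not missing else f"missing: {missing}")
```

An empty list means both entries were found; otherwise the list names what still needs to be downloaded or moved.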

To pre-train with a single 24GB NVIDIA 3090 GPU, run:

python train.py \
--data-path /path/to/youtube-vos \
--output-dir ../checkpoints \
--enable-wandb True

Training time is about 25 hours.

Our fine-grained correspondence network and the other baseline models can be downloaded as follows:

| Pre-training Method | Architecture | Link |
|---|---|---|
| Fine-grained Correspondence | ResNet-18 | download |
| CRW | ResNet-18 | download |
| MoCo-V1 | ResNet-50 | download |
| SimSiam | ResNet-50 | download |
| PixPro | ResNet-50 | download |
| ImageNet classification | ResNet-50 | torchvision |

After downloading a pre-trained model, place it under the SFC/checkpoints/ folder. Please do not rename these checkpoint files.

The label propagation algorithm is based on the implementation of Contrastive Random Walk (CRW). The output of test_vos.py (predicted label maps) must be post-processed before evaluation.
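To make the role of flags such as --topk and --temperature more concrete, here is a minimal, dependency-free sketch of k-NN label propagation. It is an illustration under assumptions, not the code in test_vos.py: `propagate_labels` and its arguments are hypothetical names, features are assumed to be L2-normalised, and any spatial or temporal restriction of the context (which --radius and --videoLen presumably control) is omitted.

```python
import math


def propagate_labels(target_feats, context_feats, context_labels,
                     topk=2, temperature=0.1):
    """Propagate soft labels from context pixels to target pixels.

    target_feats:   L2-normalised feature vectors, one per target pixel
    context_feats:  L2-normalised feature vectors, one per context pixel
    context_labels: per-class probability vectors for the context pixels
    """
    num_classes = len(context_labels[0])
    predictions = []
    for query in target_feats:
        # Cosine similarity between this target pixel and every context pixel.
        sims = [sum(a * b for a, b in zip(query, key)) for key in context_feats]
        # Keep only the top-k most similar context pixels.
        nearest = sorted(range(len(sims)), key=lambda i: sims[i], reverse=True)[:topk]
        # Temperature-scaled softmax over the retained similarities
        # (subtracting the peak keeps exp() numerically stable).
        peak = max(sims[i] for i in nearest)
        weights = [math.exp((sims[i] - peak) / temperature) for i in nearest]
        total = sum(weights)
        # Weighted average of the neighbours' label distributions.
        pred = [0.0] * num_classes
        for w, i in zip(weights, nearest):
            for c in range(num_classes):
                pred[c] += (w / total) * context_labels[i][c]
        predictions.append(pred)
    return predictions
```

A lower temperature sharpens the softmax, so the prediction leans more heavily on the single best match; a larger top-k averages over more neighbours.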

To evaluate a model on the DAVIS task, clone the davis2017-evaluation repository:

git clone https://github.com/davisvideochallenge/davis2017-evaluation $HOME/davis2017-evaluation

Download the DAVIS2017 dataset from the official website, then modify the paths in code/eval/davis_vallist.txt to match your setup.

To evaluate SFC (after downloading the pre-trained model and placing it under SFC/checkpoints), run:

Step 1: Video object segmentation

python test_vos.py --filelist ./eval/davis_vallist.txt \
--fc-model fine-grained --semantic-model mocov1 \
--topk 15 --videoLen 20 --radius 15 --temperature 0.1 --cropSize -1 --lambd 1.75 \
--save-path /save/path

Step 2: Post-process

python eval/convert_davis.py --in_folder /save/path/ --out_folder /converted/path --dataset /path/to/davis/

Step 3: Compute metrics

python $HOME/davis2017-evaluation/evaluation_method.py \
--task semi-supervised --set val \
--davis_path /path/to/davis/ --results_path /converted/path

This should give:

| J&F-Mean | J-Mean | J-Recall | J-Decay | F-Mean | F-Recall | F-Decay |
|---|---|---|---|---|---|---|
| 0.713385 | 0.684833 | 0.812559 | 0.171174 | 0.741938 | 0.851699 | 0.234408 |
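In the DAVIS benchmark, J measures region similarity (mask IoU), F measures boundary accuracy, and J&F-Mean is the average of J-Mean and F-Mean. As a quick sanity check on the numbers above, the relationship can be sketched as follows (`j_and_f_mean` is a name chosen here for illustration):

```python
def j_and_f_mean(j_mean, f_mean):
    """J&F-Mean is the arithmetic mean of J-Mean and F-Mean."""
    return (j_mean + f_mean) / 2.0


# Values copied from the SFC results row above.
reported = {"J&F-Mean": 0.713385, "J-Mean": 0.684833, "F-Mean": 0.741938}
recomputed = j_and_f_mean(reported["J-Mean"], reported["F-Mean"])

# The recomputed value agrees with the reported one up to rounding.
assert abs(recomputed - reported["J&F-Mean"]) < 1e-6
```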

The reproduced performance in this repo is slightly higher than reported in the paper.
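If you prefer to drive the three steps from one script, the commands above can be assembled programmatically. This is a sketch under assumptions: `build_sfc_eval_commands` is a hypothetical helper, the flags are copied verbatim from the commands above, and the paths are placeholders to replace with your own.

```python
import os
import shlex


def build_sfc_eval_commands(save_path, converted_path, davis_path, eval_repo):
    """Return the three SFC evaluation commands as argument lists."""
    step1 = [  # video object segmentation
        "python", "test_vos.py", "--filelist", "./eval/davis_vallist.txt",
        "--fc-model", "fine-grained", "--semantic-model", "mocov1",
        "--topk", "15", "--videoLen", "20", "--radius", "15",
        "--temperature", "0.1", "--cropSize", "-1", "--lambd", "1.75",
        "--save-path", save_path,
    ]
    step2 = [  # post-process the predicted label maps
        "python", "eval/convert_davis.py", "--in_folder", save_path,
        "--out_folder", converted_path, "--dataset", davis_path,
    ]
    step3 = [  # compute metrics with the DAVIS toolkit
        "python", f"{eval_repo}/evaluation_method.py",
        "--task", "semi-supervised", "--set", "val",
        "--davis_path", davis_path, "--results_path", converted_path,
    ]
    return [step1, step2, step3]


if __name__ == "__main__":
    commands = build_sfc_eval_commands(
        "/save/path", "/converted/path", "/path/to/davis/",
        os.path.expanduser("~/davis2017-evaluation"),
    )
    for cmd in commands:
        print(shlex.join(cmd))  # dry run: print instead of executing
```

To actually execute the steps, each list can be passed to `subprocess.run(cmd, check=True)` from the directory that contains test_vos.py.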

The command lines below evaluate the baseline models.

Fine-grained Correspondence Network (FC)

For step 1, run:

python test_vos.py --filelist ./eval/davis_vallist.txt \
--fc-model fine-grained \
--topk 10 --videoLen 20 --radius 12 --temperature 0.05 --cropSize -1 \
--save-path /save/path

Run steps 2 and 3 as above; this should give:

| J&F-Mean | J-Mean | J-Recall | J-Decay | F-Mean | F-Recall | F-Decay |
|---|---|---|---|---|---|---|
| 0.679752 | 0.650268 | 0.767701 | 0.204185 | 0.709237 | 0.825303 | 0.267199 |

Contrastive Random Walk (CRW)

For step 1, run:

python test_vos.py --filelist ./eval/davis_vallist.txt \
--fc-model crw \
--topk 10 --videoLen 20 --radius 12 --temperature 0.05 --cropSize -1 \
--save-path /save/path

Run steps 2 and 3 as above; this should give:

| J&F-Mean | J-Mean | J-Recall | J-Decay | F-Mean | F-Recall | F-Decay |
|---|---|---|---|---|---|---|
| 0.677813 | 0.648103 | 0.759397 | 0.199973 | 0.707523 | 0.834314 | 0.24343 |

ImageNet Classification

For step 1, run:

python test_vos.py --filelist ./eval/davis_vallist.txt \
--semantic-model imagenet50 \
--topk 10 --videoLen 20 --radius 12 --temperature 0.05 --cropSize -1 \
--save-path /save/path

Run steps 2 and 3 as above; this should give:

| J&F-Mean | J-Mean | J-Recall | J-Decay | F-Mean | F-Recall | F-Decay |
|---|---|---|---|---|---|---|
| 0.672987 | 0.647146 | 0.755544 | 0.196936 | 0.698828 | 0.802094 | 0.274161 |

MoCo-V1

For step 1, run:

python test_vos.py --filelist ./eval/davis_vallist.txt \
--semantic-model mocov1 \
--topk 10 --videoLen 20 --radius 12 --temperature 0.05 --cropSize -1 \
--save-path /save/path

Run steps 2 and 3 as above; this should give:

| J&F-Mean | J-Mean | J-Recall | J-Decay | F-Mean | F-Recall | F-Decay |
|---|---|---|---|---|---|---|
| 0.668148 | 0.643441 | 0.744146 | 0.210128 | 0.692855 | 0.794819 | 0.280282 |

SimSiam

For step 1, run:

python test_vos.py --filelist ./eval/davis_vallist.txt \
--semantic-model simsiam \
--topk 10 --videoLen 20 --radius 12 --temperature 0.05 --cropSize -1 \
--save-path /save/path

Run steps 2 and 3 as above; this should give:

| J&F-Mean | J-Mean | J-Recall | J-Decay | F-Mean | F-Recall | F-Decay |
|---|---|---|---|---|---|---|
| 0.666938 | 0.643999 | 0.763806 | 0.21507 | 0.689877 | 0.794482 | 0.294832 |

PixPro

For step 1, run:

python test_vos.py --filelist ./eval/davis_vallist.txt \
--semantic-model pixpro \
--topk 10 --videoLen 20 --radius 12 --temperature 0.05 --cropSize -1 \
--save-path /save/path

Run steps 2 and 3 as above; this should give:

| J&F-Mean | J-Mean | J-Recall | J-Decay | F-Mean | F-Recall | F-Decay |
|---|---|---|---|---|---|---|
| 0.58866 | 0.579455 | 0.699188 | 0.266769 | 0.597864 | 0.671969 | 0.363654 |
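The six baseline commands above differ only in the model-selection flag, so they can be generated from a small table. The following is a sketch, not part of the repository: `BASELINE_FLAGS`, `SHARED_FLAGS`, and `baseline_step1_command` are hypothetical names, while the flag values are copied from the commands above.

```python
# Model-selection flags for each baseline, copied from the commands above.
BASELINE_FLAGS = {
    "FC": ["--fc-model", "fine-grained"],
    "CRW": ["--fc-model", "crw"],
    "ImageNet": ["--semantic-model", "imagenet50"],
    "MoCo-V1": ["--semantic-model", "mocov1"],
    "SimSiam": ["--semantic-model", "simsiam"],
    "PixPro": ["--semantic-model", "pixpro"],
}

# Inference settings shared by all six baseline commands.
SHARED_FLAGS = [
    "--topk", "10", "--videoLen", "20", "--radius", "12",
    "--temperature", "0.05", "--cropSize", "-1",
]


def baseline_step1_command(name, save_path):
    """Build the step 1 command for one baseline as an argument list."""
    return (
        ["python", "test_vos.py", "--filelist", "./eval/davis_vallist.txt"]
        + BASELINE_FLAGS[name]
        + SHARED_FLAGS
        + ["--save-path", save_path]
    )


if __name__ == "__main__":
    for name in BASELINE_FLAGS:
        print(name, " ".join(baseline_step1_command(name, "/save/path")))
```

Using a separate save path per baseline keeps the predictions from overwriting each other before steps 2 and 3 are run.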

We have modified and integrated the code from CRW and PixPro into this project.

If you find this repository useful, please consider giving it a star ⭐ and citing the paper:

@article{hu2022semantic,
  title={Semantic-Aware Fine-Grained Correspondence},
  author={Hu, Yingdong and Wang, Renhao and Zhang, Kaifeng and Gao, Yang},
  journal={arXiv preprint arXiv:2207.10456},
  year={2022}
}