Episodic Transformer for Vision-and-Language Navigation

Alexander Pashevich, Cordelia Schmid, Chen Sun

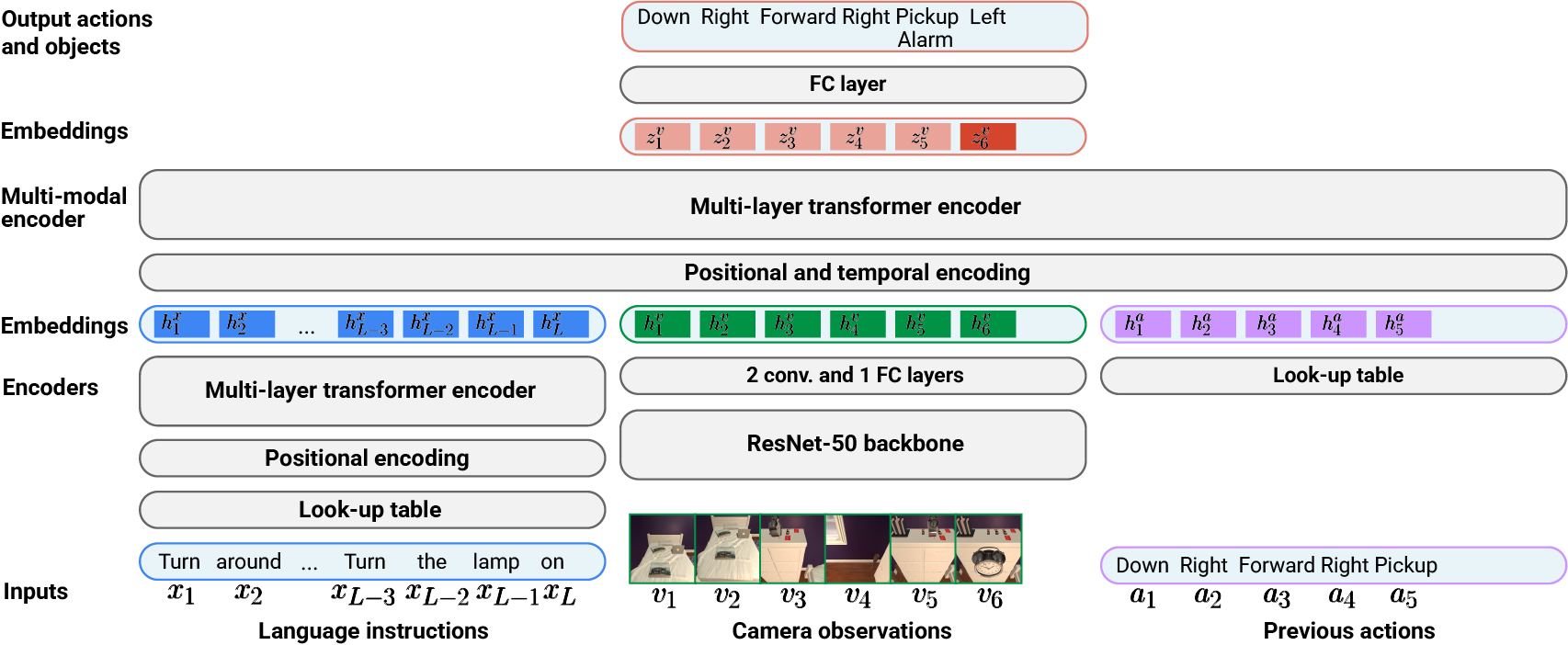

Episodic Transformer (E.T.) is a novel attention-based architecture for vision-and-language navigation. E.T. is based on a multimodal transformer that encodes language inputs and the full episode history of visual observations and actions. This code reproduces the results obtained with E.T. on the ALFRED benchmark. To learn more about the benchmark and the original code, please refer to the ALFRED repository.

Clone repo:

$ git clone https://github.com/alexpashevich/E.T..git ET

$ export ET_ROOT=$(pwd)/ET

$ export ET_LOGS=$ET_ROOT/logs

$ export ET_DATA=$ET_ROOT/data

$ export PYTHONPATH=$PYTHONPATH:$ET_ROOT

Install requirements:

$ virtualenv -p $(which python3.7) et_env

$ source et_env/bin/activate

$ cd $ET_ROOT

$ pip install --upgrade pip

$ pip install -r requirements.txt

Download ALFRED dataset:

$ cd $ET_DATA

$ sh download_data.sh json_feat

Copy pretrained checkpoints:

$ wget http://pascal.inrialpes.fr/data2/apashevi/et_checkpoints.zip

$ unzip et_checkpoints.zip

$ mv pretrained $ET_LOGS/

Render PNG images and create an LMDB dataset with natural language annotations:

$ python -m alfred.gen.render_trajs

$ python -m alfred.data.create_lmdb with args.visual_checkpoint=$ET_LOGS/pretrained/fasterrcnn_model.pth args.data_output=lmdb_human args.vocab_path=$ET_ROOT/files/human.vocab

Note #1: For rendering, you may need to configure args.x_display to correspond to an X server number running on your machine.

Note #2: We do not use JPG images from the full dataset as they would differ from the images rendered during evaluation due to the JPG compression.
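For Note #1, one way to find a candidate X server number is to look at the sockets that X servers conventionally create under /tmp/.X11-unix (named X0, X1, and so on). The snippet below is a minimal sketch under that assumption; the helper name list_x_displays is hypothetical and is not part of this repository.

```shell
# List the display numbers of X servers that have a socket in a directory.
# Default directory: /tmp/.X11-unix, where sockets are conventionally named X0, X1, ...
list_x_displays() {
    socket_dir="${1:-/tmp/.X11-unix}"
    for sock in "$socket_dir"/X*; do
        # Skip the unexpanded pattern when the directory has no matching entry.
        [ -e "$sock" ] || continue
        name=$(basename "$sock")
        # Strip the leading "X" to keep only the display number.
        echo "${name#X}"
    done
}

list_x_displays
```

A number printed here is a candidate value for args.x_display (or eval.x_display during evaluation), presumably passed with the same key=value syntax as the other options in this README.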

Evaluate an E.T. agent trained on human data only:

$ python -m alfred.eval.eval_agent with eval.exp=pretrained eval.checkpoint=et_human_pretrained.pth eval.object_predictor=$ET_LOGS/pretrained/maskrcnn_model.pth exp.num_workers=5 eval.eval_range=None exp.data.valid=lmdb_human

Note: make sure that your LMDB database is called exactly lmdb_human as the word embedding won't be loaded otherwise.
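Since the evaluation depends on the exact name lmdb_human, a quick check before launching it can save a failed run. The sketch below assumes the LMDB dataset is stored as a directory directly under $ET_DATA, which this README does not state explicitly; the helper name has_lmdb_human is hypothetical.

```shell
# Return 0 if a directory named exactly lmdb_human exists under the given data root.
# Assumption: create_lmdb writes the dataset as a directory under $ET_DATA.
has_lmdb_human() {
    data_root="$1"
    [ -d "$data_root/lmdb_human" ]
}

if has_lmdb_human "${ET_DATA:-.}"; then
    echo "found lmdb_human"
else
    echo "lmdb_human not found: check the dataset name and location"
fi
```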

Evaluate an E.T. agent trained on human and synthetic data:

$ python -m alfred.eval.eval_agent with eval.exp=pretrained eval.checkpoint=et_human_synth_pretrained.pth eval.object_predictor=$ET_LOGS/pretrained/maskrcnn_model.pth exp.num_workers=5 eval.eval_range=None exp.data.valid=lmdb_human

Note: For evaluation, you may need to configure eval.x_display to correspond to an X server number running on your machine.

Train an E.T. agent:

$ python -m alfred.model.train with exp.model=transformer exp.name=et_s1 exp.data.train=lmdb_human train.seed=1

Evaluate the trained E.T. agent:

$ python -m alfred.eval.eval_agent with eval.exp=et_s1 eval.object_predictor=$ET_LOGS/pretrained/maskrcnn_model.pth exp.num_workers=5

Note: you may need to train up to 5 agents using different random seeds to reproduce the results of the paper.
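Training with several seeds amounts to repeating the training command with a different train.seed and a matching exp.name. The sketch below only prints the five commands (a dry run) so that they can be reviewed first. The seed values 1 to 5 and the run names et_s2 through et_s5 are assumptions that extend the et_s1 naming used above.

```shell
# Print one training command per seed. Nothing is launched here:
# copy the printed lines, or pipe the output to sh, to start the runs.
print_train_cmds() {
    for seed in 1 2 3 4 5; do
        echo "python -m alfred.model.train with exp.model=transformer exp.name=et_s${seed} exp.data.train=lmdb_human train.seed=${seed}"
    done
}

print_train_cmds
```

Each resulting agent can then be evaluated with the evaluation command above by setting eval.exp to the corresponding run name.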

Language encoder pretraining with the translation objective:

$ python -m alfred.model.train with exp.model=speaker exp.name=translator exp.data.train=lmdb_human

Train an E.T. agent with the language pretraining:

$ python -m alfred.model.train with exp.model=transformer exp.name=et_synth_s1 exp.data.train=lmdb_human train.seed=1 exp.pretrained_path=translator

Evaluate the trained E.T. agent:

$ python -m alfred.eval.eval_agent with eval.exp=et_synth_s1 eval.object_predictor=$ET_LOGS/pretrained/maskrcnn_model.pth exp.num_workers=5

Note: you may need to train up to 5 agents using different random seeds to reproduce the results of the paper.

You can also generate more synthetic trajectories using generate_trajs.py, create an LMDB dataset from them, and jointly train a model on the human and synthetic data. Please refer to the original ALFRED code to learn more about the data generation. The steps to reproduce the results are the following:

- Generate 45K trajectories with alfred.gen.generate_trajs.

- Create a synthetic LMDB dataset called lmdb_synth_45K using args.visual_checkpoint=$ET_LOGS/pretrained/fasterrcnn_model.pth and args.vocab_path=$ET_ROOT/files/synth.vocab.

- Train an E.T. agent using exp.data.train=lmdb_human,lmdb_synth_45K.
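The three steps above can be sketched as a command sequence. This is a dry run that only prints the commands, and it rests on several assumptions: args.data_output names the synthetic dataset in the same way it names lmdb_human, the run name et_joint_s1 is made up for illustration, and the generation and LMDB steps may need further options that this README does not list (for example, how to request 45K trajectories).

```shell
# Dry run: print the three steps instead of executing them.
# Single quotes keep $ET_LOGS and $ET_ROOT literal, so they are expanded
# only when a printed command is pasted into a shell.
print_synth_pipeline() {
    # Step 1: generate synthetic trajectories (options for the 45K count are not shown).
    echo 'python -m alfred.gen.generate_trajs'
    # Step 2: build the synthetic LMDB dataset with the synthetic vocabulary.
    echo 'python -m alfred.data.create_lmdb with args.visual_checkpoint=$ET_LOGS/pretrained/fasterrcnn_model.pth args.data_output=lmdb_synth_45K args.vocab_path=$ET_ROOT/files/synth.vocab'
    # Step 3: train jointly on human and synthetic data (et_joint_s1 is a hypothetical name).
    echo 'python -m alfred.model.train with exp.model=transformer exp.name=et_joint_s1 exp.data.train=lmdb_human,lmdb_synth_45K train.seed=1'
}

print_synth_pipeline
```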

If you find this repository useful, please cite our work:

@inproceedings{pashevich2021episodic,

title = {{Episodic Transformer for Vision-and-Language Navigation}},

author = {Alexander Pashevich and Cordelia Schmid and Chen Sun},

booktitle = {ICCV},

year = {2021},

}