This project focuses on making SQL queries using natural language. We will use the SnowFlake cloud database, although it can be easily extended to other databases, local or in cloud, such as PostgreSQL or MySQL.

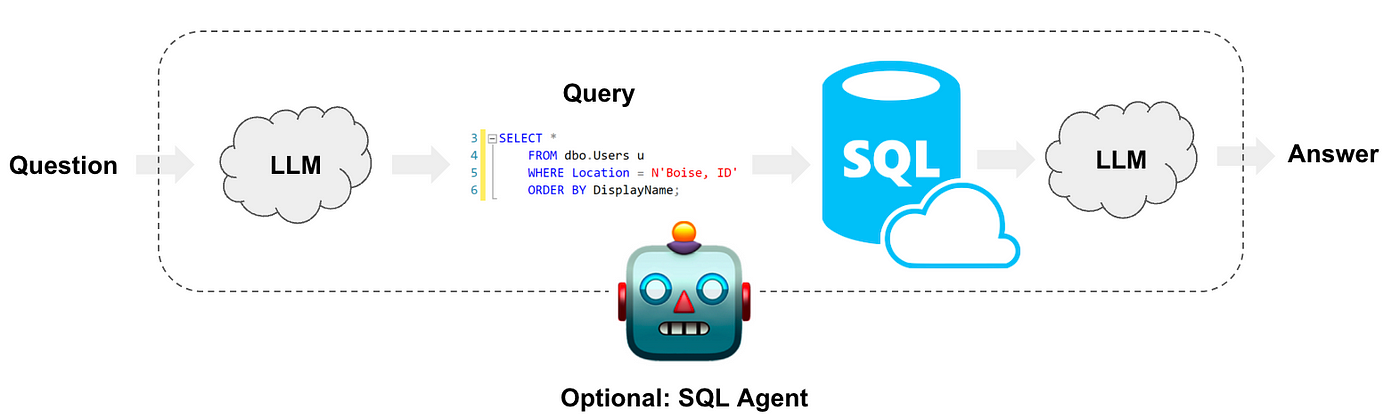

The process is as follows:

- We make a query.

- The LLM translates our query into SQL language.

- The query is executed on the database.

- The SQL response is converted into text.

- The LLM returns a response in text.

We will upload the Sakila public database to SnowFlake. The Sakila database is a nicely normalised database modelling a DVD rental store (for those of you old enough to remember what that is). Its design includes a few nice features:

- Many to many relationships.

- Multiple paths between entities (e.g. film-inventory-rental-payment vs film-inventory-store-customer-payment) to practice joins.

- Consistent naming of columns.

- Primary keys are called [tablename]_[id].

- Foreign keys are called like their referenced primary key, if possible. This allows for using JOIN .. USING syntax where supported.

- Relationship tables do not have any surrogate keys but use composite primary keys.

- Every table has a last_update audit column.

- A generated data set of a reasonable size is available.

Besides, we'll use public Query Practices on Sakila provided on MySQL for testing our model.

First, we need to store the OpenAI API KEY in a .env file to load it with the dotenv library and use it as an environment variable. In notebooks folder there is a jupyter notebook with a step by step explanation and other with the practices. In src folder there is the python code for querying SQL and getting a chat response, besides requirements.txt file. Use examples:

Getting a response for a user query

python chat.py -p "Which actors have the first name ‘SCARLETT’?"