The examples are organized independently for each of the CPU, DDP, FSDP, and DeepSpeed (DS) files.

Use these examples as a guide to write your own train.py!

I was thinking of making a somewhat enforced template, but Torch-fabric doesn't support as many features as I thought it would, so I am writing my own trainer in pure native torch.

Each trainer will be written in its own python file.

torch >= 2.1.2

cuda 11.8

I am experimenting with the deepspeed codebase as of 2023-12-30.

To install deepspeed, first run

`curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh`

After executing the command, restart the terminal before installing deepspeed.

Make sure you have CUDA 11.8 and the corresponding torch build installed, then run

`sh scripts/install_deepspeed.sh` to install deepspeed.

`vs_code_launch_json` contains a `launch.json` for debugging in VS Code.

- Download the raw data and put it in the `raw_data` dir.
- Copy the model network (just the `nn.Module`) into the `networks` dir.
- Write `preprocess.py` and preprocess the data yourself.
- If you need to write or copy a dataset, put it in `utils/data/` and check some samples.
- If you need to write a sampler or loader, put it in `utils/data` and check some samples.
  - I have already made some useful samplers in `custom_sampler.py` (based on HF's `transformers`).
    - `DistributedBucketSampler`: makes random batches whose lengths are as similar as possible.
    - `LengthGroupedSampler`: sorts in descending order by the `length` column, then selects random index batches (dynamic batching).
    - `DistributedLengthGroupedSampler`: distributed dynamic batching.
- Change `[cpu|ddp|deepspeed]_train.py`.
  - It defines a class that inherits from `Trainer`, which is already implemented. Since the training pipeline may vary from model to model and dataset to dataset, we've used `@abstractmethod` to give you direct control. If you need additional inheritance, it is easy to pull out the parts you need and reference them.
  - Basically, you modify `training_step` and `eval_loop`, which require a loss operation, and do whatever you want using `batches`, `labels`, `criterion`, and `eval_metric`. I implemented examples that use `all_gather` and more in each of the `ddp`, `deepspeed`, and `fsdp` examples, so check them out and write your own code effectively!
  - I've implemented `chk_addr_dict`, which makes heavy use of the `dictionary inplace function`, to reference address values during `debug`. Always be careful that your own implementations don't conflict over memory with each other!
- In the `main` function, you do some simple operations on the `data` at the beginning and prepare the ingredients for your `model`, `optimizer`, and `scheduler`.
  - The learning rate scheduler must be `{"scheduler": scheduler, "interval": "step", "frequency": 1, "monitor": None}`.
  - `frequency` is the step accumulation: if it is 2, 1 scheduler step is taken for every 2 train steps.
  - `monitor` is only for the loss value of `ReduceLROnPlateau`.
- Run!
  - `cd {your-workspace}/pytorch-trainer && sh scripts/run_train_[cpu|ddp|deepspeed].sh`
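The scheduler dictionary from the `main` step above can be sketched as follows. This is only an illustration of the `frequency` rule, not the repository's actual trainer code: `CountingScheduler` and `maybe_step_scheduler` are hypothetical names, the train step is assumed to be 1-based, and passing the loss to `step()` whenever `monitor` is set is an assumption about how a `ReduceLROnPlateau`-style scheduler would be handled.

```python
from typing import Any, Dict, Optional


class CountingScheduler:
    """Hypothetical stand-in for a torch LR scheduler; it only counts step() calls."""

    def __init__(self) -> None:
        self.num_steps = 0

    def step(self, metric: Optional[float] = None) -> None:
        self.num_steps += 1


def maybe_step_scheduler(
    cfg: Dict[str, Any], train_step: int, loss: Optional[float] = None
) -> bool:
    """Step the scheduler once every cfg["frequency"] train steps.

    Assumption: train_step is 1-based and counts completed optimizer steps.
    Returns True when the scheduler was stepped.
    """
    if cfg["interval"] != "step" or train_step % cfg["frequency"] != 0:
        return False
    if cfg["monitor"] is not None:
        # Assumed handling for ReduceLROnPlateau: pass the monitored loss.
        cfg["scheduler"].step(loss)
    else:
        cfg["scheduler"].step()
    return True


scheduler = CountingScheduler()
cfg = {"scheduler": scheduler, "interval": "step", "frequency": 2, "monitor": None}

# Six train steps with frequency=2 give scheduler steps at train steps 2, 4, 6.
stepped_at = [s for s in range(1, 7) if maybe_step_scheduler(cfg, s)]
print(stepped_at)           # [2, 4, 6]
print(scheduler.num_steps)  # 3
```

With a real torch scheduler you would put that object under the `"scheduler"` key; the dictionary keys themselves are the ones listed above.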

TODO list

The wandb logs for each test are here: Link

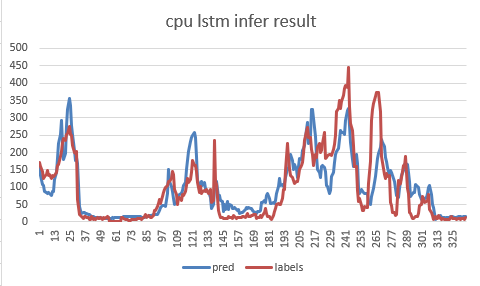

- cpu_trainer - lstm example, but its training is weird

- cpu_trainer - wandb

- cpu_trainer - continuous learning

- cpu_trainer - weird lstm training fix

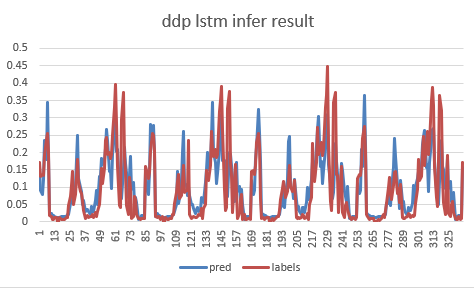

- ddp_trainer - lstm or mnist example

- ddp_trainer - sampler and dataloader

- ddp_trainer - additional training loop process? (for distributed learning)

- ddp_trainer - Reliable wandb logging for distributed learning

- ddp_trainer - does wandb have to use gather or something?

- ddp_trainer - add fp16 and bf16 use

- deepspeed_trainer - lstm or mnist example

- deepspeed_trainer - sampler and dataloader

- deepspeed_trainer - additional training loop process? (for distributed learning)

- deepspeed_trainer - does wandb have to use gather or something?

- deepspeed_trainer - Reliable wandb logging for distributed learning

- fsdp_trainer - change deepspeed to fsdp

- fsdp_trainer - test (wandb compare this link)

- eval epoch end all_gather on cpu, eval on cpu (?)

- Implement customizable training and eval step inheritance

- inference - py3

- huggingface - is the real dtype of a float16 model actually float16? Checked and applied.
  - In Huggingface `transformers`, when training a `float16` or `bfloat16` model, it is actually trained by changing the model's `dtype`, so if you want to reproduce this, change it via `model.to(dtype)`.
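As a minimal sketch of the `model.to(dtype)` note above: the toy `nn.Sequential` model here is an assumption for illustration only, not one of the repository's networks. It shows that the cast changes the dtype of the parameters themselves, so the inputs must use the same dtype.

```python
import torch
from torch import nn

# Toy model used only for illustration (not from this repository).
model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 1))
param_dtypes_before = {p.dtype for p in model.parameters()}

# Cast every floating-point parameter and buffer to bfloat16.
model = model.to(torch.bfloat16)
param_dtypes_after = {p.dtype for p in model.parameters()}

# Inputs have to match the parameter dtype.
x = torch.randn(4, 8, dtype=torch.bfloat16)
out = model(x)

print(param_dtypes_before)  # {torch.float32}
print(param_dtypes_after)   # {torch.bfloat16}
print(out.dtype)            # torch.bfloat16
```

This differs from autocast-style mixed precision, where the parameters stay in `float32` and only selected operations run in lower precision.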

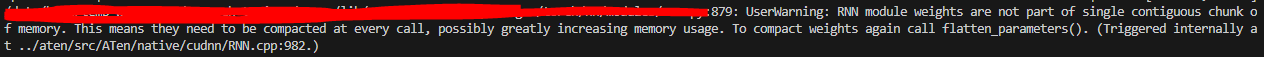

The ZeRO test is not accurate because it was run with an LSTM model.

Since the LSTM requires its parameters to be guaranteed contiguous, the partitioning (CPU-GPU) may not have worked well.

Also, I have some doubts about the CPU offload performance because I used the torch AdamW optimizer, not the DeepSpeed-optimized AdamW.

However, I will share the results of what I did with 2 lstm layers set to 1000 n_layer.

| test set | RTX3090 GPU Mem (MiB) |
|---|---|
| zero2 optim offload | 2016 |
| zero2 optim offload | 1964 |
| zero3 full offload | 2044 |
| zero3 optim offload | 2010 |
| zero3 param offload | 2044 |
| zero3 not offload | 2054 |

I think optim offload works well, but the param offload result looks strange...
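For reference, the offload variants in the table roughly correspond to DeepSpeed `zero_optimization` settings like the ones below. This is a sketch under assumptions, not the configuration actually used for these measurements: `make_zero_config` is a hypothetical helper, and the batch size and learning rate are placeholders. Declaring the optimizer inside the config, rather than passing a torch optimizer, is the usual way to let DeepSpeed build its own AdamW implementation.

```python
from typing import Any, Dict


def make_zero_config(
    stage: int,
    offload_optimizer: bool = False,
    offload_param: bool = False,
) -> Dict[str, Any]:
    """Build a DeepSpeed config dict for one table row (hypothetical helper)."""
    if offload_param and stage != 3:
        # Parameter offload is a ZeRO stage 3 feature.
        raise ValueError("offload_param requires ZeRO stage 3")

    zero: Dict[str, Any] = {"stage": stage}
    if offload_optimizer:
        zero["offload_optimizer"] = {"device": "cpu", "pin_memory": True}
    if offload_param:
        zero["offload_param"] = {"device": "cpu", "pin_memory": True}

    return {
        "train_micro_batch_size_per_gpu": 8,  # placeholder value
        "zero_optimization": zero,
        # Declared here so DeepSpeed constructs the optimizer itself.
        "optimizer": {"type": "AdamW", "params": {"lr": 1e-3}},  # placeholder lr
    }


configs = {
    "zero2 optim offload": make_zero_config(2, offload_optimizer=True),
    "zero3 full offload": make_zero_config(3, offload_optimizer=True, offload_param=True),
    "zero3 optim offload": make_zero_config(3, offload_optimizer=True),
    "zero3 param offload": make_zero_config(3, offload_param=True),
    "zero3 not offload": make_zero_config(3),
}

for name, cfg in configs.items():
    print(name, cfg["zero_optimization"])
```

A dictionary like this can be passed as the `config` argument of `deepspeed.initialize`, or dumped to a JSON file.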

Distributed learning will shuffle the data for each GPU, so you won't be able to find the source specified here up to scaler.

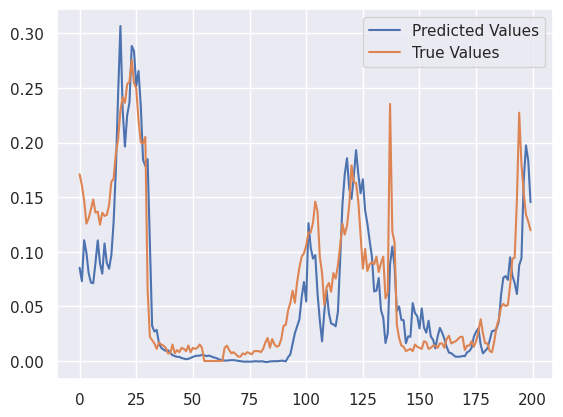

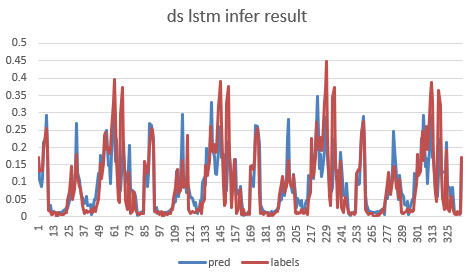

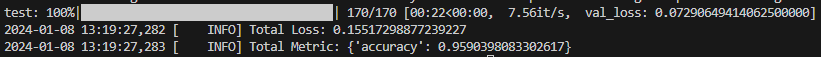

Time Series Task

| category | image |
|---|---|
| label |  |
| CPU |  |
| DDP |  |
| DeepSpeed |  |

IMDB (Binary Classification) Task

| category | image |
|---|---|
| FSDP |  |

tensorboard - I personally find it too inconvenient.

useful link: https://github.com/prigoyal/pytorch_memonger/blob/master/tutorial/Checkpointing_for_PyTorch_models.ipynb

I don't have much understanding of distributed learning, so I'm looking for someone to help me out. PRs are always welcome.

Bugfixes and improvements are always welcome.

If you can recommend any accelerator-related blogs or videos for me to study, I would be grateful. (in an issue or something)