For more details, please see the VST GitHub code and the VST paper.

$ pip install -r /utils/requirements.txt

We use the training set of DUTS to train our VST for RGB SOD. In addition, we follow EGNet to generate contour maps of the DUTS training set for training. You can directly download the generated contour maps (DUTS-TR-Contour) from [baidu pan fetch code: ow76 | Google drive] and put them into the data folder.
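You may prefer to regenerate DUTS-TR-Contour locally instead of downloading it. The sketch below shows one common way to derive a contour map from a binary saliency mask: a 3x3 morphological gradient, which is dilation minus erosion. This is an assumption for illustration and not necessarily the exact procedure used by EGNet or VST, so the released contour maps may differ in edge width. The function names `neighbourhood_stack` and `mask_to_contour` are hypothetical.

```python
import numpy as np


def neighbourhood_stack(mask: np.ndarray) -> np.ndarray:
    """Return the 3x3 neighbourhood of every pixel as a (9, H, W) stack.

    Edge padding replicates border pixels, so the image border itself is
    not treated as background.
    """
    padded = np.pad(mask, 1, mode="edge")
    h, w = mask.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(3) for dx in range(3)])


def mask_to_contour(mask: np.ndarray, threshold: int = 128) -> np.ndarray:
    """Turn an 8-bit saliency mask into a 0/255 contour map (hypothetical helper).

    Uses a 3x3 morphological gradient: pixels where the dilated and eroded
    binary masks disagree lie on the object boundary.
    """
    binary = (mask >= threshold).astype(np.uint8)
    neighbours = neighbourhood_stack(binary)
    dilated = neighbours.max(axis=0)  # 1 if any neighbour is foreground
    eroded = neighbours.min(axis=0)   # 1 only if all neighbours are foreground
    return ((dilated - eroded) * 255).astype(np.uint8)
```

To apply it to a DUTS-TR-Mask image, first load the mask as an 8-bit grayscale array, for example with Pillow's `Image.open(path).convert("L")`. Then save the result under DUTS-TR-Contour.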

We use the testing sets of DUTS, ECSSD, HKU-IS, PASCAL-S, DUT-O, and SOD to test our VST. After downloading them, put them into the /data folder.

Your Data folder should look like this:

-- Data
|-- DUTS
|   |-- DUTS-TR
|   |   |-- DUTS-TR-Image
|   |   |-- DUTS-TR-Mask
|   |   |-- DUTS-TR-Contour
|   |-- DUTS-TE
|   |   |-- DUTS-TE-Image
|   |   |-- DUTS-TE-Mask
|-- ECSSD
|   |-- images
|   |-- GT
...
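Before training, you can check that your folders match the tree above. The helper below is a hypothetical sketch and is not part of the VST code. It checks only the directories listed explicitly in the tree, not the other test sets hidden behind `...`, and it assumes the root folder is named Data.

```python
from pathlib import Path
from typing import List, Union

# Directories taken from the tree above; the "..." entries (the remaining
# test sets) are deliberately not checked because their layout is not given.
EXPECTED_DIRS = [
    "DUTS/DUTS-TR/DUTS-TR-Image",
    "DUTS/DUTS-TR/DUTS-TR-Mask",
    "DUTS/DUTS-TR/DUTS-TR-Contour",
    "DUTS/DUTS-TE/DUTS-TE-Image",
    "DUTS/DUTS-TE/DUTS-TE-Mask",
    "ECSSD/images",
    "ECSSD/GT",
]


def missing_dirs(root: Union[str, Path] = "Data") -> List[str]:
    """Return the expected sub-directories that do not exist under root (hypothetical helper)."""
    base = Path(root)
    return [rel for rel in EXPECTED_DIRS if not (base / rel).is_dir()]
```

Once everything is in place, `print(missing_dirs("Data"))` should print an empty list.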

- Download the pretrained T2T-ViT_t-14 model [baidu pan fetch code: 2u34 | Google drive] and put it into the pretrained_model/ folder.
- Run

$ python train_test_eval.py --Training True --Testing True --Evaluation True

for training, testing, and evaluation. The predictions will be saved in the preds/ folder and the evaluation results in the result.txt file.
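The three stage flags can be combined as needed. Below is a hypothetical Python wrapper, with the hypothetical names `vst_command` and `run_vst`. It assumes that omitting a flag leaves that stage disabled, which is how the testing-only command in this README reads. It is not part of the VST code.

```python
import subprocess
import sys
from typing import List


def vst_command(training: bool = False, testing: bool = False,
                evaluation: bool = False) -> List[str]:
    """Build the train_test_eval.py command line (hypothetical wrapper).

    Only the enabled stages are passed, each as "--Flag True", mirroring the
    commands in this README; disabled stages are simply omitted.
    """
    cmd = [sys.executable, "train_test_eval.py"]
    for flag, enabled in (("--Training", training),
                          ("--Testing", testing),
                          ("--Evaluation", evaluation)):
        if enabled:
            cmd += [flag, "True"]
    return cmd


def run_vst(cwd: str = ".", **stages: bool) -> None:
    """Run train_test_eval.py from the repository root; raises if it fails."""
    subprocess.run(vst_command(**stages), cwd=cwd, check=True)
```

For example, `run_vst(testing=True, evaluation=True)` mirrors the testing-only command in the next section.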

- Download our pretrained RGB_VST.pth [baidu pan fetch code: pe54 | Google drive] and put it into the checkpoint/ folder.
- Run

$ python train_test_eval.py --Testing True --Evaluation True

for testing and evaluation. The predictions will be saved in the preds/ folder and the evaluation results in the result.txt file.

Our saliency maps can be downloaded from [baidu pan fetch code: 92t0 | Google drive].

Download our dataset from [baidu pan fetch code: 6666] and put it into the data/ folder.

Your /data folder should look like this:

-- data
|-- input
|   |-- 1.gif
|   |-- 2.gif
|   |-- ...

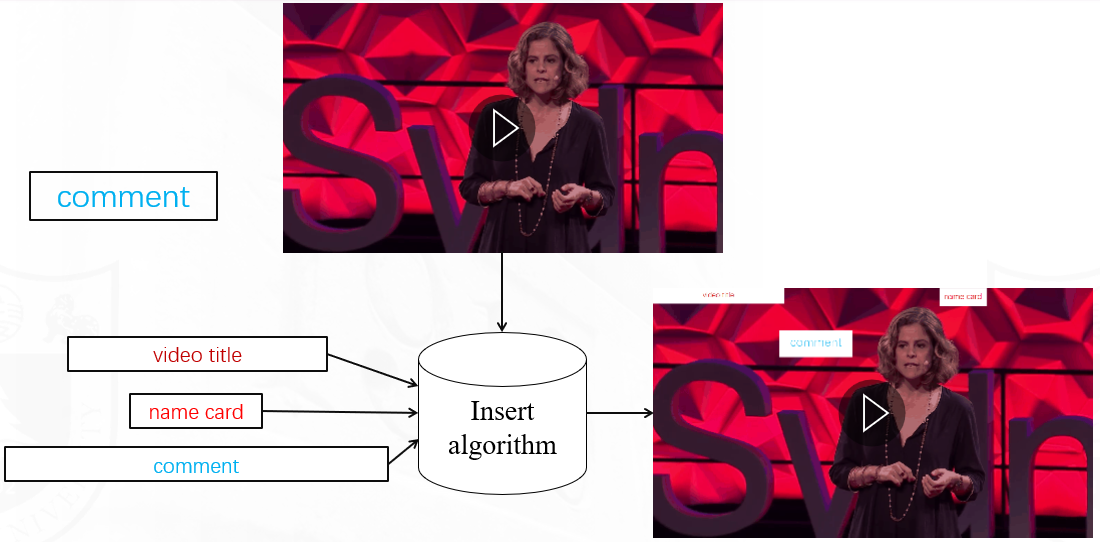

|-- comment.png
|-- name_card.png
|-- video_title.png
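The input/ folder holds numbered GIFs. If you process them yourself, note that a plain lexical sort places 10.gif before 2.gif. The small helpers below, `numeric_key` and `list_inputs`, are hypothetical and not part of Main.py. They order the files numerically, assuming the file stems are plain integers as in the tree above.

```python
from pathlib import Path
from typing import List, Tuple, Union


def numeric_key(name: str) -> Tuple[int, int, str]:
    """Sort key: purely numeric stems first, by value; anything else after, by name."""
    stem = Path(name).stem
    if stem.isdigit():
        return (0, int(stem), name)
    return (1, 0, name)


def list_inputs(input_dir: Union[str, Path] = "data/input") -> List[Path]:
    """Return the .gif files in input_dir in numeric order (hypothetical helper)."""
    return sorted(Path(input_dir).glob("*.gif"), key=lambda p: numeric_key(p.name))
```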

- Download our pretrained RGB_VST.pth [baidu pan fetch code: pe54 | Google drive] and put it into the checkpoint/ folder.
- Run Main.py for testing.

For more details, please see [baidu pan fetch code: 6666].

We thank the authors of VST for releasing their code.

If you find our work helpful, please cite:

@InProceedings{Liu_2021_ICCV,
  author = {Liu, Nian and Zhang, Ni and Wan, Kaiyuan and Shao, Ling and Han, Junwei},
  title = {Visual Saliency Transformer},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month = {October},
  year = {2021},
  pages = {4722-4732}
}