📢FreeTalker - Generate both speech-driven and text-driven speaker.

The code was tested on an NVIDIA GeForce RTX 2080 Ti (CUDA version 12.2).

conda create -n UnifiedGesture python==3.7

conda activate UnifiedGesture

pip install -r requirements.txt

If you want to use a higher CUDA version (for example, on an NVIDIA GeForce RTX 3080), change the torch, torchvision, and torchaudio versions in requirements.txt to xxx+cu111.

Or simply use Torch 1.13+ with the corresponding versions of torchvision and torchaudio.
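The version change described above can be sketched as a small helper. This is only an illustration: it assumes the pins in requirements.txt have the form name==version, which may not match the actual file, and the function name add_cuda_suffix is hypothetical.

```python
import re

# Matches pins such as "torch==X.Y.Z" or "torch==X.Y.Z+cu102" (assumed format).
_PIN = re.compile(r"^(torch|torchvision|torchaudio)==([0-9][^+\s]*)(\+\S+)?\s*$")


def add_cuda_suffix(requirements_text, cuda_tag="cu111"):
    """Return the requirements text with +<cuda_tag> on the three torch pins.

    Lines that are not torch/torchvision/torchaudio pins are left untouched.
    An existing +cuXXX suffix is replaced rather than duplicated.
    """
    out = []
    for line in requirements_text.splitlines():
        match = _PIN.match(line.strip())
        if match:
            line = f"{match.group(1)}=={match.group(2)}+{cuda_tag}"
        out.append(line)
    return "\n".join(out)


# Example usage (paths are assumptions):
# with open("requirements.txt") as f:
#     print(add_cuda_suffix(f.read(), "cu111"))
```

The helper only rewrites the text; whether a given version actually has a matching +cu111 build still has to be checked against the PyTorch release you pick.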

Download files such as pre-trained models from Google Drive or Baidu Netdisk.

Put the pre-trained models and data:

- Diffusion model

- VQVAE

- Retargeting network

- Test data (Trinity, ZEGGS)

into the corresponding folders.

Download the WavLM model and put it in ./diffusion_latent/wavlm_cache

cd ./diffusion_latent/

python sample.py --config=./configs/all_data.yml --gpu 0 --save_dir='./result_quick_start/Trinity' --audio_path="../dataset/ZEGGS/all_speech/005_Neutral_4_x_1_0.npy" --model_path='./experiments/256_seed_6_aux_model001700000_reinforce_diffusion_onlydiff_gradnorm0.1_lr1e-7_max0_seed0/ckpt/diffusion_epoch_1.pt'

Optional:

- If you want to use your own audio, change --audio_path to your own audio path, such as --audio_path='../dataset/Trinity/audio/Recording_006.wav'

- You can refer to generate_result() in sample.py to generate all the files rather than only one file.
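As an alternative to editing generate_result(), several audio files can be processed by calling sample.py once per file. The sketch below only builds the argument lists. It reuses the flags from the command above and assumes sample.py accepts them in --name=value form; the helper name build_sample_commands is hypothetical.

```python
from pathlib import Path


def build_sample_commands(audio_paths, model_path, save_dir,
                          config="./configs/all_data.yml", gpu=0):
    """Build one sample.py argument list per .wav/.npy audio file.

    Files with other extensions are skipped. Nothing is executed here.
    """
    commands = []
    for audio in sorted(Path(p) for p in audio_paths):
        if audio.suffix.lower() not in {".wav", ".npy"}:
            continue
        commands.append([
            "python", "sample.py",
            f"--config={config}",
            "--gpu", str(gpu),
            f"--save_dir={save_dir}",
            f"--audio_path={audio.as_posix()}",
            f"--model_path={model_path}",
        ])
    return commands


# Example usage, run from ./diffusion_latent/ (the model path is a placeholder):
# import subprocess
# audio_files = Path("../dataset/Trinity/audio").glob("*.wav")
# for cmd in build_sample_commands(audio_files, "path/to/model.pt",
#                                  "./result_quick_start/Trinity"):
#     subprocess.run(cmd, check=True)
```

Passing a list to subprocess.run avoids shell quoting problems, at the cost of reloading the model for every file.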

You will get the generated motion in the ./diffusion_latent/result_quick_start/Trinity/ folder, in files named xxx_recon.npy, xxx_code.npy, and xxx.npy.

Then select the target skeleton and decode the primal gesture:

cd ../retargeting/

python demo.py --target ZEGGS --input_file "../diffusion_latent/result_quick_start/Trinity/005_Neutral_4_x_1_0_minibatch_1080_[0, 0, 0, 0, 0, 3, 0]_123456_recon.npy" --ref_path './datasets/bvh2latent/ZEGGS/065_Speech_0_x_1_0.npy' --output_path '../result/inference/Trinity/' --cuda_device cuda:0

or

mkdir "../diffusion_latent/result_quick_start/ZEGGS/"

cp "../diffusion_latent/result_quick_start/Trinity/005_Neutral_4_x_1_0_minibatch_1080_[0, 0, 0, 0, 0, 3, 0]_123456_recon.npy" "../diffusion_latent/result_quick_start/ZEGGS/"

python demo.py --target Trinity --input_file "../diffusion_latent/result_quick_start/ZEGGS/005_Neutral_4_x_1_0_minibatch_1080_[0, 0, 0, 0, 0, 3, 0]_123456_recon.npy" --ref_path './datasets/bvh2latent/ZEGGS/065_Speech_0_x_1_0.npy' --output_path '../result/inference/Trinity/' --cuda_device cuda:0

You will get 005_Neutral_4_x_1_0_minibatch_1080_[0, 0, 0, 0, 0, 3, 0]_123456_recon.bvh in the "./result/inference/Trinity/" folder.
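The result filenames contain spaces and square brackets, so they are easy to misquote in a shell. A minimal sketch that builds the demo.py arguments as a Python list instead is given here. The flags are the ones used in the commands above; the helper names are hypothetical, and the .bvh naming rule is inferred from the example output name rather than from the code.

```python
from pathlib import Path


def build_demo_command(input_file, target, ref_path, output_path,
                       cuda_device="cuda:0"):
    """Return the demo.py argument list for one *_recon.npy file."""
    return [
        "python", "demo.py",
        "--target", target,
        "--input_file", Path(input_file).as_posix(),
        "--ref_path", ref_path,
        "--output_path", output_path,
        "--cuda_device", cuda_device,
    ]


def expected_bvh(input_file, output_path):
    """Guess the output path: same file stem as the input, .bvh extension."""
    return Path(output_path) / (Path(input_file).stem + ".bvh")


# Example usage, run from ./retargeting/:
# import subprocess
# recon = ("../diffusion_latent/result_quick_start/Trinity/"
#          "005_Neutral_4_x_1_0_minibatch_1080_[0, 0, 0, 0, 0, 3, 0]_123456_recon.npy")
# cmd = build_demo_command(recon, "ZEGGS",
#                          "./datasets/bvh2latent/ZEGGS/065_Speech_0_x_1_0.npy",
#                          "../result/inference/Trinity/")
# subprocess.run(cmd, check=True)
```

Because each argument is a separate list element, the brackets and spaces in the filename need no escaping.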

You can refer to DiffuseStyleGesture for how to visualize the generated motion in Blender.

The results are shown below. Try the output with different skeletons.

0001-4320.1.mp4

Finally, foot sliding can be partially mitigated with inverse kinematics (IK).

cd ./datasets/

python process_bvh.py --step IK --source_path "../../result/inference/Trinity/" --ref_bvh "./Mixamo_new_2/ZEGGS/067_Speech_2_x_1_0.bvh"

You will get 005_Neutral_4_x_1_0_minibatch_1080_[0, 0, 0, 0, 0, 3, 0]_123456_recon_fix.bvh in the same folder as before.

The results are shown below; orange indicates the result of IK optimization performed on the lower body. You can modify the threshold for foot contact speed to strike a balance between foot sliding and smoothness.
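To illustrate what a foot contact speed threshold does, here is a minimal sketch that labels frames as "in contact" when a foot joint moves slower than the threshold. It is not the implementation in process_bvh.py; the function name, the input layout (one (x, y, z) position per frame), and the units are assumptions.

```python
import math


def foot_contact_mask(positions, fps, speed_threshold):
    """Label each frame True when the foot speed is below speed_threshold.

    positions: list of (x, y, z) tuples, one per frame, for a single foot joint.
    Speed is the distance travelled since the previous frame times fps.
    The first frame has no previous frame, so it copies the second frame's label.
    """
    mask = [False] * len(positions)
    for i in range(1, len(positions)):
        step = math.sqrt(sum((a - b) ** 2
                             for a, b in zip(positions[i], positions[i - 1])))
        mask[i] = step * fps < speed_threshold
    if len(positions) > 1:
        mask[0] = mask[1]
    return mask


# Toy trajectory: still, still, fast step, nearly still.
frames = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 0.001)]
print(foot_contact_mask(frames, fps=10, speed_threshold=0.5))
```

A higher threshold marks more frames as contact, so IK pins the foot more often (less sliding, but potentially less smooth motion); a lower threshold does the opposite.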

0001-4320-2.mp4

Here we use only a small amount of data for illustration. Please get the full data from Trinity and ZEGGS.

Place the data from step 2 in the corresponding folder.

python process_bvh.py --step Trinity --source_path "../../dataset/Trinity/" --save_path "./Trinity_ZEGGS/Trinity/"

python process_bvh.py --step ZEGGS --source_path "../../dataset/ZEGGS/clean/" --save_path "./Trinity_ZEGGS/ZEGGS/"

python process_bvh.py --step foot_contact --source_path "./Trinity_ZEGGS/Trinity/" --save_path "./Trinity_ZEGGS/Trinity_aux/"

python process_bvh.py --step foot_contact --source_path "./Trinity_ZEGGS/ZEGGS/" --save_path "./Trinity_ZEGGS/ZEGGS_aux/"

cd ../..

python process_audio.py

Change dataset_name = 'Mixamo_new_2' on line 7 of ./retargeting/option_parser.py to dataset_name = 'Trinity_ZEGGS'.

cd ./retargeting/

python datasets/preprocess.py

python train.py --save_dir=./my_model/ --cuda_device 'cuda:0'

The model will be saved in ./my_model/models/

(Optional: change the epoch in model.load(epoch=16000) on line 73 of ./eval_single_pair.py to the one you need.)

python demo.py --mode bvh2latent --save_dir ./my_model/

You will get the latent results of the retargeting in ./datasets/Trinity_ZEGGS/bvh2upper_lower_root/.

Data preparation to generate lmdb files:

python process_root_vel.py

python ./datasets/latent_to_lmdb.py --base_path ./datasets/Trinity_ZEGGS/bvh2upper_lower_root

You will get the lmdb files in the ./retargeting/datasets/Trinity_ZEGGS/bvh2upper_lower_root/lmdb_latent_vel/ folder.

cd ../codebook

python train.py --config=./configs/codebook.yml --train --gpu 0

The trained model is saved in ./result/my_codebook/. Then generate the code for the upper body:

python VisualizeCodebook.py --config=./configs/codebook.yml --train --gpu 0

cd ..

python process_code.py

python ./make_lmdb.py --base_path ./dataset/

You will get the lmdb files in the ./dataset/all_lmdb_aux/ folder.

Training the diffusion model:

cd ./diffusion_latent

python end2end.py --config=./configs/all_data.yml --gpu 1 --save_dir "./result/my_diffusion"

The trained diffusion model will be saved in ./result/my_diffusion/

# collect demonstrations

python data_loader/lmdb_data_loader.py --config=./configs/all_data.yml --gpu 0

# train reward model

python reward_model_policy.py

# RL training

python reinforce.py --config=./configs/all_data.yml --gpu 0

- If the data.mdb file is too small (8KB), check for issues generating the lmdb file.

- If you run into a urllib3 problem, see this issue.

- You can modify the code yourself to use BEAT, TWH, etc. This will not be demonstrated here.
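The size check from the first note can be automated before starting a long training run. This is a small sketch under the assumption that each lmdb folder is a standard LMDB directory containing a data.mdb file; the helper name lmdb_looks_empty and the example path are hypothetical.

```python
import os


def lmdb_looks_empty(lmdb_dir, min_bytes=8 * 1024):
    """Return True if data.mdb is missing or no larger than min_bytes (8KB).

    A file of roughly 8KB usually means nothing was written to the database.
    """
    data_file = os.path.join(lmdb_dir, "data.mdb")
    if not os.path.isfile(data_file):
        return True
    return os.path.getsize(data_file) <= min_bytes


# Example usage (the folder name is an assumption):
# if lmdb_looks_empty("./dataset/all_lmdb_aux/some_split"):
#     raise SystemExit("lmdb looks empty; re-run the lmdb generation step")
```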

We are grateful to

- Skeleton-Aware Networks for Deep Motion Retargeting,

- MDM: Human Motion Diffusion Model,

- Bailando: 3D dance generation via Actor-Critic GPT with Choreographic Memory, and

- Edge: editable dance generation from music

for making their code publicly available, which helped significantly in this work.

If you find this work useful, please cite the paper with the following bibtex:

@inproceedings{10.1145/3581783.3612503,

author = {Yang, Sicheng and Wang, Zilin and Wu, Zhiyong and Li, Minglei and Zhang, Zhensong and Huang, Qiaochu and Hao, Lei and Xu, Songcen and Wu, Xiaofei and Yang, Changpeng and Dai, Zonghong},

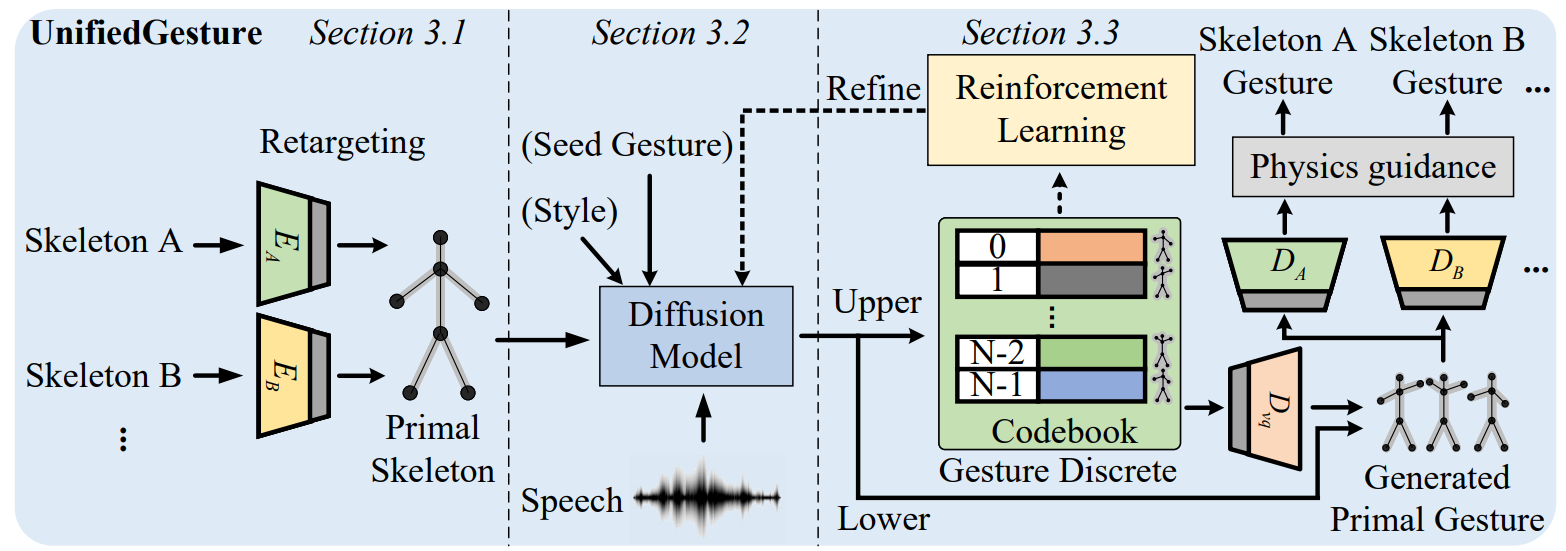

title = {UnifiedGesture: A Unified Gesture Synthesis Model for Multiple Skeletons},

year = {2023},

isbn = {9798400701085},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3581783.3612503},

doi = {10.1145/3581783.3612503},

abstract = {The automatic co-speech gesture generation draws much attention in computer animation. Previous works designed network structures on individual datasets, which resulted in a lack of data volume and generalizability across different motion capture standards. In addition, it is a challenging task due to the weak correlation between speech and gestures. To address these problems, we present UnifiedGesture, a novel diffusion model-based speech-driven gesture synthesis approach, trained on multiple gesture datasets with different skeletons. Specifically, we first present a retargeting network to learn latent homeomorphic graphs for different motion capture standards, unifying the representations of various gestures while extending the dataset. We then capture the correlation between speech and gestures based on a diffusion model architecture using cross-local attention and self-attention to generate better speech-matched and realistic gestures. To further align speech and gesture and increase diversity, we incorporate reinforcement learning on the discrete gesture units with a learned reward function. Extensive experiments show that UnifiedGesture outperforms recent approaches on speech-driven gesture generation in terms of CCA, FGD, and human-likeness.},

booktitle = {Proceedings of the 31st ACM International Conference on Multimedia},

pages = {1033–1044},

numpages = {12},

keywords = {neural motion processing, data-driven animation, gesture generation},

location = {Ottawa ON, Canada},

series = {MM '23}

}

If you have any questions, please contact us at yangsc21@mails.tsinghua.edu.cn or wangzl21@mails.tsinghua.edu.cn.