-

What is machine learning?

-

Supervised learning

e.g., image labeling, email spam filtering, predicting exam scores

Types

+ Predicting the final exam score based on time spent - regression

+ Pass/non-pass based on time spent - binary classification

+ Letter grade (A, B, C, E and F) based on time spent - multi-class classification

Unsupervised learning

Learns from unlabeled data

-

- Goal: predicting a continuous value (linear regression)

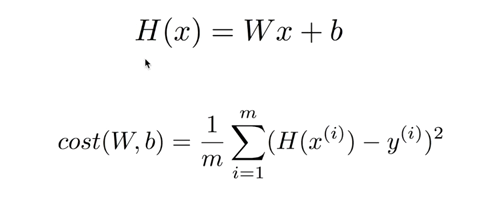

- Hypothesis and cost (loss) function

-

Goal: Minimize cost

$\underset{W,b}{\text{minimize}}\ \text{cost}(W, b)$, solved with the gradient descent algorithm
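A minimal NumPy sketch of this loop, assuming the usual linear hypothesis $H(x) = Wx + b$ and a mean-squared-error cost. The toy data ($y = 2x + 1$), learning rate, and step count are illustrative assumptions, not values from the notes.

```python
import numpy as np

def hypothesis(W, b, x):
    # Linear hypothesis H(x) = Wx + b
    return W * x + b

def cost(W, b, x, y):
    # Mean squared error: average of (H(x) - y)^2 over the training set
    return np.mean((hypothesis(W, b, x) - y) ** 2)

def gradient_descent(x, y, learning_rate=0.05, steps=2000):
    W, b = 0.0, 0.0
    n = len(x)
    for _ in range(steps):
        error = hypothesis(W, b, x) - y
        # Partial derivatives of cost(W, b) with respect to W and b
        dW = 2.0 / n * np.sum(error * x)
        db = 2.0 / n * np.sum(error)
        # Step downhill, scaled by the learning rate
        W -= learning_rate * dW
        b -= learning_rate * db
    return W, b

# Hypothetical toy data generated from y = 2x + 1
x_train = np.array([1.0, 2.0, 3.0, 4.0])
y_train = 2.0 * x_train + 1.0
W, b = gradient_descent(x_train, y_train)
print(W, b, cost(W, b, x_train, y_train))
```

W and b should end up close to 2 and 1, the values the toy data was generated from.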

-

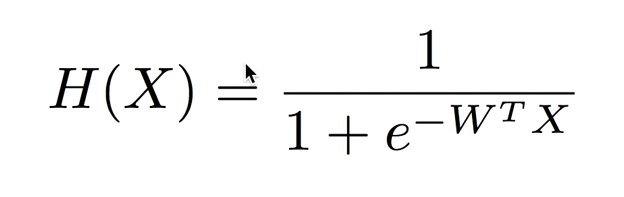

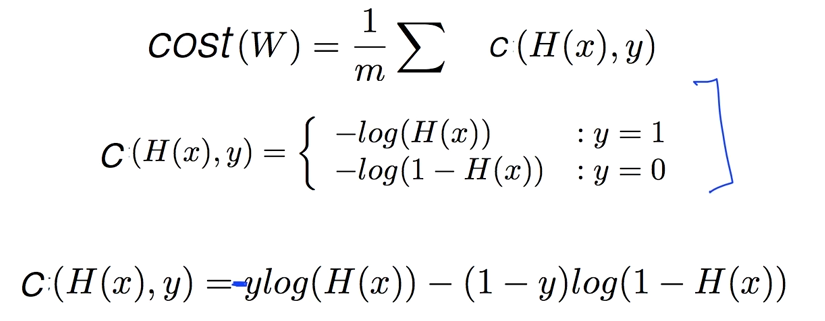

Goal: binary classification, e.g., spam detection (spam or ham), Facebook feed (show or hide), credit card fraud detection (legitimate or fraud), stock...

-

Hypothesis

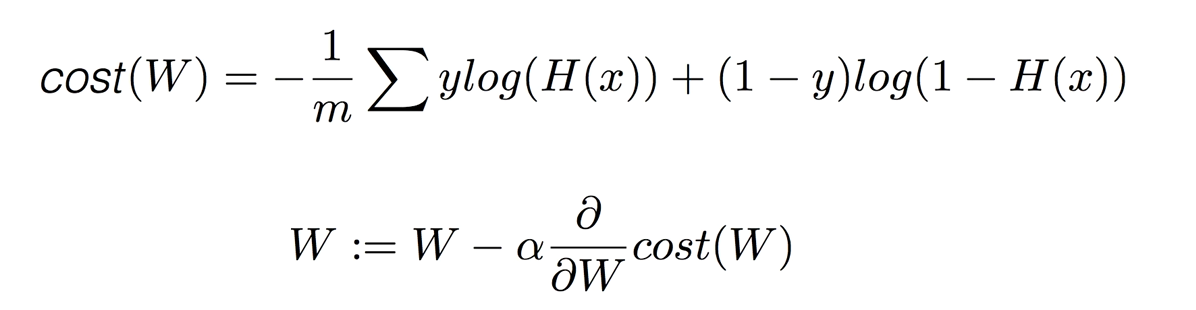

Gradient descent algorithm

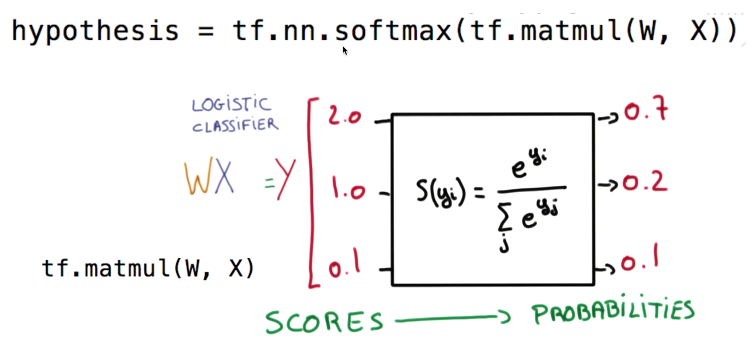
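A minimal NumPy sketch of logistic regression, assuming the standard hypothesis $H(X) = \text{sigmoid}(XW + b)$ and cross-entropy cost, trained with gradient descent. The toy data (one standardized feature, label 1 when it is positive), learning rate, and step count are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def hypothesis(W, b, X):
    # H(X) = sigmoid(XW + b): a probability between 0 and 1
    return sigmoid(X @ W + b)

def cost(W, b, X, y):
    # Cross-entropy (log loss), averaged over the samples
    h = np.clip(hypothesis(W, b, X), 1e-12, 1 - 1e-12)   # avoid log(0)
    return -np.mean(y * np.log(h) + (1 - y) * np.log(1 - h))

def train(X, y, learning_rate=0.1, steps=1000):
    W = np.zeros(X.shape[1])
    b = 0.0
    n = X.shape[0]
    for _ in range(steps):
        h = hypothesis(W, b, X)
        # Gradient of the cross-entropy cost: X^T (h - y) / n for W, mean(h - y) for b
        W -= learning_rate * (X.T @ (h - y)) / n
        b -= learning_rate * np.mean(h - y)
    return W, b

def predict(W, b, X):
    # Threshold the probability at 0.5 to get a 0/1 label
    return (hypothesis(W, b, X) >= 0.5).astype(int)

# Hypothetical toy data: one standardized feature, label 1 when it is positive
X_train = np.array([[-3.0], [-2.0], [-1.0], [1.0], [2.0], [3.0]])
y_train = np.array([0, 0, 0, 1, 1, 1])
W, b = train(X_train, y_train)
print(predict(W, b, X_train))
```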

Multinomial classification: predicting one of several classes, like a letter grade

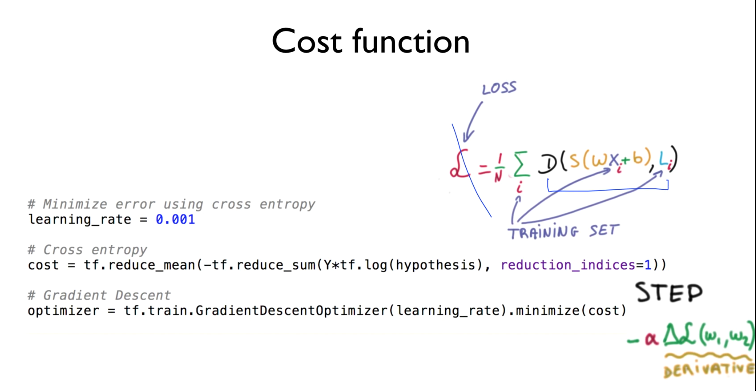

- Hypothesis

- Cost function

- Goal: Minimize cost

...
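A minimal NumPy sketch of the multinomial case, assuming the common softmax hypothesis with a cross-entropy cost (the notes list hypothesis, cost, and goal without spelling out the formulas). The three-class toy data (think grades A, B, C), learning rate, and step count are hypothetical.

```python
import numpy as np

def softmax(scores):
    # Subtract each row's max for numerical stability; each row then sums to 1
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(probs, labels):
    # Average of -log(probability assigned to the correct class)
    n = probs.shape[0]
    return -np.mean(np.log(probs[np.arange(n), labels]))

def train(X, labels, num_classes, learning_rate=0.5, steps=2000):
    n, d = X.shape
    W = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    one_hot = np.eye(num_classes)[labels]
    for _ in range(steps):
        probs = softmax(X @ W + b)
        # Gradient of softmax + cross-entropy w.r.t. the scores is (probs - one_hot) / n
        grad = (probs - one_hot) / n
        W -= learning_rate * X.T @ grad
        b -= learning_rate * grad.sum(axis=0)
    return W, b

# Hypothetical toy data: three classes in two features
X_train = np.array([[1.0, 0.1], [0.9, -0.1],
                    [0.1, 1.0], [-0.1, 0.9],
                    [-1.0, -0.9], [-0.9, -1.0]])
labels = np.array([0, 0, 1, 1, 2, 2])
W, b = train(X_train, labels, num_classes=3)
probs = softmax(X_train @ W + b)
print(probs.argmax(axis=1))
```

The predicted class is the one with the highest probability, so each row of `probs` acts as the model's confidence over the classes.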

XOR

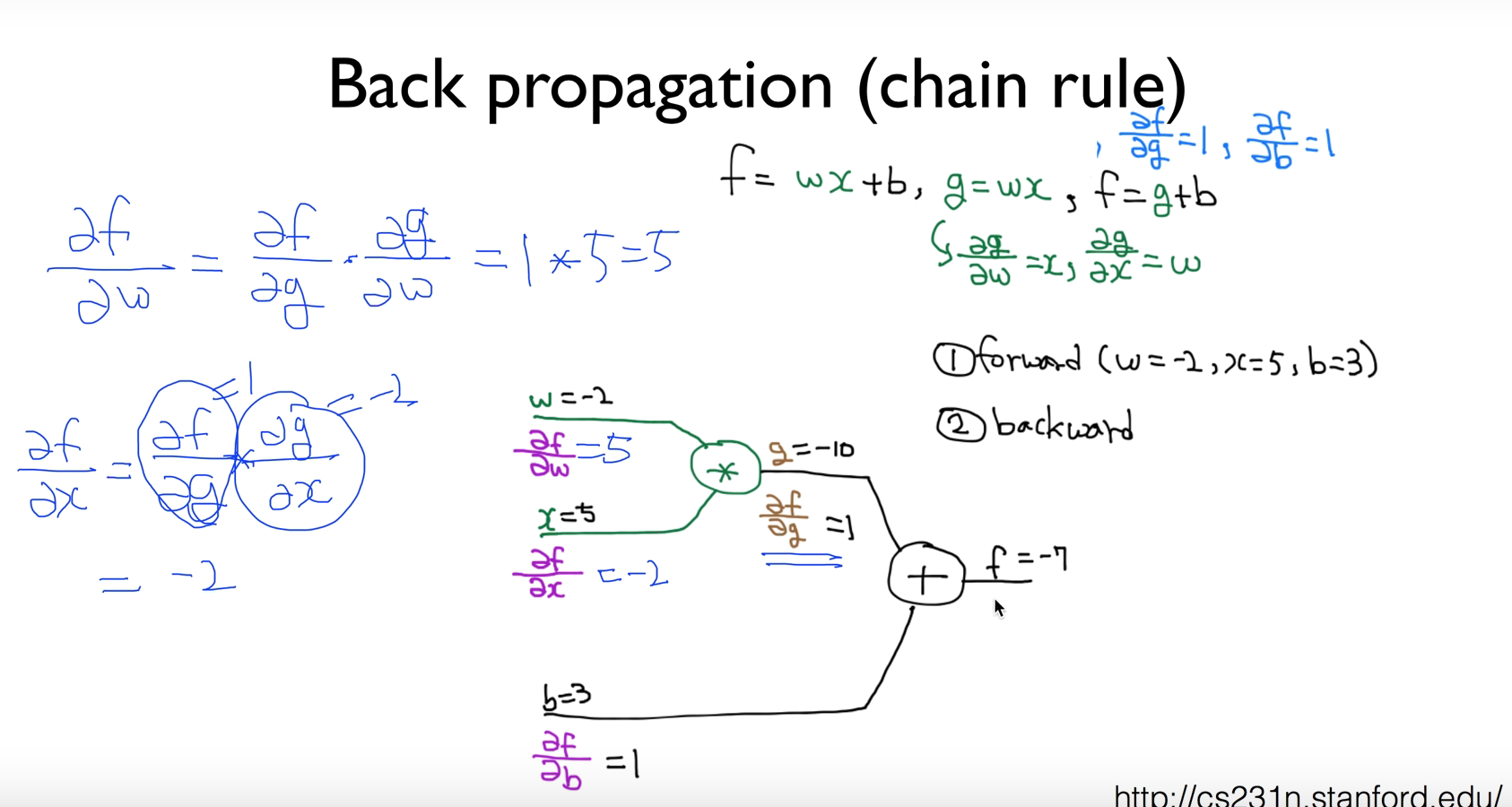

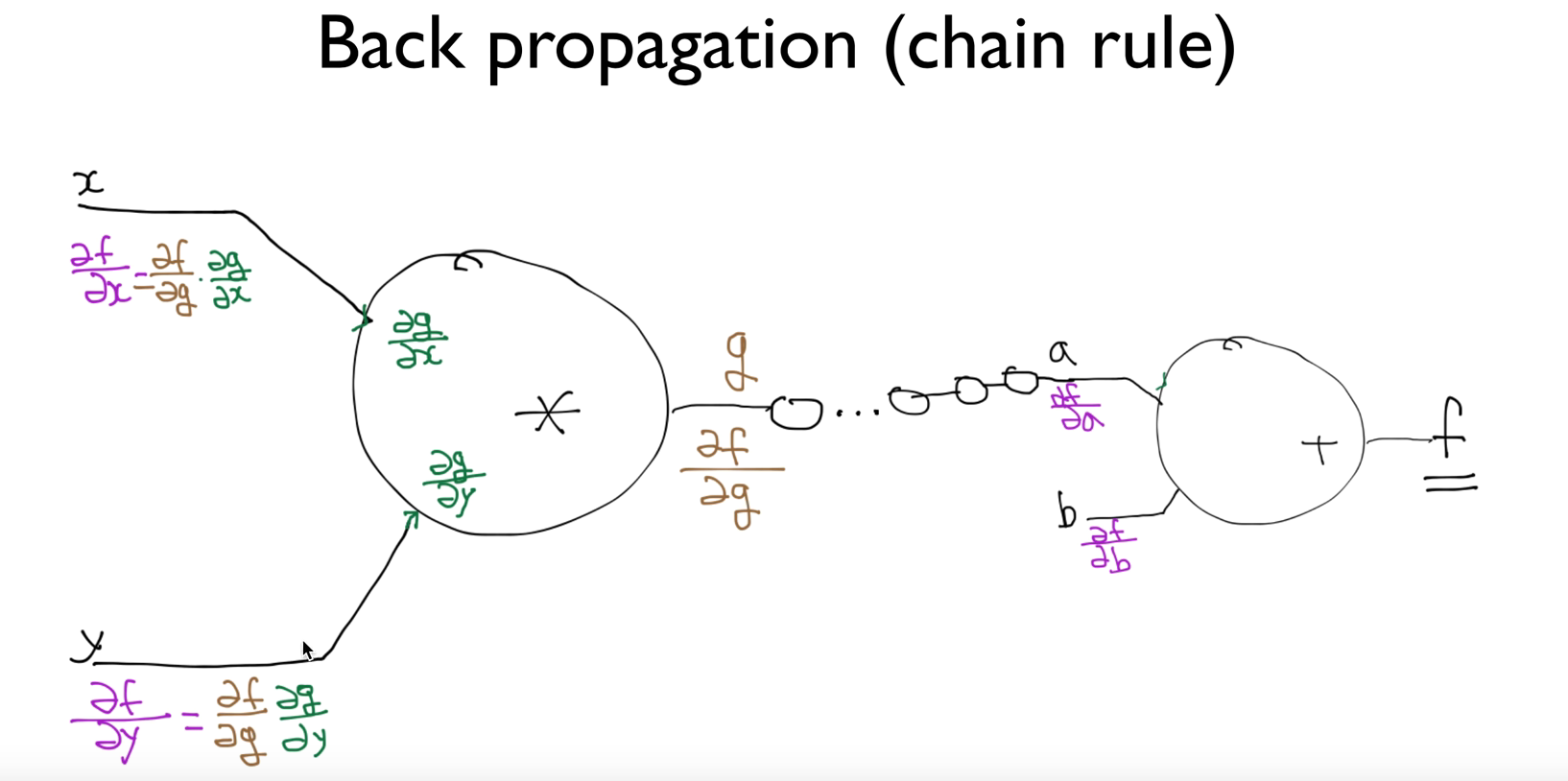

How can we learn W and b from training data? -> !!!

Backpropagation (chain rule)
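A minimal NumPy sketch of backpropagation on XOR, assuming a 2-8-1 network with sigmoid activations and a cross-entropy cost; the layer sizes, seed, learning rate, and step count are illustrative choices. The backward pass applies the chain rule layer by layer, from the cost back to each weight.

```python
import numpy as np

# XOR truth table
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
Y = np.array([[0], [1], [1], [0]], dtype=float)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward_backward(params, X, Y):
    """Cost and gradients of a 2-layer sigmoid network, via backpropagation."""
    W1, b1, W2, b2 = params
    # Forward pass
    a1 = sigmoid(X @ W1 + b1)            # hidden layer
    h = sigmoid(a1 @ W2 + b2)            # output layer (probability of 1)
    n = X.shape[0]
    cost = -np.mean(Y * np.log(h) + (1 - Y) * np.log(1 - h))
    # Backward pass: chain rule from the cost back to each weight
    dz2 = (h - Y) / n                    # d cost / d(output pre-activation)
    dW2 = a1.T @ dz2
    db2 = dz2.sum(axis=0)
    dz1 = (dz2 @ W2.T) * a1 * (1 - a1)   # sigmoid'(z) = a * (1 - a)
    dW1 = X.T @ dz1
    db1 = dz1.sum(axis=0)
    return cost, [dW1, db1, dW2, db2]

rng = np.random.default_rng(0)
params = [rng.standard_normal((2, 8)), np.zeros(8),
          rng.standard_normal((8, 1)), np.zeros(1)]
initial_cost, _ = forward_backward(params, X, Y)
learning_rate = 0.5
for _ in range(5000):
    cost, grads = forward_backward(params, X, Y)
    params = [p - learning_rate * g for p, g in zip(params, grads)]
final_cost, _ = forward_backward(params, X, Y)
print(initial_cost, final_cost)
```

A finite-difference check (nudge one weight up and down, compare the change in cost with the backprop gradient) is a cheap way to confirm the chain-rule code is right.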

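Learning rate -> sets the size of each gradient descent step on the cost: too large overshoots or diverges, too small learns very slowly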
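A tiny sketch of the learning-rate effect, using the hypothetical cost $f(w) = w^2$ (gradient $2w$). Each step multiplies $w$ by $(1 - 2 \cdot \text{learning\_rate})$, so a small rate crawls, a moderate rate converges fast, and a rate above 1 makes $|w|$ grow: the steps overshoot and diverge.

```python
def gradient_descent_on_square(learning_rate, w0=1.0, steps=20):
    """Minimize the toy cost f(w) = w**2 (gradient 2w); return the final w."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * 2 * w
    return w

for lr in (0.01, 0.4, 1.1):
    print(lr, gradient_descent_on_square(lr))
```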

Data preprocessing -> standardization, e.g., `X_std[:,0] = (X[:,0] - X[:,0].mean()) / X[:,0].std()`
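The one-liner above standardizes a single column; a sketch that applies the same formula to every column at once via NumPy broadcasting. The data is hypothetical, chosen so the two features have very different scales.

```python
import numpy as np

def standardize(X):
    # Column-wise: subtract each feature's mean, divide by its standard deviation
    # (population std, ddof=0, same as X[:,0].std()). A constant column would divide by 0.
    return (X - X.mean(axis=0)) / X.std(axis=0)

# Hypothetical data: two features on very different scales
X = np.array([[1.0, 1000.0], [2.0, 3000.0], [3.0, 2000.0], [4.0, 4000.0]])
X_std = standardize(X)
print(X_std.mean(axis=0), X_std.std(axis=0))
```

After standardization every feature has mean 0 and standard deviation 1, so no single feature dominates the gradient steps just because of its units.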

Online learning -> keep updating the existing model as new data arrives, instead of retraining on the whole dataset

Sigmoid -> ReLU ... (in deep networks the sigmoid's small gradients vanish during backpropagation)
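A quick numeric sketch of why ReLU helps: backpropagation multiplies one activation derivative per layer (the weight factors are ignored here for simplicity). The sigmoid's derivative is at most 0.25, so ten sigmoid layers shrink the gradient to at most $0.25^{10}$ of its size, while ReLU's derivative is 1 for positive inputs.

```python
import numpy as np

def sigmoid_grad(z):
    s = 1.0 / (1.0 + np.exp(-z))
    return s * (1.0 - s)             # never larger than 0.25 (reached at z = 0)

def relu_grad(z):
    return (z > 0).astype(float)     # 1 for positive inputs, 0 otherwise

# Chain rule through 10 layers: multiply one derivative per layer
layers = 10
print(sigmoid_grad(0.0) ** layers)             # 0.25 ** 10, about 9.5e-07
print(relu_grad(np.array(1.0)) ** layers)      # stays 1.0
```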

Weight initialization -> RBM (Restricted Boltzmann Machine, encoder/decoder) pre-training

-> Xavier initialization
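A sketch of Xavier-style initialization in NumPy. `xavier_init` is the simplified variant that scales standard normal weights by $1/\sqrt{\text{fan\_in}}$; Glorot and Bengio's original uniform form uses variance $2/(\text{fan\_in} + \text{fan\_out})$, and the He variant (often paired with ReLU) uses $2/\text{fan\_in}$. The function names and the 784-256 layer size are hypothetical.

```python
import numpy as np

def xavier_init(fan_in, fan_out, rng):
    # Simplified Xavier variant: standard normal weights scaled by 1/sqrt(fan_in),
    # giving each weight variance 1/fan_in
    return rng.standard_normal((fan_in, fan_out)) / np.sqrt(fan_in)

def xavier_glorot_uniform(fan_in, fan_out, rng):
    # Glorot & Bengio's uniform form: U(-limit, limit), variance 2/(fan_in + fan_out)
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

def he_init(fan_in, fan_out, rng):
    # He et al. variant, commonly paired with ReLU: variance 2/fan_in
    return rng.standard_normal((fan_in, fan_out)) / np.sqrt(fan_in / 2)

rng = np.random.default_rng(0)
W1 = xavier_init(784, 256, rng)   # hypothetical layer: 784 inputs, 256 outputs
print(W1.shape, W1.std())
```

The idea in all three is the same: scale the initial weights by the layer's fan-in (and fan-out) so activations neither blow up nor shrink to zero as they pass through many layers.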

Overfitting -> more training data

-> reduce the number of features

-> regularization (keep the weights from taking very large values) -> L2 regularization (l2reg)

-> Dropout
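A minimal NumPy sketch of two of the remedies above: an L2 penalty added to the cost, and (inverted) dropout on a layer's activations. The function names, `reg_strength` (often written λ), and `keep_prob` values are illustrative assumptions.

```python
import numpy as np

def l2_regularized_cost(data_cost, weights, reg_strength):
    # Penalty lambda * sum(W^2) grows with large weights, pushing them toward small values
    penalty = sum(np.sum(W ** 2) for W in weights)
    return data_cost + reg_strength * penalty

def dropout(activations, keep_prob, rng, training=True):
    # Inverted dropout: during training, zero each unit with probability 1 - keep_prob
    # and scale survivors by 1 / keep_prob so the expected activation is unchanged.
    # At test time every unit is kept.
    if not training:
        return activations
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
W = np.array([[1.0, 2.0], [3.0, 4.0]])
print(l2_regularized_cost(1.0, [W], reg_strength=0.1))
print(dropout(np.ones(8), keep_prob=0.5, rng=rng))
```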

Ensemble

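Filters

A filter's weights extend through the full depth of the input and cover a small region (the filter size) at a time

Each filter position produces one output value

How many output values does a filter produce as it slides over the image?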

Output size (N = input size, F = filter size):

$\dfrac{N - F}{\text{stride}} + 1$

Padding

Keeps the image from shrinking too fast and marks where the edges are

Add zero padding around the border

Can make the output the same size as the input
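A minimal NumPy sketch of the output-size formula extended with padding P, $\frac{N - F + 2P}{\text{stride}} + 1$, plus a naive single-channel convolution that produces one value per filter position. It assumes square inputs and filters; the function names are hypothetical.

```python
import numpy as np

def conv_output_size(n, f, stride, pad=0):
    # ((N - F + 2P) / stride) + 1; the filter must fit evenly with this stride
    span = n - f + 2 * pad
    if span % stride != 0:
        raise ValueError("filter does not fit evenly with this stride")
    return span // stride + 1

def conv2d(image, kernel, stride=1, pad=0):
    # Single-channel convolution (cross-correlation, as in most DL libraries)
    padded = np.pad(image, pad)              # zero padding around the border
    f = kernel.shape[0]
    out = conv_output_size(image.shape[0], f, stride, pad)
    result = np.zeros((out, out))
    for i in range(out):
        for j in range(out):
            window = padded[i * stride:i * stride + f, j * stride:j * stride + f]
            result[i, j] = np.sum(window * kernel)   # one number per filter position
    return result

print(conv_output_size(7, 3, 1))          # 5
print(conv_output_size(7, 3, 2))          # 3
print(conv_output_size(7, 3, 1, pad=1))   # 7: same size as the input
```

With a 7x7 input and a 3x3 filter, stride 3 would raise an error, since (7 - 3) / 3 is not a whole number.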

How many weight variables?

e.g., six 5*5*3 filters: 5*5*3*6 = 450 weights (not counting biases)

Pooling (subsampling)

Why subsample? -> to make the layer smaller

Max pooling
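A minimal NumPy sketch of 2x2 max pooling with stride 2 on a small hypothetical 4x4 input: each window is replaced by its largest value, halving each spatial dimension.

```python
import numpy as np

def max_pool(x, size=2, stride=2):
    # Keep only the largest value in each size x size window
    out = (x.shape[0] - size) // stride + 1
    result = np.zeros((out, out), dtype=x.dtype)
    for i in range(out):
        for j in range(out):
            result[i, j] = x[i * stride:i * stride + size,
                             j * stride:j * stride + size].max()
    return result

x = np.array([[1, 1, 2, 4],
              [5, 6, 7, 8],
              [3, 2, 1, 0],
              [1, 2, 3, 4]])
print(max_pool(x))   # [[6 8]
                     #  [3 4]]
```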

Sequence data

An RNN keeps a state that carries information from earlier steps of the sequence
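A minimal NumPy sketch of the state update in a vanilla RNN: $h_t = \tanh(W_{hh} h_{t-1} + W_{xh} x_t)$ and $y_t = W_{hy} h_t$. Biases are omitted for brevity, and the class name, sizes, and small random initialization are illustrative assumptions.

```python
import numpy as np

class VanillaRNN:
    def __init__(self, input_size, hidden_size, output_size, seed=0):
        rng = np.random.default_rng(seed)
        # Small random weights (hypothetical initialization), no biases
        self.W_xh = rng.standard_normal((hidden_size, input_size)) * 0.1
        self.W_hh = rng.standard_normal((hidden_size, hidden_size)) * 0.1
        self.W_hy = rng.standard_normal((output_size, hidden_size)) * 0.1
        self.hidden_size = hidden_size

    def run(self, inputs):
        h = np.zeros(self.hidden_size)   # initial state
        outputs = []
        for x_t in inputs:
            # New state depends on the previous state and the current input
            h = np.tanh(self.W_hh @ h + self.W_xh @ x_t)
            outputs.append(self.W_hy @ h)
        return np.array(outputs), h

rnn = VanillaRNN(input_size=3, hidden_size=4, output_size=2)
outputs, last_state = rnn.run(np.eye(3))   # a sequence of three one-hot inputs
print(outputs.shape)
```

Because the same weights are reused at every step and the state is carried forward, the final state depends on the order of the inputs, not just on which inputs appeared. LSTM and GRU replace this simple tanh update with gated updates.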

Examples: language modeling, speech recognition, machine translation, bots, image/video captioning

Algorithms:

Long Short Term Memory (LSTM)

GRU

Environment

Actor (agent)

Action -> (the agent acts on the environment)

<- state, reward (the environment returns the new state and a reward)

Q function

Q(state, action) -> quality (expected reward) of taking that action in that state

Policy

$\max Q = \max_{a'} Q(s, a')$

$\pi^*(s) = \arg\max_{a} Q(s, a)$

How do we learn Q?

$Q(s, a) \leftarrow r + \max_{a'} Q(s', a')$

Exploit vs. exploration

ε-greedy

Decaying ε-greedy

Add random noise
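A minimal sketch of the three exploration strategies for picking an action from a row of Q-values. The decay schedule `1 / (episode // 100 + 1)` and the noise scale `1 / (episode + 1)` are illustrative assumptions, not prescribed values.

```python
import numpy as np

def epsilon_greedy(q_values, epsilon, rng):
    # Explore with probability epsilon, otherwise exploit the best-known action
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))
    return int(np.argmax(q_values))

def decayed_epsilon(episode):
    # Decaying e-greedy (hypothetical schedule): explore a lot early, less later
    return 1.0 / (episode // 100 + 1)

def noisy_greedy(q_values, episode, rng):
    # Random-noise variant: add noise that shrinks over episodes, then take argmax
    noise = rng.standard_normal(len(q_values)) / (episode + 1)
    return int(np.argmax(q_values + noise))

rng = np.random.default_rng(0)
q = np.array([0.1, 0.7, 0.2])
print(epsilon_greedy(q, decayed_epsilon(500), rng), noisy_greedy(q, 500, rng))
```

Adding noise, unlike ε-greedy, tends to try the second-best actions more often than clearly bad ones, since the noise only needs to close a small gap.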

Discounted reward

$Q(s, a) \leftarrow r + \gamma \max_{a'} Q(s', a')$

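Non-deterministic (stochastic) environments

Learning rate

$\alpha = 0.1$

$Q(s, a) \leftarrow (1-\alpha)\,Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') \right]$

$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$ (the same update, rearranged)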
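A minimal tabular Q-learning sketch using the learning-rate update above, on a hypothetical 5-state "slippery" chain (not an environment from the notes): moving right from state 3 reaches the goal (reward 1), and each move slips to the opposite direction with probability 0.1. The values α = 0.1, γ = 0.9, ε = 0.1 and the episode counts are illustrative assumptions.

```python
import numpy as np

N_STATES, GOAL = 5, 4          # hypothetical chain: states 0..4, state 4 is the goal
LEFT, RIGHT = 0, 1

def step(state, action, rng, slip=0.1):
    # Stochastic ("slippery") move: with probability `slip` the other action happens
    if rng.random() < slip:
        action = 1 - action
    next_state = max(state - 1, 0) if action == LEFT else state + 1
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

def choose_action(q_row, epsilon, rng):
    # e-greedy with random tie-breaking among equally good actions
    if rng.random() < epsilon:
        return int(rng.integers(2))
    best = np.flatnonzero(q_row == q_row.max())
    return int(rng.choice(best))

def q_learning(episodes=3000, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = np.random.default_rng(seed)
    Q = np.zeros((N_STATES, 2))
    for _ in range(episodes):
        state = 0
        for _ in range(100):                 # cap the episode length
            action = choose_action(Q[state], epsilon, rng)
            next_state, reward, done = step(state, action, rng)
            # No future value after reaching the goal
            target = reward if done else reward + gamma * Q[next_state].max()
            # Q(s, a) <- Q(s, a) + alpha * [target - Q(s, a)]
            Q[state, action] += alpha * (target - Q[state, action])
            state = next_state
            if done:
                break
    return Q

Q = q_learning()
print(np.argmax(Q[:GOAL], axis=1))   # greedy action in each non-goal state
```

Because moves are random, the small α makes Q(s, a) a running average over many outcomes instead of overwriting it with the latest one; the greedy policy should come out as RIGHT (1) in every non-goal state.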

Q-table?

Too big for real-world problems (far too many states)

Q-function network (Q-network)

Input: state

Output: Q-values for all actions
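A minimal Q-network sketch, assuming a one-hot encoded state and a single linear layer (a deeper network would replace the one weight matrix). `LinearQNetwork` and its methods are hypothetical names. One forward pass returns Q-values for all actions, and each update takes one gradient step on the squared error between $Q(s, a)$ and a target such as $r + \gamma \max_{a'} Q(s', a')$.

```python
import numpy as np

class LinearQNetwork:
    """Hypothetical tiny Q-network: one-hot state in, one Q-value per action out."""

    def __init__(self, n_states, n_actions, seed=0):
        rng = np.random.default_rng(seed)
        self.n_states = n_states
        self.W = rng.uniform(0, 0.01, size=(n_states, n_actions))

    def one_hot(self, state):
        x = np.zeros(self.n_states)
        x[state] = 1.0
        return x

    def predict(self, state):
        # Output layer gives Q(s, a) for every action a at once
        return self.one_hot(state) @ self.W

    def update(self, state, action, target, learning_rate=0.1):
        # One gradient step on (Q(s, a) - target)^2; the constant factor 2
        # is folded into the learning rate
        x = self.one_hot(state)
        error = self.predict(state)[action] - target
        self.W[:, action] -= learning_rate * error * x

net = LinearQNetwork(n_states=16, n_actions=4)   # e.g. a 4x4 grid world (hypothetical)
gamma, reward, next_state = 0.99, 0.0, 5         # hypothetical transition values
target = reward + gamma * net.predict(next_state).max()
net.update(state=4, action=2, target=target)
```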

Q-Network problems

1. Correlations between samples

2. Non-stationary targets

Solutions (DQN)

1. Go deep

2. Experience replay -> store transitions in a buffer, then sample random minibatches from it

3. Separate target network
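A minimal sketch of the last two fixes: a replay buffer that samples random minibatches, and targets computed from a separate, periodically synced copy of the weights. It assumes a one-hot-state linear Q-network (so $Q(s', \cdot)$ is a row of the weight matrix); the class and function names and the sync period of 10 steps are hypothetical.

```python
import random
from collections import deque

import numpy as np

class ReplayBuffer:
    """Experience replay: store transitions, then sample random minibatches."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)   # oldest transitions fall out first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Random sampling breaks up the correlation between consecutive steps
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)

def dqn_targets(batch, target_W, gamma=0.99):
    # Targets come from the separate target network, not the network being trained.
    # Assumes a one-hot-state linear Q-network, so Q_target(s', .) is target_W[s'].
    return np.array([reward if done else reward + gamma * target_W[next_state].max()
                     for _, _, reward, next_state, done in batch])

SYNC_EVERY = 10   # hypothetical: copy the trained weights every 10 training steps

def maybe_sync(step_count, main_W, target_W):
    # Keep the target network frozen between syncs so the targets stay stable
    if step_count % SYNC_EVERY == 0:
        target_W[...] = main_W
```

Between syncs the targets stay fixed, so the network being trained is no longer chasing targets that move with every update it makes.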