Read the paper!

IF YOU ARE NEW HERE: CHECK OUT THE OVERVIEW AND INSTALLATION SECTIONS TO GET STARTED!

Our first major update has launched! It includes major changes to the workflow and the GUI.

- New features:
  - New manual refinement step: allows users to refine the classification of individual bouts.
  - Predict tab: ethograms, pie charts, stats and videos!
- Extended features:
  - Discover tab: split multiple behaviors simultaneously.
  - Data upload: the sample rate of annotation files can now be set explicitly during upload.
  - Active learning: the confidence threshold is now accessible in the GUI.
- Other changes:
  - Several UX improvements
  - Bug fixes
  - Increased performance during active learning
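An explicit annotation sample rate matters because annotation files and pose-estimation output are often sampled at different rates (for example, a 10 Hz BORIS export next to 30 fps pose data). The sketch below shows one way to map each pose frame onto the annotation row that covers it. It is a hypothetical illustration: the function names, the example rates and the assumption that both streams start at t = 0 s are ours, not A-SOiD's internal implementation.

```python
from fractions import Fraction


def annotation_rows_for_frames(n_frames, pose_fps, annot_hz):
    """Return, for each pose frame, the index of the annotation row covering it.

    Hypothetical helper: assumes both streams start at t = 0 s and that
    annotation row k labels the interval [k / annot_hz, (k + 1) / annot_hz).
    Fractions avoid floating-point rounding errors at row boundaries.
    """
    fps = Fraction(str(pose_fps))
    hz = Fraction(str(annot_hz))
    # frame / fps is the frame's timestamp; multiplying by hz and flooring
    # gives the annotation row whose interval contains that timestamp.
    return [(frame * hz) // fps for frame in range(n_frames)]


def labels_at_pose_rate(labels, pose_fps, annot_hz, n_frames):
    """Upsample per-row annotation labels to one label per pose frame."""
    rows = annotation_rows_for_frames(n_frames, pose_fps, annot_hz)
    last = len(labels) - 1
    # Clamp to the last annotation row in case the video runs slightly longer.
    return [labels[min(row, last)] for row in rows]


# Example: 10 Hz annotations aligned to 30 fps pose data.
print(annotation_rows_for_frames(7, pose_fps=30, annot_hz=10))  # [0, 0, 0, 1, 1, 1, 2]
print(labels_at_pose_rate(["rest", "groom", "run"], 30, 10, 9))
```

Setting the wrong rate would stretch or compress every label in time, which is why the rate is worth stating explicitly at upload.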

Please be aware that this update is not backwards compatible with previous versions of A-SOiD.

- Download or clone the latest version of A-SOiD from this repository.

- Create a new environment using the asoid.yml file (see below):

conda env create --file asoid.yml

- Activate the environment you installed A-SOiD in.

- Start A-SOiD and use it just like before.

asoid app

DeepLabCut [1,2,3], SLEAP [4], and OpenPose [5] have revolutionized the way behavioral scientists analyze data. These algorithms use advances in computer vision and deep learning to estimate poses automatically. Interpreting an animal's positions can be useful for studying behavior; however, positions alone do not capture the full dynamic range of naturalistic behaviors.

Behavior identification and quantification techniques have also developed rapidly. Researchers choose between supervised and unsupervised methods (such as B-SOiD [6]) based on their intrinsic strengths and weaknesses (e.g., user bias, training cost, complexity, action discovery).

Here, we present A-SOiD, a new active-learning platform that blends these strengths and, in doing so, overcomes several of their inherent drawbacks. A-SOiD iteratively learns user-defined behavior groups from a fraction of the usual training data, while achieving expansive classification through directed unsupervised classification.
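Iterative learning of this kind is commonly built on uncertainty sampling: a classifier trained on the current labels scores the remaining samples, and samples predicted with low confidence are prioritized for the next training round. A confidence threshold (A-SOiD exposes one in the GUI) decides which samples count as uncertain. The sketch below shows only that selection step; the function name, the 0.5 threshold and the list-of-probabilities input are hypothetical and do not reflect A-SOiD's code or defaults.

```python
def select_low_confidence(probabilities, threshold=0.5):
    """Return indices of samples whose highest class probability is below threshold.

    Hypothetical helper illustrating uncertainty sampling: `probabilities`
    holds one list of per-class probabilities for each sample.
    """
    selected = []
    for i, class_probs in enumerate(probabilities):
        confidence = max(class_probs)  # classifier's confidence in its top prediction
        if confidence < threshold:
            selected.append(i)
    return selected


probs = [
    [0.90, 0.05, 0.05],  # confident
    [0.40, 0.35, 0.25],  # uncertain
    [0.55, 0.40, 0.05],  # just above the threshold
    [0.30, 0.30, 0.40],  # uncertain
]
print(select_low_confidence(probs, threshold=0.5))  # [1, 3]
```

Raising the threshold marks more samples as uncertain in each round; lowering it marks fewer.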

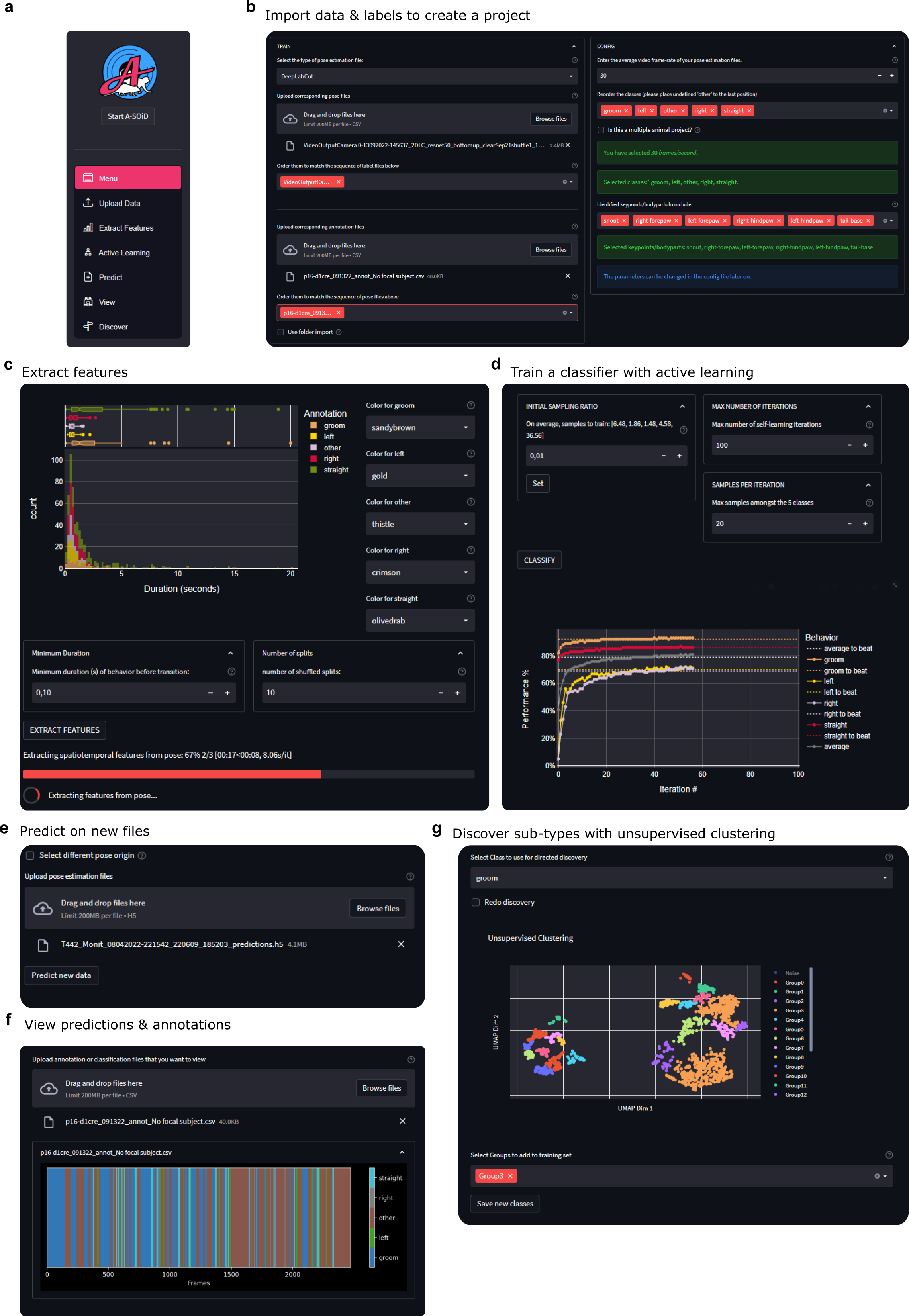

The A-SOiD app is a Streamlit-based application that integrates the core features of A-SOiD into a user-friendly GUI that requires no coding and can be downloaded and used on custom data.

For this, we developed a multi-step pipeline that guides users, regardless of their prior machine-learning experience, through the process of generating a well-trained classifier for their own use case.

In general, users provide a small labeled data set (ground truth) with behavioral categories of their choice, created with one of the many available labeling tools (e.g., BORIS [7]), or import data sets from their previous supervised machine-learning work. After the data are uploaded (see Fig. above, a), an A-SOiD project is created, including several parameters that let users select individual animals (in social data) and exclude body parts from the feature extraction.
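The animal and body-part selection determines which keypoints feed into feature extraction. The sketch below illustrates that filtering on a single frame and then computes pairwise Euclidean distances between the remaining keypoints. The dictionary layout, the function name and the distance-only feature set are illustrative assumptions; A-SOiD's actual features are described in the paper.

```python
import math
from itertools import combinations


def distance_features(frame, animals, excluded_bodyparts):
    """Pairwise Euclidean distances between the kept keypoints of one frame.

    Hypothetical illustration: `frame` maps (animal, bodypart) -> (x, y).
    Only keypoints of the selected `animals` whose body part is not in
    `excluded_bodyparts` are used.
    """
    kept = sorted(
        key for key in frame
        if key[0] in animals and key[1] not in excluded_bodyparts
    )
    features = {}
    for a, b in combinations(kept, 2):
        (xa, ya), (xb, yb) = frame[a], frame[b]
        features[(a, b)] = math.hypot(xa - xb, ya - yb)
    return features


frame = {
    ("mouse1", "nose"): (0.0, 0.0),
    ("mouse1", "tailbase"): (3.0, 4.0),
    ("mouse1", "tailtip"): (10.0, 4.0),
    ("mouse2", "nose"): (6.0, 8.0),
}
feats = distance_features(frame, animals={"mouse1"}, excluded_bodyparts={"tailtip"})
print(feats)  # {(('mouse1', 'nose'), ('mouse1', 'tailbase')): 5.0}
```

Excluding a noisy body part (here the tail tip) removes every feature that depends on it, and selecting one animal drops all inter-animal features.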

A-SOiD supports the following input types:

- BORIS, exported as binary files in 0.1 s time steps (10 Hz). Read the docs

- Any annotation files in this style (one-hot encoded), including an index that specifies the time steps in seconds.

You can see an example of this using pandas in our docs: Convert annotations to binary format
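As a dependency-free alternative to the pandas example in the docs, the sketch below turns event-style annotations (behavior, start and stop time in seconds) into the layout described above: one row per time step, one 0/1 column per behavior, and a time index in seconds. The function names, the `time` column header, the 10 Hz default and the half-open interval convention are assumptions for illustration; check the docs for the exact format A-SOiD expects.

```python
import csv
import io


def intervals_to_one_hot(events, behaviors, duration_s, sample_rate_hz=10):
    """Convert (behavior, start_s, stop_s) events into one-hot rows.

    Hypothetical helper: each row covers one time step of 1 / sample_rate_hz
    seconds and is labeled with the behavior whose half-open interval
    [start_s, stop_s) contains the step's start time.
    """
    n_steps = round(duration_s * sample_rate_hz)
    header = ["time"] + list(behaviors)
    rows = []
    for step in range(n_steps):
        t = step / sample_rate_hz  # start time of this step in seconds
        flags = [0] * len(behaviors)
        for behavior, start_s, stop_s in events:
            if start_s <= t < stop_s:
                flags[behaviors.index(behavior)] = 1
        if sum(flags) > 1:
            # One-hot encoding allows at most one active behavior per step.
            raise ValueError(f"overlapping behaviors at t = {t} s")
        rows.append([t] + flags)
    return header, rows


def to_csv(header, rows):
    """Serialize the table as CSV text (time index in the first column)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()


events = [("groom", 0.0, 0.3), ("run", 0.3, 0.6)]
header, rows = intervals_to_one_hot(events, ["groom", "run"], duration_s=0.6)
print(to_csv(header, rows))
# time,groom,run
# 0.0,1,0
# 0.1,1,0
# 0.2,1,0
# 0.3,0,1
# 0.4,0,1
# 0.5,0,1
```

Time steps not covered by any event stay all zeros, so gaps in your annotations show up as rows without an active behavior.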

A-SOiD requires only a standard computer with enough RAM to support model training during active learning. Clustering has higher CPU and RAM requirements. Refer to B-SOiD or our paper for details.

This package is supported on Windows and macOS, but can also be run on Linux given additional installation of the required packages.

For dependencies, please refer to the requirements.txt file. There are no additional requirements.

Note: This is a quick guide to get you started. For a more detailed guide, see Installation.

Clone this repository and create a new environment in which A-SOiD will be installed automatically (recommended). Requires Anaconda/Python 3.

- Change your current working directory to the location where you want the cloned directory to be made:

cd path/to/A-SOiD

- Clone it directly from GitHub:

git clone https://github.com/YttriLab/A-SOID.git

or download the ZIP file and unpack it where you want.

- Create a new environment using the asoid.yml file (see below):

conda env create --file asoid.yml

- Activate the environment you installed A-SOiD in.

conda activate asoid

- You can now run A-SOiD from inside your environment:

asoid app

We invite you to test A-SOiD using the CalMS21 data set. The data set can be used within the app by simply specifying the paths to the train and test set files (see below). While you can reproduce our figures using the provided notebooks, the data set also offers an easy first use case for getting familiar with all major steps in A-SOiD.

- Download the data set and convert it into npy format using their provided script.

- Run A-SOiD and select 'CalMS21 (paper)' in the Upload Data tab.

- Enter the full paths to both the train and test files from the first challenge (e.g. 'C:\Dataset\task1_classic_classification\calms21_task1_train.npy' and 'C:\Dataset\task1_classic_classification\calms21_task1_test.npy').

- Enter a directory and prefix to create the A-SOiD project in.

- Click on 'Preprocess' and follow the remaining workflow of the app. After successfully importing the data set, you can run the CalMS21 project like any other project in A-SOiD.

The overall runtime depends on your setup and the parameters set during training, but the workflow should be completed within 1 h of starting the project. Tested on: AMD Ryzen 9 6900HX (3.30 GHz), 16 GB RAM, Windows 11 Home.

A-SOiD was developed as a collaboration between the Yttri Lab and Schwarz Lab by:

Jens Tillmann, University of Bonn

Alex Hsu, Carnegie Mellon University

Martin Schwarz, University of Bonn

Eric Yttri, Carnegie Mellon University

Martin K. Schwarz SchwarzLab

Eric A. Yttri YttriLab

For recommended changes that you would like to see, open an issue.

There are many exciting avenues to explore based on this work. Please do not hesitate to contact us for collaborations.

If you are having issues, please check our issues page first to see whether a similar issue has already been solved. If it has not, please submit an issue with enough information for us to replicate it. Thank you!

A-SOiD is released under a Clear BSD License and is intended for research/academic use only.

If you use our work, please cite us and any additional resources you used:

Tillmann, J.F., Hsu, A.I., Schwarz, M.K. et al. A-SOiD, an active-learning platform for expert-guided, data-efficient discovery of behavior. Nat Methods (2024). https://doi.org/10.1038/s41592-024-02200-1

or see Cite Us