This is the implementation of Mixed-TD: Efficient Neural Network Accelerator with Layer-Specific Tensor Decomposition.

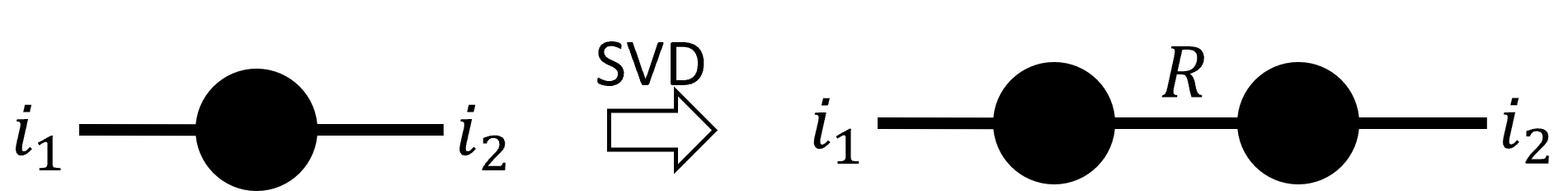

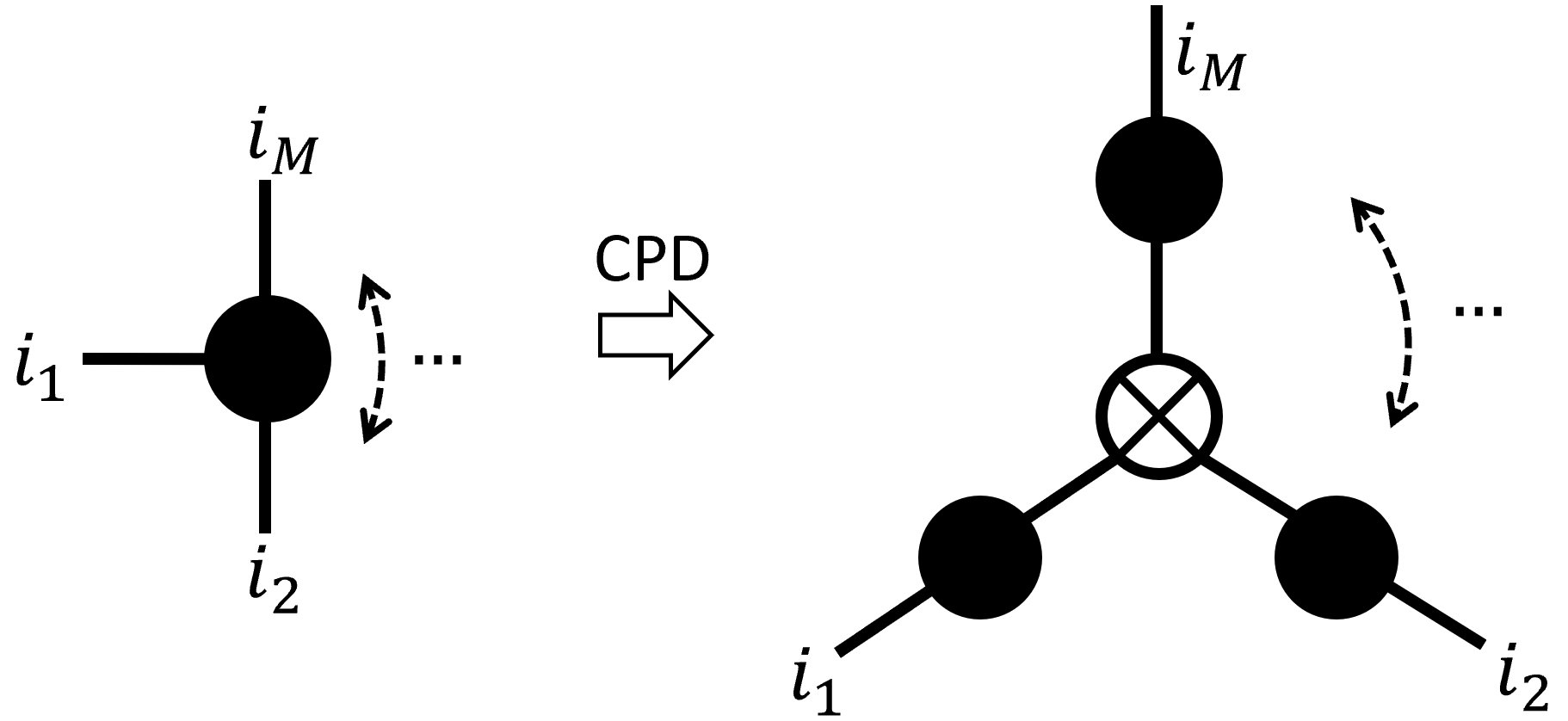

Mixed-TD is a framework that maps CNNs onto FPGAs using a novel tensor decomposition method. The method applies Singular Value Decomposition (SVD) and Canonical Polyadic Decomposition (CPD) in a mixed, layer-specific manner, achieving 1.73x to 10.29x the throughput per DSP of state-of-the-art accelerators.
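To make the idea of decomposing a convolution layer concrete, here is a minimal NumPy sketch of a truncated SVD applied to a single weight tensor. It is an illustration only, not code from this repository: the function names, the choice to flatten the weight into a `(C_out, C_in*K*K)` matrix, and the example shapes are all assumptions. The two helper functions compare parameter counts for a rank-`r` SVD split and a rank-`r` CPD of the same 4-way tensor.

```python
import numpy as np


def svd_decompose_conv(weight, rank):
    """Split a conv weight (C_out, C_in, K, K) into two low-rank factors.

    Assumption: the weight is flattened to a (C_out, C_in*K*K) matrix
    before the SVD. Other flattening schemes are possible.
    """
    c_out, c_in, k_h, k_w = weight.shape
    mat = weight.reshape(c_out, c_in * k_h * k_w)
    u, s, vt = np.linalg.svd(mat, full_matrices=False)
    # Fold the singular values into the left factor.
    left = u[:, :rank] * s[:rank]                       # acts like a 1x1 conv: rank -> C_out
    right = vt[:rank, :].reshape(rank, c_in, k_h, k_w)  # acts like a KxK conv: C_in -> rank
    return left, right


def svd_param_count(c_out, c_in, k, rank):
    # Parameters in the two SVD factors above.
    return rank * (c_out + c_in * k * k)


def cpd_param_count(c_out, c_in, k, rank):
    # A rank-r CPD of a 4-way tensor stores one factor matrix per mode.
    return rank * (c_out + c_in + 2 * k)


# Hypothetical layer: 32 input channels, 64 output channels, 3x3 kernel.
rng = np.random.default_rng(0)
weight = rng.standard_normal((64, 32, 3, 3))

left, right = svd_decompose_conv(weight, rank=16)
approx = (left @ right.reshape(16, -1)).reshape(weight.shape)
rel_error = np.linalg.norm(approx - weight) / np.linalg.norm(weight)

print("dense params:", weight.size)
print("SVD params:  ", svd_param_count(64, 32, 3, 16))
print("CPD params:  ", cpd_param_count(64, 32, 3, 16))
print("relative reconstruction error (SVD, rank 16):", rel_error)
```

For this hypothetical layer the dense weight holds 18432 parameters, the rank-16 SVD factors hold 5632, and a rank-16 CPD would hold 1632. The different compression and accuracy trade-offs of the two schemes are what make a per-layer choice between them worthwhile.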


```shell
git submodule update --init --recursive
conda create -n mixed-td python=3.10
conda activate mixed-td
pip install -r requirements.txt

export FPGACONVNET_OPTIMISER=~/Mixed-TD/fpgaconvnet-optimiser
export FPGACONVNET_MODEL=~/Mixed-TD/fpgaconvnet-optimiser/fpgaconvnet-model
export PYTHONPATH=$PYTHONPATH:$FPGACONVNET_OPTIMISER:$FPGACONVNET_MODEL

python search.py --gpu 0
```
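If the search fails with import errors, a common cause is that the environment variables above are unset or point to a directory that does not exist (for example, when the repository was not cloned into `~/Mixed-TD`). The following is a small, hypothetical helper for checking this. It is not part of the repository, and the function name `missing_paths` is made up for illustration.

```python
import os

# Variables exported in the setup commands above.
REQUIRED_VARS = ("FPGACONVNET_OPTIMISER", "FPGACONVNET_MODEL")


def missing_paths(env=os.environ):
    """Return the names of required variables that are unset or not directories."""
    return [
        name
        for name in REQUIRED_VARS
        if not os.path.isdir(os.path.expanduser(env.get(name, "")))
    ]


if __name__ == "__main__":
    problems = missing_paths()
    if problems:
        print("Unset or invalid paths:", ", ".join(problems))
    else:
        print("Environment variables look fine.")
```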

todo

```bibtex
@article{yu2023mixed,
  title={Mixed-TD: Efficient Neural Network Accelerator with Layer-Specific Tensor Decomposition},
  author={Yu, Zhewen and Bouganis, Christos-Savvas},
  journal={arXiv preprint arXiv:2306.05021},
  year={2023}
}
```