Supplementary materials for the paper "Rule-Embedded Network for Audio-Visual Voice Activity Detection in Live Musical Video Streams". The paper is available at: https://arxiv.org/abs/2010.14168

Video demos are available here: https://yuanbo2020.github.io/Audio-Visual-VAD/

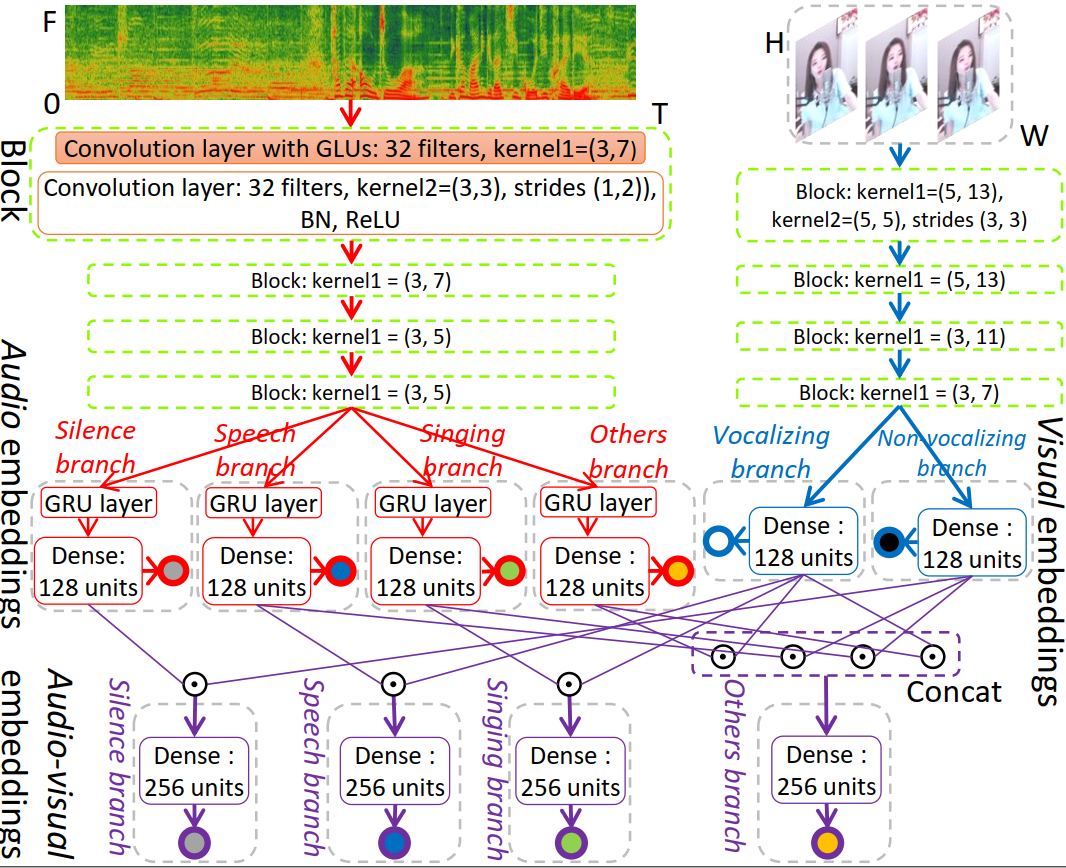

The left part is the audio branch (red text), which learns high-level acoustic features of the target events from the audio. The right part is the image branch (blue text), which uses visual information to judge whether the anchor is vocalizing. The bottom part is the Audio-Visual branch (purple italics), which fuses the bimodal representations to estimate the probabilities of the target events in this paper.
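The rule embedded in the network and the way the branches are fused are described in the paper. Purely as an illustration of how audio and visual evidence can be combined by a rule, the sketch below works on a single frame's output probabilities rather than on learned representations. The function name `fuse_frame` and the rule itself (the audio Singing/Speech probability is kept only in proportion to the visual probability that the anchor is vocalizing, and the rest is moved to Others) are assumptions for this example, not the paper's actual fusion.

```python
from typing import Dict

# Audio-branch classes, as plotted in subgraph (a) of the figure.
AUDIO_CLASSES = ("Singing", "Speech", "Others", "Silence")


def fuse_frame(audio_probs: Dict[str, float], p_vocalizing: float) -> Dict[str, float]:
    """Hypothetical rule-style fusion for one frame (NOT the paper's exact method).

    Assumed rule: audio Singing/Speech is attributed to the anchor only in
    proportion to the visual probability that the anchor is vocalizing;
    the remaining voice mass is reassigned to Others.
    """
    if not 0.0 <= p_vocalizing <= 1.0:
        raise ValueError("p_vocalizing must be in [0, 1]")
    total = sum(audio_probs[c] for c in AUDIO_CLASSES)
    if abs(total - 1.0) > 1e-6:
        raise ValueError("audio probabilities must sum to 1")

    voice = audio_probs["Singing"] + audio_probs["Speech"]
    return {
        # Anchor's own singing/speech, gated by the visual evidence.
        "Singing": audio_probs["Singing"] * p_vocalizing,
        "Speech": audio_probs["Speech"] * p_vocalizing,
        # Voice that the image branch does not attribute to the anchor.
        "Others": audio_probs["Others"] + voice * (1.0 - p_vocalizing),
        # Silence is decided by the audio branch alone in this sketch.
        "Silence": audio_probs["Silence"],
    }
```

For example, `fuse_frame({"Singing": 0.7, "Speech": 0.1, "Others": 0.1, "Silence": 0.1}, 0.9)` keeps most of the singing probability, while the same audio with `p_vocalizing=0.0` moves all of it to Others. Reallocating mass instead of discarding it keeps the four outputs a valid distribution.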

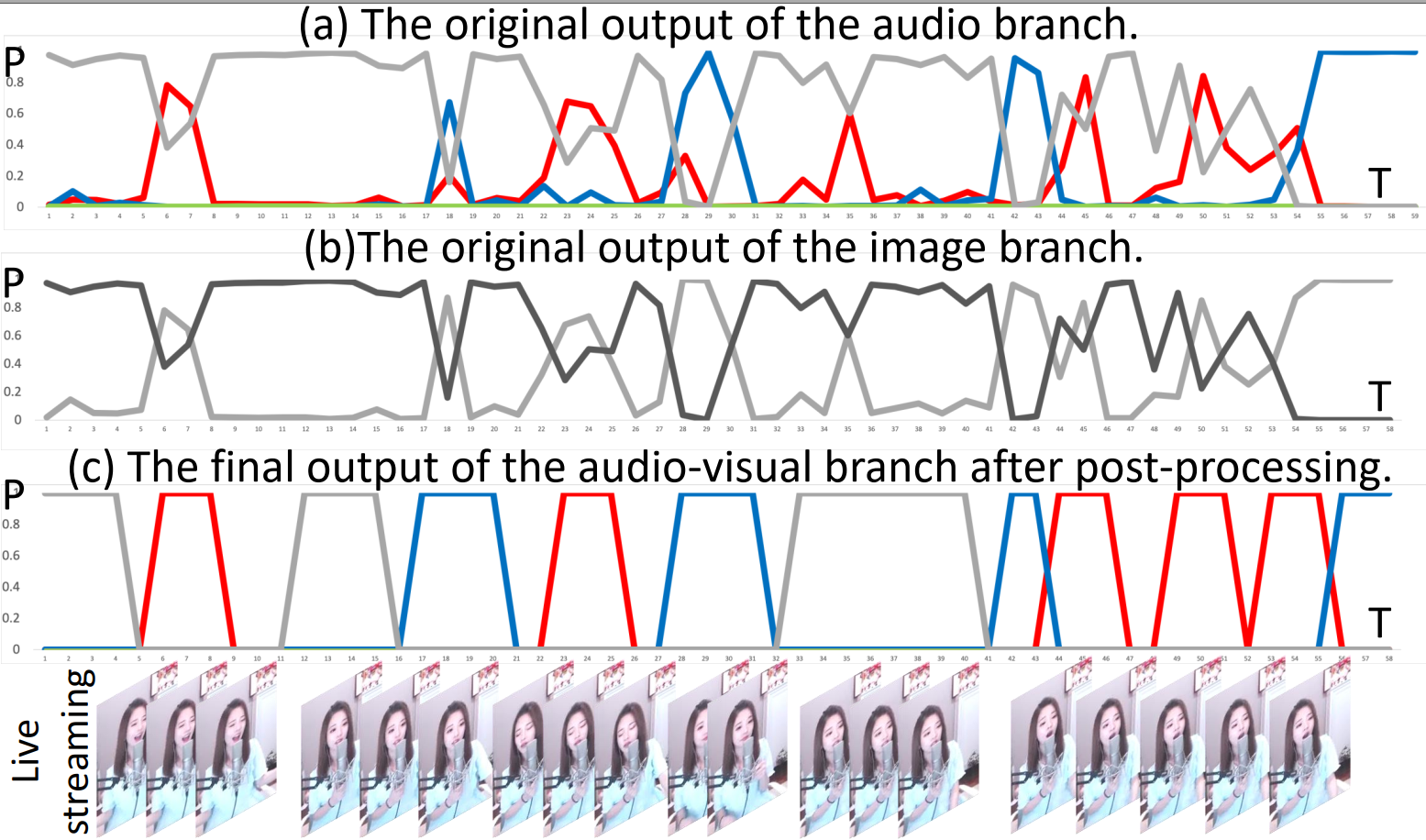

In subgraph (a), the red, blue, gray and green lines denote the probabilities of Singing, Speech, Others and Silence in the audio, respectively.

In subgraph (b), the gray and black lines denote the probabilities of vocalizing and non-vocalizing, respectively.

In subgraph (c), the red, blue and gray lines denote the probabilities of the target events Singing, Speech and Others, respectively; the remaining part corresponds to Silence.
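To turn curves like those in subgraph (c) into frame-level labels and time segments, one could read Silence as the probability mass left over after Singing, Speech and Others, pick the most probable class in each frame, and merge consecutive frames with the same label. The sketch below assumes that reading of "the remaining part" and a hypothetical fixed frame hop `hop_s` in seconds; `frame_labels` and `to_segments` are illustrative helpers, not part of the released code.

```python
from itertools import groupby
from typing import List, Sequence, Tuple


def frame_labels(singing: Sequence[float], speech: Sequence[float],
                 others: Sequence[float]) -> List[str]:
    """Pick the most probable class per frame (hypothetical helper).

    Silence is assumed to be the leftover mass 1 - (Singing + Speech + Others),
    clipped at 0 in case the three curves sum to slightly more than 1.
    """
    if not len(singing) == len(speech) == len(others):
        raise ValueError("all probability curves must have the same length")
    labels = []
    for s, p, o in zip(singing, speech, others):
        scores = {
            "Singing": s,
            "Speech": p,
            "Others": o,
            "Silence": max(0.0, 1.0 - (s + p + o)),
        }
        # Class name with the highest score in this frame.
        labels.append(max(scores, key=scores.get))
    return labels


def to_segments(labels: Sequence[str], hop_s: float) -> List[Tuple[str, float, float]]:
    """Merge runs of identical frame labels into (label, start_s, end_s) segments."""
    segments = []
    start = 0
    for label, run in groupby(labels):
        n = sum(1 for _ in run)  # length of this run of identical labels
        segments.append((label, start * hop_s, (start + n) * hop_s))
        start += n
    return segments
```

For example, with `hop_s=0.5`, the frame labels `["Singing", "Singing", "Speech", "Silence"]` become `[("Singing", 0.0, 1.0), ("Speech", 1.0, 1.5), ("Silence", 1.5, 2.0)]`.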

For the source code, video demos and the open dataset MAVC100 released with this paper, please see the Code, Video_demos and Open_dataset_MAVC100 folders, respectively.

For a more intuitive view of the results, see the project page: https://yuanbo2020.github.io/Audio-Visual-VAD/

Please feel free to use the open dataset MAVC100, and consider citing our paper:

    @INPROCEEDINGS{icassp2021_hou,
      author={Hou, Yuanbo and Deng, Yi and Zhu, Bilei and Ma, Zejun and Botteldooren, Dick},
      booktitle={ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
      title={Rule-Embedded Network for Audio-Visual Voice Activity Detection in Live Musical Video Streams},
      year={2021},
      volume={},
      number={},
      pages={4165-4169},
      doi={10.1109/ICASSP39728.2021.9413418}
    }