by Yueming Jin, Yonghao Long, Xiaojie Gao, Danail Stoyanov, Qi Dou, Pheng-Ann Heng.

-

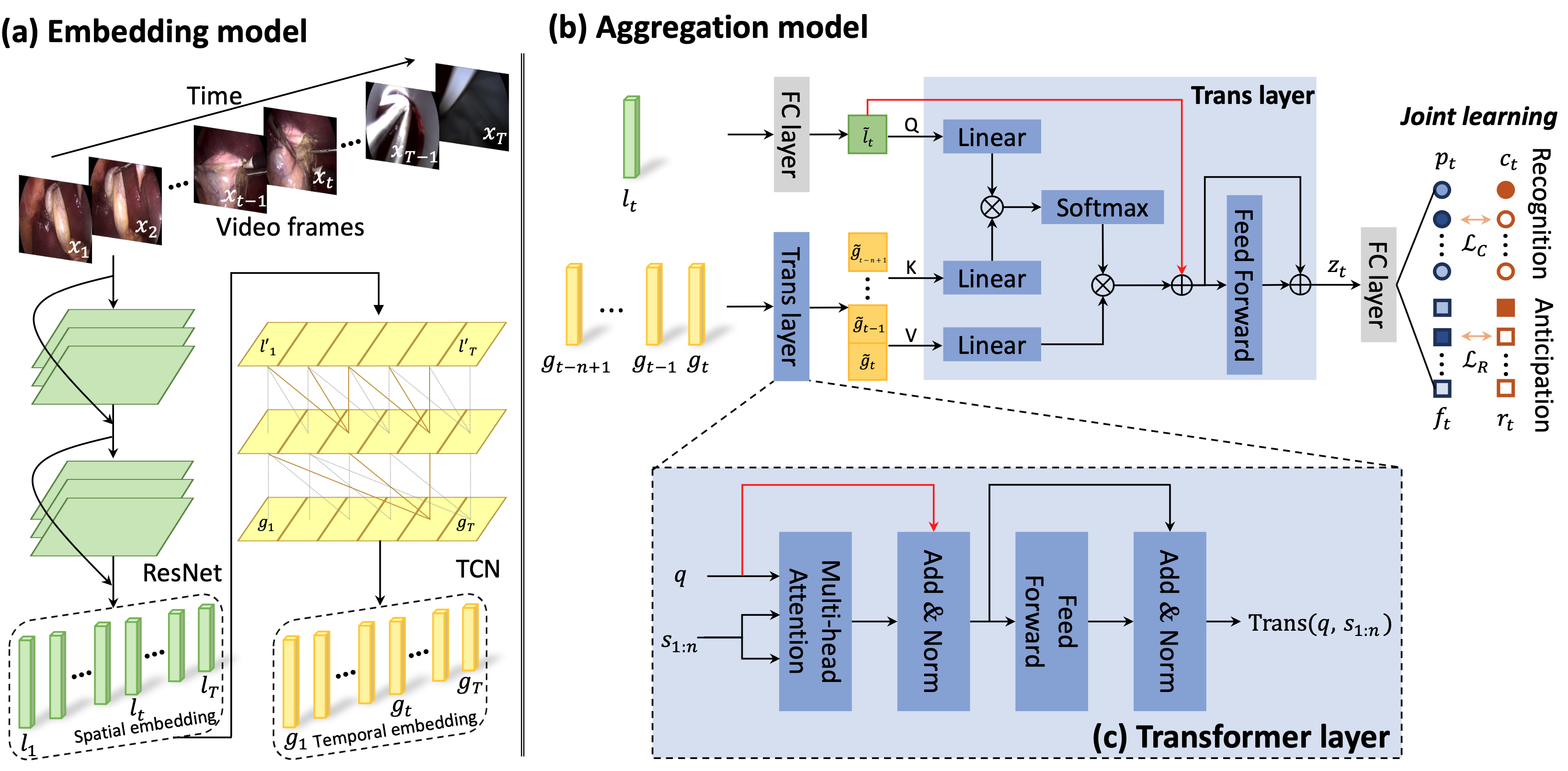

The Pytorch implementation for our paper 'Trans-SVNet: hybrid embedding aggregation Transformer for surgical workflow analysis', accepted at International Journal of Computer Assisted Radiology and Surgery (IJCARS).

-

This is the extension version of our 2021 MICCAI paper, and tackles both workflow recognition and workflow anticipation.

-

We validate our method on two types of surgeries with three datasets Cholec80, M2CAI 2016 Challenge and CATARACTS.

- Please refer to the TMRNet repository for data preprocessing.

- Check dependencies:
  - pytorch 1.0+
  - opencv-python
  - numpy
  - sklearn

- Clone this repo: `git clone https://github.com/YuemingJin/Trans-SVNet_Journal`

- Generate labels and prepare data path information:
  - Run `generate_phase_anticipation.py` to generate the workflow anticipation labels.
  - Run `get_paths_labels.py` to generate the files needed for training.

- Training:
  - Run `train_embedding.py` to train the ResNet50 backbone.
  - Run `generate_LFB.py` to generate spatial embeddings.
  - Run `tecno.py` to train the TCN for temporal modeling.
  - Run `tecno_trans.py` to train the Transformer.
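The scripts listed above are meant to be run in a fixed order, so a small driver can help keep that order straight. The following is only a sketch, not part of the repository: it assumes the scripts live in `./code_80/`, that each one can be launched with the Python interpreter without extra arguments, and that the listed dependencies are imported as `torch`, `cv2`, `numpy`, and `sklearn`. The helper names (`missing_dependencies`, `build_commands`, `run_pipeline`) are hypothetical. By default it only prints the commands (dry run).

```python
import importlib.util
import subprocess
import sys

# Listed dependency -> import name (pytorch is imported as torch,
# opencv-python as cv2).
DEPENDENCIES = {
    "pytorch": "torch",
    "opencv-python": "cv2",
    "numpy": "numpy",
    "sklearn": "sklearn",
}

# Script order as given in this README, with the purpose of each step.
STAGES = [
    ("generate_phase_anticipation.py", "workflow anticipation labels"),
    ("get_paths_labels.py", "files needed for training"),
    ("train_embedding.py", "ResNet50 backbone"),
    ("generate_LFB.py", "spatial embeddings"),
    ("tecno.py", "TCN for temporal modeling"),
    ("tecno_trans.py", "Transformer"),
]


def missing_dependencies():
    """Return the listed packages whose import name cannot be located."""
    return [
        package
        for package, module in DEPENDENCIES.items()
        if importlib.util.find_spec(module) is None
    ]


def build_commands():
    """Build one command per stage.

    ASSUMPTION: every script runs without command-line arguments.
    """
    return [[sys.executable, script] for script, _ in STAGES]


def run_pipeline(code_dir="./code_80", dry_run=True):
    """Print (and, unless dry_run is set, execute) the stages in order."""
    missing = missing_dependencies()
    if missing:
        print("Missing dependencies:", ", ".join(missing))
        if not dry_run:
            raise RuntimeError("install the missing dependencies first")
    for (script, purpose), command in zip(STAGES, build_commands()):
        print(f"[{purpose}] {' '.join(command)}")
        if not dry_run:
            # ASSUMPTION: scripts are launched from inside code_dir.
            # check=True stops the pipeline at the first failing stage.
            subprocess.run(command, check=True, cwd=code_dir)


if __name__ == "__main__":
    run_pipeline(dry_run=True)
```

Calling `run_pipeline(dry_run=False)` would actually launch each stage and stop at the first failure. If the scripts need arguments or a different working directory, adjust `build_commands` accordingly.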

Our trained models can be downloaded from Dropbox.

- Run `trans_SV_output.py` to generate the predictions for evaluation.

We evaluate our method with the M2CAI challenge evaluation protocol. Please refer to the TMRNet repository for the evaluation script.
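For a quick sanity check before running the official script, plain frame-wise metrics can be computed directly from predicted and ground-truth phase indices. The sketch below is not the M2CAI evaluation script and does not reproduce any challenge-specific rules. It assumes the predictions and labels are already available as two equal-length sequences of integer phase indices; the function name `framewise_metrics` is hypothetical.

```python
def framewise_metrics(y_true, y_pred, num_phases):
    """Frame-wise accuracy plus per-phase precision, recall and Jaccard.

    y_true, y_pred: equal-length sequences of integer phase indices.
    A metric is None when its denominator is zero for that phase.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")

    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    per_phase = {}
    for phase in range(num_phases):
        tp = sum(t == phase and p == phase for t, p in pairs)
        fp = sum(t != phase and p == phase for t, p in pairs)
        fn = sum(t == phase and p != phase for t, p in pairs)
        per_phase[phase] = {
            "precision": tp / (tp + fp) if tp + fp else None,
            "recall": tp / (tp + fn) if tp + fn else None,
            "jaccard": tp / (tp + fp + fn) if tp + fp + fn else None,
        }
    return accuracy, per_phase


if __name__ == "__main__":
    # Toy example with three phases and six frames (made-up values).
    truth = [0, 0, 1, 1, 2, 2]
    preds = [0, 1, 1, 1, 2, 0]
    acc, phases = framewise_metrics(truth, preds, num_phases=3)
    print(f"accuracy = {acc:.3f}")
    for phase, scores in phases.items():
        print(phase, scores)
```

Reported numbers should still come from the evaluation script in the TMRNet repository, since this sketch only covers the basic frame-wise definitions.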

Note:

We take the training and testing procedure for the Cholec80 dataset (folder: ./code_80/) as an example.

For the M2CAI dataset, the same code can be used.

For the CATARACTS (CATA) dataset, the code is in the ./code_CATA/ folder, and the training and testing procedure is the same.

If this repository is useful for your research, please cite:

    @ARTICLE{jin2022trans,
      author={Jin, Yueming and Long, Yonghao and Gao, Xiaojie and Stoyanov, Danail and Dou, Qi and Heng, Pheng-Ann},
      journal={International Journal of Computer Assisted Radiology and Surgery},
      title={Trans-SVNet: hybrid embedding aggregation Transformer for surgical workflow analysis},
      volume={17},
      number={12},
      pages={2193--2202},
      year={2022},
      publisher={Springer}
    }

For further questions about the code or paper, please contact ymjin5341@gmail.com.