Solve the CartPole-v0 with Monte-Carlo Policy Gradient (REINFORCE)!

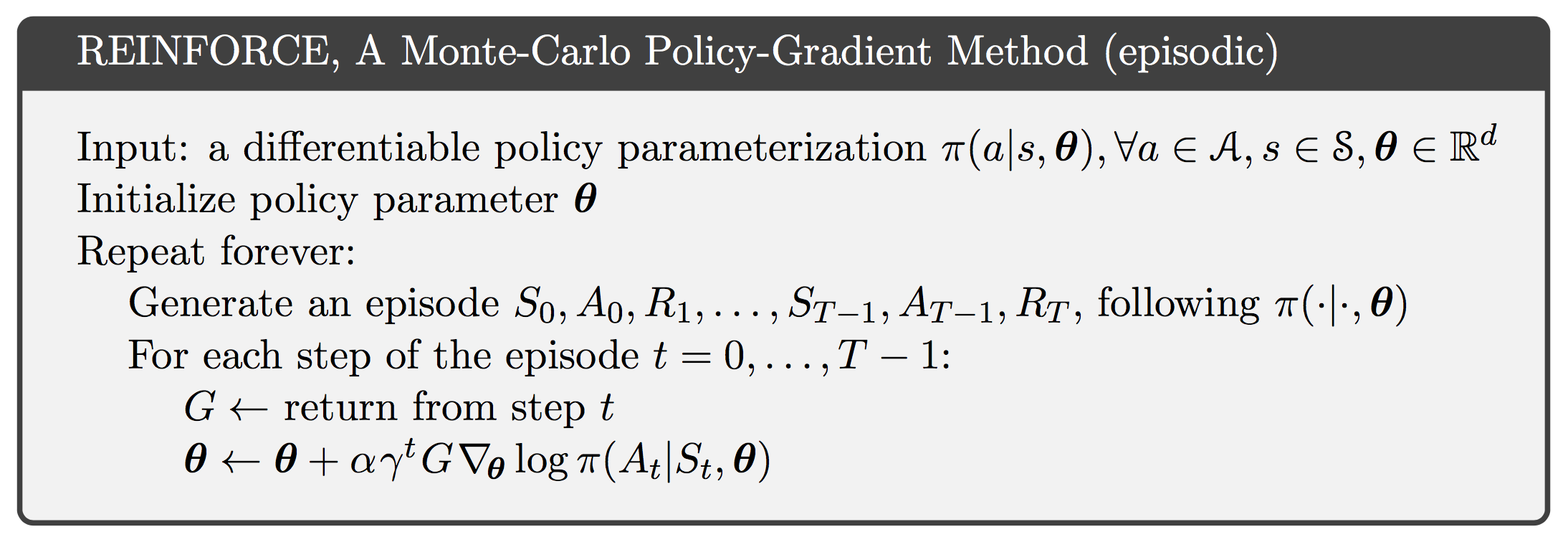

Take a look at the boxed pseudocode below.

$G_t$: return (cumulative discounted reward) following time $t$

$\pi(A_t \mid S_t, \theta)$: probability of taking action $A_t$ in state $S_t$

My interpretation of this method is that the actions selected more frequently are the more beneficial choices, thus we try to repeat these actions if similar states are visited.

The boxed pseudocode is from *Reinforcement Learning: An Introduction* by Richard S. Sutton and Andrew G. Barto.

In my code, the weight-update loop iterates from $t = T-1$ to $t = 0$, and the cumulative discounted reward is computed by $G_t = R_{t+1} + \gamma G_{t+1}$.
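A backward pass over one episode's rewards can be sketched in plain Python as follows. This is a minimal illustration, not the code from this repository: the function name `discounted_returns`, the default `gamma`, and the convention that `rewards[t]` holds the reward received after step `t` are all assumptions made for the example.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return [G_0, ..., G_{T-1}] for one episode.

    Assumes rewards[t] is the reward received after taking the action at step t.
    """
    returns = [0.0] * len(rewards)
    running = 0.0  # return after the final step: nothing more is collected
    # Walk backwards so each return reuses the one computed for the next step.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


print(discounted_returns([1.0, 1.0, 1.0], gamma=0.5))  # [1.75, 1.5, 1.0]
```

Iterating backwards keeps the computation linear in the episode length, because every return is obtained from its successor with a single multiply and add.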

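Besides, the term $\gamma^t$ is omitted from the update $\theta \leftarrow \theta + \alpha \gamma^t G_t \nabla_\theta \ln \pi(A_t \mid S_t, \theta)$.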

Since the optimizer will minimize the loss, we will need to multiply the product of $G_t$ and $\ln \pi(A_t \mid S_t, \theta)$ by $-1$.
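The sign flip can be illustrated with a small sketch in plain Python. The helper `reinforce_loss` and the sample numbers are hypothetical and only show the arithmetic; in an autograd framework the same expression would be built from tensors so that minimizing it performs gradient ascent on the return-weighted log-probabilities.

```python
import math


def reinforce_loss(log_probs, returns):
    """Negated sum over one episode of G_t * ln pi(A_t | S_t, theta)."""
    # The leading minus turns the quantity we want to maximize into a
    # quantity that a loss-minimizing optimizer can work with.
    return -sum(g * lp for lp, g in zip(log_probs, returns))


# Hypothetical two-step episode: action probabilities 0.5 and 0.25,
# with returns 2.0 and 1.0 following those steps.
log_probs = [math.log(0.5), math.log(0.25)]
returns = [2.0, 1.0]
loss = reinforce_loss(log_probs, returns)
```

With positive returns the loss is positive, and it shrinks as the probabilities of the chosen actions move toward 1, which is the direction the optimizer pushes the policy.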