This repository provides the implementation of the domains, DEHRL, and the result visualization for the paper:

- Diversity-Driven Extensible Hierarchical Reinforcement Learning. Yuhang Song, Jianyi Wang†, Thomas Lukasiewicz∗, Zhenghua Xu∗, Mai Xu. † Co-first author. ∗ Corresponding author. [Oral Presentation Slides], [Paper], [Supplementary Material], [arXiv].

The paper is published in the Proceedings of the 33rd National Conference on Artificial Intelligence (AAAI 2019) as an oral presentation. The work was conducted by the Intelligent Systems Lab @ Computer Science at the University of Oxford.

Specifically, this repository includes simple guidelines to:

If you find our code or paper useful for your research, please cite:

@inproceedings{SWLXX-AAAI-2019,

title = "Diversity-Driven Extensible Hierarchical Reinforcement Learning",

author = "Yuhang Song and Jianyi Wang and Thomas Lukasiewicz and Zhenghua Xu and Mai Xu",

booktitle = "Proceedings of the 33rd National Conference on Artificial Intelligence (AAAI 2019)",

year = "2019",

month = "January",

publisher = "AAAI Press",

}

Note that the project is based on an RL implementation by Ilya Kostrikov; we thank him for his contribution to the community.

All domains share the same interface as OpenAI Gym.

See overcooked.py, minecraft.py, and gridworld.py for more details.
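As a rough illustration of what sharing the OpenAI Gym interface means, the sketch below steps a toy environment through reset() and step(). ToyGridEnv is a hypothetical stand-in written for this example; it is not one of the classes in overcooked.py, minecraft.py, or gridworld.py, and it assumes the classic Gym convention in which step() returns (observation, reward, done, info).

```python
import random


class ToyGridEnv:
    """Hypothetical stand-in for a Gym-style domain (not from this repository)."""

    def __init__(self, size=5, episode_length_limit=32):
        self.size = size
        self.episode_length_limit = episode_length_limit
        self.num_actions = 4  # up, down, left, right
        self.position = None
        self.steps = 0

    def reset(self):
        """Start a new episode and return the first observation."""
        self.position = [self.size // 2, self.size // 2]
        self.steps = 0
        return tuple(self.position)

    def step(self, action):
        """Apply one action; return (observation, reward, done, info)."""
        dx, dy = [(0, -1), (0, 1), (-1, 0), (1, 0)][action]
        # Clamp the position so the agent stays inside the grid.
        self.position[0] = min(max(self.position[0] + dx, 0), self.size - 1)
        self.position[1] = min(max(self.position[1] + dy, 0), self.size - 1)
        self.steps += 1
        done = self.steps >= self.episode_length_limit
        return tuple(self.position), 0.0, done, {}


def run_random_episode(env, seed=0):
    """Roll out one episode with uniformly random actions."""
    rng = random.Random(seed)
    obs = env.reset()
    done = False
    trajectory = [obs]
    while not done:
        obs, reward, done, info = env.step(rng.randrange(env.num_actions))
        trajectory.append(obs)
    return trajectory


if __name__ == "__main__":
    print(len(run_random_episode(ToyGridEnv())))
```

Any code written against this reset()/step() loop should, in principle, work with the domains in this repository as well, subject to their own observation and action spaces.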

Note that MineCraft is based on the implementation by Michael Fogleman; we thank him for his contribution to the community.

Also be aware that MineCraft supports only single-threaded training and requires an X server.

To install the requirements, run:

# create env

conda create -n dehrl python=3.6.7 -y

# source in env

source ~/.bashrc

source activate dehrl

pip install --upgrade torch torchvision

pip install --upgrade tensorflow

pip install opencv-contrib-python

conda install scikit-image -y

pip install --upgrade imutils

pip install gym[atari]

mkdir dehrl

cd dehrl

git clone https://github.com/YuhangSong/DEHRL.git

mkdir results

cd DEHRL

pip install -r requirements.txt

Run the following commands to enter the virtual environment before proceeding to the commands below:

source ~/.bashrc

source activate dehrl

The OverCooked domain is loosely inspired by a game of the same name (though it is significantly different and coded by ourselves).

We thank its creators for this inspiration.

Goal-type: any.

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "OverCooked" --reward-level 1 --setup-goal any --new-overcooked --num-hierarchy 2 --num-subpolicy 5 --hierarchy-interval 4 --num-steps 128 128 --reward-bounty 0.1875 --distance mass_center --transition-model-mini-batch-size 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

Goal-type: fix

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "OverCooked" --reward-level 1 --setup-goal fix --new-overcooked --num-hierarchy 2 --num-subpolicy 5 --hierarchy-interval 4 --num-steps 128 128 --reward-bounty 0.1875 --distance mass_center --transition-model-mini-batch-size 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

Goal-type: random

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "OverCooked" --reward-level 1 --setup-goal random --new-overcooked --num-hierarchy 2 --num-subpolicy 5 --hierarchy-interval 4 --num-steps 128 128 --reward-bounty 0.1875 --distance mass_center --transition-model-mini-batch-size 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

Goal-type: any

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "OverCooked" --reward-level 2 --setup-goal any --new-overcooked --num-hierarchy 3 --num-subpolicy 5 5 --hierarchy-interval 4 12 --num-steps 128 128 128 --reward-bounty 1 --distance mass_center --transition-model-mini-batch-size 64 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

Goal-type: fix

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "OverCooked" --reward-level 2 --setup-goal fix --new-overcooked --num-hierarchy 3 --num-subpolicy 5 5 --hierarchy-interval 4 12 --num-steps 128 128 128 --reward-bounty 1 --distance mass_center --transition-model-mini-batch-size 64 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

Goal-type: random

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "OverCooked" --reward-level 2 --setup-goal random --new-overcooked --num-hierarchy 3 --num-subpolicy 5 5 --hierarchy-interval 4 12 --num-steps 128 128 128 --reward-bounty 1 --distance mass_center --transition-model-mini-batch-size 64 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

The following curves were produced at commit 7ea3aec9eabcd421d5660042d3e50333454f928e.

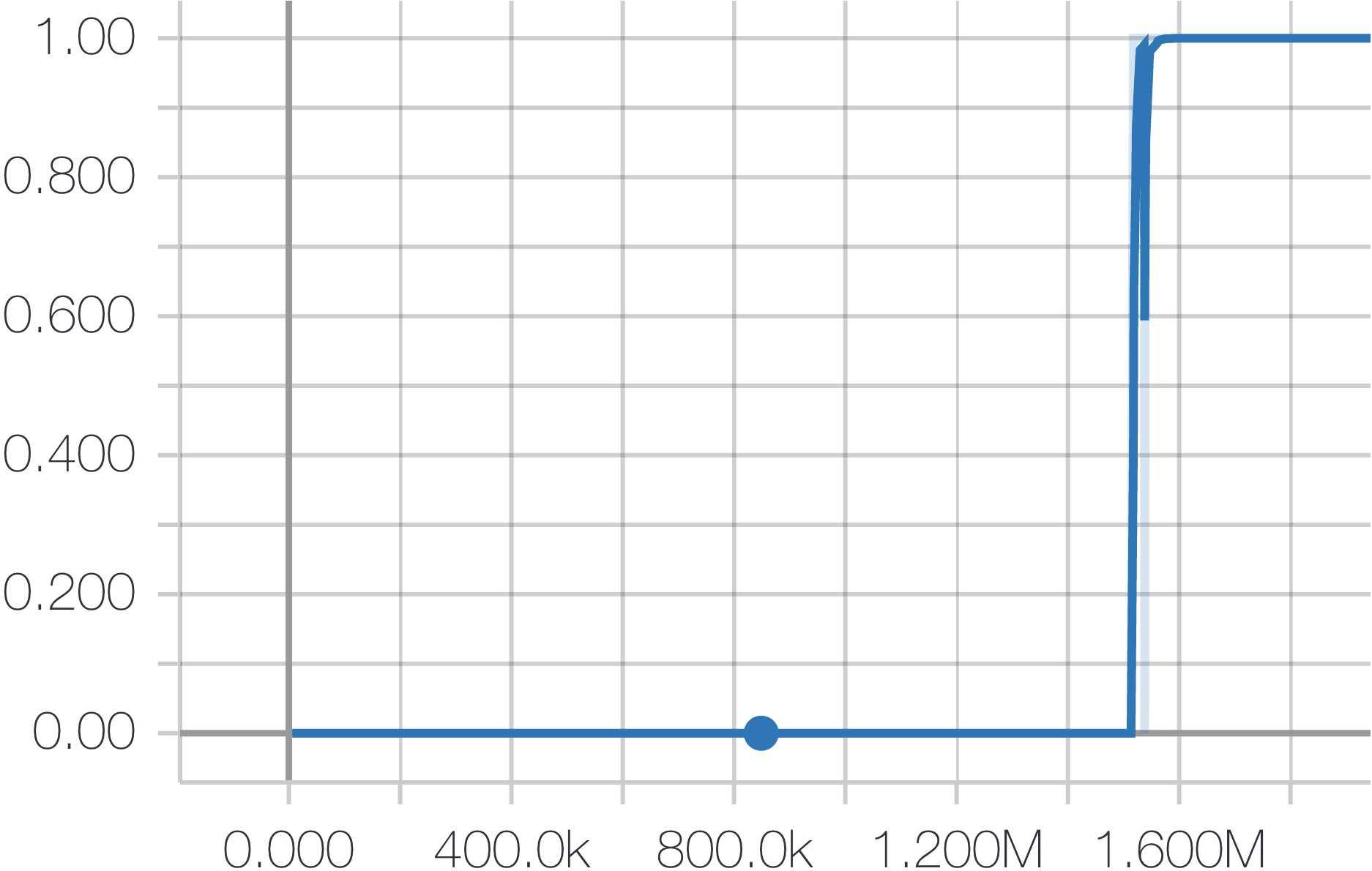

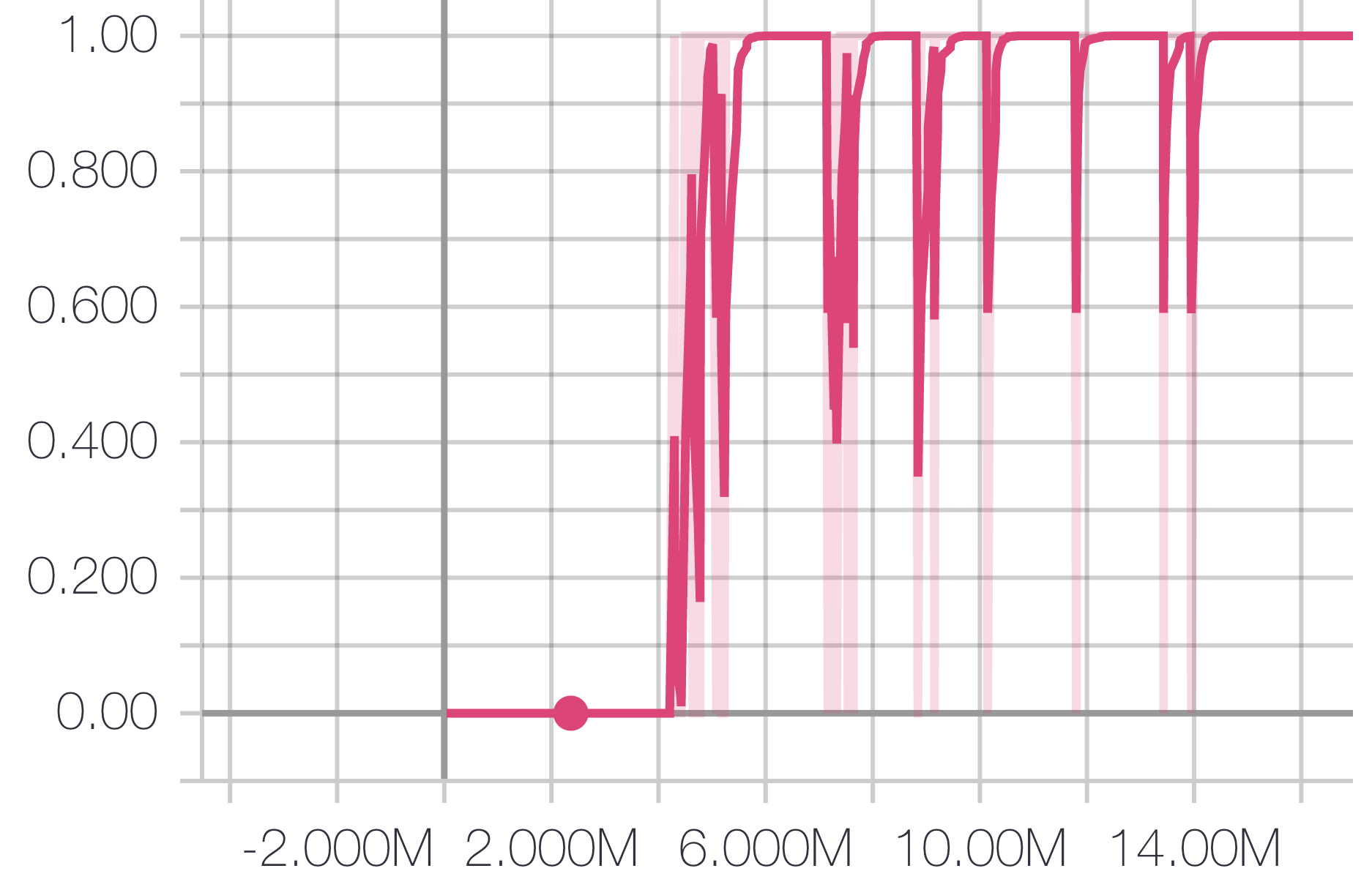

| Level 1; Any | Level 1; Fix | Level 1; Random |
|---|---|---|

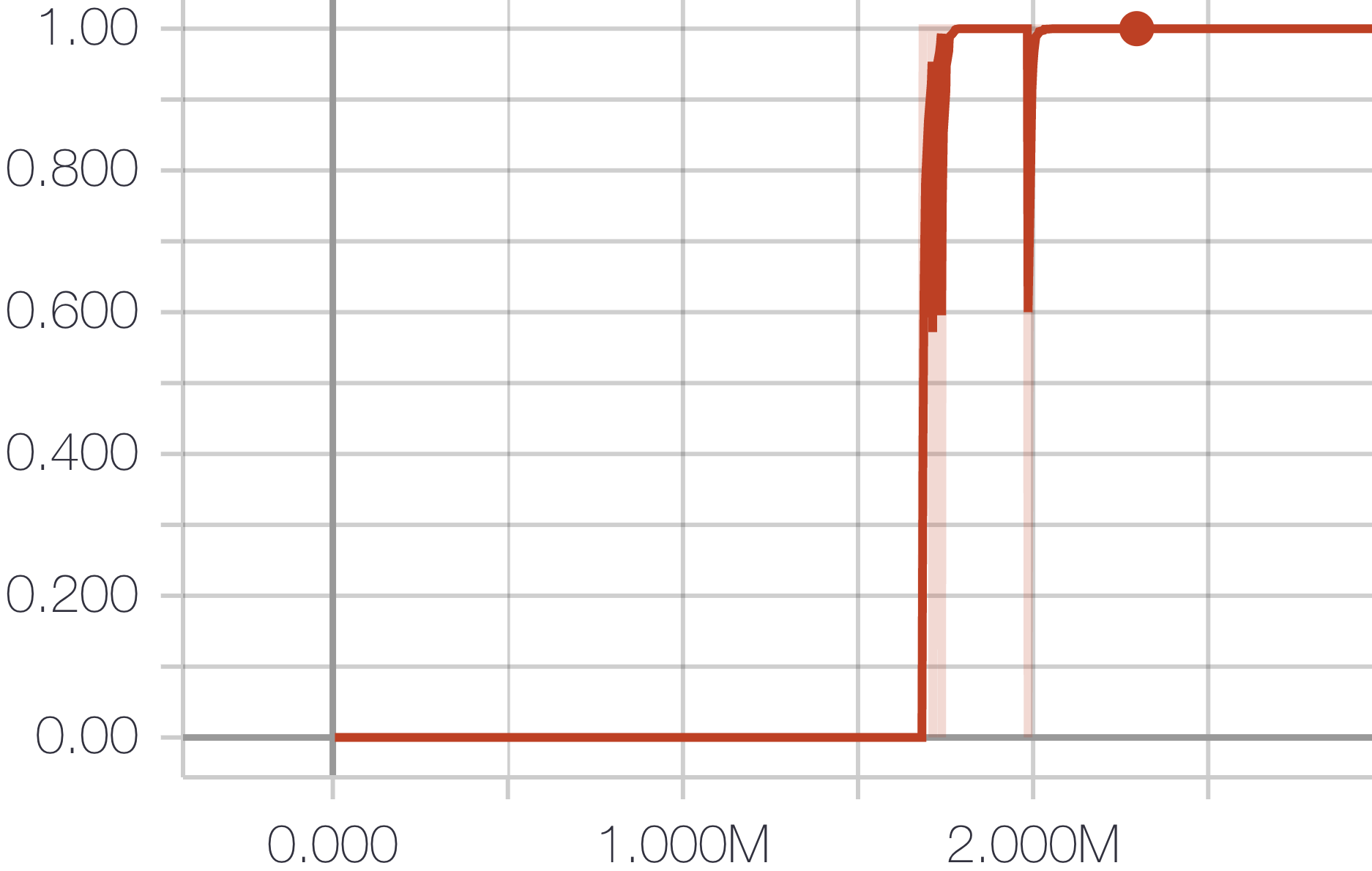

| Level 2; Any | Level 2; Fix | Level 2; Random |
|---|---|---|

If you cannot reproduce the curves above, check out the commit above and run the commands again. In the meantime, please open an issue right away, since it likely means we introduced unintentional changes to the code while adding more domains.

The code automatically checks and reloads everything from the log dir you set, and everything (model, curves, videos, etc.) is saved every 10 minutes. You can therefore safely continue an experiment from where it stopped by simply running the same command again.
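The save-and-resume behaviour described above can be pictured with the following minimal sketch. It is not the checkpointing code of this repository: PeriodicCheckpointer, the checkpoint.json file name, and the toy train loop are all hypothetical, and the state is a plain dictionary rather than a model. It only shows the general pattern of saving on a timer and reloading from the log dir when the same command is run again.

```python
import json
import os
import tempfile
import time


class PeriodicCheckpointer:
    """Hypothetical helper: save state at most once per interval, reload on start."""

    def __init__(self, log_dir, interval_seconds=600, clock=time.time):
        self.path = os.path.join(log_dir, "checkpoint.json")  # illustrative file name
        self.interval_seconds = interval_seconds
        self.clock = clock
        self.last_save = clock()
        os.makedirs(log_dir, exist_ok=True)

    def load(self, default):
        """Return the saved state if the log dir has one, else the default."""
        if os.path.exists(self.path):
            with open(self.path) as f:
                return json.load(f)
        return default

    def maybe_save(self, state):
        """Save only if the interval has elapsed; return True when a save happened."""
        if self.clock() - self.last_save < self.interval_seconds:
            return False
        tmp_path = self.path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, self.path)  # rename so a crash never leaves a half-written file
        self.last_save = self.clock()
        return True


def train(log_dir, num_updates, clock=time.time):
    """Toy loop: resume from the last checkpoint and continue counting updates."""
    ckpt = PeriodicCheckpointer(log_dir, interval_seconds=600, clock=clock)
    state = ckpt.load(default={"update": 0})
    for _ in range(num_updates):
        state["update"] += 1
        ckpt.maybe_save(state)
    return state


if __name__ == "__main__":
    print(train(tempfile.mkdtemp(), num_updates=3))
```

With a timer-based scheme like this, progress made after the most recent save is lost when a run is interrupted, so a resumed run restarts from the last saved point rather than from the exact moment it stopped.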

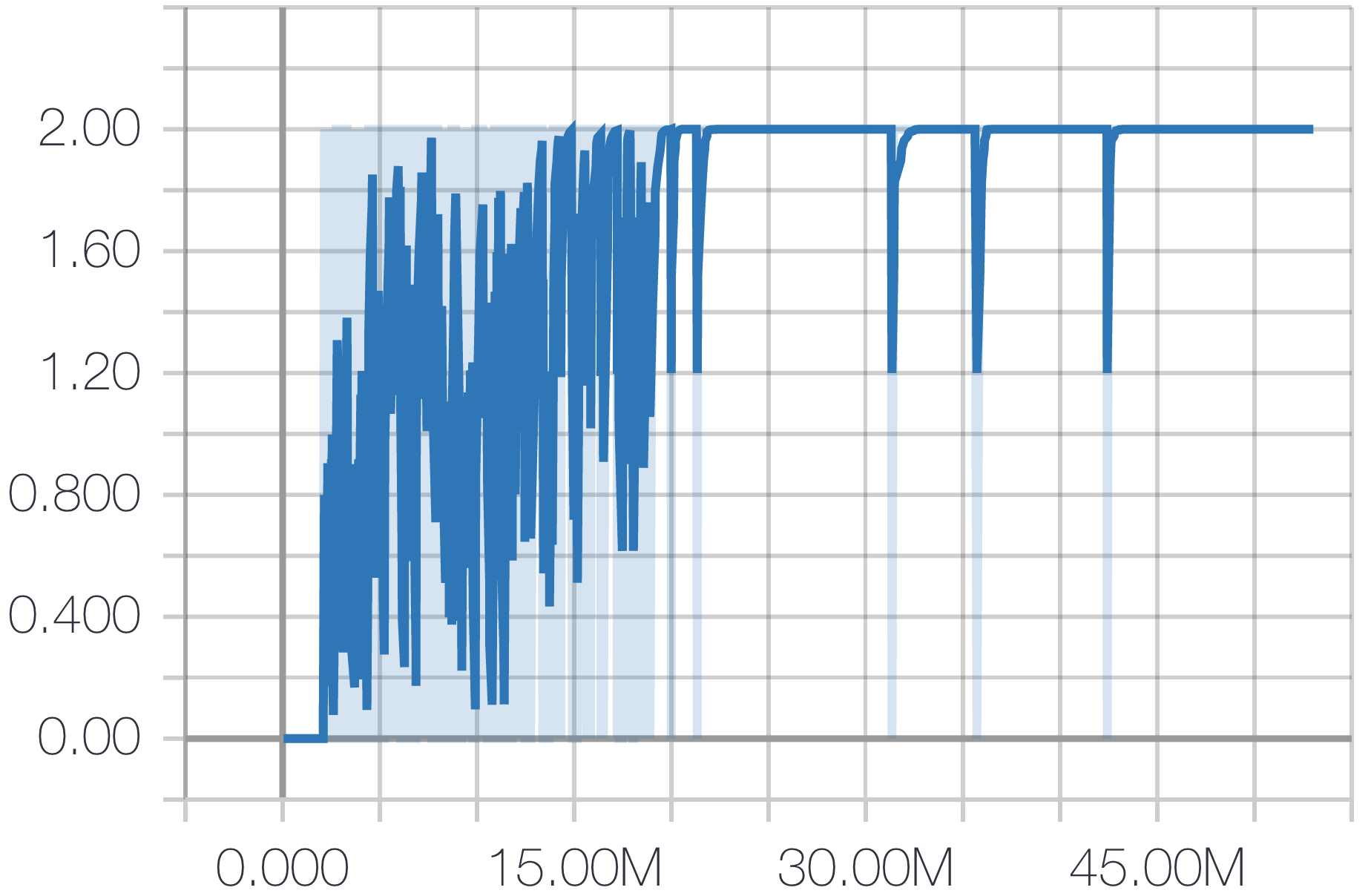

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 1 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "MineCraft" --num-hierarchy 4 --num-subpolicy 8 8 8 --hierarchy-interval 4 4 4 --num-steps 128 128 128 128 --reward-bounty 1 --distance l1 --transition-model-mini-batch-size 64 64 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 10 --aux r_0

We also save the world built by the agent. To replay it, set

window.loadWorld('<path-to-the-save-file>.sav')

to the path of the .sav file and run

python replay.py

to get a third-person view of the built world.

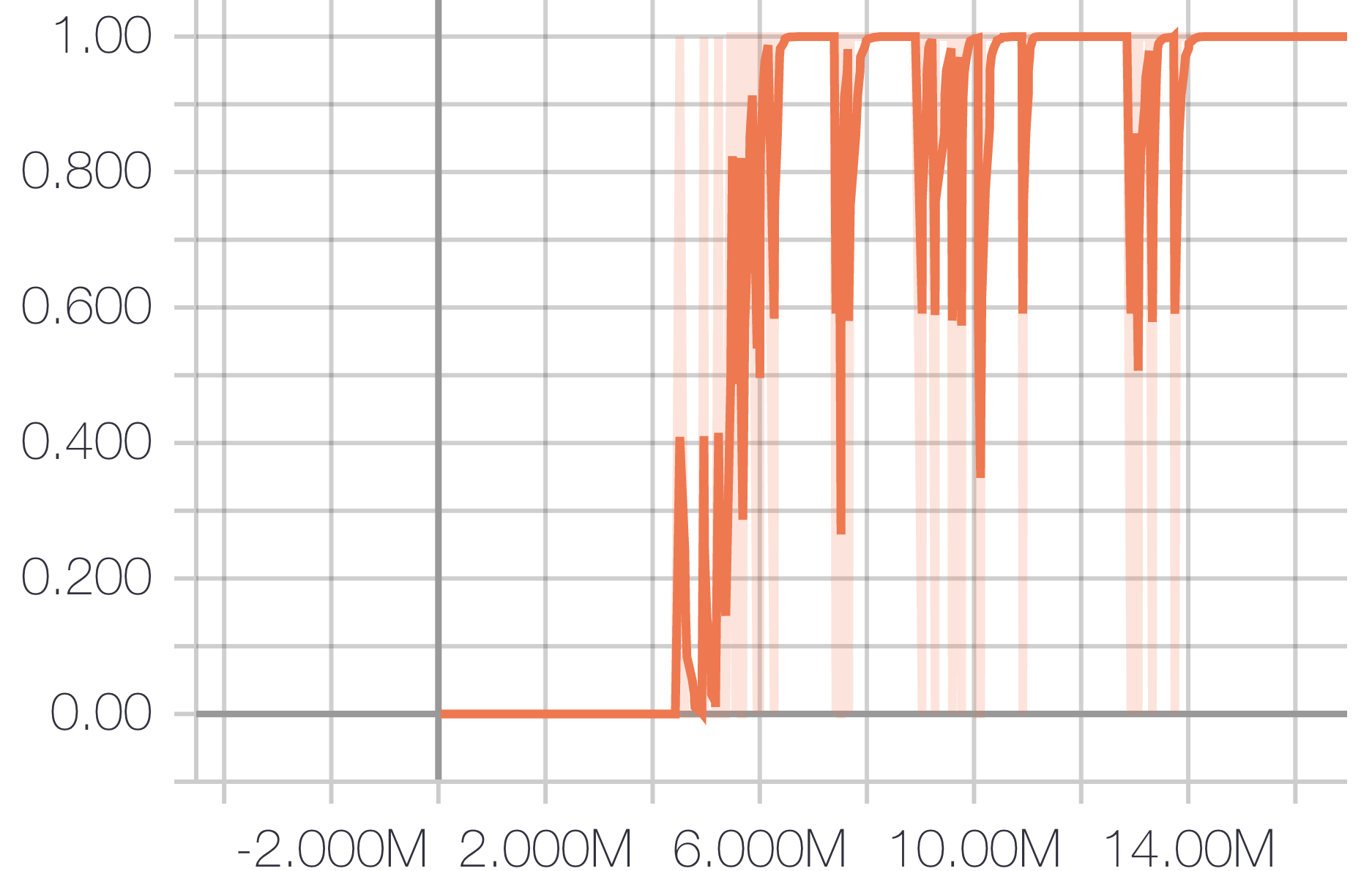

The agent can reach the key when the skull is not present; a video is here.

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "MontezumaRevengeNoFrameskip-v4" --num-hierarchy 3 --num-subpolicy 5 5 --hierarchy-interval 8 4 --num-steps 128 128 128 --reward-bounty 1 --distance mass_center --transition-model-mini-batch-size 64 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

However, because the skull significantly affects the mass center of the state, our method fails when the skull is moving. A necessary fix is to generate a mask that locates the part of the state controlled by the agent. Fortunately, a recent paper under review at ICLR 2019 addresses this problem. Their code has not been released yet, so we implemented their idea to generate the mask.

Their idea works well on simple domains such as GridWorld. Run the following command to see the learned masks on GridWorld:

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "GridWorld" --num-hierarchy 2 --num-subpolicy 5 --hierarchy-interval 4 --num-steps 128 128 --reward-bounty 1 --distance mass_center --transition-model-mini-batch-size 64 --inverse-mask --num-grid 7 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

The following video was produced at commit 04baecee316234a7f3fdcd51d1908c971211fdce.

If you cannot reproduce the video above, check out the commit above and run the command again. In the meantime, please open an issue right away, since it likely means we introduced unintentional changes to the code while adding more domains.

However, the mask performs poorly on MontezumaRevenge. We are waiting for the official release of their code to further advance DEHRL on domains with uncontrollable objects.
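To make the mass-center issue concrete, here is a small sketch under stated assumptions. It treats the state as a 2D intensity image and takes the mass center to be the intensity-weighted centroid; this is one plausible reading of --distance mass_center, not necessarily how the repository computes it. The mass_center function, the toy frames, and agent_mask are all hypothetical.

```python
def mass_center(image, mask=None):
    """Intensity-weighted centroid (row, col) of a 2D image given as nested lists.

    If a mask is given, each pixel intensity is multiplied by the mask value first.
    """
    total = row_sum = col_sum = 0.0
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            weight = value * (mask[r][c] if mask is not None else 1.0)
            total += weight
            row_sum += weight * r
            col_sum += weight * c
    if total == 0.0:
        return None  # an empty image has no defined mass center
    return (row_sum / total, col_sum / total)


def blank(height, width):
    """Create an all-zero image of the given size."""
    return [[0.0] * width for _ in range(height)]


# Two frames of a toy 5x5 state: the agent stays at (2, 2), the other object moves.
frame_a = blank(5, 5)
frame_b = blank(5, 5)
frame_a[2][2] = frame_b[2][2] = 1.0  # agent-controlled pixel
frame_a[4][0] = 1.0                  # uncontrollable object, first position
frame_b[4][4] = 1.0                  # uncontrollable object, moved

# Hypothetical mask that keeps only the agent-controlled region.
agent_mask = blank(5, 5)
agent_mask[2][2] = 1.0

print(mass_center(frame_a), mass_center(frame_b))
print(mass_center(frame_a, agent_mask), mass_center(frame_b, agent_mask))
```

Without the mask, the mass center moves between the two frames even though the agent did nothing; with the mask, it stays fixed, which is the property a learned mask is meant to provide.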

Number of subpolicies: 4

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 3e-4 --clip-param 0.1 --actor-critic-epoch 10 --entropy-coef 0 --value-loss-coef 1 --gamma 0.99 --tau 0.95 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "Explore2DContinuous" --episode-length-limit 32 --num-hierarchy 2 --num-subpolicy 4 --hierarchy-interval 4 --num-steps 128 128 --reward-bounty 1 --distance l2 --transition-model-mini-batch-size 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0 --log-behavior

Number of subpolicies: 8

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 3e-4 --clip-param 0.1 --actor-critic-epoch 10 --entropy-coef 0 --value-loss-coef 1 --gamma 0.99 --tau 0.95 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp code_release --obs-type 'image' --env-name "Explore2DContinuous" --episode-length-limit 32 --num-hierarchy 2 --num-subpolicy 8 --hierarchy-interval 4 --num-steps 128 128 --reward-bounty 1 --distance l2 --transition-model-mini-batch-size 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0 --log-behavior

| Number of subpolicies: 4 | Number of subpolicies: 8 |
|---|---|

where the dot is the current position and the crosses are the resulting states of the different subpolicies.
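One way to read these plots: each cross is the state a subpolicy reaches from the current position, and a diversity-driven bounty favours a subpolicy whose resulting state is far from those of the others. The sketch below is a simplified illustration of that idea using the L2 distance (as in --distance l2). The function names and the choice of averaging over the other subpolicies are assumptions made for this example, not the code in this repository.

```python
import math


def l2_distance(a, b):
    """Euclidean distance between two states given as coordinate tuples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def diversity_bounty(resulting_states, selected):
    """Mean L2 distance from the selected subpolicy's resulting state to the others."""
    others = [s for i, s in enumerate(resulting_states) if i != selected]
    if not others:
        return 0.0
    target = resulting_states[selected]
    return sum(l2_distance(target, s) for s in others) / len(others)


# Resulting (x, y) states of four hypothetical subpolicies from the same start.
states = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, 0.0)]
bounties = [diversity_bounty(states, i) for i in range(len(states))]

for index, bounty in enumerate(bounties):
    print(index, round(bounty, 3))
```

In this toy case the subpolicy that ends at the origin sits in the middle of the others and receives the smallest bounty, while subpolicies that spread out receive larger ones.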

PyBullet is a free alternative to MuJoCo, with even better and more complex continuous control tasks.

Install it with pip install -U pybullet.


Number of subpolicies: 4

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 3e-4 --clip-param 0.1 --actor-critic-epoch 10 --entropy-coef 0 --value-loss-coef 1 --gamma 0.99 --tau 0.95 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 10 --exp AntBulletEnv-0 --obs-type 'image' --env-name "AntBulletEnv-v1" --num-hierarchy 2 --num-subpolicy 4 --hierarchy-interval 128 --extend-drive 1 --num-steps 2048 2048 --reward-bounty 1 --distance l2 --transition-model-mini-batch-size 64 --train-mode together --unmask-value-function --summarize-behavior-interval 15 --aux r_3 --summarize-rendered-behavior --summarize-state-prediction

| Rendered Observations | Resulted States of Different Sub-policies |
|---|---|

where the dot is the current position and the crosses are the resulting states of the different subpolicies.

Note that for the PyBullet domains above:

- We manually extract position information from the observation and use it for the diversity-driven objective. Extracting useful information from observations is left as future work; here we mainly focus on verifying our diversity-driven solution.

- We remove the reward returned by the original environment so that the experiment lets DEHRL discover diverse subpolicies in an unsupervised manner. Extrinsic reward can of course be included, but the goal set by the current environment is too simple to show the effectiveness of diverse subpolicies.

- Thus, this part of the experiments only shows that our method scales well to continuous control tasks; further investigation is promising future work.
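The first two points can be sketched as an environment wrapper. This is only an illustration under assumptions: ToyContinuousEnv and PositionOnlyNoReward are hypothetical classes, and the position_indices layout (position stored in the first two observation entries) is made up for the example rather than taken from PyBullet or from this repository.

```python
class ToyContinuousEnv:
    """Hypothetical Gym-style environment; the first two observation entries are (x, y)."""

    def reset(self):
        self.state = [0.0, 0.0, 0.5]  # x, y, plus one unrelated feature
        return list(self.state)

    def step(self, action):
        self.state[0] += action[0]
        self.state[1] += action[1]
        reward = self.state[0]  # extrinsic reward: progress along x
        return list(self.state), reward, False, {}


class PositionOnlyNoReward:
    """Wrap an env: drop the extrinsic reward and expose the extracted position."""

    def __init__(self, env, position_indices=(0, 1)):
        self.env = env
        self.position_indices = position_indices  # assumed layout of the observation

    def _position(self, obs):
        return tuple(obs[i] for i in self.position_indices)

    def reset(self):
        obs = self.env.reset()
        self.position = self._position(obs)
        return obs

    def step(self, action):
        obs, _extrinsic_reward, done, info = self.env.step(action)
        self.position = self._position(obs)
        info = dict(info, position=self.position)
        # The extrinsic reward is discarded and replaced by zero.
        return obs, 0.0, done, info


if __name__ == "__main__":
    env = PositionOnlyNoReward(ToyContinuousEnv())
    env.reset()
    print(env.step((1.0, -2.0)))
```

The extracted position is what a distance such as l2 would then be computed on, while the zeroed reward leaves any learning signal to the intrinsic bounty.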

Other available environments in PyBullet can be found here.

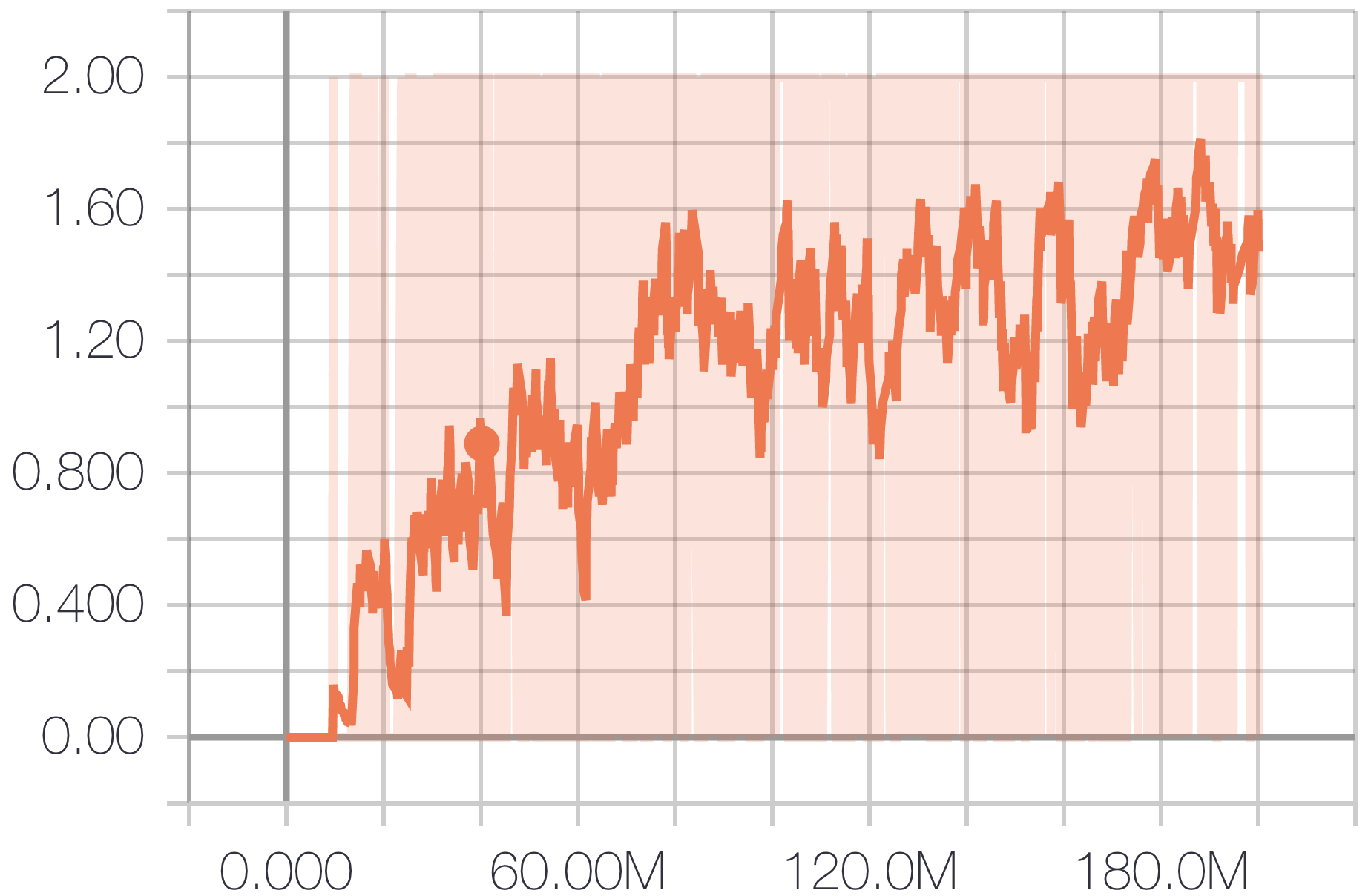

The following results on Explore2D were produced at commit 9c2c8bbe5a8e18df21fbda1183e3e2a229a340d1.

Random policy baseline

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp 2d_explore --obs-type 'image' --env-name "Explore2D" --episode-length-limit 1024 --num-hierarchy 2 --num-subpolicy 5 --hierarchy-interval 4 --num-steps 128 128 --reward-bounty 0 --distance l2 --transition-model-mini-batch-size 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

Number of levels: 2

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp 2d_explore --obs-type 'image' --env-name "Explore2D" --episode-length-limit 1024 --num-hierarchy 2 --num-subpolicy 5 --hierarchy-interval 4 --num-steps 128 128 --reward-bounty 1 --distance l2 --transition-model-mini-batch-size 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

Number of levels: 3

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp 2d_explore --obs-type 'image' --env-name "Explore2D" --episode-length-limit 1024 --num-hierarchy 3 --num-subpolicy 5 5 --hierarchy-interval 4 4 --num-steps 128 128 128 --reward-bounty 1 --distance l2 --transition-model-mini-batch-size 64 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

Number of levels: 4

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp 2d_explore --obs-type 'image' --env-name "Explore2D" --episode-length-limit 1024 --num-hierarchy 4 --num-subpolicy 5 5 5 --hierarchy-interval 4 4 4 --num-steps 128 128 128 128 --reward-bounty 1 --distance l2 --transition-model-mini-batch-size 64 64 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

To visualize Explore2D, replace main.py with vis_explore2d.py in the corresponding command.

| Level 1 | Level 2 | Level 3 | Level 4 |
|---|---|---|---|
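The Explore2D commands above follow a regular pattern as levels are added: for --num-hierarchy H, --num-steps takes H values, while --num-subpolicy, --hierarchy-interval, and --transition-model-mini-batch-size each take H - 1 values. The helper below is a hypothetical convenience (not part of this repository) that builds those argument lists, using the values from the Explore2D commands as defaults and the same value at every level.

```python
def hierarchy_args(num_hierarchy, num_subpolicy=5, interval=4, num_steps=128, tm_batch=64):
    """Build the per-level CLI arguments for a given number of hierarchy levels."""
    if num_hierarchy < 2:
        raise ValueError("the commands in this README use at least 2 levels")
    upper = num_hierarchy - 1  # these flags take H - 1 values in the commands above
    args = ["--num-hierarchy", str(num_hierarchy)]
    args += ["--num-subpolicy"] + [str(num_subpolicy)] * upper
    args += ["--hierarchy-interval"] + [str(interval)] * upper
    args += ["--num-steps"] + [str(num_steps)] * num_hierarchy
    args += ["--transition-model-mini-batch-size"] + [str(tm_batch)] * upper
    return args


print(" ".join(hierarchy_args(3)))
```

Some commands in this README use different values per level (for example, --hierarchy-interval 4 12 for OverCooked), so a uniform helper like this one only covers the simplest case.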

Number of levels: 2

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp 2d_explore --obs-type 'image' --env-name "Explore2D" --episode-length-limit 1024 --num-hierarchy 2 --num-subpolicy 9 --hierarchy-interval 4 --num-steps 128 128 --reward-bounty 1 --distance l2 --transition-model-mini-batch-size 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

Number of levels: 3

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp 2d_explore --obs-type 'image' --env-name "Explore2D" --episode-length-limit 1024 --num-hierarchy 3 --num-subpolicy 9 9 --hierarchy-interval 4 4 --num-steps 128 128 128 --reward-bounty 1 --distance l2 --transition-model-mini-batch-size 64 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

Number of levels: 4

CUDA_VISIBLE_DEVICES=0 python main.py --algo ppo --use-gae --lr 2.5e-4 --clip-param 0.1 --value-loss-coef 1 --num-processes 8 --actor-critic-mini-batch-size 256 --actor-critic-epoch 4 --exp 2d_explore --obs-type 'image' --env-name "Explore2D" --episode-length-limit 1024 --num-hierarchy 4 --num-subpolicy 9 9 9 --hierarchy-interval 4 4 4 --num-steps 128 128 128 128 --reward-bounty 1 --distance l2 --transition-model-mini-batch-size 64 64 64 --train-mode together --clip-reward-bounty --clip-reward-bounty-active-function linear --summarize-behavior-interval 5 --aux r_0

| Level 1 | Level 2 | Level 3 | Level 4 |
|---|---|---|---|

The code logs multiple curves to help analyze the training process. Run:

tensorboard --logdir ../results/code_release/ --port 6009

and visit http://localhost:6009 for visualization with TensorBoard.

In addition, if you add the argument --log-behavior, multiple videos are saved in the log dir.

However, this costs extra memory, so use it with care.

Please feel free to contact us or open an issue (or a pull request if you have already solved it, cheers!). We will keep track of the issues and keep updating the code.

Hope you have fun with our work!